What is faster: dividing x modulo 2^n or bit-shifting x by n?

Solved! 01-18-2018 09:59 AM

When handling integers, I prefer the modulo operation over the divide operation. That way I avoid the memory operation of converting to DBL and back to INT.

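Now my question is: Does the compiler recognize

1) that only one output of modulo is used (divide, i.e. the quotient, or floor, i.e. the remainder), and

2) if only one is used and the divisor is 2^n, that

2a) an "x AND (2^n - 1)" operation is enough for floor (the remainder)

2b) an "x >> n" operation (a right shift by n) is enough for divide (the quotient)?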

I have to process around 5 MB/s, so it probably makes a difference.
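For reference, the two shortcuts in question hold for non-negative integers when the divisor is a power of two: the quotient equals a right shift by n, and the remainder equals a bitwise AND with 2^n - 1. The following is a minimal Python sketch that checks this against `divmod`. The function names are hypothetical, and it says nothing about whether the LabVIEW compiler actually performs this substitution.

```python
def quotient_pow2(x: int, n: int) -> int:
    """Quotient of x / 2**n for a non-negative integer x, via a right shift."""
    return x >> n


def remainder_pow2(x: int, n: int) -> int:
    """Remainder of x / 2**n for a non-negative integer x, via a bit mask."""
    return x & ((1 << n) - 1)


def check_identities(n: int, limit: int = 1 << 16) -> bool:
    """Compare both shortcuts against divmod for every x in [0, limit)."""
    divisor = 1 << n
    return all(
        divmod(x, divisor) == (quotient_pow2(x, n), remainder_pow2(x, n))
        for x in range(limit)
    )


if __name__ == "__main__":
    # Checks all U16 values against a divisor of 2**8 = 256.
    print(check_identities(8))
```

For signed values the picture is less simple, because the result depends on how the language rounds the quotient for negative inputs, so the sketch deliberately restricts itself to the unsigned case.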

- Tags:

- Base Package

- compiler

01-18-2018 10:37 AM

My quick, unscientific test showed Quotient & Remainder to be 10 times faster than Logical Shift. I didn't expect that.

01-18-2018 11:03 AM

I also ran a test, and the outcome was that the bitshift is about 3% faster.

Floor vs AND resulted in floor being about 2% faster.

100,000,000 repetitions per operation, taking the mean value over all time measurements.

01-18-2018 11:20 AM

Just a fair warning: it can be pretty difficult to get accurate and predictive benchmarking results that you can trust to within single-digit percentages. And it's probably rarely worth the effort.

If your pretty quick test shows you a difference of only 2 or 3% *AND* it was set up at least reasonably well, I wouldn't react to the results. To me, that's simply a tie considering all the sources of uncertainty.

There are some people around here (ahem, altenbach, and others too...) who are very knowledgeable about such things. I'll bet if you post your benchmarking code, you'll get insights from some of them.

-Kevin P

01-18-2018 11:22 AM

Hmmm.....

Since we seem to be going in two different directions, may I humbly suggest reviewing the presentation on benchmarking by Christian Altenbach (AKA speed demon), which you can find here?

Benchmarking is straightforward once you are aware of the issues that could affect the benchmarks.

Ben

01-18-2018 01:15 PM

@LabViewTinkerer wrote:

100,000,000 repetitions per operation, taking the mean value over all time measurements.

I'm no benchmarker, but isn't the minimum value more telling? I've understood that anything larger than the minimum was affected by OS shenanigans.
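The difference between the two statistics can be sketched in a few lines of Python using the standard `timeit` module, which repeats a measurement several times so that both the minimum and the mean are available. The helper name `benchmark` and its default parameters are assumptions made for illustration, and the absolute numbers will not carry over to LabVIEW.

```python
import timeit


def benchmark(stmt, setup="pass", repeat=5, number=100_000):
    """Return (minimum, mean) seconds per execution of `stmt`.

    The statement runs `number` times per measurement, and the whole
    measurement is repeated `repeat` times.
    """
    runs = timeit.repeat(stmt, setup=setup, repeat=repeat, number=number)
    per_call = [t / number for t in runs]
    return min(per_call), sum(per_call) / len(per_call)


if __name__ == "__main__":
    # The operand is set up as a variable, not written as a literal in stmt.
    for stmt in ("x % 256", "x & 255"):
        fastest, mean = benchmark(stmt, setup="x = 54321")
        print(f"{stmt}: min {fastest:.3e} s, mean {mean:.3e} s")
```

The mean absorbs every interruption by the operating system during the run, while the minimum approximates the run that was disturbed the least.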

01-18-2018 02:25 PM - edited 01-18-2018 02:28 PM

If you could disable caching, prefetching, hyper-threading and whatever else, then it might be. With these things enabled, you might be measuring the cache access time or the locality of the generated code rather than getting a meaningful indication of which code is really more performant.

And with LabVIEW and other modern optimizing compilers, you have to be very careful that you really measure the actual operation and not some other artifact. LabVIEW's constant folding, for instance, can make certain operations look instantaneous: when the compiler determines that an operation works only on constant data, it can optimize the operation away by storing the processed result as a constant in the code, rather than storing the original constant and performing the operation on it each time. That way, pretty complicated operations can seem to execute in 0 µs.
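LabVIEW's generated code cannot be shown as text here, but the same effect can be observed in other compilers. As an illustration only, and assuming the CPython interpreter, the sketch below inspects the constants stored in two compiled functions: one that applies modulo to two literals and one that applies it to a runtime argument. The function names are hypothetical.

```python
def folded():
    # Both operands are literals, so the compiler is free to compute the
    # result once at compile time and store only the result.
    return 12345 % 256


def not_folded(x):
    # The operand is only known at run time, so the modulo must be executed.
    return x % 256


def constants_of(func):
    """Return the constants stored in the compiled code of `func`."""
    return func.__code__.co_consts


if __name__ == "__main__":
    print(constants_of(folded))
    print(constants_of(not_folded))
```

If the precomputed result appears among the constants of `folded`, a benchmark of that function times the return of a stored value, not a modulo operation. Feeding the operation with data that is only known at run time avoids this.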

01-19-2018 04:17 AM

@jcarmody wrote:

@LabViewTinkerer wrote:

100,000,000 repetitions per operation, taking the mean value over all time measurements.

I'm no benchmarker, but isn't the minimum value more telling? I've understood that anything larger than the minimum was affected by OS shenanigans.

Actually, you are right.

01-19-2018 04:20 AM

rolfk wrote:

And with LabVIEW and other modern code optimizing compilers you have to be very careful that you really measure the actual operation and not some other artifacts.

That's one of the main reasons why I asked whether somebody actually knows, rather than only guesses.

01-19-2018 04:41 AM

Hi Tinkerer,

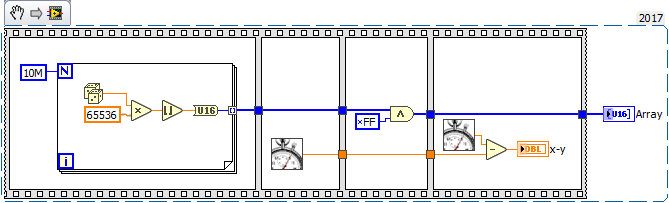

For me, a quick test of "U16 mod 256" on an array of 10M random U16 values resulted in

- ~20 ms using Q&R

- ~4 ms using AND 0x00FF

(For this special case, the Split Number function needed ~18 ms.)
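The structure of such a comparison can be sketched in text form as follows. This is a rough Python analogue, not the LabVIEW test that produced the numbers above: the function names, the array size, and the repeat count are assumptions, and interpreter overhead dominates in Python, so the ratio between the two variants will differ from the LabVIEW result.

```python
import random
import time


def mod_256(data):
    """Remainder of each value modulo 256, using the modulo operator."""
    return [x % 256 for x in data]


def and_ff(data):
    """Low byte of each value, using a bitwise AND with 0x00FF."""
    return [x & 0x00FF for x in data]


def compare(size=100_000, repeats=5, seed=0):
    """Time both variants on the same random U16 data; keep the best run."""
    rng = random.Random(seed)
    # Random input, so that nothing can be precomputed from constants.
    data = [rng.randrange(1 << 16) for _ in range(size)]
    timings = {}
    for name, func in (("mod", mod_256), ("and", and_ff)):
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            func(data)
            best = min(best, time.perf_counter() - start)
        timings[name] = best
    # Both variants must agree, otherwise the comparison is meaningless.
    assert mod_256(data) == and_ff(data)
    return timings


if __name__ == "__main__":
    for name, seconds in compare().items():
        print(f"{name}: {seconds * 1e3:.2f} ms")
```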

You can run such simple tests on your own, too: