02-15-2019 04:42 PM

Hi Guys,

I am working on LabVIEW code to read data from an ATmega32 microcontroller over USB, but I am facing a problem: whenever the signal crosses from positive to negative voltage or vice versa, the data point is lost and a positive or negative spike appears instead. I have checked my circuit on the CRO and no such peak exists, so I think the problem lies in the way I am reading the data. The (digital) hex data that is read is converted to analog as per the "result representation" section of the ATmega manual. Please find my VI, the read waveform, and the result representation attached. Please let me know if anyone finds my mistake.

Thanks a lot!!

Have a great weekend!
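For readers following along without the attachments, here is a minimal Python sketch of the kind of conversion described above. It assumes the ADC runs in differential mode with a right-adjusted 10-bit two's-complement result (0x200 = -512 up to 0x1FF = +511), which is how the ATmega32 datasheet describes differential channels. The function name, the 5.0 V reference, and the gain of 1 are illustrative assumptions, not values taken from the attached VI.

```python
def adc_code_to_volts(raw, vref=5.0, gain=1):
    """Convert a 10-bit two's-complement ADC code to volts.

    Assumes differential mode: code = (Vpos - Vneg) * gain * 512 / vref.
    vref and gain are placeholders; use the values from your own setup.
    """
    raw &= 0x3FF              # keep only the 10 result bits
    if raw & 0x200:           # bit 9 set means the value is negative
        raw -= 0x400          # sign-extend from 10 bits
    return raw * vref / (gain * 512)


# Codes on either side of the zero crossing
for code in (0x001, 0x000, 0x3FF, 0x200):
    print(f"0x{code:03X} -> {adc_code_to_volts(code):+.4f} V")
```

If the sign bit is not handled, 0x3FF (one step below zero) would be read as a large positive value, which would look like a spike right at the crossing. Whether that is what happens in the VI is a guess until the raw data is inspected.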

02-16-2019 12:34 PM

When you have a problem reading data from an Instrument, one useful strategy is to simply collect and "look at" the data coming in from the Instrument. Sometimes the problem is as simple as a mixup in reading, a mixup in interpretation (you get 12-bit numbers stored in offset binary and you need to convert them to 16-bit "ordinary" binary), or something similar.
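To make the offset-binary example concrete, here is a small Python sketch (not LabVIEW, and not tied to any particular instrument). It assumes the usual offset-binary convention, where the lowest code is the most negative value and mid-scale is zero. The function name is hypothetical.

```python
def offset_binary_to_signed(code, bits=12):
    """Convert an offset-binary code to an ordinary signed integer.

    In offset binary, 0 is the most negative value and the mid-scale
    code (1 << (bits - 1)) represents zero.
    """
    mask = (1 << bits) - 1
    return (code & mask) - (1 << (bits - 1))


# Print raw codes next to their interpretation to "look at" the data
for code in (0x000, 0x7FF, 0x800, 0x801, 0xFFF):
    print(f"raw 0x{code:03X} -> {offset_binary_to_signed(code):+d}")
```

Printing the raw code alongside the converted value makes a wrong interpretation easy to spot, because the error usually shows up at the zero crossing or at the extremes.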

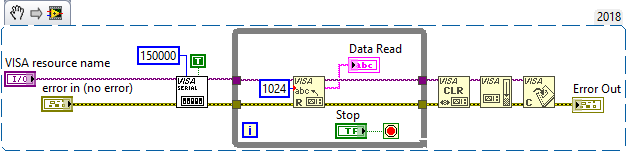

A very common error when reading Serial Data is to use "Bytes at Port" to control the VISA reads, which can result in strange happenings. I notice you have set the "Use Termination Character" input, which means you expect your Instrument to send you a complete string. Well, that's what you want to do! Here's my re-write of your code (with details omitted):

Some obvious points (which are not entirely trivial):

If you run this code with your Instrument, you can bring Data Read out through an Indexing Tunnel and get an Array of Strings showing just what your Instrument is sending you. If you are having parsing difficulties, sending us a sample of these data would help us suggest what might be going astray in your parse.
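For anyone who wants to try the same idea outside LabVIEW, here is a rough Python sketch of "read until the termination character and collect every message for inspection". The `port` argument is an assumption: any object with a `read(n)` method will do, and an `io.BytesIO` stands in for the real serial port here. The function name and the newline terminator are also illustrative.

```python
import io


def read_messages(port, terminator=b"\n"):
    """Collect complete, terminator-delimited messages from a byte stream.

    Reads one byte at a time until the stream is exhausted. An
    incomplete trailing message (no terminator seen) is dropped.
    """
    messages = []
    current = bytearray()
    while True:
        byte = port.read(1)
        if not byte:                      # nothing more to read
            break
        current += byte
        if current.endswith(terminator):  # a full message has arrived
            messages.append(bytes(current[:-len(terminator)]))
            current.clear()
    return messages


# Simulated instrument output: two full messages and one partial one
fake_port = io.BytesIO(b"01FF\n0200\n03")
print(read_messages(fake_port))
```

The point of the sketch is that each read ends on a message boundary decided by the sender, not on however many bytes happened to be in the buffer at that moment.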

Bob Schor

02-18-2019 04:17 PM

Hi Bob,

Thanks for getting back to me.

The problem was with interpreting the received data. I removed the "Bytes at Port" control. As you suggested, I tried reading the whole data set at once, but that somehow caused more problems: it was unable to read the negative values when the "VISA Read" byte count was 1000. So I reduced the byte count to 2 and was able to read exactly the expected data. The only downside is that it takes more time, but that is not a huge concern in my case.

- I cleared the error and visa lines

- I disabled the termination character as I was not using it.

- The timeout is high because my data is transferred as a whole after the experiment is complete.

- I have tried using the Visa buffer size considering max data points I want to read instead of max available size.

- As I mentioned above, Visa read of more characters was causing more problems

- I also removed the timing module

Thanks a lot for your help!
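As a cross-check on the "2 bytes at a time" approach, here is a hedged Python sketch of how one large block could be split into 2-byte samples after the read. It assumes each sample is sent low byte first and holds a 10-bit two's-complement value. Both are guesses about the firmware, not facts from this thread, and the function name is hypothetical.

```python
import struct


def parse_samples(block, bits=10):
    """Split a block of bytes into signed samples.

    Assumes 2 bytes per sample, low byte first (an assumption about
    the sender), with the value stored in the low `bits` bits as
    two's complement.
    """
    if len(block) % 2:
        raise ValueError("odd number of bytes: a sample is split")
    words = struct.unpack(f"<{len(block) // 2}H", block)
    sign_bit = 1 << (bits - 1)
    mask = (1 << bits) - 1
    # (x ^ sign_bit) - sign_bit sign-extends x from `bits` bits
    return [((w & mask) ^ sign_bit) - sign_bit for w in words]


# Three samples in one block: -1, +1, -512
block = bytes([0xFF, 0x03, 0x01, 0x00, 0x00, 0x02])
print(parse_samples(block))
```

If a single large read gives different values from many 2-byte reads of the same data, the difference is probably in how the block is split and interpreted rather than in the read itself.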

02-18-2019 04:28 PM

It seems to me that there are still fundamental things that you aren't understanding about the communications protocols, or you wouldn't have to kludge the thing so badly. All this "buffer this" and "max data that" shouldn't really be necessary if you truly understand how to communicate with the instrument. What you are coding is very fragile; communications protocols (usually) aren't.

The closer you are to truly understanding, the more smoothly and "automatic" things should get.

02-20-2019 05:01 AM

@pratikpade wrote:

> I am working on a labview code to read data from atmega 32 microcontroller through USB.

How EXACTLY are you sending the data out of the microcontroller? I am specifically referring to the message protocol you implemented. I really hope you are not just spitting out the raw 16 bits, because you will run into weird synchronization issues. Once we have the protocol figured out, then we can talk about fixing your code.
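To illustrate the synchronization concern, here is a sketch of one possible framed protocol in Python. Everything about the frame layout (a 0xAA sync byte, a one-byte sample count, big-endian 16-bit samples, a one-byte additive checksum) is a made-up example, not the protocol used in this thread.

```python
import struct

SYNC = 0xAA  # hypothetical start-of-frame marker


def encode_frame(samples):
    """Build SYNC | count | samples (big-endian int16) | checksum."""
    body = bytes([len(samples)]) + struct.pack(f">{len(samples)}h", *samples)
    return bytes([SYNC]) + body + bytes([sum(body) & 0xFF])


def decode_frames(buffer):
    """Extract every valid frame, skipping bytes that do not fit."""
    frames = []
    i = 0
    while i + 1 < len(buffer):
        if buffer[i] != SYNC:
            i += 1                        # not a frame start, resync
            continue
        count = buffer[i + 1]
        end = i + 2 + 2 * count + 1       # index just past the checksum
        if end > len(buffer):
            break                         # frame not fully received yet
        body = buffer[i + 1:end - 1]
        if sum(body) & 0xFF != buffer[end - 1]:
            i += 1                        # bad checksum, keep searching
            continue
        frames.append(list(struct.unpack(f">{count}h", body[1:])))
        i = end
    return frames


# The first byte is lost in transit; the second frame still decodes
stream = (encode_frame([1]) + encode_frame([2]))[1:]
print(decode_frames(stream))
```

With raw 16-bit words and no framing, a single dropped byte would shift every later sample by one byte. With a sync marker and checksum, the receiver loses one frame and then recovers.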