LV2015 / Win / 32 Bit – Call Library, adapt-to-type array pass by pointer parameter: pointer value is invalid (points into kernel space) – works with 64 Bit version

05-19-2016 06:53 AM

TL;DR: I just ran into a problem passing arrays to a DLL function: the pointer value that the DLL function sees is invalid, namely it "points" into kernel space. Notably, this does not happen with all arrays, only with some. Details follow:

I'm interfacing with the Optores OGOP library for GPU-accelerated OCT processing (I also happen to be the developer of that library). For this library I developed LabVIEW binding VIs that work perfectly well in a 64 bit environment. One peculiar property of GPU processing is that GPU code executes asynchronously to CPU code. With the OGOP library the usage scheme is as follows: one allocates the data source and destination buffers, fills the source buffers with the raw data, and queues corresponding source+destination buffer pairs for processing; the GPU then processes them asynchronously. Finally, you synchronize with the processor to wait for the queue to finish. Only after synchronizing with the queue may the CPU touch the buffers again.
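The usage scheme above can be modeled in a few lines of C. This is a minimal single-threaded mock, not the real library: every name here (`mock_processor`, `mock_enqueue`, `mock_synchronize`) is hypothetical and not part of the OGOP API, and the "GPU work" (squaring each sample) is only a placeholder. Deferring the work until synchronization mimics why the buffers must not be touched in between.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical single-threaded model of the queue/synchronize scheme.
 * Not the OGOP API. "Processing" is deferred until mock_synchronize(),
 * mimicking a GPU that runs asynchronously to the CPU. */

#define MOCK_QUEUE_CAPACITY 16

typedef struct {
    const float *src;   /* source buffer, filled by the CPU before queueing */
    float       *dst;   /* destination buffer, written by the "GPU" */
    size_t       count; /* number of elements */
} mock_job;

typedef struct {
    mock_job jobs[MOCK_QUEUE_CAPACITY];
    size_t   pending;
} mock_processor;

/* Queue a source/destination pair. Returns 0 on success, -1 if full.
 * After this call neither buffer may be touched until synchronization. */
int mock_enqueue(mock_processor *p, const float *src, float *dst, size_t count)
{
    if (p->pending == MOCK_QUEUE_CAPACITY)
        return -1;
    p->jobs[p->pending].src = src;
    p->jobs[p->pending].dst = dst;
    p->jobs[p->pending].count = count;
    p->pending++;
    return 0;
}

/* Wait for the queue to drain. In this model the work (squaring each
 * sample, a stand-in for OCT processing) only happens at this point. */
void mock_synchronize(mock_processor *p)
{
    for (size_t j = 0; j < p->pending; j++) {
        const mock_job *job = &p->jobs[j];
        for (size_t i = 0; i < job->count; i++)
            job->dst[i] = job->src[i] * job->src[i];
    }
    p->pending = 0;
}
```

Reading `dst` between `mock_enqueue` and `mock_synchronize` returns stale contents in this model; with a real asynchronous processor it would be a data race.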

To interface this with LabVIEW arrays I implemented a pair of array referencing/dereferencing VIs:

The idea is pretty straightforward: I create a data reference on the array, then within an In Place Element structure I extract the address of the referenced array using a small helper function that returns the pointer value as a uintptr_t, which is then stored in the "array reference" cluster as a U64 alongside the array metadata. This is kind of an "opening bracket", and there is of course a "closing bracket" counterpart, which is of no interest here, though.

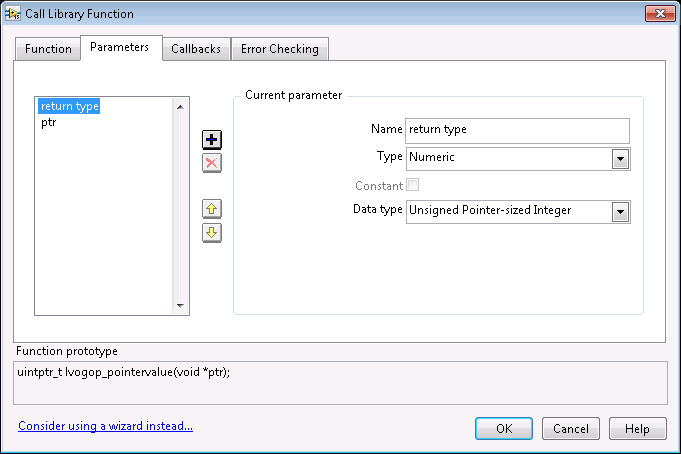

The Call Library Node is configured as follows:

And the source code of this particular function is pretty straightforward:

```c
#include <stdint.h> /* uintptr_t */

/* Helper function that returns the uintptr_t value of a pointer.
 * Required to turn a LabVIEW array into something generic that can be
 * passed around without invoking conversion copies. */
uintptr_t lvogop_pointervalue(void const *ptr) { return (uintptr_t)ptr; }
```

There is a "small-ish" (well, as small as it can be) test program that works just fine in 64 bit. But when running in a 32 bit environment, for some but not all of the arrays used in the program, the returned pointer values are greater than 0xF0000000, which essentially means they would point into kernel address space.
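A sketch of how the helper's result might be carried around, assuming a cluster that stores the address as a U64 next to the array metadata as described above. The `array_reference` struct, its field names and the two wrapper functions are hypothetical; only `lvogop_pointervalue` comes from the post. Storing the address in a fixed 64 bit field lets one typedef serve both bitnesses, and the C side converts back through `uintptr_t`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The helper from the post: expose a pointer as an integer. */
uintptr_t lvogop_pointervalue(void const *ptr) { return (uintptr_t)ptr; }

/* Hypothetical C mirror of the "array reference" cluster: the address is
 * always carried as a U64 so the same typedef works in 32 and 64 bit.
 * Field names and layout are illustrative only. */
typedef struct {
    uint64_t address;       /* pointer value, zero-extended to 64 bits */
    uint64_t element_count;
    uint32_t element_size;  /* bytes per element */
} array_reference;

array_reference make_array_reference(void const *data, size_t count, size_t elem_size)
{
    array_reference ref;
    ref.address = (uint64_t)lvogop_pointervalue(data);
    ref.element_count = (uint64_t)count;
    ref.element_size = (uint32_t)elem_size;
    return ref;
}

/* Recover the pointer on the C side. The value must fit into uintptr_t;
 * in a 32 bit process the upper 32 bits of the U64 are expected to be zero. */
void *array_reference_data(array_reference ref)
{
    assert(ref.address <= (uint64_t)UINTPTR_MAX);
    return (void *)(uintptr_t)ref.address;
}
```

Converting a pointer to `uintptr_t` and back yields a pointer that compares equal to the original, so the round trip itself does not alter the address.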

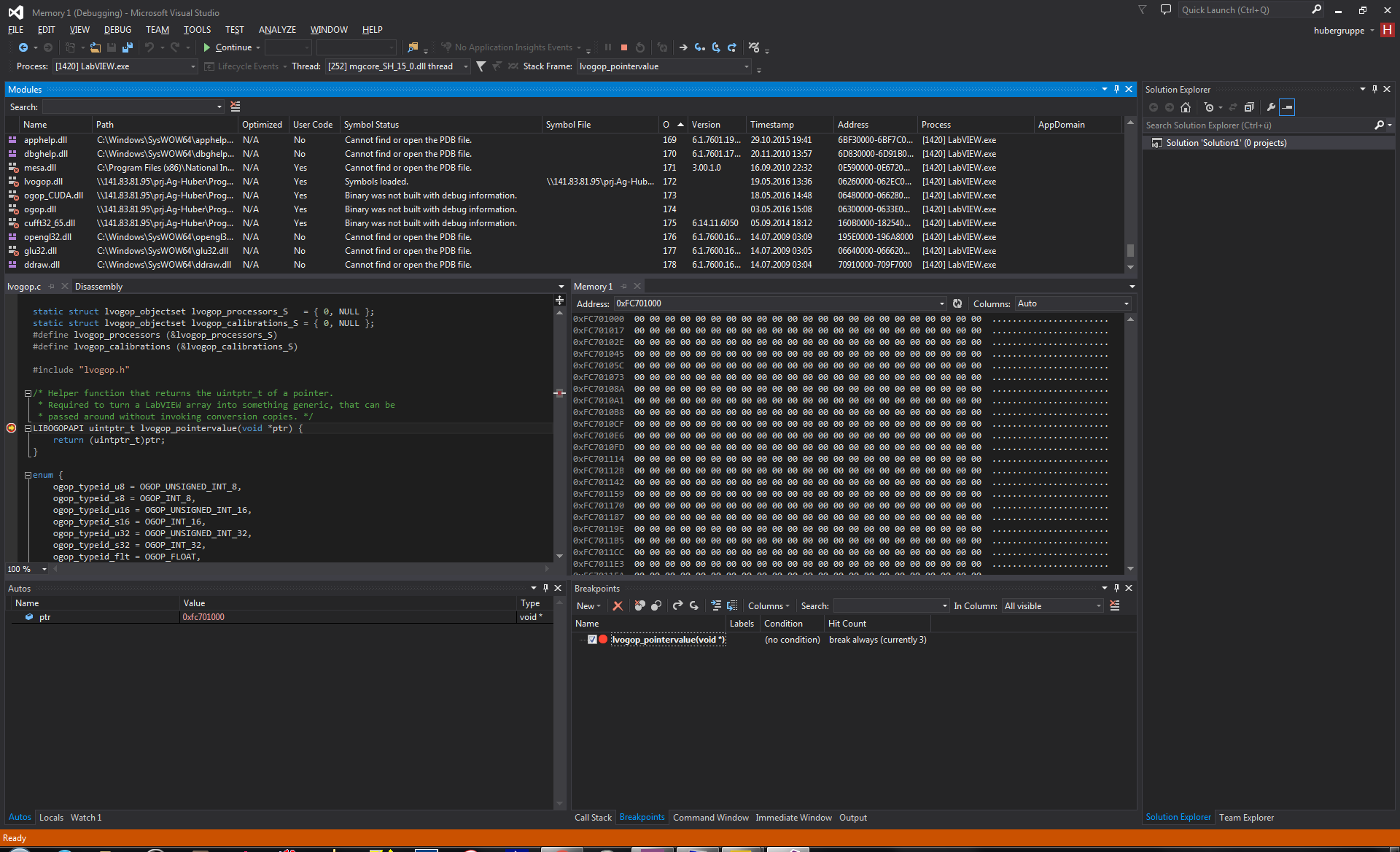

At first I thought that I might have accidentally truncated or overflowed a value by not keeping all parameter types in the Call Library Node and the C function definition consistent, but putting a breakpoint in the function and inspecting the values with a debugger shows the same nonsensical values:

I'd be tempted to say "Go home, LabVIEW, you're drunk!", or rather, that there is a serious bug somewhere in LabVIEW.
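To illustrate the truncation concern, here is a small sketch (the function names are hypothetical). Narrowing a 64 bit value to 32 bits drops the upper half, whereas zero-extending a 32 bit address into a U64 and narrowing it again is lossless. So in a 32 bit process, storing the pointer in a U64 cluster element cannot by itself turn a valid address into one above 0xF0000000.

```c
#include <assert.h>
#include <stdint.h>

/* Illustrative only: effect of passing an address through mismatched
 * integer widths, e.g. between a Call Library Node and a C prototype. */

/* A 64 bit value squeezed through a 32 bit integer loses its upper half. */
uint32_t narrow_to_u32(uint64_t v) { return (uint32_t)v; }

/* A 32 bit value widened into a U64 is zero-extended; nothing is lost. */
uint64_t widen_to_u64(uint32_t v) { return (uint64_t)v; }
```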

05-19-2016 01:04 PM

This is the wrong way to go about this, because you have no control over whether the array pointer continues to be valid. LabVIEW internally stores arrays as handles, not pointers. If you resize or modify the array elsewhere in your code, LabVIEW could allocate a new array and deallocate the pointer that you've stored.
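To make the handle-versus-pointer distinction concrete, here is a mock of a LabVIEW-style array handle. The type names mimic LabVIEW's conventions, but this is not LabVIEW's code: the real declarations live in extcode.h, and LabVIEW resizes handles with its own memory manager rather than `realloc`. The point is that a resize may move the data block and only the master pointer is updated; a data pointer copied out of `*h` beforehand is not.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Simplified stand-in for a LabVIEW 1D array handle: a pointer to a
 * "master pointer" that points at a block holding the element count
 * followed by the data. Only the double indirection is imitated here. */
typedef struct {
    int32_t dimSize;
    double  elt[];      /* flexible array member */
} DblArr, *DblArrPtr, **DblArrHdl;

/* Resize the array the way a memory manager may: the block can move,
 * but the handle (the address of the master pointer) stays the same.
 * Returns 0 on success, -1 if out of memory. */
int mock_resize(DblArrHdl h, int32_t n)
{
    DblArrPtr moved = realloc(*h, sizeof(DblArr) + (size_t)n * sizeof(double));
    if (moved == NULL)
        return -1;
    moved->dimSize = n;
    *h = moved;   /* only the master pointer is updated */
    return 0;
}
```

Code that always goes through the handle (`(*h)->elt`) keeps working after a resize; a raw `double *` saved earlier may now dangle.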

Instead, use DSNewPtr to allocate a pointer to as much memory as you need, and release it later with DSDisposePtr. Use MoveBlock to copy the data from that pointer into a LabVIEW array when you need it. These functions are all documented in the LabVIEW help, and can be called through a Call Library Function Node with the library name set to "LabVIEW".
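A sketch of the suggested staging-buffer approach. Because DSNewPtr, MoveBlock and DSDisposePtr are only available inside the LabVIEW process, `malloc`, `memcpy` and `free` stand in for them here, and the `staging_*` names are hypothetical. Note that MoveBlock takes the source address first and the destination second, the reverse of `memcpy`.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* The library owns this buffer and controls its lifetime; LabVIEW arrays
 * are only copied into and out of it. */
typedef struct {
    double *data;
    size_t  count;
} staging_buffer;

int staging_alloc(staging_buffer *b, size_t count)          /* ~ DSNewPtr */
{
    b->data = malloc(count * sizeof *b->data);
    b->count = (b->data != NULL) ? count : 0;
    return (b->data != NULL) ? 0 : -1;
}

void staging_fill(staging_buffer *b, const double *src, size_t count)   /* ~ MoveBlock in */
{
    assert(count <= b->count);
    memcpy(b->data, src, count * sizeof *src);
}

void staging_read(const staging_buffer *b, double *dst, size_t count)   /* ~ MoveBlock out */
{
    assert(count <= b->count);
    memcpy(dst, b->data, count * sizeof *dst);
}

void staging_free(staging_buffer *b)                        /* ~ DSDisposePtr */
{
    free(b->data);
    b->data = NULL;
    b->count = 0;
}
```

Once filled, the staging buffer is independent of the LabVIEW array, so later changes to the array do not affect it; the price is one extra copy in each direction.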

When you're getting the unexpected pointer values, is it possible that you're passing in an unallocated (empty) array?

05-19-2016 01:50 PM - edited 05-19-2016 01:51 PM

Nathan is right: strictly speaking, the pointer you pass into the CLN is only valid for the duration of the function call. Most likely, in this particular case, it is also valid inside the In Place Element frame, but definitely not after. When you look at the GPU Toolkit from National Instruments, you will see that they jump through many hoops to make this work reliably; what you do is not enough.

The pointer showing as 0xF0000000 looks suspicious indeed and might be caused by referencing an empty array handle, which LabVIEW normally treats as a null handle value. I would expect the Call Library Node to produce a null pointer for such a handle, but maybe it's something else. But you really need to look at the GPU examples and how they do it there. LabVIEW being a dataflow language has certain implications that you cannot just ignore when interfacing to external code.
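A defensive check that external code could apply when it receives an array as a handle, sketched with a mock handle type. The real types come from extcode.h; `FltArr`, `FltArrHdl` and `array_data_or_null` are illustrative names. It treats a null handle, a null master pointer and a zero element count all as "no data".

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Mock of a LabVIEW-style 1D array handle (not the real declaration). */
typedef struct {
    int32_t dimSize;
    float   elt[];      /* flexible array member */
} FltArr, *FltArrPtr, **FltArrHdl;

/* Return a pointer to the first element, or NULL for an empty array,
 * checking both levels of indirection and the element count. */
float *array_data_or_null(FltArrHdl h)
{
    if (h == NULL || *h == NULL || (*h)->dimSize <= 0)
        return NULL;
    return (*h)->elt;
}
```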

05-19-2016 02:26 PM

@nathand wrote:This is the wrong way to go about this, because you have no control over whether the array pointer continues to be valid. LabVIEW internally stores arrays as handles, not pointers. If you resize or modify the array elsewhere in your code, LabVIEW could allocate a new array and deallocate the pointer that you've stored.

Yes, I'm aware of this, and that's why I jumped through these hoops to create data references. As far as I understand it, these references *pin* the arrays in place; at least, if a data-referenced array is not explicitly dereferenced before LabVIEW quits, you get a memory leak warning.

And as far as 64 bit LabVIEW is concerned, in all my tests it works exactly that way. I went to great lengths to implement test programs that would "resize" or otherwise modify a data-referenced array (and then passed it to a Call Library Node to check the pointer). Apparently LabVIEW creates copies of that array with the changed attributes, yet the pinned memory seems to stay in place: after dereferencing, the array coming out of the dereferencing node contains the very values that my test DLL code wrote to the memory after the LabVIEW array was resized.

@nathand wrote:Instead, use DSNewPtr to allocate a pointer to as much memory as you need, and release it later with DSDisposePtr.

That's of no use to me. I want to pass data that is stored within a LabVIEW array to the library for asynchronous processing. And a staging copy to an intermediary buffer is a no-go: in a pure C program we're pushing some 6 GiB/s into OGOP, while on current-generation Intel CPUs single-threaded memory copy bandwidth peaks at about 8.5 GiB/s per core, and the overhead of managing several copy threads is considerable. I don't expect LabVIEW to deliver the same transfer bandwidth, but at least 2 GiB/s is the benchmark.
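A back-of-the-envelope check with the figures above, assuming a single staging-copy thread running at the quoted peak bandwidth:

$$
\frac{6\,\mathrm{GiB/s}}{8.5\,\mathrm{GiB/s}} \approx 0.71,
\qquad
\frac{2\,\mathrm{GiB/s}}{8.5\,\mathrm{GiB/s}} \approx 0.24
$$

So at the C program's throughput a staging copy alone would keep one core about 71% busy, and even at the 2 GiB/s target it would consume roughly a quarter of a core's copy bandwidth.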

@nathand wrote:When you're getting the unexpected pointer values, is it possible that you're passing in an unallocated (empty) array?

No, because a) I check that the array actually has content (i.e. a nonzero size) before pushing it to the DLL, and b) the very same VI that loads a dataset from storage and passes it to the library works perfectly fine in 64 bit mode, but delivers this nonsensical pointer when called in 32 bit mode.

05-19-2016 02:35 PM

@rolfk wrote:Nathan is right, strictly speaking the pointer you pass into the CLN is only valid for the duration of the function call.

I suspected that much, but I thought that creating a data reference should do the trick; if one does not dereference properly before LabVIEW exits, a memory leak warning pops up. I also implemented several experimental programs to test what happens if an array on which a data reference exists is resized or otherwise modified: whether the memory whose pointer was obtained from the reference sees the in-LabVIEW modifications, and whether modifications to the referenced memory show up in the array returned from dereferencing. What I found was:

- reference LabVIEW array with all 0xdeadbeef

- modify LabVIEW array (resize and fill with 0xcafebabe)

- memory pointed to by what the library got still shows all 0xdeadbeef

- fill memory the library has with all 0x5a5aa5a5

- dereference array => LabVIEW array comes out all 0x5a5aa5a5
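The experiment above could be driven by DLL-side helpers like the following. The names and signatures are hypothetical; they take the U64 address that LabVIEW stored rather than a LabVIEW array, which is the situation being tested. Observing the expected patterns only shows what happened in those runs; it does not by itself prove that the memory remains valid.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fill count 32 bit words at a previously obtained address with pattern. */
void fill_pattern(uint64_t address, size_t count, uint32_t pattern)
{
    uint32_t *words = (uint32_t *)(uintptr_t)address;
    for (size_t i = 0; i < count; i++)
        words[i] = pattern;
}

/* Return 1 if all count words at address equal pattern, 0 otherwise. */
int check_pattern(uint64_t address, size_t count, uint32_t pattern)
{
    const uint32_t *words = (const uint32_t *)(uintptr_t)address;
    for (size_t i = 0; i < count; i++)
        if (words[i] != pattern)
            return 0;
    return 1;
}
```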

@rolfk wrote:

But you really need to look at the GPU examples and how they do it there. LabVIEW being a dataflow language has certain implications that you can not just ignore when interfacing to external code.

I'll definitely look at this, but at least it's not unexpected. It's not so different from functional programming languages, where asynchronous writes to memory are an impure side effect.

05-19-2016 04:55 PM - edited 05-19-2016 04:58 PM

@ag-huber-luebeck wrote:

I'll definitely look at this, but at least it's not unexpected. It's not so different from functional programming languages, where asynchronous writes to memory are an impure side effect.

There definitely is a significant difference! In LabVIEW all the data is managed by LabVIEW dynamically. In most other programming environments, and definitely when you program in C/C++, you have to manage the data explicitly (well, in C++ you can use classes such as those in the Standard Template Library, which manage their internal data automatically without the need to explicitly new and delete it, but that has its own complications).

LabVIEW data is ALWAYS managed by LabVIEW! DVRs allow locked access to it, but it's definitely not valid to dereference a DVR and use the corresponding pointer outside of the IPE structure. That pointer can and sometimes will become invalid if you resize the data in the DVR in any way. The IPE serves as a semaphore around the DVR that prevents any modification of the underlying data by anyone else, and that is not just to eliminate data races on the DVR value but also to lock the data buffer in memory for the duration of the IPE. Outside of that, the data in the DVR is free to be deleted, resized and whatever else.

Also note that a DVR is a LabVIEW reference. As such it is subject to the standard LabVIEW reference garbage collection. This means the DVR lifetime is tied to the VI hierarchy that created the DVR. Once that hierarchy goes idle (stops executing), the DVR is definitely gone from memory, even if you have stored the refnum somewhere and try to access it in some other place in your application. There's a good chance that this is what actually happens in your case. You fail to handle the error from the Read DVR node in the IPE.

Any top-level VI creates its own VI hierarchy. This also includes VIs that you start through VI Server with the Run method or the Start Asynchronous Call node. Only the synchronous VI call is treated like an in-hierarchy execution.
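The locking rule can be summarized with a toy model. This is not how LabVIEW implements DVRs; `toy_dvr` and its functions are hypothetical and single-threaded. It only captures the contract: the data pointer is stable while the reference is locked (inside the IPE), and once unlocked the owner is free to move the data.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct {
    double *data;
    size_t  count;
    int     locked;
} toy_dvr;

/* Entering the IPE: lock and expose the current data pointer. */
double *toy_dvr_begin(toy_dvr *d)
{
    assert(!d->locked);
    d->locked = 1;
    return d->data;
}

/* Leaving the IPE: unlock. A pointer obtained from toy_dvr_begin must
 * not be used after this call. */
void toy_dvr_end(toy_dvr *d)
{
    assert(d->locked);
    d->locked = 0;
}

/* The owner may resize (and thereby move) the data only while unlocked.
 * Returns 0 on success, -1 if locked or out of memory. */
int toy_dvr_resize(toy_dvr *d, size_t count)
{
    if (d->locked)
        return -1;
    double *moved = realloc(d->data, count * sizeof *moved);
    if (moved == NULL)
        return -1;
    d->data = moved;
    d->count = count;
    return 0;
}
```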

05-19-2016 06:07 PM

@rolfk wrote:

@ag-huber-luebeck wrote:

I'll definitely look at this, but at least it's not unexpected. It's not so different from functional programming languages, where asynchronous writes to memory are an impure side effect.

There definitely is a significant difference! In LabVIEW all the data is managed by LabVIEW dynamically. In most other programming environments, and definitely when you program in C/C++, you have to manage the data explicitly (well, in C++ you can use classes such as those in the Standard Template Library, which manage their internal data automatically without the need to explicitly new and delete it, but that has its own complications).

I think you confused "functional" with "imperative" (C, C++, C#, Pascal and Fortran are imperative languages). In a functional language, memory is managed by the runtime environment in a dynamic way, similar to how LabVIEW does it. The most widely known functional languages are Haskell, OCaml, F# and Erlang. For example, a common functional language idiom is the list comprehension, e.g. the following Haskell code

```haskell
squareList l = [x * x | x <- l]
```

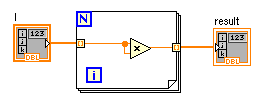

has about the same structure and effects as this LabVIEW diagram

In the Haskell list comprehension, the square brackets denote something similar to a LabVIEW For Loop, where `<-` acts very much like an indexing tunnel. So when I refer to functional languages and impure global state modification, that is functional programming lingo for "stuff that happens outside the control of the runtime environment". And practically every functional language offers "unsafe" constructs to allow interfacing with impure code.

Back to topic.

LabVIEW data is ALWAYS managed by LabVIEW! DVRs allow locked access to it, but it's definitely not valid to dereference a DVR and use the corresponding pointer outside of the IPE structure.

I see. However, the array must be located somewhere in memory, and unless LabVIEW uses a compacting garbage collector, its location in the process address space should not change.

That pointer can and sometimes will become invalid if you resize the data in the DVR in any way.

Well, we're not doing that. Between creating the reference and deleting it, we don't touch the array with LabVIEW functions. So what would be the right way to lock a LabVIEW array in memory? (I guess I can find that in the GPU Toolkit.) Note that for what OGOP does, it's not necessary to access the memory from LabVIEW for the duration of that "memory lock". Eventually there will be a synchronization in the execution chain. But all the performance benefits of GPUs become moot if you have to synchronize the GPU with the CPU every other step.
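One way a DLL could keep its side of such a "memory lock" is a per-buffer count of outstanding asynchronous jobs, so that the "closing bracket" VI refuses to hand the buffer back to LabVIEW while work is pending. The sketch below uses C11 atomics; `buffer_pin` and the `pin_*` functions are hypothetical. It does not by itself stop LabVIEW from moving or freeing the array; it only makes the library's bookkeeping explicit.

```c
#include <assert.h>
#include <stdatomic.h>

/* Per-buffer count of queued jobs that still read or write the buffer. */
typedef struct {
    atomic_int pending_jobs;
} buffer_pin;

void pin_init(buffer_pin *p) { atomic_init(&p->pending_jobs, 0); }

/* Called when a job that uses the buffer is queued. */
void pin_acquire(buffer_pin *p) { atomic_fetch_add(&p->pending_jobs, 1); }

/* Called from the completion path once that job has finished. */
void pin_release(buffer_pin *p)
{
    int before = atomic_fetch_sub(&p->pending_jobs, 1);
    assert(before > 0);   /* unbalanced release */
    (void)before;         /* silence unused warning when NDEBUG is set */
}

/* Returns 1 if the buffer may be handed back to LabVIEW (no work pending). */
int pin_can_release_to_labview(buffer_pin *p)
{
    return atomic_load(&p->pending_jobs) == 0;
}
```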

05-19-2016 06:18 PM - edited 05-19-2016 06:20 PM

You may not be modifying the DVR data yourself outside of the IPE, but that doesn't prevent someone else from doing it. It's of course possible to write in your library documentation that this should not be done and that users should not even attempt to access the DVR data themselves, but we all know that documentation is seldom read, and even when it is, that does not stop people from trying to work around it anyway. Your way of trying to dereference a LabVIEW array outside of its protected environment is proof of that!

Also, unless you intend to destroy the DVR after each use, when finally handing the data to the user you will have to copy it out of the DVR into a real LabVIEW array at some point anyhow, or give the user direct access to the DVR without protecting it in your class private data.

05-20-2016 07:07 AM

So I just installed the NVIDIA GPU Analysis Toolkit and looked at how it does things internally. The (not so big) surprise: they're doing it essentially the very same way I came up with! There's a bit more boilerplate all around to account for the lower-level scope of the CUDA API, but the principle on which it operates is the same:

Create a DVR from the array data, perform a CLN call to retrieve the pointer value, put the pointer together with the DVR and metadata into a typedef'd cluster, and return that.

05-23-2016 04:23 AM

And I remember they do various things in the DLL to actually lock the DVR for the time it is kept in the DLL for asynchronous operation. So it's still not exactly the same as what you do.