CPU update from 4770k to 13900K but not much increase in speed in a data processing VI

Solved! 09-08-2023 06:12 AM

The VI itself is not reentrant.

If you are calling it with data fed from a queue, in a parallel For Loop, or from VIs running in parallel, its not being reentrant will basically force sequential execution.
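
The serialization described above can be imitated in text form. This is only an analogy in Python, not LabVIEW code: the single shared instance of a non-reentrant VI is modelled as one lock, a reentrant VI as no lock, and the function name `peak_concurrency` and its parameters are made up for the illustration.

```python
import threading
import time
from contextlib import nullcontext


def peak_concurrency(reentrant, callers=4, work_seconds=0.02):
    """Return the largest number of callers inside the 'VI' body at once."""
    # Non-reentrant: one shared instance, modelled as a single lock.
    # Reentrant: every caller gets its own clone, modelled as no lock at all.
    vi_instance = nullcontext() if reentrant else threading.Lock()
    stats_lock = threading.Lock()  # protects the counters only
    state = {"active": 0, "peak": 0}

    def caller():
        with vi_instance:
            with stats_lock:
                state["active"] += 1
                state["peak"] = max(state["peak"], state["active"])
            time.sleep(work_seconds)  # stand-in for the real computation
            with stats_lock:
                state["active"] -= 1

    threads = [threading.Thread(target=caller) for _ in range(callers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["peak"]


print("non-reentrant peak:", peak_concurrency(reentrant=False))
print("reentrant peak:", peak_concurrency(reentrant=True))
```

With the lock in place the peak can never exceed one caller, however many threads or cores are available; without it, several callers can overlap.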

09-08-2023 06:13 AM

@obarriel wrote:

Well, maybe the x10 was a bit optimistic. But, for example, the CPU-Z multithread bench shows 8x faster computation for the 13900K (32 threads) than for the 4770K (8 threads): https://valid.x86.fr/bench/8 and when I run this test on both computers I get values very close to the published ones.

But in this LabVIEW VI I only get slightly over 2x faster.

With 1M elements in an array, I'd assume the bottleneck is memory access. A 2x increase between 4th-gen and 13th-gen Core platforms sounds about right.

*double checks*

Yup, an LGA 1150 socket typically had 1600 MHz memory, while an LGA 1700 socket typically uses 3200 MHz.
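
As a back-of-the-envelope check of that ratio, here is a small sketch of theoretical peak memory bandwidth. It assumes the 1600 and 3200 figures are transfer rates in MT/s, a 64-bit (8-byte) bus per channel, and a dual-channel configuration; none of these details are stated in the thread, and sustained bandwidth in practice is lower than the theoretical peak.

```python
def peak_bandwidth_gb_s(transfers_mt_s, channels=2, bus_bytes=8):
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes)."""
    # Assumed defaults: dual channel, 64-bit bus per channel.
    return transfers_mt_s * 1e6 * bus_bytes * channels / 1e9


old_platform = peak_bandwidth_gb_s(1600)
new_platform = peak_bandwidth_gb_s(3200)
print(f"old: {old_platform:.1f} GB/s, new: {new_platform:.1f} GB/s, "
      f"ratio: {new_platform / old_platform:.1f}x")
```

Under these assumptions the ratio is 2x regardless of core count, which is in line with the speed-up observed for the VI.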

09-08-2023 06:31 AM

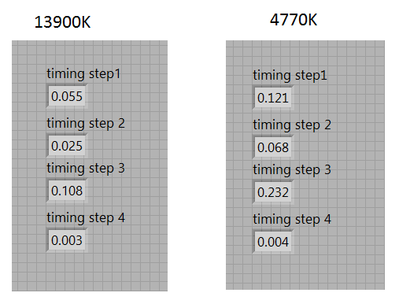

Following CA's advice, I attach the VI, timed in 4 steps.

Here is the timing comparison for each of the steps between the two processors.

I also attach the same program without the MASM toolkit (the pseudoinverse step then becomes considerably slower).

09-08-2023 08:38 AM

Hi Obarriel,

At a quick glance there are still some simple things to try:

1. The loop for step 1 is still only set to 4 parallel instances; you will need to increase that to use the extra parallelism.

2. There are still a lot of coercion dots everywhere. The 2D arrays from step 1 are doubles, which forces step 2 up to double, and then it all gets converted down to SGL for step 3. If you coerce the output of step 1 to SGL, then you avoid coercion up in step 2 and down in step 3.

These coercions are probably single-threaded and will account for some of the overhead that means you don't see the gains you expect from the extra cores.
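
The effect of a single-threaded stage on multi-core scaling can be estimated with Amdahl's law. The sketch below is only an illustration: the parallel fractions are hypothetical values, not measurements of this VI, and the model ignores the per-core speed difference between the two CPUs.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Speed-up over one worker when only `parallel_fraction` of the run time scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Hypothetical parallel fractions; the real value for this VI is unknown.
for p in (0.5, 0.9, 0.99):
    gain = amdahl_speedup(p, 32) / amdahl_speedup(p, 8)
    print(f"parallel fraction {p:.2f}: 8 -> 32 threads gives {gain:.2f}x")
```

The smaller the parallel fraction, the less the jump from 8 to 32 threads buys, which is why serial work such as coercions matters more on the newer CPU.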

Beyond that, I don't see anything that is specifically holding back multi-threading, and this kind of speed-up may be all you can expect from just throwing a new processor at the problem.

There may be other things that can be done to gain performance that will apply to both processors, such as finding ways to avoid additional memory copies. For example, I suspect step 1 could be stripped down to a more basic form to avoid some, and the Build Array in step 2 is going to cause a copy.

The inverse matrix is the biggest cost though and right now that is all handled in the library so the only way to improve on that is to use a different library or different algorithm that may accelerate better.

========

CLA and cRIO Fanatic

My writings on LabVIEW Development are at devs.wiresmithtech.com

09-08-2023 09:13 AM

@James_McN wrote:

Hi Obarriel,

At a quick glance there are still some simple things to try:

1. The loop for step 1 is still only set to 4 parallel instances; you will need to increase that to use the extra parallelism.

2. There are still a lot of coercion dots everywhere. The 2D arrays from step 1 are doubles, which forces step 2 up to double, and then it all gets converted down to SGL for step 3. If you coerce the output of step 1 to SGL, then you avoid coercion up in step 2 and down in step 3.

These coercions are probably single-threaded and will account for some of the overhead that means you don't see the gains you expect from the extra cores.

Beyond that, I don't see anything that is specifically holding back multi-threading, and this kind of speed-up may be all you can expect from just throwing a new processor at the problem.

There may be other things that can be done to gain performance that will apply to both processors, such as finding ways to avoid additional memory copies. For example, I suspect step 1 could be stripped down to a more basic form to avoid some, and the Build Array in step 2 is going to cause a copy.

The inverse matrix is the biggest cost though and right now that is all handled in the library so the only way to improve on that is to use a different library or different algorithm that may accelerate better.

Is this discussion answering the original question that was (to paraphrase), "Why didn't I get the performance increase that I thought I would?" and not, "How do I make my code efficient?"

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

09-08-2023 09:20 AM - edited 09-08-2023 09:33 AM

Also just a quick look, and this would probably only address absolute execution time, not the relative difference for the two CPUs.

I notice in step 1 that you're generating a sine wave pattern that might contain a lot of redundant information. For example, if the "Main array 16bit" is sized at ~1M, and your detected frequency is maybe 1/100 of the sample rate, then you'd be generating 10k cycles worth of sine wave.

If so, then what exactly do you learn by doing all that downstream processing on 10k cycles worth of this generated sine that you wouldn't learn by processing a much smaller # of cycles, perhaps as small as 1?

If the 'cycles' input to the sine pattern generator is often >>1, this seems like a prime candidate to consider for speeding things up dramatically. It doesn't speak to the CPU differences, but perhaps it could make the point moot?
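
The arithmetic behind the 10k figure, and the redundancy it implies, can be sketched as follows. The function names are hypothetical and the sketch assumes an integer number of samples per cycle; whether the downstream steps can actually work from fewer cycles depends on the algorithm, which is exactly the question raised above.

```python
import math


def generated_cycles(num_samples, samples_per_cycle):
    """Number of sine cycles contained in num_samples."""
    return num_samples / samples_per_cycle


def sine_pattern(num_samples, samples_per_cycle):
    """Unit-amplitude sine sampled at a fixed number of samples per cycle."""
    return [math.sin(2 * math.pi * i / samples_per_cycle)
            for i in range(num_samples)]


# ~1M samples at 1/100 of the sample rate: 100 samples per cycle.
print("cycles:", generated_cycles(1_000_000, 100))

# Every later cycle repeats the first one, up to floating-point rounding.
one_cycle = sine_pattern(100, 100)
ten_cycles = sine_pattern(1000, 100)
max_diff = max(abs(ten_cycles[i] - one_cycle[i % 100]) for i in range(1000))
print("largest deviation from the first cycle:", max_diff)
```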

-Kevin P

P.S. Didn't see billko's latest comment until after posting. While fair to point out that making the code more efficient in general wasn't the original question, I'd also add that at least sometimes, the question that *is* asked isn't the one that *should* have been. Here, IMO, it seems fair to suppose that the most important goal was to speed up processing. Being surprised and disappointed that a new CPU didn't help "enough" led to the original specific question, but the CPU change was just one technique for trying to solve the speed problem. Suggestions for other efficiencies are alternative techniques for that same goal.

09-08-2023 09:48 AM

As best I can. To summarise again the direct answers:

* There are DBL-to-SGL coercions that will be serialised before entering the multicore toolkit, which will limit the speed-up from multiple cores.

* Step 1 only generates 4 parallel instances, though now I see that is because the dimension size is 4, so step 1 won't benefit from more cores anyway, only from faster cores.

And from previous answers:

* The multicore toolkit does not appear to be optimized for modern instruction sets, which might be where some of the benchmark gains come from (both models have these extensions, but the new processor may have more optimization for them that we don't get to take advantage of).
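
The point about step 1 can be put in numbers with a tiny sketch. It assumes all loop iterations cost the same and ignores scheduling overhead; `loop_passes` is a made-up helper name.

```python
import math


def loop_passes(iterations, workers):
    """Sequential passes needed to run equal-cost iterations on the given workers."""
    return math.ceil(iterations / workers)


# Step 1 has only 4 iterations, so anything beyond 4 workers sits idle.
for workers in (2, 4, 8, 32):
    print(workers, "workers ->", loop_passes(4, workers), "pass(es)")
```

With 4 iterations, 4, 8 and 32 workers all need a single pass, so only faster individual cores shorten that step.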

09-08-2023 10:28 AM - edited 09-08-2023 10:30 AM

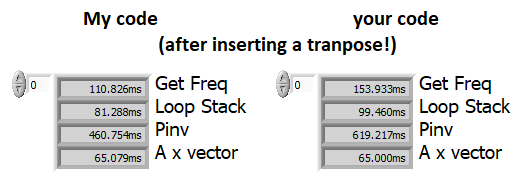

@obarriel wrote:

I also attach the same program without the MASM toolkit (the pseudoinverse step then becomes considerably slower).

I think you have an error, because the A x vector fails unless you transpose the matrix first (which explains why step 4 is so artificially fast in your result!).
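
The dimension rule behind this can be shown in a few lines. This is a plain-Python sketch with made-up helper names, not the LabVIEW or MASM functions; how the LabVIEW function reports the mismatch is not reproduced here, so the sketch simply raises an exception.

```python
def transpose(matrix):
    """Swap rows and columns of a list-of-rows matrix."""
    return [list(column) for column in zip(*matrix)]


def mat_vec(matrix, vector):
    """A x v: the length of v must equal the number of columns of A."""
    if any(len(row) != len(vector) for row in matrix):
        raise ValueError("A x v needs len(v) == number of columns of A")
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def vec_mat(vector, matrix):
    """v x A, computed as (A transposed) x v."""
    return mat_vec(transpose(matrix), vector)


A = [[1, 2, 3],
     [4, 5, 6]]   # 2 rows, 3 columns
v = [1, 1]        # length 2: matches the rows of A, not its columns

try:
    mat_vec(A, v)
except ValueError as err:
    print("A x v failed:", err)

print("v x A =", vec_mat(v, A))
```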

And yes, on my ancient 8-year-old 2-core laptop (i7-5600U), things are not that bad, and I was able to get tens of % better by just bringing some sanity to the code, i.e. cleaning up just the coercions and making everything DBL! (This is the code without MASM!)

This is just the tip of the iceberg.
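
To illustrate why stray coercions cost time, here is a small Python analogy using the standard `array` module, where typecode `"d"` plays the role of DBL and `"f"` the role of SGL. It says nothing about how LabVIEW implements coercion internally; it only shows that each conversion is a full pass over the data into a newly allocated buffer.

```python
from array import array


def convert(values, typecode):
    """Copy values into a new array of the given element type."""
    return array(typecode, list(values))


dbl = array("d", [0.1, 0.2, 0.3])   # 8 bytes per element
sgl = convert(dbl, "f")             # DBL -> SGL: new buffer, 4 bytes per element
back = convert(sgl, "d")            # SGL -> DBL: another new buffer

print("itemsize DBL:", dbl.itemsize, "SGL:", sgl.itemsize)
print("round trip preserved 0.1 exactly:", back[0] == dbl[0])
```

Keeping one representation throughout, as done above with DBL, avoids these extra passes entirely.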

09-08-2023 10:59 AM

@billko wrote:

Is this discussion answering the original question that was (to paraphrase), "Why didn't I get the performance increase that I thought I would?" and not, "How do I make my code efficient?"

I think that has been answered.

Now we're just being nerdy friendly.

09-08-2023 11:02 AM

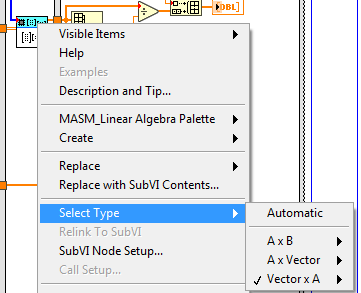

Thanks!!

There was no error in the version of the code using MASM, because it allows you to choose vector x A (this is not possible in the "normal" version).

But you are totally right that the version without MASM that I posted missed the transpose.

To everybody:

Yes, my original question was why the performance improvement from the 4770K to the 13900K was so limited. And that still remains. But anyway, I also very much appreciate any tips to make the code more efficient. I'm trying to learn from them.