1. The counter's 32 bits aren't like an A/D converter's bits -- you don't use them to figure out resolution over a voltage range. They're just the size of the count register and tell you how high you can count before rollover.

The minimum measurable timing change will generally be 2 periods of the timebase used for the measurement. For a 62xx M-series board, the fastest timebase is 80 MHz (12.5 nanosec per period), so the minimum measurement interval will be 2 × 12.5 = 25 nanosec.
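To make item 1 concrete, here's a minimal sketch of the arithmetic. It assumes the counter simply counts ticks of the 80 MHz timebase across the measured interval. The 32 bits, the 80 MHz timebase, and the "about 2 periods" rule of thumb come from the answer above. The function names are made up for illustration and aren't part of any NI API.

```python
# Sketch only: arithmetic implied by a 32-bit count register and an 80 MHz timebase.
# Assumes the counter counts timebase ticks across the measured interval.
# Function names are hypothetical, not an NI-DAQmx API.

COUNTER_BITS = 32
TIMEBASE_HZ = 80e6  # fastest M-series timebase mentioned in the post


def max_count(bits: int) -> int:
    """Largest value the count register can hold before rolling over to 0."""
    return 2**bits - 1


def timebase_period_s(timebase_hz: float) -> float:
    """Duration of one timebase tick, in seconds."""
    return 1.0 / timebase_hz


def rollover_time_s(bits: int, timebase_hz: float) -> float:
    """Time until the register wraps back to 0 when counting this timebase."""
    return 2**bits / timebase_hz


def min_interval_s(timebase_hz: float, periods: int = 2) -> float:
    """Rule of thumb from the post: roughly 2 timebase periods."""
    return periods / timebase_hz


if __name__ == "__main__":
    print(f"max count:       {max_count(COUNTER_BITS)}")
    print(f"timebase period: {timebase_period_s(TIMEBASE_HZ) * 1e9:.1f} ns")
    print(f"min interval:    {min_interval_s(TIMEBASE_HZ) * 1e9:.1f} ns")
    print(f"rollover after:  {rollover_time_s(COUNTER_BITS, TIMEBASE_HZ):.2f} s")
```

This shows that the bit count only limits how *long* an interval you can count (roughly 53.7 s at 80 MHz before rollover). The timebase frequency is what sets how *finely* you can resolve it.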

2. 50 ppm is already a %-like unitless spec (parts per million instead of parts per hundred). If your measurement takes a second, the error could be as much as 50 millionths of a second (50 µs). If 10 seconds, then up to 500 millionths (500 µs), etc. There's likely a small temperature-dependent component to the accuracy too, but generally the 50 ppm scales pretty straightforwardly with the duration of the measurement.
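For item 2, a minimal sketch of the linear ppm scaling. It uses the 50 ppm figure from the post and, as a simplifying assumption, ignores the temperature-dependent component mentioned above.

```python
# Sketch only: worst-case timing error from a ppm accuracy spec.
# Assumes the error scales linearly with duration; temperature drift is ignored.


def worst_case_error_s(duration_s: float, accuracy_ppm: float = 50.0) -> float:
    """Worst-case +/- error in seconds for a measurement lasting duration_s."""
    return duration_s * accuracy_ppm * 1e-6


if __name__ == "__main__":
    for duration in (1.0, 10.0):
        err_us = worst_case_error_s(duration) * 1e6
        print(f"{duration:>5.1f} s measurement -> up to +/-{err_us:.0f} us")
```

Because ppm is a ratio, the same function works for any duration. Only `accuracy_ppm` changes if your board has a different spec.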

3. No way of knowing without a reference standard. It's spec'ed to within 50 ppm, but where it lands within that +/- 50 ppm is unknown.

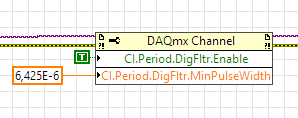

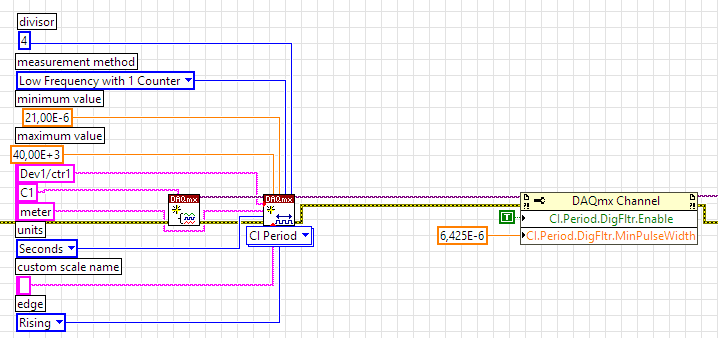
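For item 3, here's a rough sketch of how a reference standard would let you pin down where your particular board lands within ±50 ppm. It assumes you can measure the duration of a reference interval that is known to be far more accurate than 50 ppm. The 1 s reference and the 1.000012 s reading below are hypothetical illustration values, not real data. A fast timebase counts extra ticks, so it makes measured intervals read long. The offset found this way is only a snapshot, since it can drift with temperature and aging.

```python
# Sketch only: estimating a board's timebase offset against a reference interval.
# The reference value and reading below are hypothetical, not measured data.


def offset_ppm(measured_s: float, reference_s: float) -> float:
    """Fractional error of the measured interval, in ppm (positive = timebase fast)."""
    return (measured_s / reference_s - 1.0) * 1e6


def corrected_interval_s(measured_s: float, offset: float) -> float:
    """Remove a previously determined ppm offset from a later measurement."""
    return measured_s / (1.0 + offset * 1e-6)


def within_spec(offset: float, spec_ppm: float = 50.0) -> bool:
    """True if the observed offset falls inside the +/- spec_ppm band."""
    return abs(offset) <= spec_ppm


if __name__ == "__main__":
    reference = 1.0  # hypothetical 1 s reference interval
    reading = 1.000012  # hypothetical board measurement of that interval
    off = offset_ppm(reading, reference)
    print(f"offset: {off:+.1f} ppm, within spec: {within_spec(off)}")
    print(f"corrected: {corrected_interval_s(reading, off):.9f} s")
```

Without that reference, all you can say is that `off` lies somewhere in the ±50 ppm band.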

4. Can't make a blanket statement for all possible filter settings and all kinds of measurements. It might have *no* effect, but it depends on what you're measuring and how you're filtering.

-Kevin P
