Kinesthesia - A Kinect Based Rehabilitation and Surgical Analysis System, UK

Contact Information

University: University of Leeds

Team Member(s): Chris Norman, Dominic Clark and Barnaby Cotter

Faculty Advisors: Prof Martin Levesley, Dr Pete Culmer

Email Address: mn08cjn@leeds.ac.uk, mn08dpc@leeds.ac.uk, mn08bc@leeds.ac.uk

Country: United Kingdom

Project Information

Title: Kinesthesia

Kinesthesia: "Awareness of the position and movement of the parts of the body."

Description:

This project follows the development of a user-friendly interface between the Microsoft Kinect and National Instruments' LabVIEW. Additionally, the development of applications for use in the fields of stroke rehabilitation, gait analysis and laparoscopic surgery is detailed.

This LabVIEW-Kinect toolkit is designed to allow both ourselves and others to easily interface the RGB camera, depth camera and skeletal tracking functionalities of the Kinect with any LabVIEW system. In addition to the medical tools covered, a number of further examples are provided to showcase the potential for connectivity between the two systems, and how Microsoft's Natural User Interface (NUI) can be used for modern system control. The project has been featured on Microsoft's UBelly Blog (http://www.ubelly.com/2012/03/innovative-tech-man-3-students-and-a-kinect-sdk/) and on Channel 9 as the 'Inspirational Project of the Day' (http://channel9.msdn.com/coding4fun/kinect/Kinesthesia-A-Kinect-Based-Rehabilitation-and-Surgical-An...). The team are currently working in collaboration with the Neurosurgery department of the University of California, Los Angeles, to supply gait analysis and ARAT software as post-operative assessment tools.

The team are currently working with National Instruments to make the Kinesthesia toolkit available to the public through the LabVIEW Tools Network.

Products: NI LabVIEW, NI Vision Development Module, Microsoft Kinect, Microsoft Kinect SDK

Below is a list of the applications that have been developed as part of the project.

VTOL Rig Control

The video below shows an example of how we can interface the Microsoft Kinect with LabVIEW to produce an intuitive control system. Using the Kinect's skeleton tracking function, we show how a Vertical Takeoff and Landing (VTOL) demonstration rig can be controlled with the body, interfacing via an NI DAQ board. Users can control the rig with a hand control, a signal generator or, using the Kinect, their body position. In this last case, the angle of the spine is calculated on the fly and used to control the voltage to the two motors. Additionally, the system can be operated through a manual or a Fly-by-Wire control system.

Figure 1: Kinect and LabVIEW controlled VTOL demonstration rig.
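The spine-angle control described above can be illustrated with a short sketch. This is not the team's LabVIEW code: the joint names, the 2.5 V hover voltage, the gain and the 0 to 5 V output range are all assumptions chosen for illustration, as is the choice of which motor speeds up when the user leans.

```python
import math


def spine_lean_angle(hip_center, shoulder_center):
    """Return the lean of the spine from vertical, in degrees.

    Both arguments are (x, y, z) joint positions. Only the X-Y plane is
    used, so a positive result means the shoulders sit towards +X of the hips.
    """
    dx = shoulder_center[0] - hip_center[0]
    dy = shoulder_center[1] - hip_center[1]
    return math.degrees(math.atan2(dx, dy))


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def motor_voltages(angle_deg, base_v=2.5, gain_v_per_deg=0.05,
                   v_min=0.0, v_max=5.0):
    """Map a lean angle to a (left, right) motor voltage pair.

    Hypothetical differential scheme: one motor gains what the other
    loses, and both are clamped to the assumed analogue output range.
    """
    delta = gain_v_per_deg * angle_deg
    left = clamp(base_v + delta, v_min, v_max)
    right = clamp(base_v - delta, v_min, v_max)
    return left, right


if __name__ == "__main__":
    # Shoulders 10 cm to the side of the hips, 50 cm above them.
    angle = spine_lean_angle((0.0, 0.0, 2.0), (0.1, 0.5, 2.0))
    print(angle, motor_voltages(angle))
```

In a real rig the two voltages would be written to the DAQ board's analogue outputs each frame; clamping keeps a large lean from commanding a voltage outside the range the hardware accepts.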

3D Model Explorer

The video below demonstrates one of the applications produced using the Kinesthesia Toolkit. The user's gestures are picked up by the Kinect's skeleton tracking and used to manipulate the 3D picture control within LabVIEW. In the example program, any .stl file can be loaded into the picture control. An .stl parser finds the size of the model, and user manipulations are scaled to match. In the video, a simple cube and an .stl model of a colon, obtained through post-processing of a CT (Computed Tomography) scan, are used. This program has potential applications within operating theatres; however, it is equally valid for manipulating any 3D data such as CAD models or, as the team has also developed, for navigating slides in a PowerPoint presentation.

Figure 2: Gesture based 3D picture control manipulation
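As a rough illustration of the "find the model size, then scale the gesture" step, the sketch below reads the bounding box of an ASCII .stl file and scales a hand displacement to it. It is an assumption-laden stand-in for the LabVIEW parser: it handles only ASCII (not binary) .stl, and the `reach_m` parameter, the hand travel that should span the model's largest dimension, is a hypothetical tuning value.

```python
def stl_bounding_box(stl_text):
    """Return ((min_x, min_y, min_z), (max_x, max_y, max_z)) of an ASCII STL."""
    mins = [float("inf")] * 3
    maxs = [float("-inf")] * 3
    found = False
    for line in stl_text.splitlines():
        parts = line.split()
        # ASCII STL vertex lines look like: "vertex x y z"
        if len(parts) == 4 and parts[0] == "vertex":
            found = True
            for axis in range(3):
                value = float(parts[axis + 1])
                mins[axis] = min(mins[axis], value)
                maxs[axis] = max(maxs[axis], value)
    if not found:
        raise ValueError("no vertex lines found; is this an ASCII STL?")
    return tuple(mins), tuple(maxs)


def scale_hand_motion(hand_delta_m, bbox, reach_m=0.5):
    """Scale a hand displacement (metres) into model units.

    `reach_m` metres of hand travel are mapped onto the model's largest
    dimension, so small and large models respond alike on screen.
    """
    mins, maxs = bbox
    largest = max(hi - lo for lo, hi in zip(mins, maxs))
    factor = largest / reach_m
    return tuple(d * factor for d in hand_delta_m)


if __name__ == "__main__":
    demo = """solid demo
    facet normal 0 0 1
      outer loop
        vertex 0 0 0
        vertex 10 0 0
        vertex 0 20 5
      endloop
    endfacet
    endsolid demo"""
    box = stl_bounding_box(demo)
    print(box, scale_hand_motion((0.25, 0.0, 0.0), box))
```

Scaling by the largest dimension is one simple choice; scaling each axis independently would distort the motion for long, thin models.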

The Challenges:

In addition to developing a driver set and toolkit for the Kinect, we are targeting our application development at three key areas:

Stroke Rehabilitation

Stroke, the disturbance of blood supply to the brain, is the leading cause of disability in adults in the USA and Europe, and has a profound effect on the quality of life of those affected. The physical effects of stroke are numerous, and there exists a substantial field of study devoted to the assistance and rehabilitation of stroke sufferers. Rehabilitation is a complex and multidisciplinary affair, requiring constantly monitored cognitive and physical therapy. As such, there is a need for a system that provides mental stimulation to the patient whilst accurately recording body position and movement, allowing physiotherapists to both maintain patient motivation and extract detailed information on their movements.

Laparoscopic Surgery

With the advent and continued development of laparoscopic (keyhole) surgery, it is essential that the operating surgeon has accurate and up-to-date information on an area that may not be directly visible to them. Before operating, the abdomen is usually inflated to provide the surgeon with the necessary space to both view and access the internal organs. However, it is difficult for the surgeon to accurately gauge the level of inflation and the working space available to them. A tool that can determine inflation of the abdomen and provide an estimate of the inflated volume would increase the safety of procedures and provide the surgeon with more information about the patient prior to operation.

Gait Analysis

It has been suggested that a person's gait is more distinctive than their fingerprint. Indeed, the way in which we walk can offer insight into a number of medical problems which may take longer to present in other forms. Stroke, for example, can result in a defined limp on one side of the body, and the extent of this limp may offer further information on the extent of the stroke. Similarly, analysis of one's gait can also offer information on struggling hip, knee or ankle joints, and can suggest not only that a joint replacement is required, but also that a specific type of replacement would be suitable. A low-cost investigative tool that can be used prior to expensive specialist referrals would offer a significant benefit to clinicians.

The Solution:

The Microsoft Kinect has already revolutionised the gaming industry with its ability to track users' motions, marking a key movement away from traditional control systems. This project aims to take advantage of Microsoft's innovative technology by interfacing it with NI LabVIEW through a fully functional LabVIEW driver and toolkit. With this in place, we are developing a selection of motion tracking tools and programs specifically aimed at tackling the three challenges above:

Figure 3: Kinect Sensor

Stroke Rehabilitation

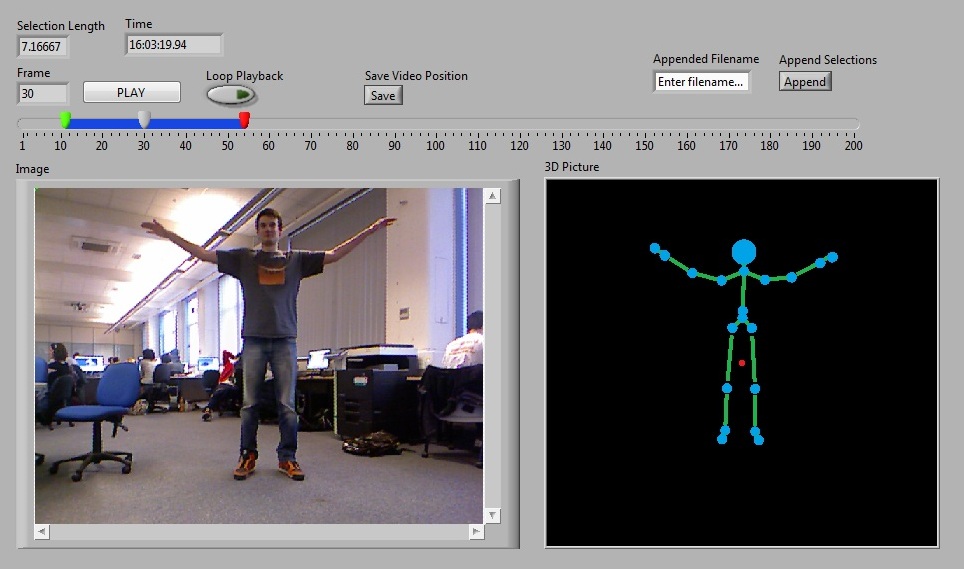

Currently, a number of technologies exist to track patient movements. However, these systems can cost upwards of £40,000 per camera, they require the user to wear markers placed on the skin or clothing, and they are often significantly more accurate than is necessary, providing no extra insight into patients' movements at a large capital expense. As such, an easy-to-use, Kinect-based system that can produce similar results at a fraction of the cost presents a significant advantage. We have developed a system that records normal camera footage of a patient alongside a full 3D rendering of the user's skeleton, allowing the operator to rotate and explore the user's movements. This VI embeds the skeletal data directly into an .avi file. A further VI allows this video to be reviewed, trimmed and saved, giving selected footage of a physiotherapy session with a 3D, rotatable rendering of the patient's skeleton alongside the raw video footage, as can be seen in Figure 4.

Figure 4: Video analysis suite developed for physiotherapists to play back, edit and analyse data recorded from assessments.

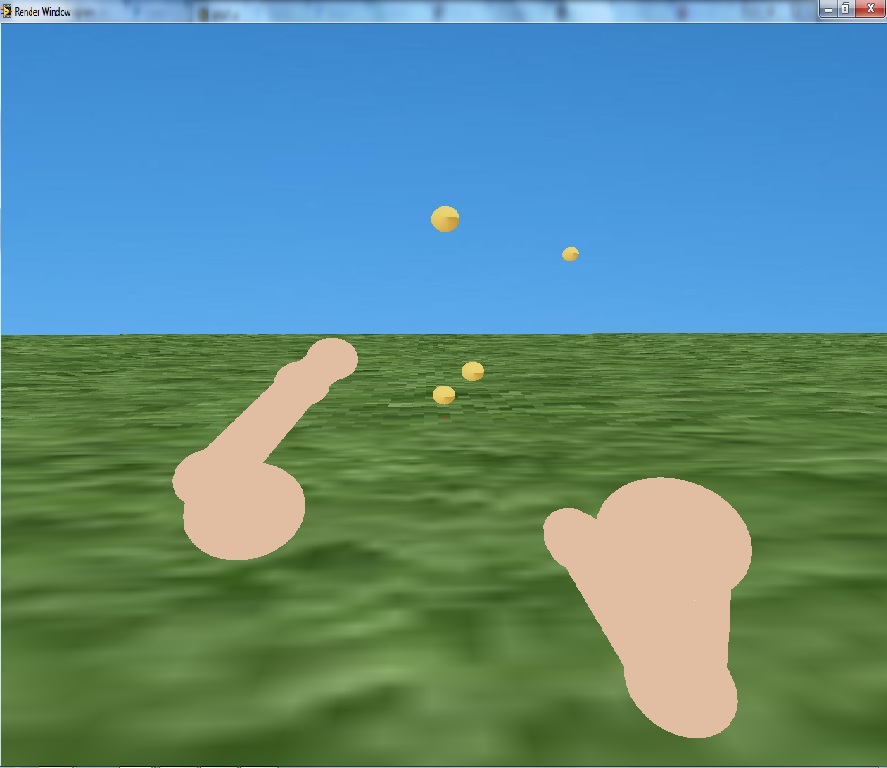

Further to this, a virtual stroke rehabilitation environment has been created within LabVIEW that provides patients with tasks to perform based upon the industry-standard ARAT (Action Research Arm Test). These tasks are intended to mimic day-to-day human movements. An example of this application can be seen in Figures 5 and 6.


Figure 5: A demonstration of the Virtual ARAT test being developed, showing the user's arms moving in real time with camera control maintained by head position.

Figure 6: The 3D scene created for the Virtual ARAT tests.

Laparoscopic Surgery

Using the depth-mapping functionality of the Kinect, the depth from the camera to the abdomen for each pixel in the camera's range is determined. This information is fed into LabVIEW, and processed, using approximations of the geometry of the abdomen, in order to determine the inflated volume, and provide surgeons with a more accurate picture of the intra-abdominal cavity.
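One simple way to turn depth maps into a volume estimate is sketched below. It is not the geometric approximation the team used: it assumes two depth maps of the same view (before and after inflation, in metres), a pinhole camera model, and focal lengths `fx` and `fy` in pixels that would have to come from a calibration. Under those assumptions, the slab of space behind one pixel between depths z1 and z0 has volume (z0³ − z1³) / (3·fx·fy).

```python
def volume_between_depth_maps(before_m, after_m, fx, fy):
    """Estimate the volume (cubic metres) gained between two depth maps.

    before_m, after_m: equal-sized 2D lists of depths in metres, with
    values <= 0 treated as invalid readings (an assumption).
    fx, fy: focal lengths in pixels (assumed known from calibration).
    Only pixels where the surface moved towards the camera contribute.
    """
    total = 0.0
    for row_before, row_after in zip(before_m, after_m):
        for z0, z1 in zip(row_before, row_after):
            if z0 <= 0 or z1 <= 0:
                continue  # skip pixels with no valid depth
            if z1 < z0:
                # Volume of the pixel's viewing frustum between z1 and z0.
                total += (z0 ** 3 - z1 ** 3) / (3.0 * fx * fy)
    return total


if __name__ == "__main__":
    before = [[1.00, 1.00], [1.00, 0.0]]   # 0.0 marks an invalid pixel
    after = [[0.95, 0.90], [1.00, 0.90]]
    # Focal lengths here are placeholders, not calibrated Kinect values.
    print(volume_between_depth_maps(before, after, fx=580.0, fy=580.0))
```

Because each pixel covers more area the further away the surface is, the cubic form matters: multiplying the depth change by a fixed pixel area would bias the estimate as the camera distance changes.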

Gesture based manipulation of the .stl files allows surgeons to manipulate CT scans and 3D models wirelessly without the need to leave the sterile operating environment.

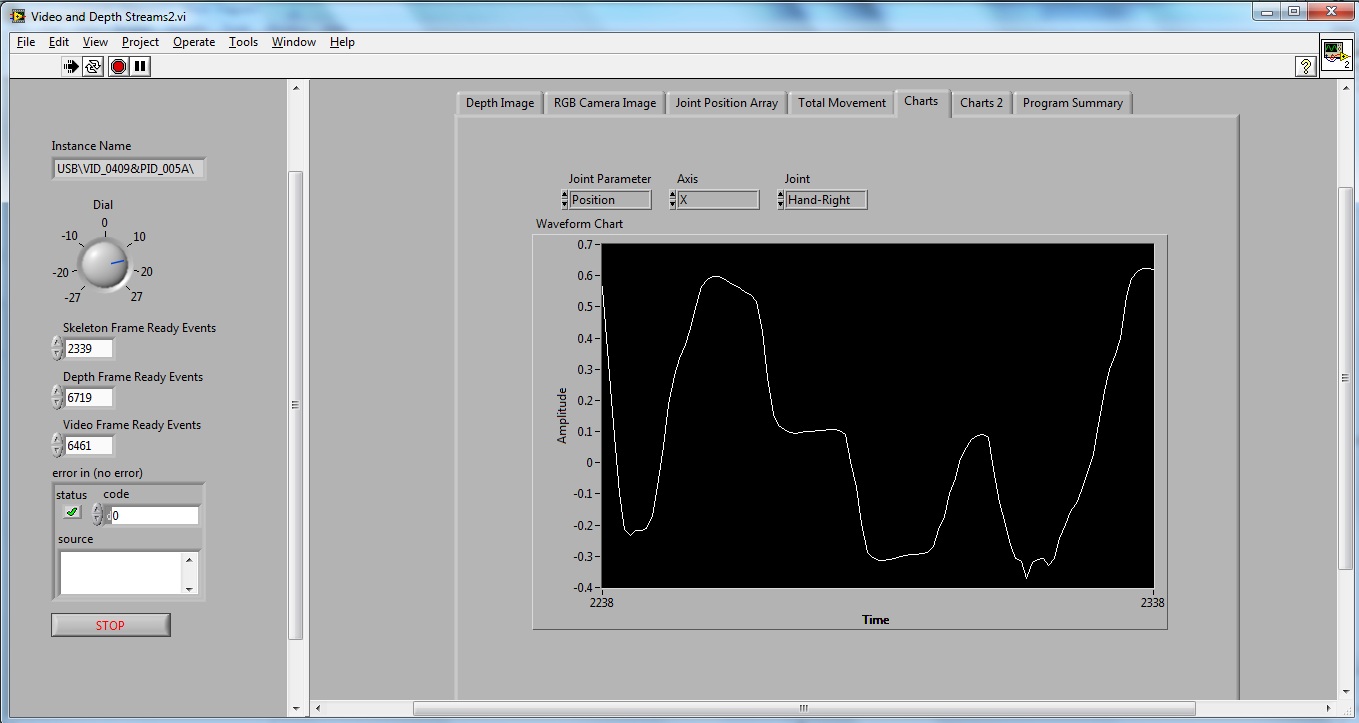

Gait Analysis

As with stroke rehabilitation, gait analysis is often undertaken by attaching various sensors to the patient's body, the locations of which are then fed into the computer system. This is generally a long and drawn-out affair, which may result in patient discomfort. Using the Kinect to track the user's skeleton, gait can be quickly analysed, and important metrics concerning the patient can be calculated in LabVIEW and fed back to the operator. Figure 7 shows the real-time position tracking of a patient's right hand in the X axis. A user can select any of the 20 joints to track and access a number of metrics on this joint.

Figure 7: An example of the data that can be obtained on the fly from the system. The program allows the smoothness and magnitude of movements to be monitored, as well as the velocity and acceleration of any one of the 20 joints in the X, Y and Z axes.
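The per-joint metrics mentioned above can be approximated from a series of position samples by finite differences. The sketch below is illustrative only: the fixed sample rate is an assumption, and RMS jerk is used as one common stand-in for "smoothness", since the metric actually used in the LabVIEW program is not stated.

```python
import math


def derivative(samples, dt):
    """Differentiate evenly spaced samples.

    Uses central differences in the interior and one-sided differences
    at the two ends, so the output has the same length as the input.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    result = []
    for i in range(n):
        lo = max(i - 1, 0)
        hi = min(i + 1, n - 1)
        result.append((samples[hi] - samples[lo]) / ((hi - lo) * dt))
    return result


def joint_metrics(positions, sample_rate_hz=30.0):
    """Summarise one axis of one joint's motion.

    positions: joint coordinate samples along a single axis.
    sample_rate_hz: assumed fixed frame rate of the skeleton stream.
    """
    dt = 1.0 / sample_rate_hz
    velocity = derivative(positions, dt)
    acceleration = derivative(velocity, dt)
    jerk = derivative(acceleration, dt)
    return {
        "magnitude": max(positions) - min(positions),
        "peak_speed": max(abs(v) for v in velocity),
        "peak_acceleration": max(abs(a) for a in acceleration),
        # Lower RMS jerk indicates a smoother movement.
        "rms_jerk": math.sqrt(sum(j * j for j in jerk) / len(jerk)),
    }


if __name__ == "__main__":
    right_hand_x = [0.00, 0.01, 0.03, 0.06, 0.10, 0.13, 0.15, 0.16]
    print(joint_metrics(right_hand_x))
```

Raw skeleton data is noisy, and each differentiation amplifies that noise, so in practice the positions would usually be low-pass filtered before acceleration or jerk is computed.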

Validation:

In order to assess the accuracy of the Kinect's skeletal tracking, we tested the Kinect against Optotrak, the system currently used by the university. This camera system is the current industry benchmark, and is capable of extremely accurate marker tracking down to sub-millimetre levels. The system at the university is currently set up to track arm motion in stroke patients, monitoring speed, accuracy and fluidity of motion. Small wired sensors are attached at various points on the user's upper body; their positions are then determined by a triscopic camera and relayed to a LabVIEW processing environment. The high accuracy of this system makes it well suited as a validation tool, effectively providing a gold standard against which we can compare the Kinect. We used what is known as a 'far reach' exercise for our initial validation. This consists of the user sitting up straight in a chair and attempting to touch a target on an LCD screen with their hand whilst maintaining a vertical trunk. At a later date, we performed full standing validation for compound upper body movements.
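A comparison of this kind is often summarised as a root-mean-square error between the two systems' trajectories. The sketch below shows one way to compute it; it is not the team's analysis, and it assumes the two recordings are already time-aligned, resampled to the same length, expressed in the same units, and measured along the same axis. A constant offset is removed first because the two systems have different origins.

```python
import math


def rmse_after_offset_removal(kinect, reference):
    """RMS error between two aligned 1D trajectories.

    kinect, reference: equal-length lists of positions along one axis.
    The mean difference is subtracted first, so only the shape of the
    trajectories is compared, not the choice of coordinate origin.
    """
    if len(kinect) != len(reference) or not kinect:
        raise ValueError("trajectories must be non-empty and equal length")
    n = len(kinect)
    offset = sum(k - r for k, r in zip(kinect, reference)) / n
    squared = sum((k - r - offset) ** 2 for k, r in zip(kinect, reference))
    return math.sqrt(squared / n)


if __name__ == "__main__":
    # Hypothetical hand X positions in millimetres during a reach.
    optotrak = [0.0, 50.0, 120.0, 200.0, 260.0]
    kinect = [12.0, 58.0, 135.0, 209.0, 275.0]
    print(rmse_after_offset_removal(kinect, optotrak))
```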

Below are photos of the Optotrak system being used to record our primary validation measurements.


Figure 8: Optotrak (TM) camera, an industry-standard motion tracking system capable of sub-millimetre accuracy.


Figure 9: A team member using the Optotrak rig developed for stroke patient reach exercises, being compared with the Kinect (visible in upper right)


Figure 10: Optotrak rig from another angle, showing the LCD screen which the patient must attempt to touch.

Figure 11: Video footage of the Optotrak rig in use.

Toolkit

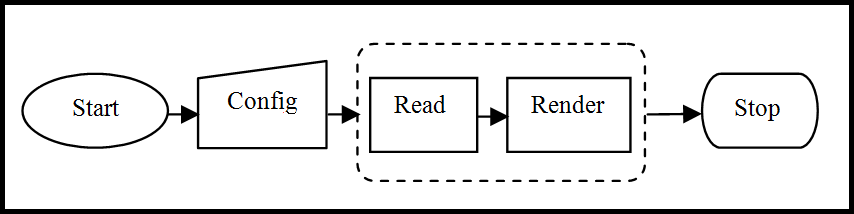

The toolkit is now complete, and allows the user to initialise and close the Kinect's different components through polymorphic VIs (RGB Camera, Depth Camera and Skeletal Tracking) dependent on the functionalities they require.

The toolkit is laid out in accordance with National Instruments' official driver layout:

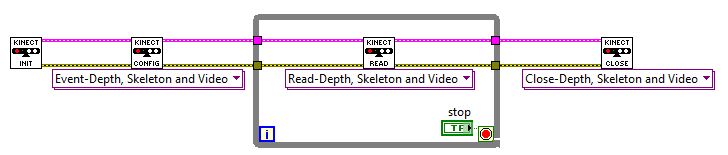

Figure 12: Operational Layout for the Kinect LabVIEW toolkit.

Figure 13 shows the block diagram of the polymorphic Kinesthesia toolkit. A developer simply has to drag these four VIs onto a block diagram and can select which data streams they wish to access.

Figure 13: Complete Kinesthesia Toolkit allowing access to all data streams from the Kinect through the polymorphic VIs.

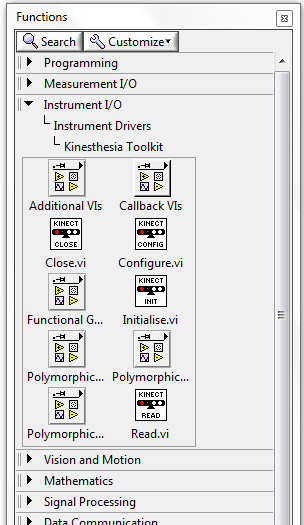

The Kinesthesia toolkit can be added to the LabVIEW functions palette, allowing simple drag-and-drop access to the Kinect sensor's features.

Figure 14: Kinesthesia Toolkit accessible from the Instrument Drivers panel on the Functions Palette

A number of sub-VIs are either completed or approaching completion, which relate to the processing, extracting and displaying of data from the Kinect. Figure 13 shows the polymorphic VIs that the user can simply drag into the block diagram; the code displayed lets users access the video, depth and skeleton data. In this example, the code also produces a 3D picture control plot of the skeleton on the fly. The toolkit is colour coded such that initialise, configure, read, display and close are shown in green, purple, blue, orange and red, respectively.

Future Development:

The system has great scope for future development, and we look forward to seeing what other people use the LabVIEW toolkit for when it is released on the LabVIEW Tools Network. In relation to furthering our own work, the system has the potential to allow a rehabilitation user to be completely independent. After an initial meeting with a physiotherapist, and with a rehabilitation regime in place, the user could perform all the tasks at home, with the data, including recorded video, sent back to the physiotherapist, who could analyse the information remotely, a practice known as telerehabilitation. This would be especially useful for users who currently struggle to leave the house, or may feel burdened by regular rehabilitation appointments.

Work will be ongoing to allow for upper body tracking of the skeleton with releases of new SDKs for the Kinect.


Hey ChrisNorman,

Thank you so much for your project submission into the Global NI LabVIEW Student Design Competition. It looks great! Make sure you share your project URL ( https://decibel.ni.com/content/docs/DOC-20973 ) with your peers and faculty so you can collect votes ("Likes") for your project and win. If any of your friends have any questions about how to go about "voting" for your project, tell them to read this brief document (https://decibel.ni.com/content/docs/DOC-16409). Be sure to provide some pictures and/or videos in order to help others visualize your project. If your team is interested in getting certified in LabVIEW, we are offering students who participate in our Global NI LabVIEW Student Design Competition the opportunity to achieve certification at a fraction of the cost. It's a great opportunity to test your skills and enhance your resume at the same time. Check it out: https://lumen.ni.com/nicif/us/academiccladstudentdiscount/preview.xhtml.

Good luck!

Sadaf in Austin, Texas


I really enjoy your toolkit! It is extremely useful. I have two questions: what are the "units" for the joint positions, and what are the maximum and minimum values for the joint positions? Thank you for your time.


Is there an opportunity to download the toolkit?


Hi Chris,

I want to use the Kinect for a project that I am planning to start.

I don't have a Kinect sensor yet and have never used an Xbox, so I am looking to buy a sensor. From what I have seen, there are two types: one with a PSU and one without.

To use this toolkit and connect to the Kinect sensor, do you need the PSU or not?

Thanks,

Greg


Does anyone know about the rights to use this toolkit for developing products?


Really useful toolkit. How is it possible to get the joint coordinates?


I want to deploy Kinesthesia to a myRIO but I cannot. I created a myRIO project in LabVIEW, but when I put the Kinesthesia VIs into the block diagram the deploy button is broken. What should I do?


Hi,

I am using the Kinesthesia toolkit to measure angles between joints and vectors. It worked fine on a computer, but when I load these VIs onto the myRIO the deploy button is broken. Why doesn't it run on the myRIO?

My project requires skeletal tracking and depth imaging, and the code should run on a myRIO or any other hardware provided by National Instruments. So please help.

I followed all the steps given here https://decibel.ni.com/content/docs/DOC-34889#comment-36733 and the depth imaging and video worked, but it did not have a VI library for skeletal tracking.

If I import the Kinesthesia library (those are .vi files) into the myRIO using FileZilla, following the same steps as above, will those VIs work on the myRIO?

Can I import .vi files into the myRIO, and will they work?

If not, is it possible to convert a .vi file into a .so? If yes, how can it be done?


Why doesn't the Kinesthesia VI run on the myRIO? Please help.


The toolkit is awesome. I modified the smoothing parameters VI so that the smoothing parameters can be adjusted. How can I send it back to you so that you can include it in your package?


I'll be using the skeleton method and this system for my final year project, but I cannot find any example of another method, such as centre of mass, that uses LabVIEW. Any ideas on that?

I need to compare several methods and analyse which is the best for human tracking. So far I have only managed to do some analysis of the skeleton method using this example, and I am now looking for other methods as well.

Thanks in advance


Thanks for sharing. I was able to get the Kinect up and running quickly.


Good project, greetings from Ecuador!!