University: Tsinghua University, Beijing, P.R. China

Team Members (with year of graduation):

Taoyuanmin Zhu (2015);

Qinyi Fu (2015);

Haotian Cui (2015);

Yiyao Sheng (2014);

Faculty Advisers: Shuangfu Suo

Email Address: ztymyws@gmail.com

Submission Language: English

Title: A Webcam-based Point-of-gaze Tracking System for Hand-free Board Gaming

Description:

In this project, we developed a simple and economical method for eye-controlled board gaming that is entirely hands-free and "eyes-only". By locating the player's eyes relative to the gaming platform (i.e. the game board) with a webcam, the system tracks the player's point of gaze and physically moves the pieces on the board in accordance with the movement of the user's gaze.

Products

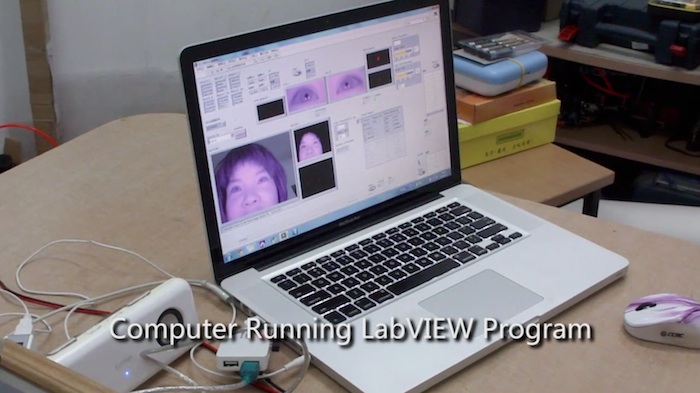

LabVIEW 2011

LabVIEW NI Vision Development Module 2011

LabVIEW NI Vision Acquisition 2011

Other Hardware:

Logitech C270 USB webcam

STC89C51 microcontroller

Stepper motor controller

OSRAM infrared LED

We strongly recommend watching our demonstration video for a better understanding of our work.

- http://www.youtube.com/watch?v=FQ-CUcy_1TY (For mainland China【Youku】: Click here!)

Concept video of our project. Please note that not all of the functions shown in this concept video have been implemented.

- http://www.youtube.com/watch?v=tfX_2p-JUSo (For mainland China【Youku】: Click here!)

I. Introduction

The user interface (UI) has changed tremendously over the past half century. From the non-interactive batch interface that emerged in the middle of the 20th century, to the command-line interface with its heavy reliance on the keyboard, to the graphical user interface (GUI), which together with the invention of the mouse finally allowed users to interact with electronic devices through images rather than text commands, early types of user interface paved the way for methods of human-machine interaction that are simpler, more efficient and more user-friendly. More recently, the touch user interface, a type of GUI that accepts the touch of fingers or a stylus, has been increasingly used in mobile devices and many other kinds of machines. Other emerging types of user interface, such as the gesture interface and the kinetic user interface, have shown early success in freeing users from external instruments and in making human-machine interaction more effective and natural, and they have a promising future.

An even more exciting concept of human-machine interaction, however, is eye tracking. With such interface technologies, users can control a system merely by looking, and the computer reacts to their eye position or eye movement without explicit commands. The idea is exciting in the sense that no equipment needs to be in physical contact with the user any longer, and that the user's hands are, for the first time, free from the clicking, pressing, touching and waving traditionally required in human-machine interaction.

The primary goal of this project is to achieve board games controlled entirely by the user's eye movement. More specifically, by tracking the player's point of gaze, the computer responds by physically moving the material objects in the board game (e.g. the pieces). As a result, board games become what we call hands-free, eyes-only gaming.

II. Systematic framework

The project consists of two parts:

1) Calculating the point of gaze from the images captured by the webcam

We used a fixed webcam to detect the coordinates of the eyes. To obtain depth information, we added two fixed infrared LEDs. By detecting the glint of the LEDs on the cornea, the 3D coordinates of the pupil were obtained. We also built a model for solving the point of gaze on the board, which is discussed in the next section.

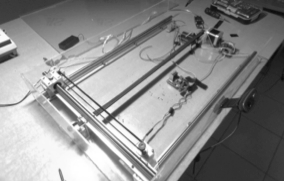

2) Moving the pieces with a physical platform

We installed a two-axis moving platform underneath the board. To move the pieces, magnets were mounted on both the platform and the pieces. In addition to dragging the pieces with magnets, we also used a magnetic levitation system for a better appearance.

III. Modeling

To minimize cost, we used a single webcam with two additional LEDs to determine the point of gaze. The simplified model is as follows:

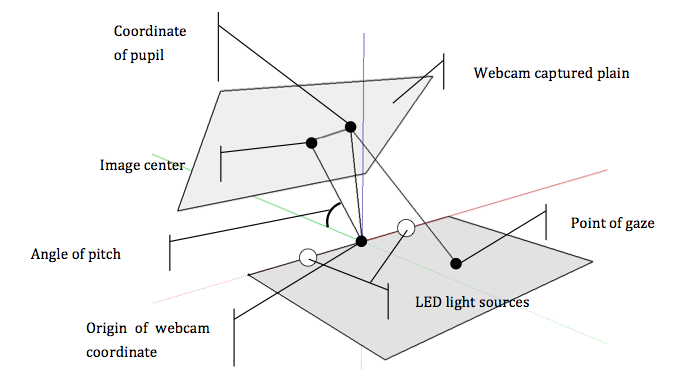

figure 3.1

For the detailed modeling process, please refer to the documents attached to this post.

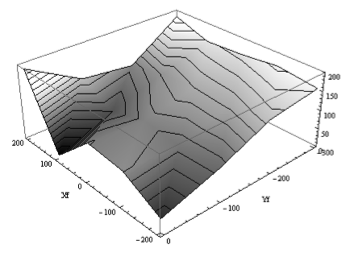

To determine the parameters in these expressions, we recorded sets of measured variables while the user looked at known points. Six parameters were then fitted by regression. The residuals for the different gaze points are shown in Figure 3.2.

Figure 3.2 Residuals when looking at different points

As the figure shows, the deviation from the real data can reach 150 mm, which is too large for point-of-gaze recognition. After trying different regression approaches, we obtained better parameters that limit the maximum deviation to 50 mm.

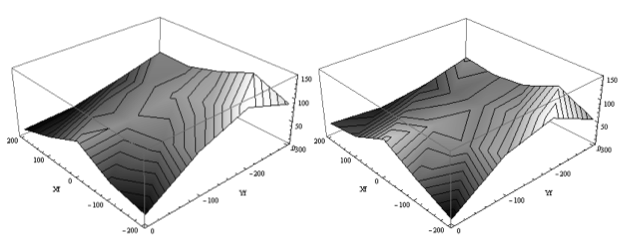

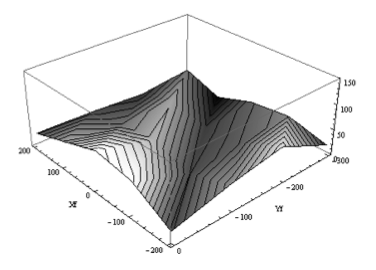

Figure 3.3 Residuals of first attempt

Figure 3.4 Residuals of second attempt

Figure 3.4 Residuals of final attempt

Although the deviation is still noticeably large (36.9 mm), the result is sufficiently accurate for applications such as board games.

The model was built on the assumptions that the eyeballs are ideal spheres and that the LED light sources are perfectly aligned with the webcam. These assumptions may also introduce errors into the model.
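The parameter fitting described in this section can be illustrated with a minimal sketch. The actual gaze model and its six parameters are given in the attached documents and are not reproduced here. As a stand-in, the code assumes a simple affine map from a pupil-to-glint offset (u, v) in pixels to board coordinates (x, y) in millimetres, which also happens to have six parameters. All function names and the sample data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_3x3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    n = 3
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("calibration points are degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_axis(features: Sequence[Point], targets: Sequence[float]) -> List[float]:
    """Least-squares fit of t = p0 + p1*u + p2*v via the normal equations."""
    rows = [(1.0, u, v) for u, v in features]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    return solve_3x3(ata, atb)


def fit_gaze_map(features: Sequence[Point], board_points: Sequence[Point]):
    """Fit the assumed affine map: three parameters per board axis, six in total."""
    px = fit_axis(features, [p[0] for p in board_points])
    py = fit_axis(features, [p[1] for p in board_points])
    return px, py


def predict(params, feature: Point) -> Point:
    """Map one (u, v) feature to an estimated (x, y) point on the board."""
    px, py = params
    u, v = feature
    return (px[0] + px[1] * u + px[2] * v, py[0] + py[1] * u + py[2] * v)


def max_residual(params, features: Sequence[Point], board_points: Sequence[Point]) -> float:
    """Largest distance (in board units) between predicted and known gaze points."""
    worst = 0.0
    for f, (x, y) in zip(features, board_points):
        ex, ey = predict(params, f)
        worst = max(worst, math.hypot(ex - x, ey - y))
    return worst


if __name__ == "__main__":
    # Made-up calibration data: features in pixels, known board targets in mm.
    feats = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0), (5.0, 3.0)]
    targets = [(100.0, 50.0), (298.0, 72.0), (52.0, 199.0), (251.0, 221.0), (186.0, 104.0)]
    params = fit_gaze_map(feats, targets)
    print("maximum residual (mm):", max_residual(params, feats, targets))
```

Comparing `max_residual` across candidate models is one way to reproduce the kind of comparison made in the residual figures: a model is kept only if its worst-case deviation is small enough for the size of a board square.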

IV. Eye-tracking algorithm

Eye tracking was achieved by the following methods:

1) Images with a resolution of 1280×960 were grabbed through the USB port at 15 fps. The webcam was placed close to the participant's face for better resolution.

figure 4.1 step1

2) The color of the skin is quite different from that of the background (partly due to the IR light emitted by the LEDs). Thresholds were set on both hue and lightness to distinguish the face from the background.

figure 4.2 step2

3) After isolating the face, shape matching was applied to find the coordinates of the pupils. In earlier versions of our project, the pupils were detected with a simple threshold on darkness. We found that, in some cases, it was hard to distinguish the pupils from the nostrils. Shape matching avoids this problem, so that only the round pupils are detected.

figure 4.3 step3

4) The last step is to collect the coordinates of each pupil and of the glint of the IR LEDs. These three points can be easily tracked by their lightness. Finally, we obtained 3 coordinates (6 variables) for the further calculation of the point of gaze.

figure 4.4 step4

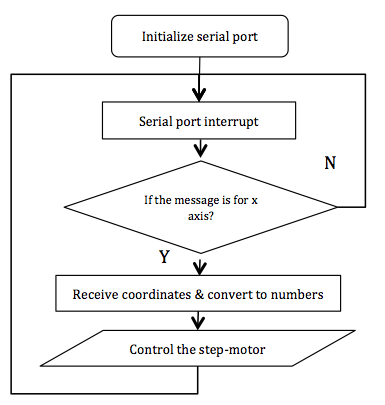
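Steps 2 and 4 above can be sketched in a few lines. The project itself was built with the NI Vision modules in LabVIEW, so the code is only an illustration in plain Python: an image is assumed to be a list of rows of 8-bit RGB pixels, and the threshold values and function names are hypothetical, not the ones used in the project.

```python
import colorsys
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # 8-bit (R, G, B)
Image = List[List[Pixel]]      # list of rows


def skin_mask(
    image: Image,
    hue_range: Tuple[float, float] = (0.0, 0.14),     # assumed skin hue band
    light_range: Tuple[float, float] = (0.25, 0.85),  # assumed lightness band
) -> List[List[bool]]:
    """Step 2: mark pixels whose hue AND lightness both fall inside the bands."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, l, _s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
            in_hue = hue_range[0] <= h <= hue_range[1]
            in_light = light_range[0] <= l <= light_range[1]
            mask_row.append(in_hue and in_light)
        mask.append(mask_row)
    return mask


def bright_centroid(image: Image, min_lightness: float = 0.9) -> Optional[Tuple[float, float]]:
    """Step 4: centroid (x, y) of very bright pixels, e.g. an LED glint.

    Returns None when no pixel reaches the lightness threshold.
    """
    sum_x = sum_y = 0.0
    count = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            _h, l, _s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
            if l >= min_lightness:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)
```

In practice the glint search would be restricted to a small window around each detected pupil, so that other bright regions in the frame do not shift the centroid.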

V. Implementation of platform for moving pieces

The platform hidden under the board is capable of planar motion with two degrees of freedom (DOF). This two-axis platform is driven by two stepper motors controlled by an MCS-51 single-chip microcontroller (SCM), which is connected to the computer via a serial port. The SCM program flowchart is as follows.

Figure 5.1 SCM program flowchart (for x axis)

Figure 5.2 picture of the moving platform
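On the computer side, a move of the platform has to be turned into step counts for the two motors before it is sent over the serial port. The post does not describe the serial protocol, so the sketch below is purely illustrative: the steps-per-millimetre constant and the ASCII frame layout are hypothetical and would have to match the firmware on the SCM.

```python
from typing import Tuple

# Hypothetical value: depends on the motor step angle and the drive mechanism.
STEPS_PER_MM = 40.0


def axis_steps(current_mm: float, target_mm: float,
               steps_per_mm: float = STEPS_PER_MM) -> Tuple[str, int]:
    """Return (direction sign, number of steps) for one axis."""
    delta = target_mm - current_mm
    direction = "+" if delta >= 0 else "-"
    return direction, round(abs(delta) * steps_per_mm)


def move_command(current: Tuple[float, float], target: Tuple[float, float]) -> bytes:
    """Build a hypothetical ASCII frame such as b'X+00400Y-00100\\n'."""
    dir_x, n_x = axis_steps(current[0], target[0])
    dir_y, n_y = axis_steps(current[1], target[1])
    return f"X{dir_x}{n_x:05d}Y{dir_y}{n_y:05d}\n".encode("ascii")
```

The resulting bytes would then be written to the serial port, for example with a serial library such as pyserial. A fixed-width frame is chosen here only because it is easy to parse on a small microcontroller.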

VI. Game process

Huarong Dao (also called “klocki”) is a sliding block puzzle. It is based on a fictitious story in the historical novel Romance of the Three Kingdoms about the warlord Cao Cao retreating through Huarong Trail after his defeat at the Battle of Red Cliffs in the winter of 208/209 CE during the late Eastern Han Dynasty. He encountered an enemy general, Guan Yu, who was guarding the path and waiting for him. Guan Yu spared Cao Cao and allowed the latter to pass through Huarong Trail on account of the generous treatment he received from Cao in the past. The largest block in the game is named "Cao Cao". The player is not allowed to remove blocks, and may only slide blocks horizontally and vertically.

figure 6.1 The wooden traditional game Huarong Dao

Step 1. The player steps into the webcam's field of view and the point of gaze is captured.

Step 2. After the player gazes at a block for more than 2 seconds, the block is captured by the magnet mounted on the moving platform.

Step 3. After the player gazes at a vacant position for more than 2 seconds, the block is dragged to that position by the magnet mounted on the moving platform.

Step 4. Repeat steps 2 and 3 until "Cao Cao" is moved to the exit.
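The 2-second dwell used in steps 2 and 3 can be sketched as a small state machine. This is a minimal illustration, assuming the point of gaze has already been mapped to a board cell (or to `None` when the gaze is off the board). The class and method names are hypothetical.

```python
from typing import Hashable, Optional


class DwellSelector:
    """Report a cell once the gaze has rested on it for longer than the dwell time."""

    def __init__(self, dwell_seconds: float = 2.0) -> None:
        self.dwell_seconds = dwell_seconds
        self._cell: Optional[Hashable] = None
        self._since = 0.0
        self._fired = False

    def update(self, cell: Optional[Hashable], now: float) -> Optional[Hashable]:
        """Feed the cell under the gaze at time `now` (seconds).

        Returns the cell exactly once when the dwell completes, otherwise None.
        """
        if cell != self._cell:
            # Gaze moved to a different cell (or off the board): restart the timer.
            self._cell = cell
            self._since = now
            self._fired = False
            return None
        if cell is not None and not self._fired and now - self._since > self.dwell_seconds:
            self._fired = True  # do not re-trigger while the gaze stays put
            return cell
        return None
```

A game loop would call `update` once per frame: the first cell returned selects the block to pick up, and the next one, if it is a vacant position, gives the destination for the drag.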

VII. Features

i. Originality

The originality of the present project is embodied in:

ii. Advantages

Compared to existing technologies and products, the present project enjoys several advantages:

VIII. Future outlook

The idea and technology presented in this project can be applied in a variety of fields beyond the entertainment purpose for which the device was originally designed.

IX. Pictures

figure 9.1 moving platform and pieces for Chinese board game "Huarong Dao"

figure 9.2 USB webcam and infrared LEDs

figure 9.3 computer running LabVIEW Program

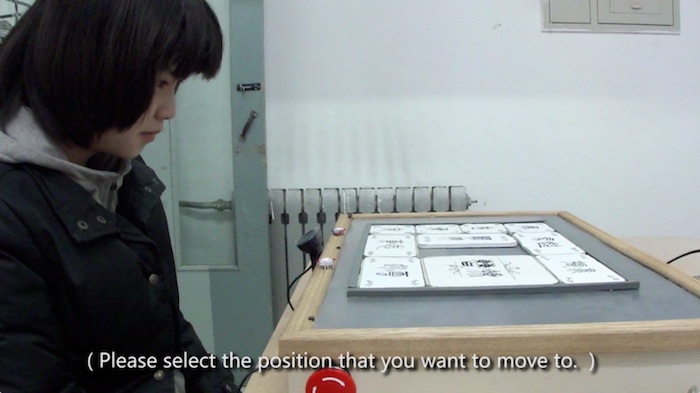

figure 9.4 system running with voice prompt 1

figure 9.5 system running with voice prompt 2

figure 9.6 real-time point-of-gaze tracking (notice that the piece is levitated)
