Automation of Sorting & Identification of Arthropod Samples Using LabView Controlled Robots

Contact Information:

Country: Denmark

Year Submitted: 2019

University: Aarhus University, School of Engineering

List of Team Members (with year of graduation):

- Thomas Lyhne, 2019

- Kristoffer Løwenstein, 2019

Faculty Advisers: Claus Melvad, Faculty Adviser

Main Contact Email Address: thomaslyhne@hotmail.com

Project Information:

Title:

Automation of Sorting & Identification of Arthropod Samples Using LabView Controlled Robots

Description:

Using NI products, a LabView-controlled Universal Robots UR5 is integrated into a setup that automates species classification of insects, helping researchers monitor and investigate insect populations.

Products:

| Category | Products |
| --- | --- |
| Software | LabView, NI Vision, NI MAX, NI IMAQ, NI IMAQdx, NI VISA, UR programming interface |
| Hardware | Universal Robot UR5*, Camera: Blackfly 5.0 MP Color GigE PoE, Lens: Fujinon HF8XA-1, Tecan Cavro XE1000 syringe pump, 1x servo electrical gripper, 1x pneumatic gripper, Species recognition system (SRS, already developed), Laptop, Compressor, Custom-made 3D-printed parts |

*Universal Robots had sold more than 25,000 cobots by September 2018 (Forbes), holding a market share of 60%.

The Challenge:

Climate researchers are working hard to gain a deeper understanding of the climate change we are facing. A powerful tool in this research is the study of temporal and spatial variation in the composition of invertebrate communities, insects in particular. Their short life cycle allows researchers to examine changes in insect populations in relation to climate data. Are the insects appearing earlier in the season? Are they arriving in smaller numbers? Are they arriving so out of time that they won’t pollinate flowers or feed wildlife? And lastly: is this due to a changing climate?

A recent study, “Worldwide decline of the entomofauna: A review of its drivers”[1], whose findings were reported by the news outlet The Guardian[2], showed a massive decline in insect populations compared with vertebrate species.

Tracking their appearance allows climate change to be observed on a much shorter time scale than traditional methods such as ice drilling and soil testing. However, processing insect samples collected in the field is very time-consuming and requires multiple specialists. Today, samples containing several hundred insects are brought into the lab, and each sample is manually sorted into insect orders for later identification by specialists.

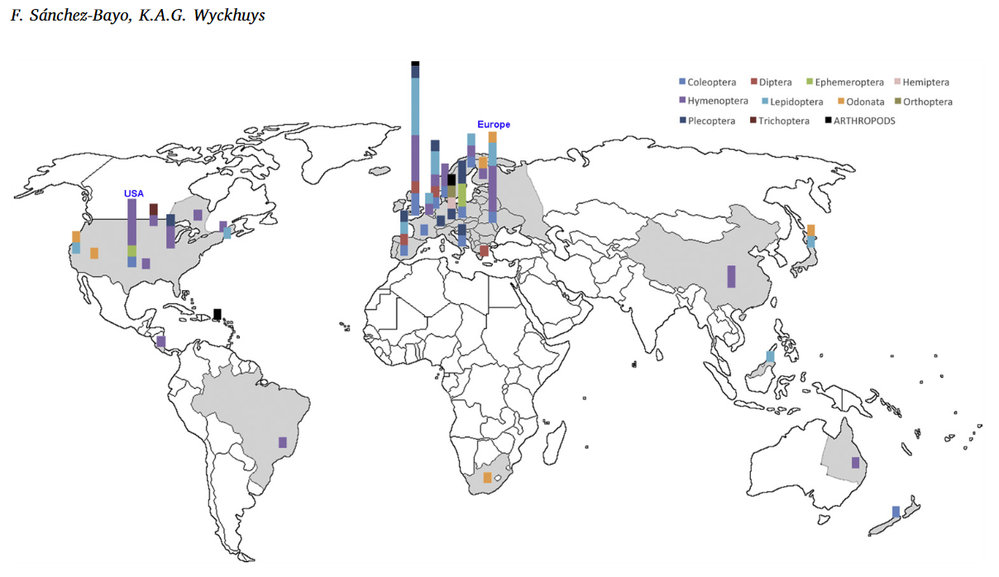

Unfortunately, this method is often neglected because the process is so inconvenient, and important knowledge is lost. Collected samples are simply not investigated due to resource and time constraints. This neglect is illustrated below by the geographical locations of the reports used for the survey: data on insect populations comes almost exclusively from the western world. The problem is that the consequences could be far worse in other areas, which have no means of forecasting. To increase research in this area, the identification process needs to be made more accessible.

New developments in computer vision and machine learning have enabled automatic identification of such insect specimens directly from images. The missing element in an automatic identification process is a method to separate, deliver and dispose of the insect specimens for individual identification.

The challenge is to develop a procedure that moves each individual insect from a container holding up to several hundred insects to an imaging device that identifies the species of the specimen. The specimen must then be removed from the imaging device and placed in storage. Developing such a process would be a big accomplishment, and the impact on climate research could be significant. Furthermore, it could readily be applied in a diverse range of fields, e.g. food production research, tracking mosquito movements in the fight against transmissible diseases, and measuring the effect of improvements in living conditions.

An automated system for species classification and counting could be used to monitor the effect of nature conservation initiatives. When studying environmental improvements and the impact of pesticide developments on insect populations, automated insect studies give biologists a much more detailed picture of whether conditions are getting better or worse. Recently, more awareness has been brought to this area of study, and the need for proper testing equipment is therefore growing.

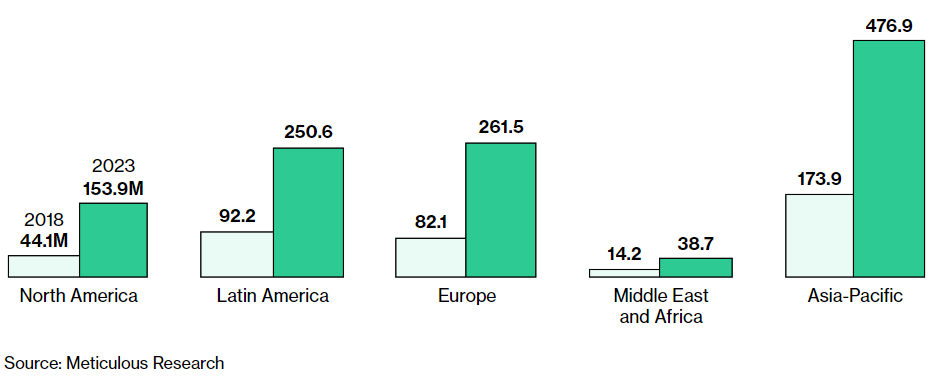

The study of insects is also becoming a game changer in global food production, as more sustainable food sources are needed for the growing world population. The global edible insect market is growing rapidly and is expected to exceed $1 billion by 2023.

The Solution:

The work done in this project is the result of a collaboration between Aarhus School of Engineering and the Aarhus University Bioscience faculty.

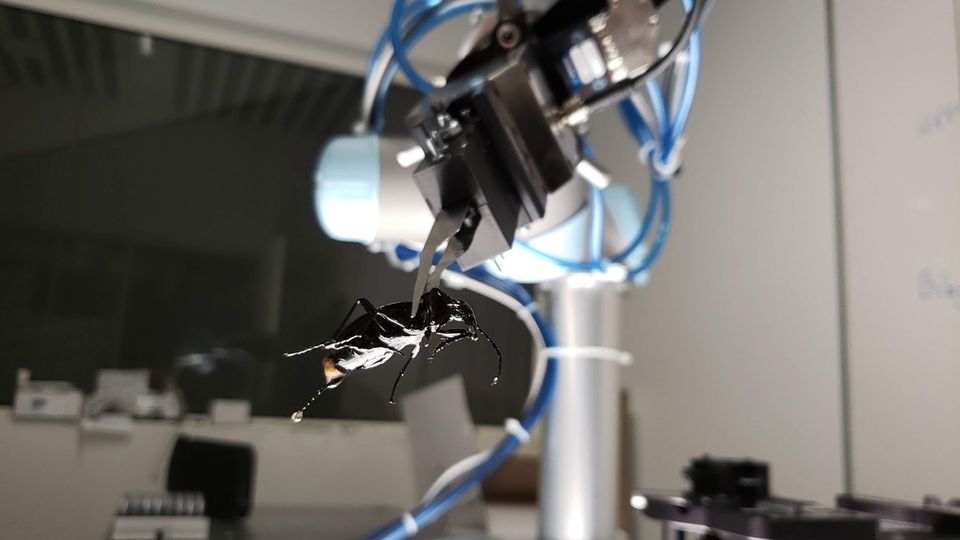

A robotic setup built around a LabView-controlled Universal Robots UR5 (a 3-link, 6-DOF robot) was developed to execute the procedures needed to handle the insects. The advantages of NI products made it possible to develop a relatively complex system in a limited period of time. The setup is built around LabView: a LabView program handles the results from NI Vision, the data processing, and the communication between the components involved in the process.

The process

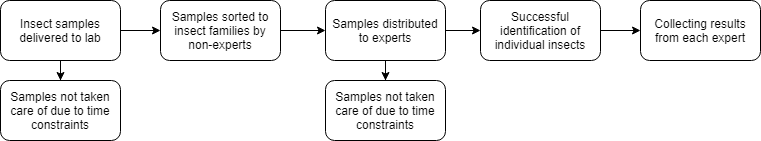

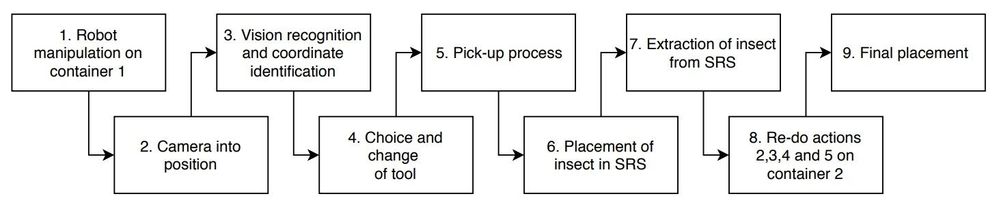

To understand the robotic setup, the process carried out during sorting of the insects is explained. The flow of the process is shown in Figure 9.

Description of the process:

See video

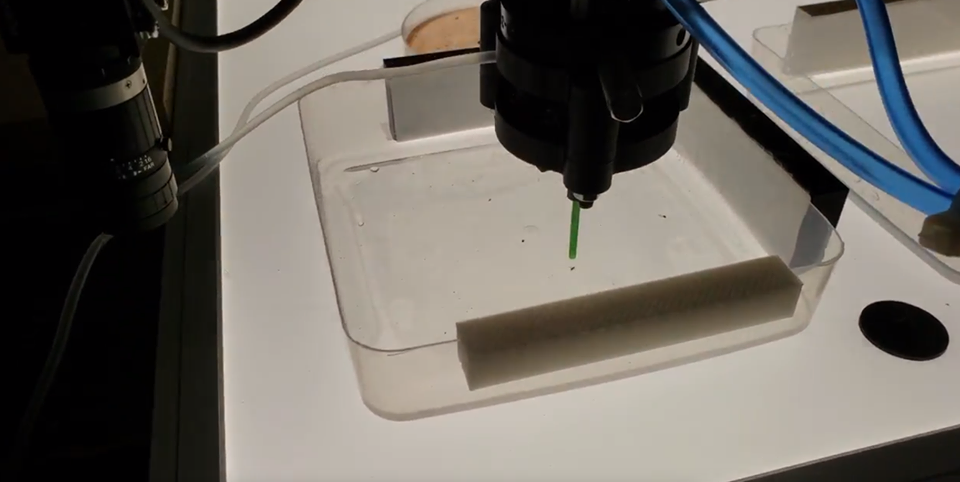

1. The robot moves the container in a circular motion to spread the insects. The container contains liquid to help the insects spread.

2. The camera is moved into position above the container for image acquisition.

3. An image is acquired using the IMAQ toolbox, and a SubVI developed in NI Vision identifies, locates and determines the size of the insects in the sample. The data from the vision SubVI is then post-processed in LabView.

4. An insect is selected for manipulation. LabView calculates the location and selects the tool.

5. The insect is picked up: LabView moves the UR5 to the right location, ensures the right orientation of the tool and activates the tool at the right moment so that the insect is picked up securely.

6. The insect is placed in the Species Recognition System (SRS).

7. The insect is extracted from the SRS into cuvette 2 by handling the cuvette in which the insect is placed.*

8. Steps 2, 3, 4 and 5 are repeated for container 2.*

9. The insect is placed in a test tube. LabView decides which test tube the insect is placed in.

*Due to the constraints posed by the SRS, this method of handling the insects was chosen for the first prototype so that no major modification of the SRS was needed. For a future version of the system, a rebuild of the SRS is planned so that these steps can be optimized.

The process is repeated until there are no insects left in the first container.
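The selection and tool-choice step can be sketched in text form as follows. This is only an illustration in Python, not the LabView code used in the project: the tool indices, the length threshold and the "largest insect first" rule are all assumptions made for the example. The only part taken from the description is that suction is used for the smallest insects.

```python
from typing import List, NamedTuple, Optional, Tuple


class Detection(NamedTuple):
    """One insect found by the vision step (field names are hypothetical)."""
    x_mm: float
    y_mm: float
    length_mm: float
    angle_deg: float


# Hypothetical tool indices and size threshold, chosen only for illustration.
SUCTION = 1
GRIPPER = 2
SUCTION_MAX_LENGTH_MM = 5.0


def choose_tool(length_mm: float) -> int:
    """Use suction for the smallest insects and a gripper for the rest."""
    return SUCTION if length_mm <= SUCTION_MAX_LENGTH_MM else GRIPPER


def select_next(detections: List[Detection]) -> Optional[Tuple[Detection, int]]:
    """Pick the next insect to handle; returns None when the container is empty.

    Taking the largest insect first is an assumption of this sketch.
    """
    if not detections:
        return None
    target = max(detections, key=lambda d: d.length_mm)
    return target, choose_tool(target.length_mm)
```

Calling `select_next` after every new image and stopping when it returns `None` mirrors the rule that the cycle repeats until the first container is empty.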

Programming

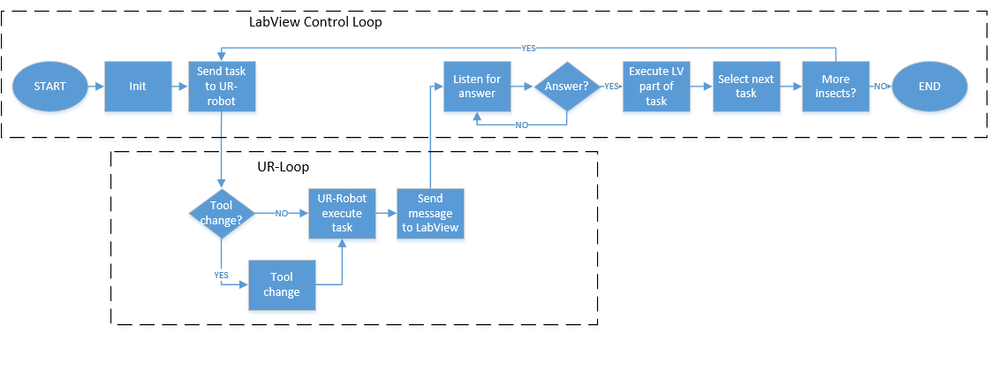

The basic idea behind the program controlling the robot is that a task, including the tool selection and the position the robot must move to, is sent to the UR robot. While the UR robot performs the task, the loop listens for a response indicating whether the task has been completed. Once the task is completed successfully, the tasks that LabView must perform at that stage are executed. The next task is then assigned and sent to the robot.

In general, LabView and NI products made it easy to integrate external components such as the UR5 robot, develop the vision algorithm, and write functional code. As a result, more time was available for more sophisticated code and for developing the mechanical setup of the system.
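The send, wait and follow-up pattern described above can be sketched in Python. The real program is a LabView block diagram, so this is only a rough equivalent. The `Task` fields, the `send` and `poll_done` callbacks and the `FakeRobot` stand-in are all hypothetical names introduced for the sketch.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Task:
    """One step of the cycle: a robot move plus an optional PC-side follow-up."""
    name: str
    tool: int                                        # hypothetical tool index
    pose: Tuple[float, ...]                          # (x, y, z, roll, pitch, yaw)
    pc_action: Optional[Callable[[], None]] = None   # e.g. acquire an image


def run_tasks(tasks: List[Task],
              send: Callable[[Task], None],
              poll_done: Callable[[], bool]) -> List[str]:
    """Send each task, wait for completion, then run the PC-side step."""
    completed = []
    for task in tasks:
        send(task)
        while not poll_done():
            pass  # a real program would block on the socket instead of spinning
        if task.pc_action is not None:
            task.pc_action()
        completed.append(task.name)
    return completed


class FakeRobot:
    """Stand-in for the UR5: reports completion on the third poll after a task."""

    def __init__(self) -> None:
        self.received: List[str] = []
        self._polls_left = 0

    def send(self, task: Task) -> None:
        self.received.append(task.name)
        self._polls_left = 2

    def poll_done(self) -> bool:
        if self._polls_left > 0:
            self._polls_left -= 1
            return False
        return True
```

Keeping the PC-side step inside the task means the loop itself stays the same for every stage of the cycle, which is one plausible reading of the description above.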

Computer Vision

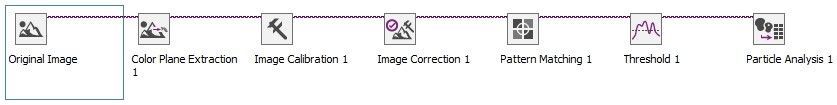

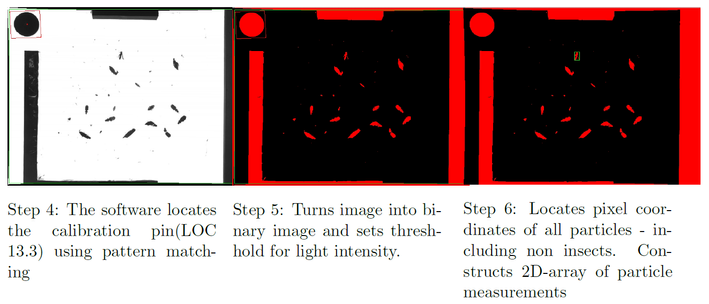

NI Vision Assistant is used to accurately locate the insects and measure their length. Six image processing steps are required.

The steps are explained below:

This is all configured in a SubVI, which delivers an array containing the location of each insect, its length in pixels and its orientation. The location coordinate is then calculated relative to the circular calibration pin placed in the upper left-hand corner. The calibration pin has a fixed location.
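The calculation relative to the calibration pin can be illustrated with a short Python sketch. It assumes that the image axes are parallel to the robot's x and y axes and that a single millimetre-per-pixel scale applies across the image. Neither assumption is stated in the project description, and the function names and example values are hypothetical.

```python
def pixel_to_robot(insect_px, pin_px, pin_robot_mm, mm_per_px):
    """Convert an image position to robot x/y using the calibration pin as reference.

    insect_px    : (u, v) pixel position of the insect
    pin_px       : (u, v) pixel position of the calibration pin in the same image
    pin_robot_mm : (x, y) known, fixed position of the pin in the robot frame
    mm_per_px    : image scale (assumed uniform; no lens distortion handled)
    """
    du = insect_px[0] - pin_px[0]
    dv = insect_px[1] - pin_px[1]
    return (pin_robot_mm[0] + du * mm_per_px,
            pin_robot_mm[1] + dv * mm_per_px)


def length_in_mm(length_px, mm_per_px):
    """Convert the measured insect length from pixels to millimetres."""
    return length_px * mm_per_px
```

For example, with the pin detected at pixel (100, 100), a known pin position of (250.0, -50.0) mm and a scale of 0.1 mm per pixel, an insect at pixel (300, 200) maps to roughly (270, -40) mm. Because the pin is re-detected in each image, small shifts in camera position between acquisitions cancel out.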

Communication with UR5-Robot

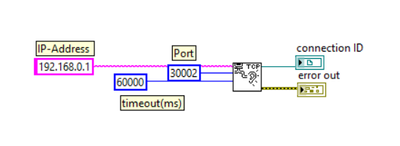

To communicate with the UR5 robot, a TCP connection is established when the program is initialized. The connection ID is passed on to the SubVIs so that they can communicate with the robot.

One major advantage of LabView is how easy it is to communicate with and control the UR5 robot. With only a few of LabView's built-in code blocks, the connection is established and the robot can be controlled.

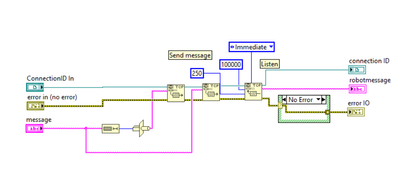

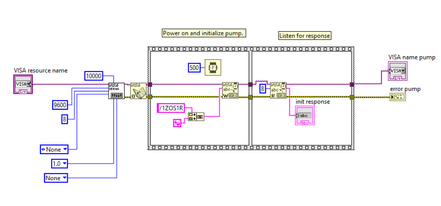

Two SubVIs are used to communicate with the UR5. One sends a message to the UR5 and listens for an immediate response, while the second only listens for a response.
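For readers more used to text-based code, the connection and the two communication helpers could look roughly like this in Python. It is a sketch, not the LabView implementation: it assumes the PC opens the connection as a client, that messages are short ASCII strings, and that one `recv` call returns a complete reply. The function names are hypothetical.

```python
import socket


def connect(host: str, port: int, timeout_s: float = 5.0) -> socket.socket:
    """Open the TCP connection once at start-up.

    The returned socket plays the role of the connection ID that is
    passed on to the helpers below.
    """
    return socket.create_connection((host, port), timeout=timeout_s)


def send_and_receive(conn: socket.socket, message: str, bufsize: int = 1024) -> str:
    """Send one message and return the robot's immediate reply."""
    conn.sendall(message.encode("ascii"))
    return conn.recv(bufsize).decode("ascii").strip()


def wait_for_response(conn: socket.socket, bufsize: int = 1024) -> str:
    """Only listen: block until the robot sends something, e.g. a completion message."""
    return conn.recv(bufsize).decode("ascii").strip()
```

Splitting the helpers this way lets the main loop first confirm that a task was received and later wait separately for the message that the task is finished.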

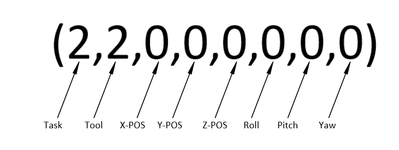

For the robot to complete a task successfully, the tool and position must be communicated to the UR5. This is done simply by sending a string containing the necessary information. The task and tool are communicated as integers, while the position consists of the x-, y- and z-coordinates and the roll, pitch and yaw angles.

With this simple string command, the UR5 can perform all the desired tasks. Other parts of the LabView program supply the required information; for example, NI Vision determines the position and orientation of the insect.
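A command string of this kind could be assembled as in the Python sketch below. The exact format used in the project is not given, so the parenthesised, comma-separated layout, the field order and the four-decimal precision are assumptions made for illustration.

```python
def build_command(task: int, tool: int, pose) -> str:
    """Build one command line: task and tool as integers, then the six pose values.

    pose is (x, y, z, roll, pitch, yaw); units are whatever the robot program expects.
    """
    if len(pose) != 6:
        raise ValueError("pose must be (x, y, z, roll, pitch, yaw)")
    fields = [str(int(task)), str(int(tool))] + [f"{value:.4f}" for value in pose]
    return "(" + ",".join(fields) + ")\n"


def parse_command(message: str):
    """Inverse of build_command, e.g. for logging or for testing the robot side."""
    parts = message.strip().strip("()").split(",")
    task, tool = int(parts[0]), int(parts[1])
    pose = tuple(float(p) for p in parts[2:])
    return task, tool, pose
```

For example, `build_command(2, 1, (0.25, -0.1, 0.05, 0.0, 3.1416, 0.0))` yields `"(2,1,0.2500,-0.1000,0.0500,0.0000,3.1416,0.0000)\n"`. Keeping everything in one flat string means the robot-side program only needs to read a list of numbers.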

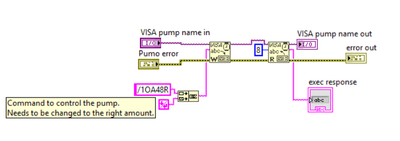

Communication with syringe pump

A suction mechanism, driven by a syringe pump, is used to handle the smallest insects. The syringe pump is also used for various practical tasks such as refilling the cuvette.

Once the serial connection is established, different SubVIs are used for different tasks. Below, an example is shown in which the syringe pump is activated to pick up an insect. Again, this is easily implemented using just a few code blocks.

The Conclusion:

The project has the potential to make a huge impact on the future of climate research and on the aim of creating a better planet. By realizing the project, experts will be able to gain deeper knowledge, and the areas under investigation can be expanded. Research can be conducted on a much larger scale, hopefully leading to game-changing discoveries on how to save our planet.

Thanks to the ease of use of NI products, more time was available for learning and for discovering how to improve the system in further development. Insights were gained into handling fragile insects, including separation of insects, computer vision for detecting and classifying insects, and custom tool design.

The project was well received by scientists working in the field, who characterized it as groundbreaking. They showed great excitement and willingness to implement the system in their research.

Other outreach

The project was invited to attend a symposium on image-based techniques in species detection and identification. The symposium was meant to increase communication between faculties and in this case combine capabilities at the Aarhus School of Engineering and the needs presented by the Bioscience faculty.

Level of completion

The project has achieved proof of concept and has also helped outline potential issues in handling small and fragile objects such as insects.

The project is still running at Aarhus University, and the lessons learned from the first project phase are being put to use. The next steps involve improving the handling tools and optimizing the process of emptying the species recognition system to further improve cycle time. The Bioscience faculty hopes to use the robotic setup to help train a machine learning algorithm by having it deliver the insects to the vision system.

Time to Build:

The system was developed and built from September to December 2018 by two students. LabView and NI software made it possible to go from the drawing board to a first prototype in a short amount of time.

Full expert interview:

Toke Thomas Høye is a senior scientist at the Bioscience faculty at Aarhus University. Here he is interviewed about his studies on the categorization and sorting of insects.

References:

[1] Sánchez-Bayo, F. & Wyckhuys, K.A.G. (2019) Worldwide decline of the entomofauna: A review of its drivers. Biological Conservation, 2019. Viewed on 02/18/2019 at https://www.sciencedirect.com/science/article/pii/S0006320718313636?via%3Dihub

[2] Carrington, D. (2019) Plummeting insect numbers 'threaten collapse of nature'. The Guardian, 2019. Viewed on 02/18/2019 at https://www.theguardian.com/environment/2019/feb/10/plummeting-insect-numbers-threaten-collapse-of-n...

I just love this project - huge impact. I particularly noticed the lack of insects while driving my car in Denmark in spring/summer. There are far fewer insects on the windshield now. When I realized this, I was shocked at how this will impact the ecosystem of Earth and the food crisis.

Using this project as a basis, researchers can now apply their time to do something about the problems, not just document how it gets worse and worse.

Thank you for your hard work.