Hello Lovelies,

Hope you are all doing OK.

I'm a bit broken, so I'm bored. On the upside, this gives me the energy to write another article...

Sam Taggart and Joerg Hampel have both talked about this subject; I recommend you go check their material too.

https://www.sasworkshops.com/debug-driven-development/

I'd also like to thank Brian Powell for excellent analogies and Fab for all her input.

Preparation

Continuing the kitchen analogy, I wonder if there's a connection to "mise en place": getting everything organized and in place before you start to cook.

Error Handling

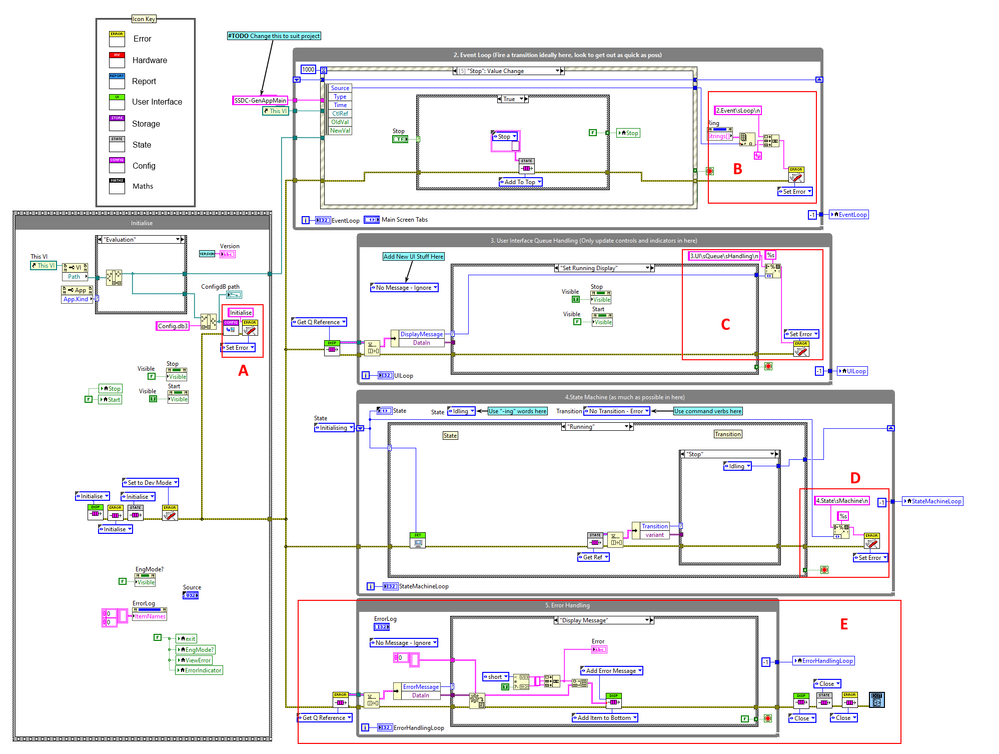

SSDC Error reporting as an important template feature

We can see in the areas marked red (A, B, C, D) that we're logging errors at the end of the event, state and UI queue loops. We do this in multi-loop programs because it gives better feedback about where an error occurs. We also have a loop dedicated to reporting errors (E).

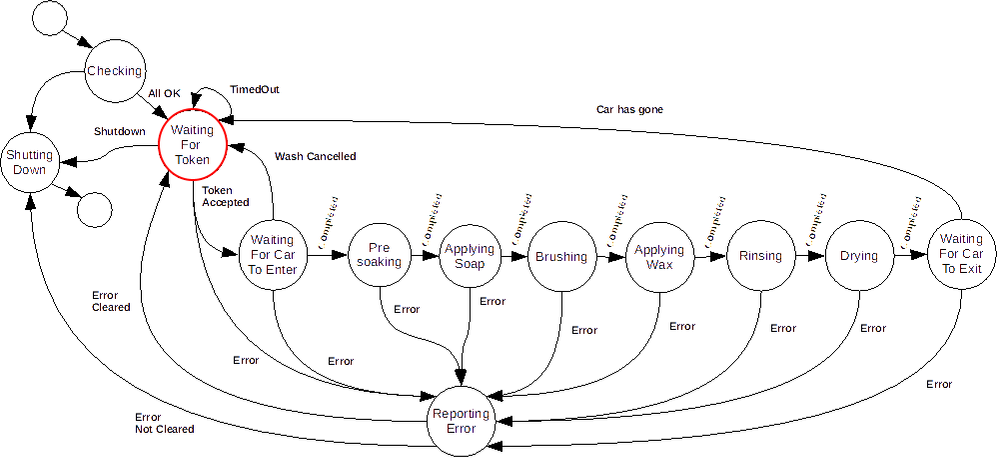

This covers reporting and logging errors. We handle errors in the state machine with dedicated "error" states, because the error state is an important state to model in your systems.
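LabVIEW is graphical, so here is a rough text-language analogy in Python of the pattern described above: each loop tags its errors with its own name, one dedicated loop does all the reporting, and the state machine has an explicit "error" state. Every name in it (`report_error`, `error_reporter`, `state_machine`, the step functions) is made up for illustration; it is a sketch of the idea, not the SSDC template.

```python
import queue
import threading


def report_error(error_queue, loop_name, err):
    """Called at the end of a loop iteration when something went wrong."""
    error_queue.put((loop_name, repr(err)))


def error_reporter(error_queue, sink):
    """Dedicated reporting loop: the only place errors are reported."""
    while True:
        item = error_queue.get()
        if item is None:  # sentinel value: shut the reporter down
            break
        loop_name, text = item
        sink.append(f"[{loop_name}] {text}")


def state_machine(steps, error_queue):
    """Tiny state machine with an explicit 'error' state."""
    state = "idle"
    visited = [state]
    for step in steps:
        try:
            state = step(state)
        except Exception as err:
            # Tag the error with the loop it came from, then model it as a state.
            report_error(error_queue, "state loop", err)
            state = "error"
        visited.append(state)
        if state == "error":
            break
    return visited


def start(state):
    return "running"


def measure(state):
    raise ValueError("DMM timeout")  # hypothetical fault for the demo


def run_demo():
    error_queue = queue.Queue()
    reported = []
    reporter = threading.Thread(target=error_reporter, args=(error_queue, reported))
    reporter.start()
    visited = state_machine([start, measure, start], error_queue)
    error_queue.put(None)
    reporter.join()
    return visited, reported
```

Calling `run_demo()` walks idle, running, error and leaves a single report tagged with "state loop", which is the point of logging per loop: the report tells you where to look.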

HSE Logger

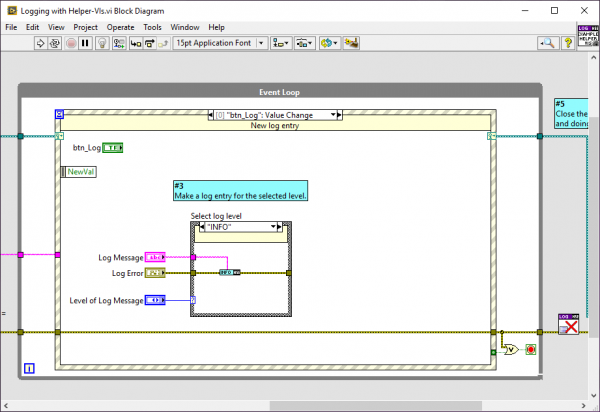

HampelSoft (Hampel Software Engineering) have tooling that they use in the development process purely to help with debugging. Credit to Manu for doing the hard work!

https://dokuwiki.hampel-soft.com/code/open-source/hse-logger

Joerg says that having HSE Logger in place first thing, before even starting the implementation, makes the actual development process much simpler.

HSE Logger Helper VI

Understanding

Do your own work

Absolutely the best advice I can give is to always start your debugging session by understanding the issue. Do the hard work and don't believe what you are told. Resist the temptation to change anything until you fully understand the issue at hand. In my many years of fault-finding, the single biggest mistake I have seen (and made) is changing more than one thing at a time. Slow and methodical will win the day.

Organization

Organized / Everything in the right place

The key to lightening the load on the debugger's brain is organization and discipline.

Continuing with the kitchen analogy, a well-organized kitchen has things where you would expect them to be, e.g., based on how you use them, how often you use them, or what you use together. People who are good at debugging often have a sense of where to look faster than others, based on their mental model of the system.

One of the common issues I've found with systems that have got a bit out of control is that stuff is being done everywhere or anywhere. So stuff could be happening in the event structure, or in a queued message, or some dynamic process. Having some rules about what happens where makes that code much easier to debug.

Cohesion

I've talked about cohesion a lot in this blog, and the advantage of highly cohesive designs comes back to everything being in its expected place. Got a database issue? Check your database component/module. Something iffy about a reading? Check your DMM module. If you are looking in lots of places to fix an issue, it's a code smell for poor cohesion in your design.

It's actually a pretty nice exercise... Got a problem with XYZ? I should look in the XYZ module. Make sure XYZ is a tangible thing and you're onto a design winner.

Visualisation

One essential element of debugging is being able to visualise how your code is working.

Iteration Indicators

This is a very simple thing to do and I've found it really useful.

Using iteration indicators to help visualise the system

Do this for every loop in the block diagram and group the indicators together, and you get a nice indication of loop times and of when a loop is complete.
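For anyone who thinks in text languages, a minimal Python sketch of the same idea follows: one counter per loop, grouped in a list so they can be displayed together. `IterationIndicator`, `worker` and the loop names are hypothetical, and `time.sleep` just stands in for the loop's real work.

```python
import threading
import time


class IterationIndicator:
    """Counts loop iterations and remembers the most recent loop period."""

    def __init__(self, name):
        self.name = name
        self.count = 0
        self.last_period_s = None
        self.done = False
        self._last_tick = None

    def tick(self):
        """Call once per loop iteration."""
        now = time.perf_counter()
        if self._last_tick is not None:
            self.last_period_s = now - self._last_tick
        self._last_tick = now
        self.count += 1

    def __str__(self):
        if self.last_period_s is None:
            period = "n/a"
        else:
            period = f"{self.last_period_s * 1000:.1f} ms"
        status = "done" if self.done else "running"
        return f"{self.name}: i={self.count} period={period} ({status})"


def worker(indicator, iterations, delay_s):
    for _ in range(iterations):
        time.sleep(delay_s)  # stand-in for the loop's real work
        indicator.tick()
    indicator.done = True  # shows that the loop has completed


def run_demo():
    indicators = [IterationIndicator("event loop"), IterationIndicator("state loop")]
    threads = [
        threading.Thread(target=worker, args=(indicators[0], 3, 0.01)),
        threading.Thread(target=worker, args=(indicators[1], 5, 0.005)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return indicators
```

Printing each indicator in the returned list gives the grouped view: a counter that stops incrementing, or a period that suddenly grows, points straight at the loop to investigate.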

Logging

It's really useful to log events, states, messages and communications in your system, especially in distributed systems!

Simple State Logging
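As a hedged illustration of what simple state logging can look like outside LabVIEW, here is a sketch using Python's standard `logging` module. The logger name `station.state`, the `ListHandler` class and the transition table are assumptions made for the example; in real use you would normally add a timestamp such as `%(asctime)s` to the format.

```python
import logging


class ListHandler(logging.Handler):
    """Keeps formatted records in memory so a debug view can display them."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(self.format(record))


def make_state_logger(name="station.state"):
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False  # keep the demo output out of the root logger
    handler = ListHandler()
    handler.setFormatter(logging.Formatter("%(name)s | %(message)s"))
    logger.handlers = [handler]
    return logger, handler


def run_states(logger, transitions, messages, start="Init"):
    """Log every message received and every state transition taken."""
    state = start
    for message in messages:
        next_state = transitions.get((state, message))
        if next_state is None:
            logger.warning("state=%s ignored message=%s", state, message)
            continue
        logger.debug("state=%s message=%s -> %s", state, message, next_state)
        state = next_state
    return state
```

A short usage example:

```python
logger, handler = make_state_logger()
transitions = {("Init", "start"): "Measure", ("Measure", "done"): "Idle"}
run_states(logger, transitions, ["start", "bogus", "done"])
print("\n".join(handler.lines))
```

Logging the ignored message as well as the transitions is a deliberate choice: unexpected messages are often the first clue when a distributed system misbehaves.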

We often have an ugly debug tab hidden away; this screen can be made visible from configuration.

Configurable Debug screen.
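The mechanism is just a configuration flag that decides whether the debug tab is shown. A small Python sketch, assuming an INI-style file with a hypothetical `[debug]` section and `show_debug_tab` key (neither comes from our actual configuration files):

```python
import configparser


def debug_tab_visible(ini_text):
    """Return True only if the configuration explicitly enables the debug tab."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # fallback=False keeps the tab hidden when the section or key is missing.
    return parser.getboolean("debug", "show_debug_tab", fallback=False)


def visible_tabs(ini_text):
    tabs = ["Main", "Results"]  # hypothetical tabs that are always shown
    if debug_tab_visible(ini_text):
        tabs.append("Debug")
    return tabs
```

Defaulting to hidden means a missing or damaged configuration file never exposes the ugly tab to the end user.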

Block Diagram Documentation

Check out my article here for more info - Commenting I like

My experience with all documentation is that it needs to be easy to get to, easy to update and locatable from the block diagram (if not on the block diagram). If all of those boxes are not ticked, documentation will eventually fall into disrepair.

Ease of Navigation

I've talked a lot about this in my various presentations on Immediacy.

Speed is essential here IMO: how fast can you build a picture of what is happening? The faster you can do that, the more you can fit in your brain. I view the brain as a leaky bucket. Certainly my brain is...

Depth of Abstraction

Every extra layer of abstraction is a barrier to ease of debugging. Abstraction is a very necessary design technique, but I would think about refactoring if you are finding it difficult to find the running VI.

Scope Hardware Abstraction Layer

Use terminals to help navigation

This is another simple thing that can really help your quality of life. Simply place the terminal of the control where it is doing most of its work. Often my technique for getting to an area of the block diagram is to right-click on the control on the front panel and select Find Terminal.

Using terminals to help navigation

This is not an exhaustive list of the things that we do purely to help with debugging; if I remember more I'll amend the article. I'm sure the comments will have other things that you lot use.

The next article will show our debugging process in all its glory.

All The Best

Steve

Opportunity to learn from experienced developers / entrepreneurs (Fab, Joerg and Brian amongst them):

DSH Pragmatic Software Development Workshop
