USB-6211 problem with AI and AO synchronization

Solved! 09-24-2019 01:23 PM

Hi all,

I'm working on a raster scanner project using an NI USB-6211. The 6211's AO controls a galvo XY scanner and AI reads the voltage from a detector. I would like to synchronize my 6211's AI and AO (2 channels) clocks, and that part works fine: AI doesn't retrieve any data until AO starts, and once it starts, I think they are sync'ed in sample rate. The problem is that when AO starts, the AI read starts late by about 1/3 of a cycle. Is there any way to add a delay to the AI task (I can throw away the first cycle and be sync'ed from the next cycle)?

Or is there a better way to implement this? I get a position feedback signal from the galvo scanner, but it is a very low-level and very noisy analog signal. I'm not sure if I can use it as the AI trigger signal.

Thank you in advance.

09-24-2019 03:14 PM

You're driving your AI task with the AO sample clock and you're starting AO last. That's a really good start for sync. What's left may be the finer, subtle details.

You say AI is late by 1/3 of a "cycle". Do you mean 1 cycle of the sample interval? Or some other cycle?

How are you measuring and determining this lateness?

Is your idea of perfect sync that the AO sample gets generated at the exact same instant that the AI sample gets A/D converted? Why do you want this? There's some physical reality that will *usually* prevent this from being a particularly good goal to aim for.

1. There will be a slew rate governing the speed at which the AO signal can move from its prior voltage to the new one.

2. There will be some physical response time for the galvo system to respond to the new AO signal value.

3. There will be some reaction time for the photodetector (PD) signal to give a stable response to the new galvo position.

4. There may be some small time for the AI task to produce an A/D conversion after receiving the sample clock edge.

I have often advocated an approach that runs a counter pulse train with 90-95% duty cycle at the desired sample rate. Both AO and AI use the pulse train as their sample clock and the counter is started last. AO uses the *leading* edge of the pulse train, AI uses the *trailing* edge. This intentionally delays AI by 90-95% of a sample interval, but I interpret it as "AI sample #N represents the reaction to AO sample #N b/c I've given the system time to respond."

To *my* thinking, this is usually a better way to approach sync'ing a stimulus-response system. I'm sure there are exceptions where it's more desirable to line up sample instants more perfectly, but I haven't personally run into any.
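To make the timing of the shared pulse-train clock concrete, here is a minimal sketch of the arithmetic only (no DAQ driver calls). The function name `clock_edges`, the 125 kHz rate, and the 90% duty cycle are illustrative assumptions, and the pulse train is assumed to start on a leading edge with no initial delay.

```python
def clock_edges(rate_hz, duty_cycle, n_samples):
    """Return (ao_times, ai_times) in seconds for a shared pulse-train clock.

    AO updates on each leading edge. AI converts on each trailing edge,
    which arrives duty_cycle * period after the leading edge.
    Assumption: the train starts on a leading edge at t = 0.
    """
    if not 0.0 < duty_cycle < 1.0:
        raise ValueError("duty_cycle must be strictly between 0 and 1")
    period = 1.0 / rate_hz
    ao_times = [k * period for k in range(n_samples)]
    ai_times = [t + duty_cycle * period for t in ao_times]
    return ao_times, ai_times


# Hypothetical numbers: 125 kHz clock (8 us interval), 90% duty cycle
ao_t, ai_t = clock_edges(rate_hz=125_000, duty_cycle=0.9, n_samples=4)
delay_us = (ai_t[0] - ao_t[0]) * 1e6
print(f"AI sample N is taken {delay_us:.1f} us after AO sample N is generated")
```

With these assumed numbers, each AI conversion lands 7.2 µs after the matching AO update, so AI sample N still falls inside AO interval N.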

-Kevin P

09-24-2019 04:38 PM

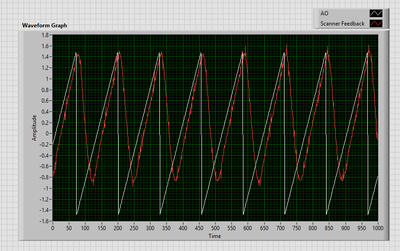

Thank you for the response, Kevin. The AI measurement takes the same number of samples per cycle as the AO generates. Looking at the intensity measurement, it appears that AO generates a sawtooth signal but the AI measurement starts midway through the sawtooth (please see the attached image; note that the measurement runs from right to left). So I think that if I could programmatically adjust the AI start position to be sync'ed with the AO-generated sawtooth, I should not see this mirrored image. AI being a little behind AO is perfectly fine, as long as the offset is consistent. The AO is driving the scanner (each point is about 8 µs apart), so AI can be off by 8 µs or less.

09-24-2019 07:21 PM

Your AO and AI tasks should be sync'ed to within a sample. I don't have any good idea how to interpret the picture you posted. There's something notable going on in the vertical slice where your arrow points, but I don't know how that photo-style image relates to the data acq LabVIEW code.

I don't think the problem is the tasks or how they're sync'ed.

What is your method for examining the AO and AI to test for sync? I'd recommend looping back ao0 over to ai0 to test sync. Then you can expect ai0 to show the same repeating sawtooth pattern you generate on ao0. (It may be 1 sample off due to the issues I raised in my prior post)
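Once the loopback data is in hand, the offset can be checked numerically instead of by eye. The sketch below is one assumed way to do that, not something from the posted code: `sawtooth` and `estimate_lag` are hypothetical helper names, and the "acquired" data is simulated as the AO waveform delayed by one sample in place of a real ai0 read.

```python
def sawtooth(n_per_cycle, n_cycles, v_min=-1.0, v_max=1.0):
    """Build a repeating rising ramp, one value per sample."""
    step = (v_max - v_min) / (n_per_cycle - 1)
    one_cycle = [v_min + i * step for i in range(n_per_cycle)]
    return one_cycle * n_cycles


def estimate_lag(ao, ai, max_lag):
    """Return the lag in samples (0..max_lag) that best aligns ai to ao.

    For each candidate lag, compare ao[i] with ai[i + lag] and keep the
    lag with the smallest mean squared error.
    """
    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag + 1):
        n = len(ao) - lag
        err = sum((ai[i + lag] - ao[i]) ** 2 for i in range(n)) / n
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag


ao = sawtooth(n_per_cycle=50, n_cycles=4)
# Simulated loopback (assumption): each AI sample sees the previous AO value
ai = [ao[0]] + ao[:-1]
print(estimate_lag(ao, ai, max_lag=10))  # -> 1
```

A result of 0 or 1 on real loopback data would be consistent with the tasks being sync'ed to within a sample. Keep `max_lag` below one sawtooth period, because the waveform repeats and longer lags become ambiguous.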

-Kevin P.

09-25-2019 04:58 AM

Hi DKFF,

Your image is perfectly normal. It's related to the acceleration of your galvo mirror. If you slow down your sampling rate you will get a better image (though that is not a satisfying fix). One solution I found is to pad your image and remove the distorted part.
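A rough sketch of the pad-and-crop idea, under stated assumptions: the helper names `pad_line` and `crop_lines` are hypothetical, the padding is placed at the start of each line as a lead-in that extends the ramp at the same step, and the scanner is assumed to have enough range for those extra positions.

```python
def pad_line(pixels_x, n_pad):
    """Prepend n_pad lead-in positions before the first pixel.

    The lead-in continues the ramp backwards at the pixel step, so the
    mirror is already moving steadily when the first real pixel arrives.
    """
    step = pixels_x[1] - pixels_x[0]
    lead_in = [pixels_x[0] - step * k for k in range(n_pad, 0, -1)]
    return lead_in + list(pixels_x)


def crop_lines(samples, n_pixels, n_pad):
    """Split a flat AI record into lines and drop the lead-in of each."""
    line_len = n_pixels + n_pad
    usable = len(samples) - len(samples) % line_len
    return [samples[i + n_pad:i + line_len] for i in range(0, usable, line_len)]


# Hypothetical 5-pixel line with 2 padding samples
ao_line = pad_line([0.0, 0.5, 1.0, 1.5, 2.0], n_pad=2)
print(ao_line)  # [-1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]

ai_record = list(range(14))  # stand-in for two acquired lines
print(crop_lines(ai_record, n_pixels=5, n_pad=2))
```

The cost is acquisition time: at a fixed sample rate each line takes `(n_pixels + n_pad) / n_pixels` times as long, which is 1.4 times in this toy case.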

09-25-2019 10:49 AM

Hi Resobis,

Yes, I have tried adding extra points to the generated AO pattern and removing them from the AI data, but this makes the acquisition slower (if I want to keep the same number of samples). I was hoping either to delay the AI to sync with the scanner's motion or somehow to have AI triggered off the scanner's (analog) feedback. The image below shows the generated AO and the scanner feedback (the feedback is multiplied by 2).

09-25-2019 11:17 AM

I'm pretty sure you're still trying to solve the wrong problem.

The code you posted should keep AO and AI sync'ed. You don't need any further triggering. Try the loopback test I suggested to see for yourself.

What I *think* I see in the data you posted is a *system* with a slower response bandwidth than your sample rate. Start from the peak of an AO sawtooth signal. You suddenly command a motion to the opposite extreme position and then gradually ramp your way back to the peak again. Your system *begins* to move toward the other extreme pretty quickly (the peaks are not offset by much in time), but the observed downward slope defines its max velocity. By the time it can get ~3/4 of the way there, the AO command is now telling it to reverse direction and head back toward the peak again. It takes a little time to turn around and then starts "catching up" to the command signal, almost fully catching up by the time the sawtooth peaks and drops again.

The downward slope of your response signal probably tells you the max response speed of your system. The AO stimulus you feed it needs to take that into account.
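The behavior described above can be reproduced with a toy model. This is only a sketch: it treats the scanner as a pure rate limiter (a real galvo also has inertia and servo dynamics), and the names `slew_limited` and `min_flyback_samples` and all the numbers are made up for illustration.

```python
import math


def slew_limited(command, max_step):
    """Follow `command`, moving at most max_step per sample interval."""
    out = [command[0]]
    for target in command[1:]:
        delta = target - out[-1]
        delta = max(-max_step, min(max_step, delta))  # clamp the move
        out.append(out[-1] + delta)
    return out


def min_flyback_samples(v_span, max_step):
    """Samples needed to cross the full span at the maximum speed."""
    return math.ceil(v_span / max_step)


# Hypothetical sawtooth: ramps 0..10 in unit steps, then jumps back to 0
command = list(range(11)) * 3
response = slew_limited(command, max_step=3)

print(response[10:15])               # [10, 7, 4, 2, 3]
print(min(response[11:]))            # 2: it never reaches 0 after the first drop
print(min_flyback_samples(10, 3))    # 4
```

In this model the response turns around before reaching the bottom of the sawtooth, much like the feedback trace described above. Giving the command at least `min_flyback_samples` samples for the return stroke is one way to respect the limit.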

-Kevin P

09-25-2019 11:53 AM

Hi Kevin,

Yes, I tested the AO/AI synchronization last night and it seems AI waits until AO starts, so the AO/AI synchronization is working. I think, as you and Resobis mentioned, there is not much I can do besides lowering the sampling rate and/or padding samples to compensate for the scanner's return/response time.

Thank you all for your help. I really appreciate it.