
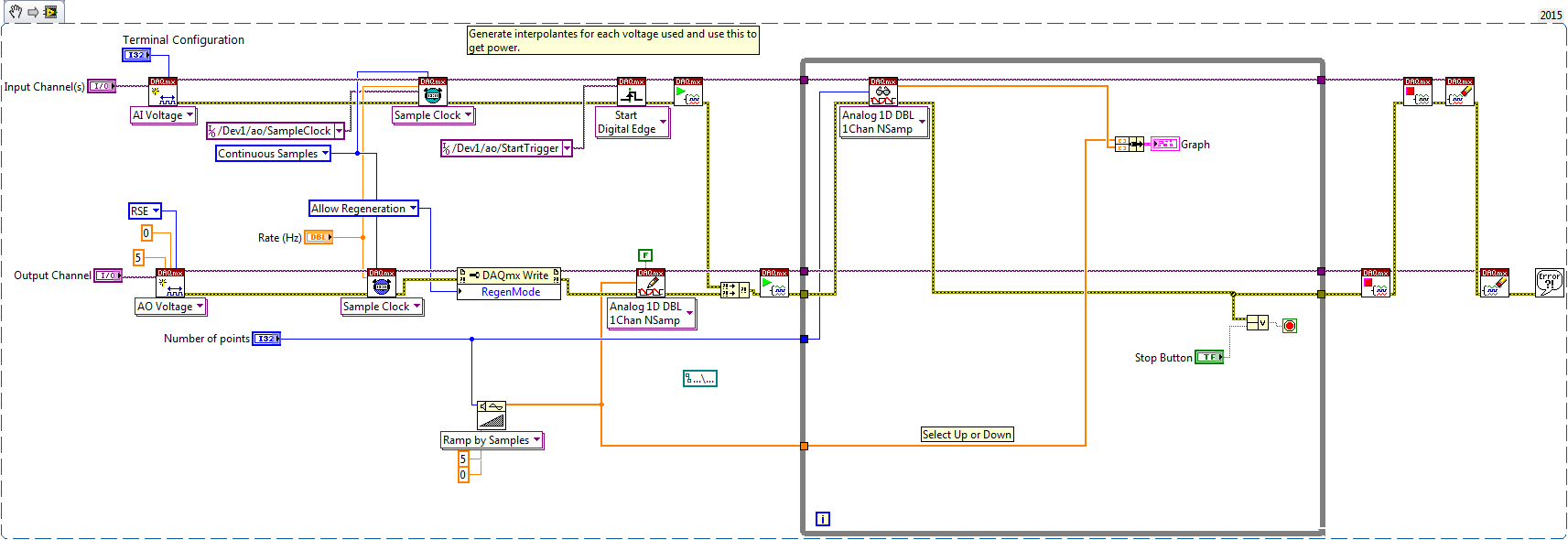

Synchronized Analog Input and Output (USB-6211)

01-26-2017 05:52 AM


Hello,

I am trying to read and write analog signals synchronously.

To test, I have wired the analog output back to the analog input on my USB-6211.

However, the signal read back on the input is offset by one sample from what I write to the output.

What am I doing wrong?

I suppose I could hack a fix, but perhaps something more fundamental is going wrong?

Thanks

Nick

01-26-2017 08:24 AM


All the configuration looks essentially OK for the sync you're after. FWIW, I wouldn't bother with the trigger configuration, though; I generally only use triggering for external signals.

I can think of two possible reasons for your off-by-one issue:

1. A fairly subtle timing race condition in the sharing of the AO Sample Clock. As the timebase is divided down to the requested sample rate, the resulting clock signal is routed to both the AO and AI subsystems. The edge may propagate through AI just a tiny bit faster (on the order of nanoseconds), so your first AI sample occurs *before* your first AO conversion. It then picks up whatever the prior voltage was from previous usage.

A way to check for this is to configure AI timing to use the *falling* edge of the AO sample clock. Right now it's using the default, the rising edge.

2. Offhand, I don't know the timing relationship of the internal AO Start Trigger signal to the sample clock. There's a chance the trigger config is a contributor here, though I tend to doubt it. If anything, I would expect a trigger-timing issue to make AI trail AO rather than lead it.
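Whichever edge you use, it helps to measure the offset directly instead of eyeballing plots. Below is a minimal sketch in plain Python, assuming you already have the AO buffer you wrote and the AI samples you read back as lists of floats over the same clock ticks. `estimate_lag` is a hypothetical helper, not part of DAQmx: it tries each candidate shift and keeps the one with the smallest mean squared mismatch.

```python
def estimate_lag(written, read, max_lag=5):
    """Return the shift (in samples) that best aligns read-back AI data with AO data.

    Hypothetical helper: a result of 1 means read[k] matches written[k - 1],
    i.e. each AI sample sees the AO value from the previous clock tick.
    """
    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag + 1):
        # Pair written[k] with read[k + lag]; zip stops at the shorter sequence.
        pairs = list(zip(written, read[lag:]))
        if not pairs:
            break
        err = sum((w - r) ** 2 for w, r in pairs) / len(pairs)
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag


# Read-back where AI trails AO by one tick (AO started from 0 V).
written = [1.0, 2.0, 3.0, 4.0, 5.0]
read = [0.0, 1.0, 2.0, 3.0, 4.0]
print(estimate_lag(written, read))  # 1
```

Running it once with AI on the rising edge and once on the falling edge shows whether the edge choice changes the offset. Use a stimulus that changes on every tick (a ramp, for example), since a constant output matches equally well at any shift.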

-Kevin P

01-26-2017 08:36 AM


Thanks for your reply.

Yes, it must be a race condition. In this instance, I think I can just fetch one sample before the loop to sync it up.
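A minimal sketch of that workaround in plain Python, assuming the lag is exactly one sample and stays constant from run to run. `aligned_pairs` is a hypothetical helper: it drops the first `lag` AI samples, which were taken before AO had moved, and pairs the rest with the AO values that produced them.

```python
def aligned_pairs(written, read, lag=1):
    """Pair each AO value with the AI sample that actually measured it.

    Assumes read[k + lag] measures written[k]. The last `lag` AO values have
    no matching AI sample unless AI reads `lag` extra samples.
    """
    return list(zip(written, read[lag:]))


written = [1.0, 2.0, 3.0]
read = [0.0, 1.0, 2.0, 3.0]  # one extra AI sample read to cover the lag
print(aligned_pairs(written, read))  # [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
```

Assuming AI is clocked from the AO sample clock as in this thread, a finite AI task can only get that extra sample if AO produces one more tick, for example by writing its last value twice.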

02-08-2017 09:26 AM


Hello niNickC,

It's not a race condition per se, but rather a result of how AI and AO work. You've correctly synced the two tasks by meeting the two conditions for synchronization: the tasks start at the same time and their clocks don't drift. However, you need to take into account how AO is updated. When a sample clock edge is detected, the AO channel begins its change to the value you've specified. AI, on the other hand, measures right on the edge of a sample clock tick.

So let's break it down. For simplicity's sake, let's say your AO is set to 0 V before you run your code. You've correctly established a shared sample clock and trigger. The tasks are started and wait for their start trigger. Once that arrives, the tasks wait for the first edge of the sample clock.

Tick!

AO begins its transition to the first value you've set, but AI measures immediately! So AI reports 0 V while AO is still taking the required slew/settling time to reach its value. The same thing happens on every following tick, so each AI sample reports the previous AO value, which is the one-sample offset you're seeing.
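The tick-by-tick behaviour above can be reproduced with a toy model in plain Python. Everything here is an assumption for illustration: AO slews linearly toward each new value at a fixed, made-up rate starting at a clock edge (settling is ignored), and AI takes an instantaneous sample `delay` seconds after the same edge. With `delay=0` every AI sample sees the previous AO value; sampling half a period later (for example on the falling edge of a 50% duty-cycle clock) lines them up.

```python
def ao_voltage(t, period, values, slew_rate, initial=0.0):
    """Modelled AO voltage at time t (s).

    Edge k is at k * period; from that edge the output slews linearly toward
    values[k] at slew_rate (V/s) until it arrives or the next edge comes.
    """
    v = initial
    for k, target in enumerate(values):
        edge = k * period
        if t <= edge:
            # This edge has not happened yet (or is happening right now),
            # so the output has not started moving toward `target`.
            break
        elapsed = min(t, edge + period) - edge
        step = target - v
        max_move = slew_rate * elapsed
        if abs(step) <= max_move:
            v = target
        else:
            v += max_move if step > 0 else -max_move
    return v


def sample_ai(period, values, slew_rate, delay):
    """AI samples taken `delay` seconds after each AO sample-clock edge."""
    return [ao_voltage(k * period + delay, period, values, slew_rate)
            for k in range(len(values))]


period = 1e-3    # 1 kHz sample clock
slew_rate = 1e6  # hypothetical 1 V/us AO slew rate, not a USB-6211 spec
values = [1.0, 2.0, 3.0, 4.0]

print(sample_ai(period, values, slew_rate, delay=0.0))         # [0.0, 1.0, 2.0, 3.0]
print(sample_ai(period, values, slew_rate, delay=period / 2))  # [1.0, 2.0, 3.0, 4.0]
```

A very small nonzero `delay` lands mid-slew and returns an intermediate voltage, which is the "measuring on the ramp" case; at `delay=0` the model never sees the ramp at all.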

Technical Support Senior Group Manager

National Instruments

02-08-2017 09:41 AM


Thanks for your comment, Jonathan. Does the data sheet specify the two conversion times? If the AI is much faster than the AO, you might expect an error as it measures on the changing ramp, but you don't see that here.

02-08-2017 10:29 AM


Hello niNickC,

The slew rate and settling time for AO are specified. Why would you expect an error, though? The AI is digitizing a signal without any regard for what that signal is. As far as the hardware is concerned, all it knows is when to take samples and convert them.

You can avoid this issue, though, by adding some delay to your convert clock using the DelayFromSampClk.Delay property in the DAQmx Timing Property Node. My first post was somewhat of a simplification: after a sample clock edge, a separate signal known as the Convert Clock is what actually tells the ADC when to convert. When sampling multiple channels on a multiplexed device, this is what gives each channel time to settle before its sample is converted. Setting this delay to at least the worst-case AO slew time (largest voltage step divided by the slew rate) plus the AO settling time will ensure that you never see your "race condition". However, it might get you into trouble if you try to sample AI faster or use multiple channels, since the delay eats into each sample period.

Reference: M Series User Manual, AI Convert Clock Signal section
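A back-of-envelope check for choosing that delay, sketched in plain Python. All numbers are placeholders, not USB-6211 specifications; take the real AO slew rate and settling time from the device specifications. The helper names are hypothetical. The second function encodes the caveat about faster rates and multiple channels under a simplifying assumption: each sample-clock period has to fit the delay plus one convert-clock period per AI channel.

```python
def min_convert_delay(max_step_v, slew_rate_v_per_s, settling_s):
    """Smallest delay (s) from sample clock to first conversion.

    Worst-case slew time (largest voltage step / slew rate) plus settling time.
    """
    return max_step_v / slew_rate_v_per_s + settling_s


def fits_in_period(delay_s, n_channels, convert_period_s, sample_rate_hz):
    """Rough check that the delay plus one conversion per channel fits in one tick."""
    return delay_s + n_channels * convert_period_s <= 1.0 / sample_rate_hz


# Placeholder numbers for illustration only.
delay = min_convert_delay(max_step_v=20.0, slew_rate_v_per_s=1e6, settling_s=32e-6)
print(round(delay * 1e6, 3))                   # 52.0 (microseconds)
print(fits_in_period(delay, 1, 4e-6, 1_000))   # True
print(fits_in_period(delay, 1, 4e-6, 20_000))  # False
```

The same delay that is harmless at 1 kHz no longer fits at 20 kHz, which is the trade-off mentioned above.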


02-08-2017 10:55 AM - edited 02-08-2017 10:58 AM


Jonathan gave a better, more detailed explanation of what I was referring to previously. I'd suggest the simplest thing to try might be to configure AI to sample on the falling edge of the AO sample clock while AO updates on the rising edge.

I make that suggestion without any data in front of me about the sample clock pulse width or the AO slew rate. So if that method isn't entirely satisfactory, you could use what has become my go-to method for configuring a synced AO stimulus and AI response.

It's really the same idea, except that you generate your own sample clock signal with one of your board's counters. This lets you control the clock's pulse width as well as its rate. You still configure AO to update on the leading edge (usually rising) and AI to sample on the trailing edge (usually falling). Also, start the AO and AI tasks before the counter pulse task to make sure they're synced.

As a general rule, I like defining configuration parameters in terms of the AO->AI delay time and the sample rate. The delay time is meant to compensate for the system's dynamic response and the DAQ board's slew rate, which tend to be more or less constant for a given system. In the code, I use the delay time as the counter's high time and calculate the low time needed to achieve the requested sample rate. I think this gives the best of both worlds: the delay stays constant because it deals with an aspect of the system that's constant, but the sample rate can still be changed.
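That parameterisation can be sketched as a small helper in plain Python. It is hypothetical, not Kevin's actual code: the AO->AI delay becomes the counter's high time and the low time absorbs the rest of the period. It assumes AO updates on the rising edge and AI samples on the falling edge of the counter output, as described above, and it does not check the counter's minimum pulse width, which real hardware would need.

```python
def counter_pulse_times(sample_rate_hz, ao_to_ai_delay_s):
    """Return (high_time, low_time) in seconds for a counter-generated sample clock.

    High time = fixed AO->AI delay (rising edge updates AO, falling edge samples AI);
    low time = remainder of the period needed for the requested sample rate.
    """
    period = 1.0 / sample_rate_hz
    high_time = ao_to_ai_delay_s
    low_time = period - high_time
    if low_time <= 0:
        raise ValueError("AO->AI delay must be shorter than one sample period")
    return high_time, low_time


# Same 100 us delay at two different rates: only the low time changes.
for rate in (1_000, 5_000):
    high, low = counter_pulse_times(rate, 100e-6)
    print(rate, round(high * 1e6, 3), round(low * 1e6, 3))
```

Keeping the delay as the fixed input and deriving the low time means a rate change never silently shortens the settling window; a rate too fast for the delay fails loudly instead.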

-Kevin P

P.S. Jonathan was quicker to finish a reply. His method is another good way to approach this.

02-08-2017 11:22 AM


I've definitely learned something today! Thanks, both of you, for your insight. I like the counter idea, but Jonathan's is perhaps easier to implement!