
Sine-wave "noise" in the per-cycle RMS measurements (PXI-6225 & PXI-6255)

12-14-2017 10:55 AM

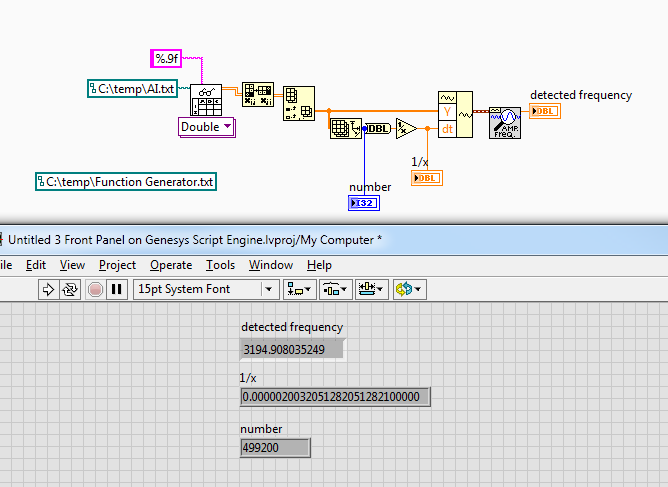

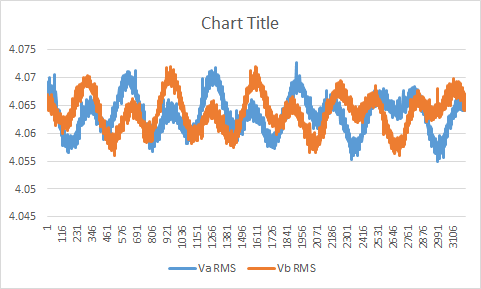

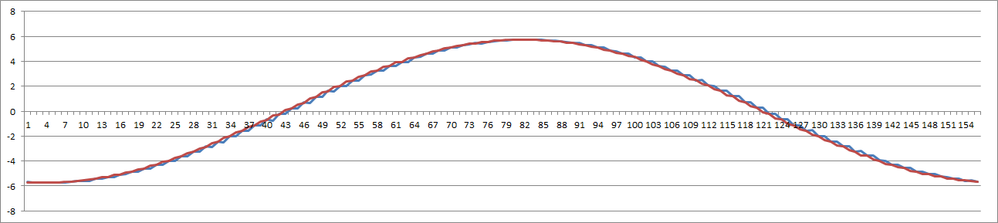

I have two 3200 Hz signals, which I acquired with both the PXI-6225 and the PXI-6255. In both cases, the RMS computed over each cycle shows a sinusoidal ripple ("noise").

Quick description of the above chart:

I have AI67 and AI70 (set up as differential, with AI75 and AI78 as the common/negative inputs), both reading a 3200 Hz signal generated by a function generator, sampled at 449200 samples/s for 1 second.

Each point on the chart represents the RMS of 1 sine cycle (156 points).
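For reference, the per-cycle RMS described above can be sketched as below. This is a minimal Python illustration, not the LabVIEW code used in this thread: `per_cycle_rms` is a hypothetical helper, and the input is an ideal, noise-free sine sampled at exactly 499200 S/s, so that each 156-sample block holds one whole cycle.

```python
import math

def per_cycle_rms(samples, samples_per_cycle):
    """RMS of each consecutive, non-overlapping block of samples_per_cycle points.

    A trailing partial block, if any, is ignored.
    """
    n_blocks = len(samples) // samples_per_cycle
    rms = []
    for b in range(n_blocks):
        block = samples[b * samples_per_cycle:(b + 1) * samples_per_cycle]
        rms.append(math.sqrt(sum(x * x for x in block) / samples_per_cycle))
    return rms

# Ideal 1 V-amplitude, 3200 Hz sine sampled at exactly 499200 S/s:
# 499200 / 3200 = 156 samples per cycle, so every block is one whole cycle.
fs = 499200.0
f_sig = 3200.0
signal = [math.sin(2 * math.pi * f_sig * k / fs) for k in range(156 * 100)]

rms = per_cycle_rms(signal, 156)
print(len(rms), min(rms), max(rms))  # 100 blocks, all ~0.70711 (= 1/sqrt(2))
```

When every block is exactly one cycle, the per-cycle RMS of an ideal sine is constant; any ripple has to come from something else, such as the block not matching a cycle.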

The setup also includes "ghosting filters" (100K and 47 µF) on AI channels 68, 76, 71, and 66.

The PXI-6255 is connected directly to the function generator through an SCB-68.

What can cause this ripple in the sinewave RMS?

When I use the frequency measurement from the Measurement palette, I get 3195 Hz with the PXI-6255 and 3190 Hz with the PXI-6225.

I monitored the Tick Count before and after the DAQmx Read, and the difference is exactly 1000 ms. The waveform data has an accurate dt and number of samples. How can 5 Hz (6255) and 10 Hz (6225) disappear if all the timing seems accurate?

Both a DMM and an oscilloscope confirm the 3200 Hz frequency of the function generator and of the FPGA-generated signals.

The FPGA originally generated 6 sine signals; I stopped all but 1 to avoid cross-talk.

The original signal source is an FPGA, for which I have more data than for the function generator.

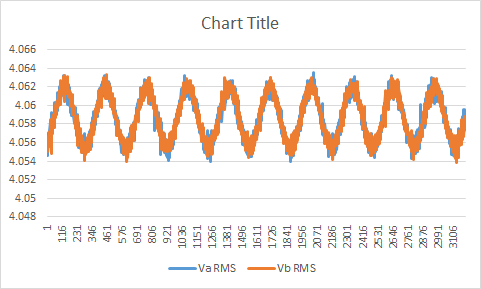

PXI-6255 (499200 samples/s per channel for 1 s, 156 samples per cycle), monitoring 2 signals at the same time:

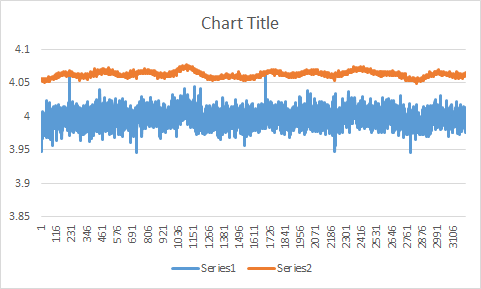

PXI-6225 (243200 samples/s for 1 s, 76 samples per cycle), monitoring each signal separately:

Setting the AI at 5V or 10V range makes no difference.

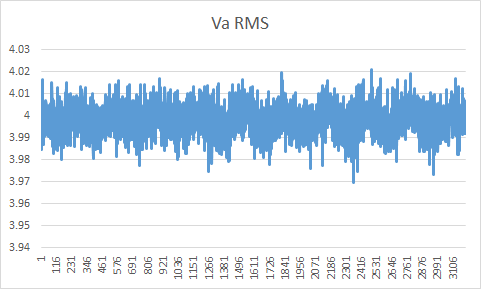

Lowering the sampling rate to 124800 samples/s only made the RMS noisier:

The cause doesn't seem to be ghosting. I grounded the second signal with the function generator (same effect with the FPGA-generated signal):

Sample of 1 cycle (FPGA generated signals with PXI-6255):

Shouldn't the "Timing Resolution" and "Timing Accuracy" be constant and not distort the measurements?

Attachments are a sample of signals from the function generator and FPGA. PXI-6255 is used for both at 499200 samples/second per channel.

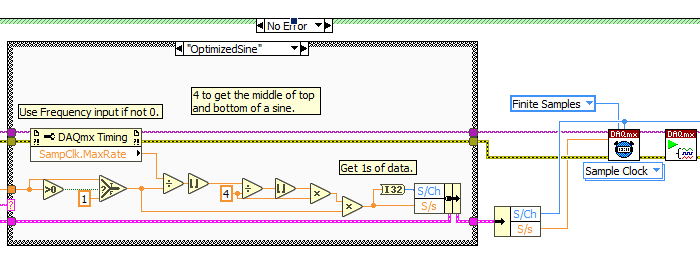

The sampling rate is automatically calculated using the maximum rate (SampClk.MaxRate):

Files are too large to be attachments.

Thanks


12-14-2017 03:50 PM


That's a very thorough post! It deserves a careful response.

Point 1 - THE BIG ONE!:

You are not actually sampling at 449200 Hz. The sample timing engine will only use integer divisors of an internal 20 MHz timebase. When I tried a similar configuration on a simulated M-series board here, I requested 449200 and then queried a DAQmx Timing property node named SampClk.Rate, which returned 454545.45. The actual rate corresponds to an integer divisor of 44 (20 MHz / 44 = 454545.45 Hz).

Your actual sample frequency has an approximate 1% error in it. And hey presto! Whuddya know? Your RMS sinusoidal variation has about a 1% amplitude!
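The arithmetic behind Point 1 can be sketched as follows. The rounding rule is an assumption: the hypothetical `coerced_rate` helper picks the largest integer divisor that keeps the rate at or above the request, which reproduces the 449200 to divisor 44 example above but is not taken from NI documentation. On real hardware, read back SampClk.Rate rather than relying on this sketch.

```python
import math

TIMEBASE_HZ = 20_000_000  # internal timebase described in Point 1

def coerced_rate(requested_hz, timebase_hz=TIMEBASE_HZ):
    """Return (divisor, actual_rate) for a requested sample rate.

    ASSUMPTION: the divisor is the largest integer that keeps the rate at or
    above the request. This matches the 449200 -> 44 example but is not
    NI's documented algorithm; query SampClk.Rate on real hardware.
    """
    divisor = max(1, math.floor(timebase_hz / requested_hz))
    return divisor, timebase_hz / divisor

requested = 449200
divisor, actual = coerced_rate(requested)
error_pct = 100 * (actual - requested) / requested
print(divisor, round(actual, 2), round(error_pct, 2))  # 44 454545.45 1.19
```

The roughly 1.2% rate error printed here is the "approximate 1%" described above.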

*Because* your sample freq is not as exact as you thought, you were not capturing 1 exact full cycle of the 3200 Hz function generator signal as you thought. You kept missing by one or two points (1% of your 156 pts). Depending on which part of the sine wave was being missed, your RMS calculation was skewed by a variable amount.
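A small simulation of this mechanism, assuming an ideal unit-amplitude sine and no noise. The helper names are hypothetical and the block lengths are illustrative, not a reproduction of the original measurements: at 400000 S/s a 125-sample block is exactly one 3200 Hz cycle, while at 20 MHz / 44 even the nearest whole block (142 samples, versus about 142.05 samples per cycle) falls slightly short of a cycle.

```python
import math

def per_cycle_rms(samples, block_len):
    """RMS of consecutive, non-overlapping blocks of block_len samples."""
    n_blocks = len(samples) // block_len
    return [
        math.sqrt(sum(x * x for x in samples[b * block_len:(b + 1) * block_len]) / block_len)
        for b in range(n_blocks)
    ]

def rms_ripple(fs_hz, block_len, f_sig_hz=3200.0, seconds=1.0):
    """Peak-to-peak spread of the per-block RMS of an ideal unit-amplitude sine."""
    n = int(fs_hz * seconds)
    x = [math.sin(2 * math.pi * f_sig_hz * k / fs_hz) for k in range(n)]
    rms = per_cycle_rms(x, block_len)
    return max(rms) - min(rms)

# 125 samples at 400000 S/s is exactly one 3200 Hz cycle: RMS is flat.
flat = rms_ripple(400_000.0, 125)
# At 20 MHz / 44, one cycle is ~142.05 samples, so each 142-sample block
# starts a little earlier in the cycle than the last and the RMS drifts.
drift = rms_ripple(20e6 / 44, 142)
print(flat, drift)
```

Increasing the mismatch between the block length and a whole cycle generally increases the ripple.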

Point 2:

Your mention of checking Tick Count before and after DAQmx Read concerns me. I can't think of a reason why a software query of Tick Count should have any relevance. Since you offer it up as confirmation of something, I have to wonder if you've got some wrong assumptions built into your analysis.

Point 3:

You say that the waveform data has an accurate dt. In your post, you calculate a dt value based on the size of a data array read from a file. It seems to be based on an *assumption* that the data array represents exactly 1 second of data. Based on Point 1, I have a suspicion that assumption is incorrect. Further, there's a potential off-by-one problem when you divide by array size. Size is the number of samples. (Size-1) is the number of intervals.

During acquisition, if you read your AI task as waveform data, the waveform will hold the *actual* dt value after the driver has dealt with the integer divisor issue. Here, it reports dt = 2.2e-6, the exact reciprocal of 454545.45 (44 / 20 MHz).
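Points 1 and 3 can be checked with exact arithmetic. This sketch assumes the divisor of 44 from Point 1 and a hypothetical read of 454545 samples; it uses Python's `fractions.Fraction` so that dt comes out exactly, and it shows that n samples span only n - 1 sample intervals.

```python
from fractions import Fraction

TIMEBASE_HZ = 20_000_000  # 20 MHz timebase from Point 1

def sample_interval(divisor, timebase_hz=TIMEBASE_HZ):
    """Exact sample period in seconds for an integer timebase divisor."""
    return Fraction(divisor, timebase_hz)

dt = sample_interval(44)
print(float(dt))      # 2.2e-06, the reciprocal of 454545.45... S/s

n = 454545            # hypothetical number of samples read
span = (n - 1) * dt   # n samples contain n - 1 intervals
print(float(span))    # a little under 1 s
```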

Point 4:

Can't say much in detail about the analysis function for frequency measurement. I suspect you're feeding it an incorrect "dt" value. I'd expect more like a 1% error though. You're getting less than that.
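To illustrate how a wrong dt biases a frequency estimate, the sketch below uses a simple rising-zero-crossing estimator. This is a swapped-in technique, not the Measurement palette function from the original post, and the signal is an ideal simulated sine: it is sampled at the coerced 20 MHz / 44 rate and then analysed once with the true dt and once with dt = 1/449200.

```python
import math

def zero_crossing_frequency(samples, dt):
    """Estimate frequency from rising zero crossings, linearly interpolated.

    The result scales as 1/dt, so a wrong dt gives a proportionally wrong answer.
    """
    crossings = []
    for i in range(len(samples) - 1):
        a, b = samples[i], samples[i + 1]
        if a < 0.0 <= b:
            crossings.append((i + (-a) / (b - a)) * dt)
    if len(crossings) < 2:
        raise ValueError("need at least two rising zero crossings")
    return (len(crossings) - 1) / (crossings[-1] - crossings[0])

fs_actual = 20e6 / 44  # coerced rate from Point 1
f_true = 3200.0
# 0.1 s of an ideal sine; the 0.3 rad phase offset avoids a sample at exactly 0.
x = [math.sin(2 * math.pi * f_true * k / fs_actual + 0.3) for k in range(int(fs_actual * 0.1))]

est_true = zero_crossing_frequency(x, 1 / fs_actual)  # ~3200 Hz
est_wrong = zero_crossing_frequency(x, 1 / 449200)    # ~3162.37 Hz
print(est_true, est_wrong)
```

With the requested-rate dt, the estimate drops by the same ~1.2% as the rate error (about 38 Hz), which is larger than the 5 to 10 Hz discrepancy reported above.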

I'm guessing the different results from the 2 boards might be coincidence, i.e., repeat the same sequence of steps with both boards enough times and you might see both boards' results vary across similar ranges of outcomes.

Point 5:

Experiment with a sample rate of 400000 for your 3200 Hz signal. That corresponds to an integer divisor of 50 (20 MHz / 50), which your board can support directly. It's also exactly 125 times the 3200 Hz signal frequency. So you can do your RMS analysis 125 samples at a time and have it cover exactly 1.0000 sine wave cycles. (Not really. But pretty close. To be *completely* exact, your signal generator and data acquisition would need shared or synced timebases. DAQ boards are generally spec'ed to around 50 parts per million timebase accuracy, or around 0.005%.)
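The "magic" rates from Point 5 can be enumerated mechanically: a rate qualifies if it is 20 MHz divided by an integer and also an integer multiple of the signal frequency. This is a sketch; `whole_cycle_rates` is a hypothetical helper, and the 500000 S/s ceiling in the example is an arbitrary placeholder for whatever SampClk.MaxRate reports for your channel configuration.

```python
TIMEBASE_HZ = 20_000_000  # 20 MHz timebase from Point 1

def whole_cycle_rates(signal_hz, max_rate_hz, timebase_hz=TIMEBASE_HZ):
    """Rates that are timebase/divisor AND an integer multiple of signal_hz.

    Returns (rate, divisor, samples_per_cycle) tuples, fastest first.
    """
    results = []
    for divisor in range(1, timebase_hz // signal_hz + 1):
        # rate / signal_hz = timebase / (divisor * signal_hz) must be an integer
        if timebase_hz % (divisor * signal_hz) == 0:
            rate = timebase_hz // divisor
            if rate <= max_rate_hz:
                results.append((rate, divisor, rate // signal_hz))
    return results

rates = whole_cycle_rates(3200, 500_000)
print(rates[:3])  # [(400000, 50, 125), (160000, 125, 50), (80000, 250, 25)]
```

Both 400000 S/s (divisor 50) and the 80000 S/s rate tried later in the thread (divisor 250) appear in this list.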

-Kevin P

12-15-2017 10:24 AM


Thanks for the information; I didn't know the driver derives the sample rate by dividing down a 20 MHz timebase. An odd thing about the sampling rate: on that card (calibrated October 2017) in the test setup it is 449200, while at my dev station I get 384000 (different computer, PXI chassis, and another 6255).

I tried 80000 (dev setup) and I seem to get a narrower RMS spread (roughly 10x: ±0.0009 instead of ±0.007). The overall precision of the ratio between both signals is the same, probably because of the reduced number of samples used to calculate the RMS for each cycle.

12-15-2017 11:34 AM


I'm not sure I understand what you mean about the "precision of the ratio". In general, fewer samples used to calculate RMS would lead to more error or more fluctuation, not less.

However, I think the main reason for the improvement is that your 80000 Hz sample rate is another "magic #" that should be near optimal, much like the 400 kHz I suggested. It's both an integer divisor of 20 MHz (divide by 250) and it's also an integer multiple of 3200 Hz (multiply by 25).

-Kevin P