
NI-DAQmx Base : Synchronize PC clock (QueryPerformanceCounter) with PCI 6221 analog input

05-18-2017 05:33 AM


No: this simply returns the SOURCE of the clock, not the time.

05-29-2017 11:54 PM


Hi, I recently solved a similar real-time sampling problem in a digital control application using a software-timed program.

My device is an NI USB-6281, and it has an internal clock source.

On Windows, the QueryPerformanceCounter function can count in microseconds, which makes sampling frequencies above 1 kHz possible.

However, with the default settings, the DAQmx function calls (analog/digital read or write, both scalar and F64) usually take around 1 ms each, which is really annoying: it means we can only run our digital control program at up to 500 Hz.
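Spelled out, the 500 Hz limit follows if we assume (an assumption, not measured here) that each loop iteration makes one read call and one write call of about 1 ms each:

```latex
f_{\max} = \frac{1}{N_{\text{calls}}\, t_{\text{call}}} = \frac{1}{2 \times 1\ \text{ms}} = 500\ \text{Hz}
```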

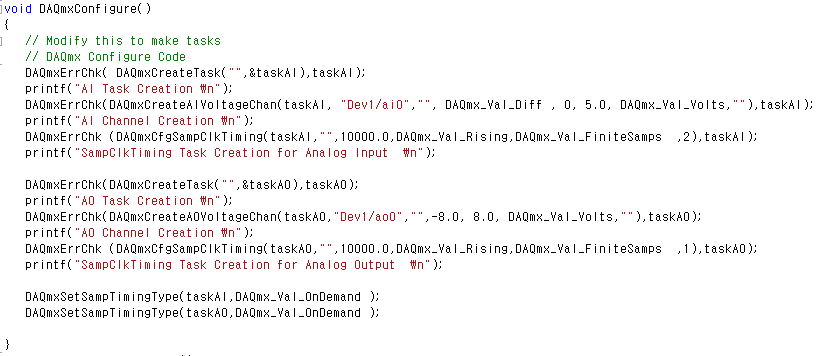

Here, I solved this by using DAQmxCfgSampClkTiming and DAQmxSetSampTimingType.

The software-timed scheduling has to run in OnDemand mode, but the DAQ hardware sampling speed CAN still be adjusted through the sampling rate parameter of DAQmxCfgSampClkTiming.

The minimum buffer size for this function to execute successfully is 2 samples, but as a rule of thumb, a buffer of at least 1/5 of the application sampling rate works fine. Please refer to my application code for an example.

The DAQ hardware sampling rate is set to 10 kHz, and the software loop runs at 2 kHz.

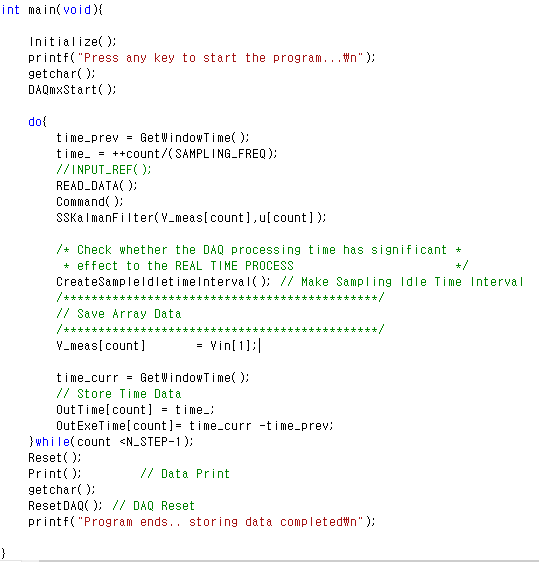

The main loop is as follows

Once the control and measurement tasks are done, you can fill the rest of the loop period with idle time using QueryPerformanceCounter/QueryPerformanceFrequency (i.e., a polling algorithm). A quick Google search will turn up plenty of examples.

Unfortunately, a soft-real-time program depends on the system hardware (CPU clock, RAM, etc.). I think at least a Core i7 is needed to run reliably above 2 kHz.

I hope this helps with your application.
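A minimal sketch of that polling idle time, assuming a generic tick counter passed in as a function pointer (on Windows you would wrap QueryPerformanceCounter and get the frequency from QueryPerformanceFrequency). The names tick_fn, period_ticks, next_deadline and wait_until are hypothetical, chosen for this example. It uses absolute deadlines so that small errors in each iteration do not accumulate into drift:

```c
#include <stdint.h>

/* Returns the current tick count. On Windows this would wrap
   QueryPerformanceCounter (an assumption of this sketch). */
typedef int64_t (*tick_fn)(void);

/* Ticks in one loop period: freq is the counter frequency
   (e.g. from QueryPerformanceFrequency), loop_hz the loop rate. */
int64_t period_ticks(int64_t freq, int64_t loop_hz)
{
    return freq / loop_hz;   /* integer division rounds down */
}

/* Advance an absolute deadline by one period. Absolute deadlines keep
   per-iteration timing errors from accumulating. */
int64_t next_deadline(int64_t deadline, int64_t period)
{
    return deadline + period;
}

/* Polling busy-wait: spin on the counter until the deadline is reached.
   Returns the tick count observed when the wait ended. */
int64_t wait_until(tick_fn now, int64_t deadline)
{
    int64_t t;
    do {
        t = now();
    } while (t < deadline);
    return t;
}
```

In the main loop you would set `deadline = now() + period` once, then in each iteration do the control and measurement work, call `wait_until(now, deadline)` and advance `deadline = next_deadline(deadline, period)`. Busy-waiting keeps one CPU core fully loaded, which is the price for not depending on the coarse granularity of Sleep().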

Thank you.

Im, Byeong Uk

MS Course

|Active Aeroelasticity And Rotorcraft Lab. Mechanical And Aerospace Engineering

|Seoul National University 1 Gwanak-Ro, Gwanak-Gu, Seoul, 151-744, Bd. 301, Room 1357

Tel:82-2-880-1901 |C.P.:82-10-2439-0451 |W:http://helicopter.snu.ac.kr

05-30-2017 02:36 AM


What you suggest is to use the PC clock only and fetch samples from the card on demand, right? This is indeed a solution, but it has one danger: if the PC somehow "hiccups" and the main loop is not executed for 10 milliseconds, you have no data for that "hole"...

My solution at the moment is the opposite: skip the PC clock and use the sample count from the DAQ card as the clock. The PC can still hiccup, but in that case the DAQ card delivers all the missed samples at once when the hiccup is over.
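A minimal sketch of using the sample count as the clock, assuming a constant hardware sample rate and that each read reports how many samples it returned (for example the sampsPerChanRead output of DAQmxReadAnalogF64). The sample_clock type and its functions are hypothetical names for this example:

```c
#include <stdint.h>

/* Time (seconds since the task started) of the sample with the given
   zero-based index, assuming a constant hardware sample clock. */
double sample_time_s(uint64_t sample_index, double rate_hz)
{
    return (double)sample_index / rate_hz;
}

/* Running total of samples read, so the DAQ sample count acts as the
   clock even when the PC receives samples in bursts. */
typedef struct {
    uint64_t total;    /* samples read so far */
    double   rate_hz;  /* hardware sample clock rate */
} sample_clock;

/* Record a read of n samples; returns the timestamp of the first
   sample in this chunk. */
double sample_clock_advance(sample_clock *c, uint64_t n)
{
    double t_first = sample_time_s(c->total, c->rate_hz);
    c->total += n;
    return t_first;
}
```

After a hiccup, the burst of buffered samples still gets correct timestamps, because time is derived from the running count rather than from the moment the PC happened to read them.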

05-30-2017 03:49 AM


Well, I see what you mean. In that case, please take a look at the example code that uses the DAQmxRegisterEveryNSamplesEvent function (in the NI-DAQmx ANSI C examples directory: Synchronization, Multi-Function, Synch AI-AO).

Before I figured out the software-timed method I suggested here, I was trying to use this event-based code to get exact sampling, which is what you referred to as "the real hard-time program".

This program gets a digital edge clock from the analog output channel, which sends an output-done signal. Then the event routine, which contains the analog read functions, is called. With this method, AO and AI are synchronized with hardware-timed sampling. It's pretty much like an interrupt service routine on an embedded device.

I think that by adjusting the sampling rate and the number of samples to output, one can control the event rate.

For example, for 1 kHz sampling, choose 10 kHz (10000 Hz) for the analog output sampling frequency and set Nsamples to 10.

Then, I guess, the event function would be called at a 1 kHz rate.
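As a quick check of this arithmetic, reading the intended AO rate as 10 kHz (10000 Hz), the every-N-samples event rate is the sample rate divided by N. The same relation puts the callback in the configuration further down (rate SAMPLING_FREQ*10, N = 10) at SAMPLING_FREQ.

```latex
f_{\text{event}} = \frac{f_{\text{AO}}}{N} = \frac{10000\ \text{Hz}}{10} = 1000\ \text{Hz} = 1\ \text{kHz}
```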

On the other hand, I am not sure this method would work. Since the event function acts as an interrupt service routine, it is not really a real-time program but more like a delay method. All other algorithm and calculation code must finish within one sample period, and some idle time must remain as a delay margin.

Regarding the sudden delays in the PC program that you mentioned, I can assure you that at about 1 kHz the PC counting algorithm would not fail.

You must check the execution time of each function that you call in the loop. I checked all of them and found that the DAQmx Read/Write functions are really slow if you do not change the DAQ sampling rate.

In other words, if your device does not have an onboard clock, you cannot speed up the Read/Write calls. But I guess your device has an internal clock source, which makes it possible to change the clock rate. In that case, a software-timed program will work.

Anyway, I will try to find a way to write timer-like real-time sampling code. If I find a solution, I will share my algorithm.
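To make that check concrete, here is a minimal sketch of converting QueryPerformanceCounter-style tick readings into microseconds and comparing the result with the loop period. It assumes you take one counter reading before and one after the code being measured and know the counter frequency (QueryPerformanceFrequency on Windows); ticks_to_us and margin_us are hypothetical helper names:

```c
#include <stdint.h>

/* Convert a tick difference into microseconds; freq is ticks per second.
   Done in floating point to avoid 64-bit overflow on long intervals. */
double ticks_to_us(int64_t start, int64_t end, int64_t freq)
{
    return (double)(end - start) * 1e6 / (double)freq;
}

/* Idle margin (microseconds) left in one loop period after the work
   took work_us; a negative value means the loop overran its period. */
double margin_us(double loop_hz, double work_us)
{
    return 1e6 / loop_hz - work_us;
}
```

For example, at a 1 kHz loop, a read/write pair that measures about 2000 us (two calls of roughly 1 ms) gives `margin_us(1000.0, 2000.0) == -1000.0`, i.e. the loop cannot keep up.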

Best regards,

Byeonguk

06-21-2017 08:09 AM - edited 06-21-2017 08:18 AM


Well, OK, I found a solution.

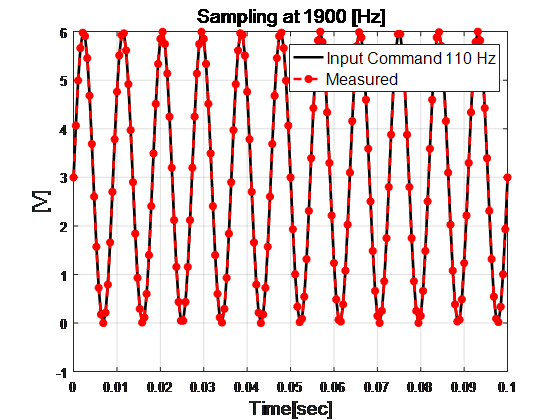

First, here is the sampling result.

Note that your DAQ device must support a hardware clock.

```c
/*********** Configuration of DAQ ************/
/* AI task on the onboard clock: its every-N-samples event paces the loop */
DAQmxErrChk(DAQmxCreateTask("", &AItaskHandle));
DAQmxErrChk(DAQmxCreateAIVoltageChan(AItaskHandle, "Dev1/ai0", "", DAQmx_Val_RSE, -10.0, 10.0, DAQmx_Val_Volts, NULL));
DAQmxErrChk(DAQmxCfgSampClkTiming(AItaskHandle, "OnboardClock", SAMPLING_FREQ*10, DAQmx_Val_Rising, DAQmx_Val_FiniteSamps, N_STEP*10));
/* GetTerminalNameWithDevPrefix is a helper from NI's synch AI-AO example, not a DAQmx library call */
DAQmxErrChk(GetTerminalNameWithDevPrefix(AItaskHandle, "ai/StartTrigger", trigName));

/* AO task at the same rate, started by the AI start trigger */
DAQmxErrChk(DAQmxCreateTask("", &AOtaskHandle));
DAQmxErrChk(DAQmxCreateAOVoltageChan(AOtaskHandle, "Dev1/ao0", "", -10.0, 10.0, DAQmx_Val_Volts, NULL));
DAQmxErrChk(DAQmxCfgSampClkTiming(AOtaskHandle, "OnboardClock", SAMPLING_FREQ*10, DAQmx_Val_Rising, DAQmx_Val_FiniteSamps, N_STEP*10));
DAQmxErrChk(DAQmxCfgDigEdgeStartTrig(AOtaskHandle, trigName, DAQmx_Val_Rising));

/* Call EveryNCallback every 10 AI samples (i.e. at SAMPLING_FREQ) and DoneCallback when the task ends */
DAQmxErrChk(DAQmxRegisterEveryNSamplesEvent(AItaskHandle, DAQmx_Val_Acquired_Into_Buffer, 10, 0, EveryNCallback, NULL));
DAQmxErrChk(DAQmxRegisterDoneEvent(AItaskHandle, 0, DoneCallback, NULL));
```

The idea is to dedicate an analog input channel to a constant sampling clock and use its event callback as an interrupt service routine. The callback function only increments a counter and sets the loop flag.

The main do-while loop waits on that flag, so it idles for the rest of each period, which is what makes real-time sampling possible.

This way, you don't need any software timing functions for pacing; they are still useful for measuring execution time.
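A minimal sketch of the callback/flag handshake described above, using C11 atomics because the DAQmx callback may run on a different thread than the main loop. The real callback registered with DAQmxRegisterEveryNSamplesEvent has the signature `int32 CVICALLBACK EveryNCallback(TaskHandle, int32, uInt32, void *)` and would simply call `on_every_n_samples()`; that wrapper is left out so the sketch compiles without the NI-DAQmx header. All names here are hypothetical:

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Shared state between the callback and the main loop.
   Static storage, so both start zero-initialized (0 / false). */
static atomic_uint tick_count;   /* number of callbacks so far */
static atomic_bool loop_flag;    /* set by the callback, cleared by the loop */

/* Callback body: only bump the counter and raise the flag,
   so the callback returns as quickly as possible. */
void on_every_n_samples(void)
{
    atomic_fetch_add(&tick_count, 1);
    atomic_store(&loop_flag, true);
}

/* Main-loop side: returns true (and clears the flag) if a new period
   has started since the last call, false otherwise. */
bool take_tick(void)
{
    return atomic_exchange(&loop_flag, false);
}
```

The main loop would then look like `do { while (!take_tick()) { } /* control and measurement work */ } while (running);`, where `running` is a hypothetical stop condition. If `tick_count` advances by more than one between iterations, the loop missed a period.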

However, there is still a major problem with this routine.

If I want to add a control-signal output channel that is updated inside the loop, the reference analog output sample clock cannot be used separately. I am still struggling with this. If anyone has solved it, please let me know.

I am attaching my source code here. If you have more questions, feel free to ask.

To try it, create a new test project and include the header and C source files.

Best regards,

Byeong-uk, Im

