BUG with PCIe-1429: Cameralink full config Basler sprint linescan camera 70000 fps, acquisition works for approx 3 hours, then requesting the next buffer takes another 3 hours, and then again 3 hours without errors and so on

06-18-2009 04:52 AM

Hi,

I have a PCIe-1429 cameralink board running in full configuration mode with a Basler sprint linescan camera (4096 pixels), 8 taps, 8 bit, 80 MHz pixel clock, 14.2 us/line (= fastest = 70,422 lines/sec).
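For reference, the line rate and the resulting data rate follow directly from the numbers above. A quick sketch in Python (not part of the LabVIEW code), assuming 1 byte per pixel for the 8-bit mode; the constant names are only for illustration:

```python
# Figures taken from the post; 1 byte per pixel is an assumption based on the 8-bit setting.
LINE_PERIOD_S = 14.2e-6   # 14.2 us per line
PIXELS_PER_LINE = 4096
BYTES_PER_PIXEL = 1

lines_per_sec = 1.0 / LINE_PERIOD_S
bytes_per_sec = lines_per_sec * PIXELS_PER_LINE * BYTES_PER_PIXEL

print(f"line rate: {lines_per_sec:.1f} lines/sec")
print(f"data rate: {bytes_per_sec / 1e6:.1f} MB/s")
```

So the sustained stream is somewhere under 300 MB/s, which is what the PC has to move and analyse continuously.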

My PC is a HP E8400 2x 3.0 GHz, 4 GB RAM, running WinXP Pro, LabVIEW 8.5.1 and NI Vision Acquisition 8.6 (NI-IMAQ 4.1).

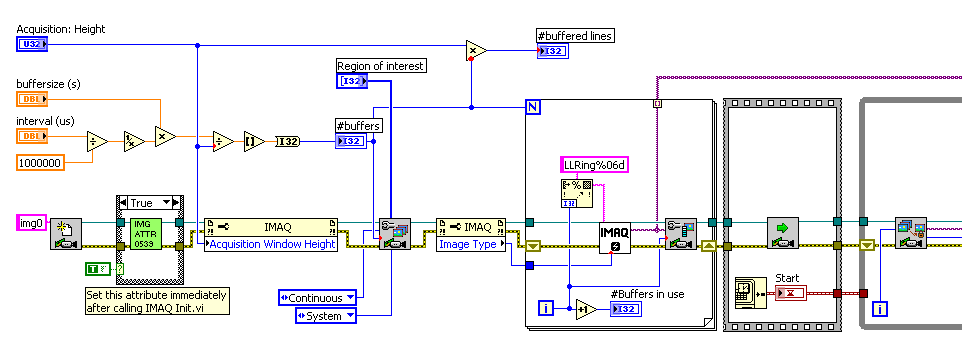

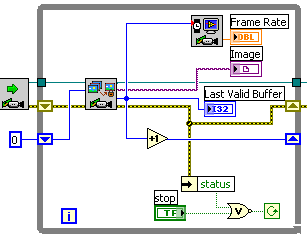

I started from the LL Ring Acquisition example (where the index terminal is connected to the "Buffer to extract" input of the "IMAQ Extract buffer.vi").

After a few performance issues I settled on an optimal buffer height of approx 4 lines, with a CPU load of approx 55% (while 100 lines resulted in a CPU load of approx 95%).

This includes the analysis of each line individually (IMAQ to Array and scan each line; IMAQ extract line (Vision toolkit) seemed a better way, but was too slow).

The ring buffer was sized for 1.5 sec, so 26408 buffers were allocated.
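The ring size can be reproduced from the line rate, the buffer height and the 1.5 sec target. A small Python sketch of that arithmetic; the function name is made up, and the memory figure assumes 4096 pixels at 1 byte each:

```python
LINES_PER_SEC = 70422      # line rate from the post
LINES_PER_BUFFER = 4       # buffer height chosen above
RING_SECONDS = 1.5         # how much acquisition time the ring should hold
BYTES_PER_LINE = 4096      # assumption: 4096 pixels, 1 byte per pixel

def ring_buffer_count(lines_per_sec, lines_per_buffer, seconds):
    """Number of whole buffers needed to hold `seconds` of acquisition."""
    return int(lines_per_sec * seconds / lines_per_buffer)

buffers = ring_buffer_count(LINES_PER_SEC, LINES_PER_BUFFER, RING_SECONDS)
ring_bytes = buffers * LINES_PER_BUFFER * BYTES_PER_LINE

print(buffers, "buffers,", ring_bytes / 1e6, "MB")
```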

(The acquisition always starts too slowly, but after running out of buffers after several seconds (and having some "Lost frames"), the IMAQ driver/Windows seems to wake up, catches up at > 100,000 lines/sec and then stabilizes at approx 70,422 lines/sec.)

The acquisition @ max speed seemed to work perfectly but after a bit under 3 hours, the acquisition seemed to STOP (no real crash because parallel loops, debugging, etc kept on working).

After waiting for the same amount of time again, the next buffer was acquired and everything seemed normal again.

So the autoincrement of the while loop (= next buffer) increments fine for approx 3 hours, then waits 3 hours for the next buffer and then continues again, and so on...

The goal was continuous 24/7 acquisition, but now I have to stop and restart just before the 3 hours are up.

- I use the Windows /3GB switch,

- and the IMG_ATTR_EXTENDED_MEMORY.vi is set (http://zone.ni.com/devzone/cda/tut/p/id/3490)

- I also suspected the firmware of the 1429 which is by default the "64bit"-version, but flashing the "original"-version did not make any difference.

- I could not find any counter-overruns (except maybe internally in the IMAQ-driver???)

I was wondering if this rings a bell with someone

or am I the only one stupid enough to want to acquire > 250,000,000 lines/sec (and therefore running into some internal (IMAQ?) overruns?)

All suggestions are welcome !!!

06-18-2009 11:11 AM - edited 06-18-2009 11:17 AM

Hi Technico,

I have a fairly good guess at what is going on...

Basically, I believe your image extraction/processing loop is getting behind and trying to extract a buffer number that has already been written over. When this occurs, the IMAQ driver will give you a different buffer than the one you asked for (based on some policy [possibly configurable] that I cannot recall at the moment, but is likely the "newest" image). Unless you look at the buffer number returned from the Extract VI you will not know this is happening. Keep in mind this is tracked differently from "Lost Frames." Lost frames occur when the IMAQ framegrabber's DMA engine becomes stalled because it reaches a buffer in the ring that is extracted (so it can't be written over). However, the other way you can "lose" frames is when you simply are not processing all of them before they are overwritten. I am fairly certain this is what is happening because you stated you have ~26,000 images in your ring, and based on how you said the acquisition was running faster/slower before stabilizing at 70,000 fps, you are likely going well beyond the ring buffer's size in looking at older images.

Now, the cause of your 3-hour delay after 3 hours is also likely explainable. To explain this, you first must know how IMAQ's buffer numbers work. The input for the buffer number to request is a 32-bit value. Thus, it will wrap around at some point. However, IMAQ reserves some "special" buffer numbers as well in the upper end of the range. The end result is that only 28 bits of the value are actually looked at for the buffer number. This again is normally not an issue, since the wrap-around is on a bit boundary and you still end up referring to the same buffer number. However, regardless of whether it wraps at 28 or 32 bits, for any given buffer number you request, the driver must determine whether that buffer number is in the future or refers to an older buffer already acquired (since the buffer numbers always wrap around). Essentially the driver looks at the most recent buffer number and then assumes half of the 28-bit range prior to the current buffer will refer to buffers previously acquired and the other half of the range will refer to buffers not yet acquired.
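To make the past/future decision concrete, here is a minimal Python sketch of the logic as described above. It is only an illustration of this description, not the actual driver code; the function name `classify_request` and the exact behaviour at the half-range boundary are assumptions.

```python
BUFFER_BITS = 28                 # per the description above
MODULUS = 1 << BUFFER_BITS       # buffer numbers wrap at 2**28
HALF_RANGE = MODULUS // 2        # half the range counts as "already acquired"

def classify_request(requested, newest):
    """Return 'past' if `requested` looks like an already acquired buffer
    relative to `newest`, otherwise 'future'."""
    req = requested % MODULUS              # only the low 28 bits are looked at
    behind = (newest - req) % MODULUS      # how far the request trails the newest buffer
    return "past" if behind < HALF_RANGE else "future"

# A request just behind the newest buffer is "past"; once the lag reaches
# half the range, the same kind of request suddenly reads as "future".
print(classify_request(requested=1000, newest=2000))
print(classify_request(requested=0, newest=HALF_RANGE))
```

The second call is the interesting one: a loop that has fallen half the range behind is no longer treated as asking for an old buffer.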

What I believe is happening in your case is that since you have the loop index wired into the Extract VI, you are incrementing it by one each time and getting further and further behind the currently acquired buffer. It is going unnoticed because the driver is simply giving you a different buffer instead. However, after enough time, you are getting so far behind that the buffer number being requested appears to actually be in the future. Since it is in the future, it will not be overwritten, so rather than resort to its fallback policy of giving you a new image it is going to instead start waiting until that image gets acquired. Now, IMAQ also does a slightly interesting thing with regard to its Timeout parameter. Normally this defaults to 5 seconds. However, what this timeout refers to is the expected timeout *per-frame* (NOTE: the IMAQdx driver works slightly differently in this regard). Thus, if you are waiting for an image that is 10 buffers in the future from the last acquired one, it will actually wait for up to 50 seconds rather than just 5. So what is likely happening is that when you are requesting some image well into the future it is taking 3 hours until that image number is actually acquired and the timeout is likely being calculated as much longer than this period.
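The per-frame timeout behaviour can be sketched the same way. This assumes the simple linear scaling described above (timeout multiplied by the number of buffers ahead); the function names, and the choice to allow at least one timeout period, are illustrative assumptions rather than documented driver behaviour.

```python
DEFAULT_TIMEOUT_S = 5.0  # per-frame default mentioned above

def effective_timeout_s(frames_ahead, per_frame_timeout_s=DEFAULT_TIMEOUT_S):
    """Total wait allowed when the requested buffer is `frames_ahead` buffers
    past the newest acquired one (assumed linear scaling, minimum one period)."""
    return max(frames_ahead, 1) * per_frame_timeout_s

def seconds_until_acquired(frames_ahead, frames_per_sec):
    """Time the acquisition needs to actually reach the requested buffer."""
    return frames_ahead / frames_per_sec

# The example from the text: 10 buffers ahead with the 5 s default.
print(effective_timeout_s(10))
```

As long as the acquisition delivers more than one buffer per timeout period, the scaled timeout is longer than the time needed to reach the buffer, so the call waits instead of returning an error.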

As for options, here is what you would likely want to do to modify your code. Instead of using the loop index, use a shift register initialized to 0. After each loop iteration, take the returned buffer number and add one to it and pass it out to the shift register for the next iteration. This will ensure you keep up with the acquisition even if you fall behind at some points. You can also compare your returned buffer to the requested one to see if you skipped processing any frames (although you'd have to account for that 28-bit rollover if you want to calculate how many were skipped).
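In text form, the shift-register approach looks roughly like the Python sketch below. The `extract` callable is a hypothetical stand-in for the Extract VI (it takes the requested buffer number and returns the number actually delivered), and `make_fake_extract` is a toy driver used only to exercise the loop.

```python
MODULUS = 1 << 28  # 28-bit buffer numbering, as described earlier

def skipped_between(requested, returned):
    """Buffers skipped when the driver returns a newer buffer than requested,
    taking the 28-bit rollover into account."""
    return (returned - requested) % MODULUS

def process_stream(extract, iterations):
    """Request buffers using 'returned + 1' instead of the loop index."""
    next_request = 0            # shift register initialised to 0
    total_skipped = 0
    for _ in range(iterations):
        returned = extract(next_request)
        total_skipped += skipped_between(next_request, returned)
        next_request = returned + 1   # value passed to the next iteration
    return next_request, total_skipped

def make_fake_extract(jump_at, jump_to):
    """Toy driver: buffer `jump_at` was overwritten, so `jump_to` is returned."""
    def extract(requested):
        return jump_to if requested == jump_at else requested
    return extract

next_request, skipped = process_stream(make_fake_extract(3, 10), iterations=5)
print(next_request, skipped)
```

With the loop index wired in directly, the request after the jump would have been 4 again, which is exactly how the loop drifts further behind.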

Additionally, I believe you likely will have a *much* better time keeping up with your processing loop if you use a larger number of lines per frame. There is some amount of fixed overhead acquiring and processing individual frames that is fairly non-trivial at these rates. If you reduce the number of frames acquired per second, you will see a linear decrease in that overhead. My hunch would be that you are likely seeing an odd CPU load decrease at smaller frame sizes due to a couple of reasons:

- Since you are skipping images you might not be processing all 70,000 fps. How were you calculating this rate? From the loops/sec or acquired buffer numbers? If you are skipping more images then you will likely see lower CPU usage.

- Depending on how you are doing the processing, things that involve memory allocations may have more overhead with larger buffer sizes involved. If you are doing an image->array conversion on each image, it is possible the smaller allocation of 4 lines can be satisfied by the memory manager in a faster way than a larger size can. Obviously removing any sort of memory allocation from your loop is the best way around this (remove the image->array conversion). Without seeing what your processing involves I can't really give a suggestion. However, one alternative might be to acquire your images in larger sizes, then go through and copy smaller subsets of lines out into a different image of a smaller size, and do the same processing you are doing now. In general though, copies of data at these high data rates can become very costly with today's memory speeds so you likely want to do as much processing in-place on the image as possible. Maybe if you can include more details or screenshots of your processing we can give you a better idea of how to make it faster.
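To illustrate the point about fixed per-frame overhead, the sketch below computes the frame rate for a few buffer heights at the line rate from the original post. The 20 us per-frame cost is a made-up number purely for illustration; the real overhead has not been measured here.

```python
LINES_PER_SEC = 70422            # line rate from the original post
HYPOTHETICAL_OVERHEAD_S = 20e-6  # made-up per-frame cost, for illustration only

def frames_per_sec(lines_per_frame):
    """Frames the driver and the loop must handle each second."""
    return LINES_PER_SEC / lines_per_frame

def overhead_fraction(lines_per_frame, per_frame_overhead_s=HYPOTHETICAL_OVERHEAD_S):
    """Fraction of one CPU core spent on fixed per-frame overhead."""
    return frames_per_sec(lines_per_frame) * per_frame_overhead_s

for height in (4, 40, 100):
    print(f"{height:>3} lines/frame: {frames_per_sec(height):8.1f} frames/sec, "
          f"overhead {overhead_fraction(height):.1%} of one core")
```

Whatever the true per-frame cost is, it scales with the frame rate, so going from 4 to 100 lines per frame cuts that part of the load by a factor of 25.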

Admittedly, our shipping examples do not always give the best explanation for some of these concepts when processing very high-speed acquisitions. They are, after all, designed to show the simpler use cases in an easy fashion. However, hopefully this gives you some ideas on what you'd likely have to change to accommodate these higher speeds. Please let me know if you have any more questions about this.

Thanks,

Eric

06-18-2009 01:45 PM

Hi,

Thanks for the reply, I will try your suggestion first thing tomorrow.

The calculated frames/sec is indeed connected to the last returned buffer.

This afternoon I started a test with an image height of 40 instead of 4 and I have already passed the 3-hour limit.

Tomorrow morning, if the error is related to the buffer to extract, I would expect the "STOP" to have occurred after approx 10*3 hours,

but I still cannot find anything that points to some kind of 28-bit number.

After the acquisition is running (stable) I am NOT allowed to have any lost frames, so I'm curious which configuration will satisfy.

This is a setup simply for demonstrating the working principle to our customer; in the next phase we need 2 synchronized cameras running @ 70000 lines/sec.

We were familiar with the 1430 and the 1429 and knew we could get the demo to work,

but now we are looking for an FPGA-board for connecting 2 full config cameralink cameras and doing the data analysis in the FPGA and only passing the results over DMA to the PC.

So any suggestions regarding connecting cameralink cameras to an FPGA (and programming it) are welcome.

One alternative (as a final workaround) would be having 2 PCs doing the image acquisition and merging the data from both PCs, but this is not our favorite solution.

We were looking for framegrabbers that can do some simple operations on the data before passing it on, but we did not find anything that did the trick.

A simple subtraction of a predefined threshold from the entire linescan (a different threshold per pixel) would also help a lot, but the only option I came across was LUTs.

Another option would be something like datafiltering/averaging over the width of the linescan to decrease the complexity of the analysis (we have some difficulties getting rid of some spikes).
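To be clear about what these two operations would do per line, here is a small Python sketch. It only illustrates the arithmetic on a toy line; it says nothing about whether it could run at 70000 lines/sec, the function names are made up, and the 3-point median is just one possible choice of filter for removing spikes.

```python
def subtract_threshold(line, thresholds):
    """Per-pixel threshold subtraction, clamped at 0."""
    return [max(pixel - limit, 0) for pixel, limit in zip(line, thresholds)]

def median3(line):
    """3-point median along the line; the two edge pixels are left unchanged."""
    out = list(line)
    for i in range(1, len(line) - 1):
        out[i] = sorted(line[i - 1:i + 2])[1]
    return out

line = [12, 11, 200, 13, 40, 42, 41, 12]       # toy data with one spike
thresholds = [10, 10, 10, 10, 20, 20, 20, 10]  # a different threshold per pixel

filtered = median3(line)
result = subtract_threshold(filtered, thresholds)
print(filtered)
print(result)
```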

(Cameras with onboard processing were also not capable of handling 70000 lines/sec.)

thx

09-28-2009 06:20 PM - edited 09-28-2009 06:21 PM

09-28-2009 06:23 PM

Hi Technico,

I'm not sure if this is still an ongoing project, but just so you know NI has released some new FPGA boards (FlexRIO) that are capable of acquiring the rates needed for CameraLink. They also allow you to design custom front ends, and I've heard some whisperings of a CameraLink front end for those boards. There was an unofficial one developed for a demo at NIWeek this year.

http://zone.ni.com/wv/app/doc/p/id/wv-1698

Pretty cool stuff if you ask me! Might be just what you're looking for.

Cheers,

-Matt at Cyth Systems

LabVIEW Integration Engineer with experience in LabVIEW Real-Time, LabVIEW FPGA, DAQ, Machine Vision, as well as C/C++. CLAD, working on CLD and CLA.

09-30-2009 01:16 PM

It seems like this could do the trick for me, but unfortunately there is no additional information available on the NI site...

09-30-2009 01:57 PM

What information are you looking for? I'd be happy to be a resource for you, and I'm sure there are a few other folks that read these forums that would be more than helpful!

Just let us know, we're here to help.

-Matt at Cyth Systems


09-30-2009 03:20 PM - edited 09-30-2009 03:27 PM

I was referring to the demo with FlexRIO + FPGA with cameralink IP.

This is what I am looking for, but I cannot find any information about the Cameralink interface they describe in the video.

The data rate in the demo is practically the same: 1.3 MP @ 500 fps would translate to approx 80000 fps in my config.

So any additional info is welcome...

10-22-2009 02:40 AM
