
Intermittent zero bytes at port - VISA serial

03-19-2011 02:28 AM


hello LV'ers,

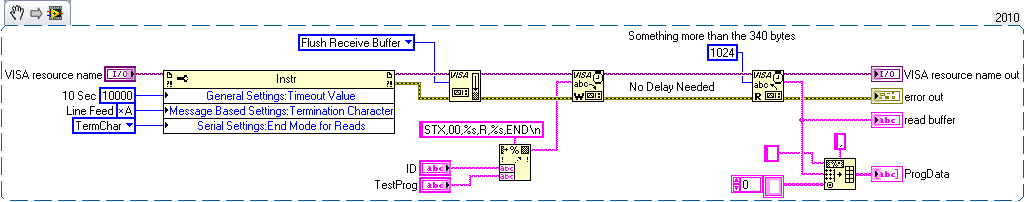

it seems i cannot really figure out if there's lack in my LV program or its just the machines' performance. I'm monitoring data from a machine (around 340 bytes) but was not consistent - oftentimes i read the data - sometimes no data. but i did'nt receive any errors, its just zero bytes in port. by the way, machine is via serial rs-232, so i used VISA serial.

i already adjusted and vary the timing between VISA read and VISA write of up to 10 sec but i still encounter this annoying situation. i even tried VISA clear and flush buffer function (though i'm not that certain with this functions) to think that i might get better-stable machine response. attached file shows the partial code that produces the problem - (inherited code from vendor w/ slight modifcation of mine).

any workaround or bright ideas with this situation? i what part of my program should i focus? or where will i add some helpful functions to eliminate this problem?

thanks for any help.

03-19-2011 03:55 AM - edited 03-19-2011 03:58 AM


Hmm, the driver looks pretty good to me (although it would be better to put this in another structure, like a state machine, instead of a sequence 😉). So I don't think it's a LabVIEW problem. Are you sure the command you send is the correct one? And are you sure it doesn't have prerequisites in order to work? I would start by looking at your machine instead of your code.
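
Since LabVIEW is graphical, here is the state-machine idea as a text illustration only: a minimal Python sketch that splits one query into WRITE, WAIT_FOR_DATA, READ, DONE and TIMEOUT states, where each step decides the next state instead of a sequence structure forcing a fixed order. The state names, the `next_state` and `run` functions, the 25 ms step and the 500 ms default timeout are assumptions for illustration, not the vendor driver's structure.

```python
from enum import Enum, auto


class State(Enum):
    WRITE = auto()
    WAIT_FOR_DATA = auto()
    READ = auto()
    DONE = auto()
    TIMEOUT = auto()


def next_state(state, bytes_at_port, elapsed_ms, timeout_ms=500):
    """Return the next state of a hypothetical query state machine."""
    if state is State.WRITE:
        return State.WAIT_FOR_DATA            # command sent, now wait for a reply
    if state is State.WAIT_FOR_DATA:
        if bytes_at_port > 0:
            return State.READ                 # data is waiting at the port
        if elapsed_ms >= timeout_ms:
            return State.TIMEOUT              # give up instead of hanging forever
        return State.WAIT_FOR_DATA            # keep polling
    if state is State.READ:
        return State.DONE
    return state                              # DONE and TIMEOUT are terminal


def run(byte_counts, step_ms=25, timeout_ms=500):
    """Drive the machine with simulated Bytes at Port readings (hypothetical data)."""
    state, elapsed = State.WRITE, 0
    readings = iter(byte_counts)
    while state not in (State.DONE, State.TIMEOUT):
        # Only the waiting state samples the port; an exhausted list means "port empty".
        n = next(readings, 0) if state is State.WAIT_FOR_DATA else 0
        state = next_state(state, n, elapsed, timeout_ms)
        elapsed += step_ms
    return state
```

In LabVIEW the same idea is usually a While Loop around a Case Structure, with the state enum carried in a shift register.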

The only thing I do see is that you wait only 100 ms for a reply from the machine once you start reading. This might not be enough. I usually increase this to 500 ms, as long as your program doesn't run into timing problems.

AND: once you do start reading from the port, you're polling it at 100% of your PC's CPU, because there is no time delay in the loop. What you should definitely do is enter a "ms to wait" of 25 ms in your FALSE case. You'll see the performance go up. You never want to run a hardware-communication loop with NO time delay.
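
A minimal Python sketch of this polling advice, assuming a hypothetical `bytes_at_port` callable that plays the role of the Bytes at Port property: the "no data" branch (the FALSE case) sleeps 25 ms before checking again, and the whole wait is bounded by a 500 ms timeout. The function name and defaults are illustrative assumptions.

```python
import time


def wait_for_bytes(bytes_at_port, timeout_s=0.5, poll_s=0.025):
    """Poll a hypothetical bytes-at-port callable until data arrives or time runs out.

    Returns the byte count seen, or 0 if the timeout expired.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        n = bytes_at_port()
        if n > 0:
            return n            # TRUE case: data is waiting, go read it
        if time.monotonic() >= deadline:
            return 0            # nothing arrived within the timeout
        time.sleep(poll_s)      # FALSE case: yield the CPU instead of spinning
```

Returning 0 on timeout keeps the sketch simple; a real driver might report a timeout error instead, so that "no reply" is distinguishable from a successful read.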

- Bjorn -

Have fun using LabVIEW... and if you like my answer, please pay me back in Kudos 😉

LabVIEW 5.1 - LabVIEW 2012

03-20-2011 06:56 PM


Are you sure that the command you send is the correct one? And are you sure that the command you send doesn't have prerequisites in order to work?

- Yep. In fact, I receive the correct and complete data most of the time; the no-data readings occur only occasionally.

The only thing I do see is that you wait only 100 ms for a reply from the machine once you start reading. This might not be enough. I usually increase this to 500 ms, as long as your program doesn't run into timing problems.

- I believe this 100 ms is the time the LabVIEW code allows for reading the machine until Bytes at Port returns more than zero AND there is no error (based on the code). If the data is already at the port on the first iteration, the TRUE case executes immediately. Do you mean I should put the 500 ms delay inside the TRUE case to give the machine enough time, or inside the While loop? I thought the problem occurs first at the Bytes at Port property node and not at VISA Read (yes, I used a retain-values function to check how many bytes were at the port). So I varied the delay (up to 10 s!) between VISA Write and the Bytes at Port property node to give the machine enough time to finish processing its data, but the problem still occurs.

AND: once you do start reading from the port, you're polling it at 100% of your PC's CPU, because there is no time delay in the loop. What you should definitely do is enter a "ms to wait" of 25 ms in your FALSE case. You'll see the performance go up. You never want to run a hardware-communication loop with NO time delay.

- Oh, I missed that! It's one of the standard practices in the LabVIEW tutorials, and I still keep missing it!

03-21-2011 08:54 AM


@ivelson wrote:

AND: once you do start reading from the port, you're polling it at 100% of your PC's CPU, because there is no time delay in the loop. What you should definitely do is enter a "ms to wait" of 25 ms in your FALSE case. You'll see the performance go up. You never want to run a hardware-communication loop with NO time delay.

- Oh, I missed that! It's one of the standard practices in the LabVIEW tutorials, and I still keep missing it!

As written, you do not need to add another wait in your while loop. It is true that you generally do not want to write loops that consume all of the CPU by free running, but as written your code does not free run. The only time your loop will use all of the CPU is when data is available. If you were streaming data, your code would be an issue, but since you are only reading a small amount of data, it is OK to use all of the CPU for that brief period.

I don't see anything fundamentally wrong with your code, so I think the problem is with your device. Depending on the device, you may be overrunning its receive buffer. I have encountered devices that required a brief delay between characters; otherwise their receive buffers would overrun and they would drop data. As an experiment, try writing your command out one character at a time with a 1 ms delay between writes.
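
The experiment can be sketched in Python as follows, assuming `write` is any callable that sends bytes (for example the `write` method of a pyserial `Serial` object). The command `b"RD\r"` used in the check is hypothetical, not the device's real command, and `write_slowly` is an illustrative name.

```python
import time


def write_slowly(write, command: bytes, delay_s: float = 0.001) -> None:
    """Send `command` one byte at a time, pausing `delay_s` after each byte."""
    for i in range(len(command)):
        write(command[i:i + 1])   # slicing keeps each piece as a 1-byte bytes object
        time.sleep(delay_s)       # give the device time to drain its receive buffer
```

Note that `time.sleep(0.001)` can sleep noticeably longer than 1 ms depending on the operating system's timer resolution, which for this experiment only makes the gap between characters more generous.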

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

03-21-2011 10:46 AM


Most problems of this type come down to getting the settings and messages in LabVIEW to match the device you are talking to.

Does your device rely on a termination character (line feed) for reading and writing? I do not see where you are sending any.

I am only guessing here, but I hope your device uses (requires) a line feed. Or does it work only off of the "END" string?

Try sending a line feed with your write and setting the read to terminate on a line feed.

Set the port timeout to something much longer than you expect the reply to take. 10 s does seem a little long, though.

Then set the read byte count to a value much larger than the expected number of bytes.
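
A minimal Python sketch of "read until the terminator, with a generous timeout and a byte count larger than expected", assuming a hypothetical `read_available` callable that returns whatever bytes are currently waiting (possibly `b""`). The function name, defaults and chunked-read model are assumptions; a real VISA read does this termination handling in the driver.

```python
import time


def read_until(read_available, terminator=b"\n", timeout_s=5.0,
               max_bytes=4096, poll_s=0.025):
    """Collect bytes until `terminator` is seen, about `max_bytes` arrive, or time runs out."""
    buf = bytearray()
    deadline = time.monotonic() + timeout_s
    # max_bytes is a soft cap in this sketch: the last chunk may push past it.
    while terminator not in buf and len(buf) < max_bytes:
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"no {terminator!r} within {timeout_s} s ({len(buf)} bytes received)")
        chunk = read_available()
        if chunk:
            buf += chunk
        else:
            time.sleep(poll_s)      # nothing waiting: don't spin the CPU
    if terminator in buf:
        end = buf.index(terminator) + len(terminator)
        return bytes(buf[:end])     # this sketch drops anything after the terminator
    return bytes(buf)               # byte limit reached without seeing the terminator
```

If a device marks the end of its reply with a literal string such as "END" rather than a line feed, `terminator=b"END"` would be the analogue in this sketch.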

03-22-2011 01:18 AM


to Omar,

thanks for the advice! my device dont use termination character except from it only feeds "END" string in the last part of received data. i have implement your code in my program but its seems that timeout error occurs more often when i dont put "delay" from VISA write and VISA read. Actually, based on my recent actual observations, the less its delay, the more often i received no data - BUT even if i put 10 sec. delay on it, i still suffer from data loss.

to mark,

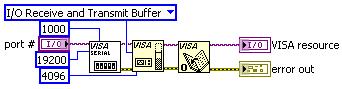

As an experiment try writing your command out one chanter at a time with a 1 ms delay between writes.

you mean i'll buffer each character for 1ms (also tried 5ms and more) and write it all in VISA write? like the figure shown below? please correct me if i'm wrong but if thats it - the problem was still occur.

and yes, i am starting to beleive that this situation might be a device problem. ![]()

03-22-2011 02:04 AM


Please let me share the device manual (only 5 pages, so it won't take much of your time) and how I initialize the serial communication, as shown below.

Actually, I have a lot of subVIs that mimic my DEVICE's functions in my LabVIEW HMI, such as controlling certain functions. Those subVIs are called when the user uses them (pushes the RUN button, STOP button, etc.), and I get the RIGHT and CORRECT response (so I really trust the format of the commands I wrote).

The problem is that one of my subVIs continuously monitors the device's data, and that subVI (posted in my first message) is where I get the problem. Maybe because I call that subVI all the time, the probability of an error is higher than for the other subVIs, which the user calls only once in a while. Plus, when I reduce the wait time between VISA Write and VISA Read (in this particular subVI) to less than 1 s, I get zero bytes at port more often.

Anyway, I will set this problem aside for now, try to figure things out later, and consider further suggestions from all of you (I'll keep following the thread). Thanks for your time on this issue! I really appreciate it!

03-22-2011 08:56 AM - edited 03-22-2011 08:59 AM


No, you should write a single character every millisecond. Put the write inside the loop. I would also change your wait to 1 ms.

You may be running into the problem because VISA Read and VISA Write are blocking calls and you cannot run them in parallel on the same connection. You need to synchronize your reads and writes so that only one of them is active at any point in time.
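
To illustrate the synchronization point in text form, here is a minimal Python sketch: a lock around each write/read pair means that, for example, a continuously running monitor and a user-triggered command can never interleave on one connection. `FakePort`, `read_reply`, `SerialSession` and `query_from_threads` are hypothetical stand-ins, not VISA API; in LabVIEW, one option for the same idea would be the Semaphore VIs, or routing all port access through a single loop.

```python
import threading
import time


class FakePort:
    """Hypothetical stand-in for a serial connection: each reply echoes the last command."""

    def __init__(self):
        self._last = b""

    def write(self, data: bytes) -> None:
        self._last = data

    def read_reply(self) -> bytes:
        time.sleep(0.01)   # simulated response time; without a lock, another write could land here
        return b"ACK " + self._last


class SerialSession:
    """Makes each query (write followed by read) atomic with respect to other threads."""

    def __init__(self, port):
        self._port = port
        self._lock = threading.Lock()

    def query(self, command: bytes) -> bytes:
        with self._lock:   # only one write/read pair is active at a time
            self._port.write(command)
            return self._port.read_reply()


def query_from_threads(session, commands):
    """Issue each command from its own thread and collect the replies by command."""
    replies = {}

    def worker(cmd):
        replies[cmd] = session.query(cmd)

    threads = [threading.Thread(target=worker, args=(c,)) for c in commands]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return replies
```

Because the lock spans both the write and the read, each caller is guaranteed to get the reply to its own command in this simulation.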

Mark Yedinak


03-22-2011 08:23 PM


Like this? (Yes, I have already changed the code to a state machine.) Unfortunately, it gives the same result as my previous code.