not enough memory to complete the operation

02-25-2014 01:52 AM

I need to put this VI into a bigger VI, but it always gives me the same error: "not enough memory to complete this operation". Does anyone have a way around it? I need 200,000 points to complete this operation.

02-25-2014 02:04 AM - edited 02-25-2014 02:06 AM

Hi engomar,

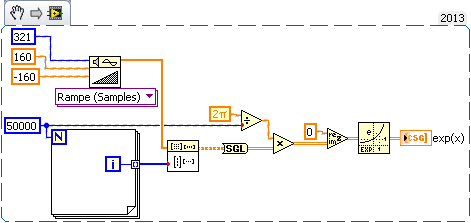

so you want to create an array of 321 × 200000 elements? After some type conversions you need that array to hold CDB data?

Each CDB takes up 16 bytes of memory!

Ever typed this into your calculator?

321 × 200000 × 2 × 8 = 1027200000 ~= 980 MiB

Can your computer handle that much memory?
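The estimate above can be reproduced with a short sketch. Python is used here only for illustration, since LabVIEW code is graphical; the names `array_bytes`, `ROWS`, and `COLS` are made up for this example, and the figure covers the raw data of a single array only, not any additional copies.

```python
# Rough memory estimate for one 2D array of complex doubles (CDB).
# Assumption: only the raw element data is counted, no array overhead.
ROWS = 321
COLS = 200_000
BYTES_PER_CDB = 2 * 8  # real part + imaginary part, 8 bytes each


def array_bytes(rows, cols, bytes_per_element):
    """Return the bytes needed for the raw data of one rows x cols array."""
    return rows * cols * bytes_per_element


total = array_bytes(ROWS, COLS, BYTES_PER_CDB)
print(total, "bytes")
print(round(total / 2**20, 1), "MiB")
```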

02-25-2014 02:35 AM

My computer has 8 GB of RAM, but I am using just a small portion of it. I heard that LabVIEW has a limitation on RAM access. Is there a way to increase this limit?

Second, whenever I change the data type to single precision, it always gives me a coercion dot.

02-25-2014 02:39 AM

Hi engomar,

do you use LV32bit or LV64bit?

Using LV32bit you are still limited to less than 4GB, even on a 64bit OS. And your code creates several data copies, so LabVIEW has to allocate more than just one big memory block to handle all your data!
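To see why a few data copies are already too many, here is a back-of-the-envelope sketch (Python for illustration only). It assumes the theoretical 4 GiB ceiling of a 32-bit address space; the amount actually usable is smaller, because the application itself lives in that space and each array typically needs one contiguous free block. `max_copies` is a hypothetical helper name.

```python
# How many copies of the big CDB array could fit under a 32-bit ceiling?
# Assumption: 4 GiB is an optimistic upper bound, not the usable amount.
ARRAY_BYTES = 321 * 200_000 * 16   # one 321 x 200000 array of CDB
ADDRESS_SPACE = 4 * 2**30          # 4 GiB, the 32-bit address-space limit


def max_copies(limit_bytes, array_bytes):
    """Return how many whole arrays fit below the given byte limit."""
    return limit_bytes // array_bytes


copies = max_copies(ADDRESS_SPACE, ARRAY_BYTES)
print("At most", copies, "copies, ignoring everything else in memory")
```

Even under this optimistic bound only a handful of copies fit, so every extra buffer allocation in the block diagram matters.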

02-25-2014 03:57 AM

I use 32-bit LabVIEW. If I change the whole system to single precision, do you think it will work, and how would I do that?

02-25-2014 04:06 AM

02-25-2014 04:10 AM

I think that if I change the whole exponential to single precision, I will cut the memory size in half. What do you think?
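The halving estimate can be checked with a quick sketch (Python for illustration only). It assumes a complex double (CDB) is stored as two 8-byte doubles and a complex single (CSG) as two 4-byte singles, and it counts one 321 × 200000 array only, not any extra buffer copies.

```python
import struct

# Element sizes of IEEE 754 double and single precision values.
DBL_BYTES = struct.calcsize("<d")
SGL_BYTES = struct.calcsize("<f")

# A complex element holds a real and an imaginary part.
CDB_BYTES = 2 * DBL_BYTES
CSG_BYTES = 2 * SGL_BYTES

ELEMENTS = 321 * 200_000
cdb_total = ELEMENTS * CDB_BYTES
csg_total = ELEMENTS * CSG_BYTES

print("CDB array:", round(cdb_total / 2**20, 1), "MiB")
print("CSG array:", round(csg_total / 2**20, 1), "MiB")
```

The single-precision array is half the size, but that saving applies per buffer; it does not remove the additional copies.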

02-25-2014 04:17 AM

Here is the whole VI.

02-25-2014 04:17 AM - edited 02-25-2014 04:21 AM

Hi engomar,

well, it could reduce memory footprint a little. You still have additional buffer allocations in your snippet as you can easily check using the Tools->Profiling menu item!

2pi and "0"-constant set to SGL as well…

With 50k as loop count the VI still needs ~440MB…

Why do you need such a big array? Can't you work with smaller array(s)/subsets?

Edit:

After looking at your VI: so you want to do some math on the big array created before, in combination with 6 other big arrays? Do you really think setting that exp-array to complex SGL will help in any case?

Please rethink your algorithm!

Work with smaller subsets of all your arrays!
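A minimal sketch of the subset idea, in Python for illustration only (in LabVIEW this would be a For Loop over array subsets). The actual math in the VI is not shown in this thread, so `weighted_sum` is a hypothetical stand-in: a sum of samples multiplied by a complex exponential. The point is only that the exponential factors are generated per block and discarded, so the full-size array never has to exist at once.

```python
import cmath


def block_ranges(total, block_size):
    """Yield (start, stop) index pairs that cover range(total) block by block."""
    for start in range(0, total, block_size):
        yield start, min(start + block_size, total)


def weighted_sum(samples, freq, start, stop):
    """Hypothetical per-block math: sum of samples[n] * exp(-2*pi*j*freq*n)."""
    return sum(
        samples[n] * cmath.exp(-2j * cmath.pi * freq * n)
        for n in range(start, stop)
    )


def chunked_sum(samples, freq, block_size):
    """Accumulate the partial results of all blocks into one total."""
    total = 0j
    for start, stop in block_ranges(len(samples), block_size):
        total += weighted_sum(samples, freq, start, stop)
    return total


# Usage: two blocks of 100,000 points give the same result as one pass.
samples = [float(n % 7) for n in range(200_000)]
print(chunked_sum(samples, 0.01, 100_000))
```

This only works directly when the final result can be combined from per-block results (sums, minima, element-wise outputs written to file, and so on); whether that holds for the real algorithm is an assumption.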

02-25-2014 05:12 AM

Hi GerdW,

I tried to think of how to reduce my algorithm, but the problem is that it has to be 200,000 points, because it depends on another VI that my coworker wrote.

The VI accepts 100,000 points without any problem. Is there a way to divide the data into 2 groups and then add them together? Maybe with a for loop, or by duplicating the VI?