Multi-channel timing difficulties

03-20-2012 08:05 PM - edited 03-20-2012 08:11 PM

Hi All,

I'm working with a 9227 C Series module and a 9205 C Series module, both plugged into a cDAQ-9178 USB chassis.

My problem is that I'm not sure I understand how the timing works when I'm measuring on several channels.

When I use just one channel of the 9205, I set the acquisition mode to Continuous, Samples to Read to 100 and Sample Rate to 1000.

I have a while loop with a DAQmx Read VI in it and a loop timer of 100 ms.

I configured the loop to stop after 100 runs.

When I run the program I get 99 samples per channel and a total of 9900 samples collected, and the whole run takes 10 seconds to complete. Does that sound feasible?

I then changed the loop rate to 10 ms. This time I got 10 samples per channel and a total of 1014 samples collected, and the whole thing took about a second.
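As a quick sanity check on the single-channel numbers, the expected sample count is just sample rate × run time. The sketch below is plain Python arithmetic with no DAQmx calls; it assumes the hardware clock runs at exactly the requested 1000 S/s and that the loop timer alone sets the run length (the function name is hypothetical).

```python
# Back-of-the-envelope check of the single-channel runs in the post.
# ASSUMPTION: the sample clock runs at exactly 1000 S/s and the run length
# is iterations * loop period.

def expected_samples(rate_hz: float, iterations: int, loop_period_s: float) -> float:
    """Samples the hardware clock should produce over the whole run."""
    return rate_hz * iterations * loop_period_s

# Run 1: 100 iterations with a 100 ms loop timer (about 10 s)
run1 = expected_samples(1000, 100, 0.100)
# Run 2: 100 iterations with a 10 ms loop timer (about 1 s)
run2 = expected_samples(1000, 100, 0.010)

print(f"run 1: expected {run1:.0f}, observed 9900")
print(f"run 2: expected {run2:.0f}, observed 1014")
```

Both observed totals are within about 1.5% of the expected values, which is why the single-channel behaviour looks reasonable.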

Now this I understand. 🙂

Here's the bit that I don't quite get.

I took out my single channel and created a task in MAX with three channels of the 9205 and three channels of the 9227.

I set the same acquisition mode, buffer size and sample rate as before, but this time, with a loop time of 10 ms, I get a total time of about 1 second, 19 samples per channel and a total of 1638 samples. If I change the loop time to 100 ms, I get a total run of 10 seconds, 161 samples per channel and a total of 15985 samples.

I'm guessing that the pattern of 19, 161, 1638 and 15985 hints at the 100-sample buffer being split between 6 channels, since 100/6 = 16.666?? I know... wild stab in the dark there. 🙂

The real problem for me is: how can I get nearly 16000 samples from a 1000 Hz sample rate over just 10 seconds??

Be gentle! I'm finding this rough going 😉

Thanks in advance

Chris

03-22-2012 12:14 PM

Hi Chris,

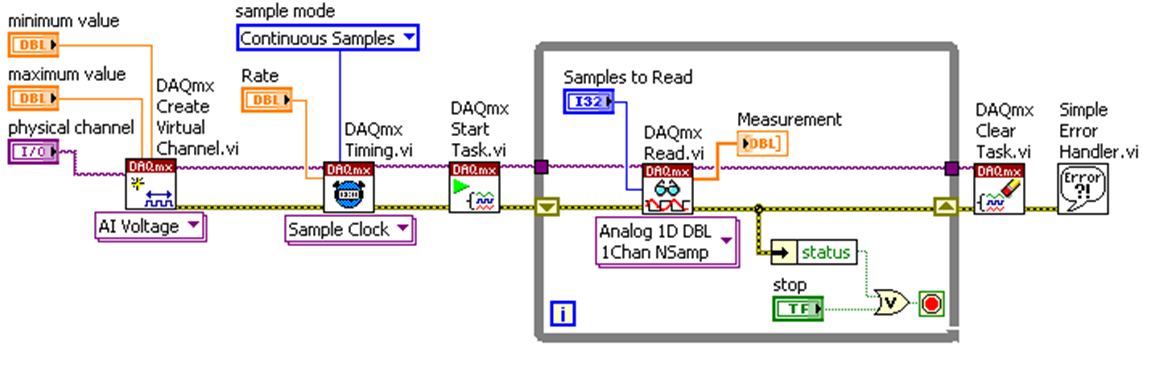

Does your code look something like this:

I wanted to explain a few points about this code first:

If you are using the Sample Clock on the DAQmx Timing VI, you are effectively using hardware timing. This means you are reliably acquiring 1000 samples into your DAQ buffer every second. The data from that buffer is then transferred to your PC buffer, in other words your RAM. The Samples to Read input of DAQmx Read defines how many samples you read from RAM into your graph, which in this example is called Measurement. For example, if you set the rate to 1000 Hz and Samples to Read to 500, your loop will run every 0.5 seconds. With Samples to Read at 100 the loop runs every 0.1 seconds, and at 1000 it runs once every second. Because everything is hardware timed, this is one of the instances where you don't necessarily need timing in your loop: to regulate how many samples you read, you just need to tweak the Samples to Read value. I would suggest changing this value a few times and seeing how it affects the number of samples you acquire.
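The relationship described above, loop period = Samples to Read ÷ sample rate, can be sketched in a few lines of Python. This assumes DAQmx Read blocks until the requested number of samples per channel is available and ignores any per-iteration overhead; the function name is hypothetical and nothing here talks to hardware.

```python
# Loop period when a blocking read of `samples_to_read` samples per channel
# paces the loop and the hardware sample clock runs at `sample_rate_hz`.
# ASSUMPTION: no other delay in the loop (no loop timer, negligible overhead).

def loop_period_s(sample_rate_hz: float, samples_to_read: int) -> float:
    if sample_rate_hz <= 0:
        raise ValueError("sample rate must be positive")
    return samples_to_read / sample_rate_hz

for n in (100, 500, 1000, 5000):
    print(f"Samples to Read = {n:5d} -> loop runs every {loop_period_s(1000, n):.1f} s")
```

At 1000 Hz this gives 0.1 s, 0.5 s, 1.0 s and 5.0 s for 100, 500, 1000 and 5000 samples respectively.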

I thought you would find these articles useful:

http://digital.ni.com/public.nsf/allkb/8EF7084B908ABF6686257589007C97DF?OpenDocument

http://digital.ni.com/public.nsf/allkb/546C861301BA597786256CDF007C7867?OpenDocument

http://ae.natinst.com/public.nsf/webPreview/3E3D74E26B8A5B83862575CA0053E4B5?OpenDocument

http://digital.ni.com/public.nsf/allkb/5782F1B396474BAF86256A1D00572D6E?OpenDocument

I hope this explains it a little and is gentle enough 😉

Kind regards

Applications Engineer

National Instruments UK&Ireland

03-22-2012 01:02 PM

Hi Mahdieh,

Thanks for your help. My code does look a little like yours, although I set up the timing in the task created in MAX. I reckon I have solved this: I believe the issue lies with the minimum sampling rate of the 9227, which is 1.613 kS/s. When I set the sample rate to 1000 Hz and get 1600-ish samples per second per channel, something has to be wrong, so I guess the 9227, seeing a rate below its minimum, defaults to the 'best it can do', hence my troubles.

Thanks for the Samples to Read tip, though. If I make my buffer bigger and just fix Samples to Read, I have no need to put a timer in my loop, right?
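Working backwards from the numbers in the first post supports this. The sketch below assumes the quoted totals are per channel and that the run times were roughly 1 s and 10 s as stated; both estimates come out well above the requested 1000 S/s and close to the 9227's 1.613 kS/s minimum. The names are hypothetical.

```python
# Estimate the sample rate the six-channel task actually ran at.
# ASSUMPTIONS: the "total" counts in the first post are per channel, and
# the run times are the approximate 1 s and 10 s quoted there.

REQUESTED_HZ = 1000.0

def effective_rate(samples_per_channel: int, run_time_s: float) -> float:
    """Average samples per second per channel over a run."""
    return samples_per_channel / run_time_s

observations = {
    "10 ms loop": (1638, 1.0),     # (samples per channel, run time in s)
    "100 ms loop": (15985, 10.0),
}

for label, (samples, seconds) in observations.items():
    rate = effective_rate(samples, seconds)
    print(f"{label}: ~{rate:.0f} S/s, {rate / REQUESTED_HZ:.2f}x the requested rate")
```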

Cheers

Chris

03-23-2012 05:19 AM

Hi Chris,

Yes, you are right about the 9227. It has a minimum sampling rate of 1.613 kS/s because it uses a Delta-Sigma ADC. If you use an external master timebase you can get this down to 390.625 Hz. This type of ADC gives you higher accuracy and sample rate, as well as simultaneous sampling on all your channels.
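A short sketch of where the 1.613 kS/s figure can come from. It assumes the 9227's data rates follow f_s = f_M / (256 × n) for n = 1 to 31, with a 12.8 MHz internal master timebase f_M (confirm this against the 9227 datasheet), and it assumes the driver coerces a request up to the next achievable rate. Under those assumptions the slowest rate is 12.8 MHz / (256 × 31) ≈ 1612.9 S/s, which reproduces the minimum quoted above, so a 1000 S/s request cannot be met. The function names are hypothetical.

```python
# Why a 1000 S/s request can end up near 1613 S/s on a delta-sigma module.
# ASSUMPTION: data rates are f_s = f_M / (256 * n), n = 1..31, with an
# internal master timebase f_M = 12.8 MHz (check the module datasheet).
# ASSUMPTION: a request is coerced to the slowest achievable rate that is
# not below it, or to the fastest rate if the request is above all of them.

F_M_INTERNAL_HZ = 12.8e6

def achievable_rates(f_m_hz: float = F_M_INTERNAL_HZ) -> list[float]:
    """All data rates the ADC can run at, slowest first."""
    return sorted(f_m_hz / (256 * n) for n in range(1, 32))

def coerce_rate(requested_hz: float, f_m_hz: float = F_M_INTERNAL_HZ) -> float:
    """Pick the achievable rate a request would land on (see assumption)."""
    rates = achievable_rates(f_m_hz)
    for rate in rates:
        if rate >= requested_hz:
            return rate
    return rates[-1]

rates = achievable_rates()
print(f"slowest: {rates[0]:.1f} S/s, fastest: {rates[-1]:.0f} S/s")
print(f"request 1000 S/s -> {coerce_rate(1000):.1f} S/s")
```

For any request below the minimum, every reasonable coercion policy lands on the slowest rate, and since all six channels share one task, that is consistent with the roughly 1600 samples per channel per second seen in the first post.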

The rule of thumb is to set Samples to Read to around half your sampling rate. This normally means you read all your acquired samples into LabVIEW in a reasonable time. If Samples to Read is too small, you can get a buffer overflow error. The reason is that all your samples go from your DAQ buffer into your RAM, where they just wait for LabVIEW to read them, and if LabVIEW reads them more slowly than they arrive, the buffer eventually fills up.

Likewise, if you have a very high Samples to Read, e.g. Rate = 1 kHz and Samples to Read = 5000, you will not see any values for 5 seconds: LabVIEW waits until all your samples are available and then displays them.

If you change Samples to Read from a very low value to a very high value, you will quickly see how it affects your response.
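The overflow case can be illustrated with a toy model of the PC-side buffer. It assumes the loop is paced by something other than the read itself (for example a loop timer, as in the first post), so each iteration the hardware adds rate × period samples and the read removes at most Samples to Read. The function name, buffer size and iteration cap are hypothetical, and real DAQmx buffering has more detail than this.

```python
# Toy model of a continuous acquisition whose loop is paced by a timer.
# Each iteration the hardware adds rate * period samples to the buffer and
# the read removes up to samples_to_read. If reads fall behind, the backlog
# grows until it exceeds the buffer size, where the driver would report an
# overflow. ASSUMPTION: hypothetical, simplified model, not DAQmx itself.

def iterations_until_overflow(rate_hz: float, samples_to_read: int,
                              loop_period_s: float, buffer_size: int,
                              max_iterations: int = 10_000):
    """Return the iteration on which the buffer overflows, or None."""
    backlog = 0.0
    for i in range(1, max_iterations + 1):
        backlog += rate_hz * loop_period_s        # samples acquired this iteration
        if backlog > buffer_size:
            return i
        backlog -= min(samples_to_read, backlog)  # samples read this iteration
    return None

print(iterations_until_overflow(1000, 50, 0.1, 1000))   # reads fall behind
print(iterations_until_overflow(1000, 100, 0.1, 1000))  # reads keep up
```

At 1000 S/s with a 100 ms loop timer and a 1000-sample buffer, reading 50 samples per iteration overflows on the 20th iteration, while reading 100 keeps up indefinitely. Without a loop timer, a blocking read paces the loop itself, which is why a fixed Samples to Read and no timer is usually enough.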

Good luck with the rest of your project.

Kind regards,

Applications Engineer

National Instruments UK&Ireland