Formula with U16 input and DBL result

Solved! 09-26-2011 03:24 AM

Hello, I'm new to LabVIEW and I'm using a VI previously made by a colleague.

I've found a problem in a simple formula which has a U16 input, X1. The formula is (X1*10)/2**16-5, and it is connected to a DBL output.
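For reference, here is a minimal Python sketch of what the formula should produce when every step is evaluated in double precision. The function name `scale_dbl` is made up for illustration; only the expression itself comes from the post. Note that `**` binds tighter than `/`, so the expression reads as (X1*10)/65536 - 5.

```python
def scale_dbl(x1: int) -> float:
    """Evaluate (X1*10)/2**16-5 with X1 widened to a double first."""
    return (float(x1) * 10) / 2**16 - 5


# Lowest, middle and highest U16 inputs.
for x1 in (0, 32768, 65535):
    print(x1, scale_dbl(x1))
# 0 -5.0
# 32768 0.0
# 65535 4.999847412109375
```

In other words, the full U16 range is mapped onto roughly -5 to +5, which only works if the intermediate product X1*10 is allowed to exceed 65535.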

I'm using LabVIEW 2011. When I open the VI on Windows XP it works fine, but on Windows 7 it gives the wrong result. I debugged it and found that if I place only X1*10 in the formula, the result on Windows 7 is limited to 65535 (16 bit?), while on Windows XP it gives the right result.
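The symptom can be reproduced outside LabVIEW with a rough Python sketch. It assumes the intermediate product is clamped to the U16 range, as observed above; the helper names (`saturate_u16`, `formula_u16_intermediate`, `formula_dbl`) are hypothetical and this is not how LabVIEW is implemented internally.

```python
U16_MAX = 2**16 - 1  # 65535


def saturate_u16(value: float) -> int:
    """Clamp a value into the U16 range (assumed behaviour, per the observation above)."""
    return max(0, min(U16_MAX, int(value)))


def formula_u16_intermediate(x1: int) -> float:
    """X1*10 is kept as U16 before the rest of the formula runs."""
    product = saturate_u16(x1 * 10)
    return product / 2**16 - 5


def formula_dbl(x1: int) -> float:
    """X1 is widened to a double before the multiplication."""
    return (float(x1) * 10) / 2**16 - 5


# Smallest input for which the two versions disagree.
first_bad = next(
    x for x in range(U16_MAX + 1)
    if formula_u16_intermediate(x) != formula_dbl(x)
)
print(first_bad)                          # 6554
print(formula_dbl(65535))                 # 4.999847412109375
print(formula_u16_intermediate(65535))    # -4.0000152587890625
```

Under that assumption, every input above 6553 gives a wrong result, because 6554*10 already exceeds 65535.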

On Windows XP, the "result" label and the wire to the numeric output are orange (DBL?), while on Windows 7 they stay blue (U16?) and a red dot appears on the connection to the numeric output.

Does anyone know what might be the cause of this different and annoying behaviour and how to fix that?

09-26-2011 03:54 AM

Hello,

I am an Applications Engineer at National Instruments UK.

From what I understand, your VI works fine on Windows XP but gives the wrong result on Windows 7. To help, it would be useful to know what you have already tried while troubleshooting. Have you tried right-clicking the numeric indicator on Windows 7 and selecting Representation >> U16?

If you have already attempted the above step then it may be useful for you to attach the VI in a reply to this post and I will try to run it on Windows 7 and see if I can make it work.

Let me know if this helps and if not I will continue to assist you. Best of luck.

Regards

Applications Engineer

National Instruments UK

09-26-2011 03:55 AM

Hello Renatogsousa,

As I understand it, when you run your VI on a Windows 7 system, the numeric value isn't converted to the needed number of bits at the same point as it was on Windows XP, and this makes the result of your calculation wrong.

This is because LabVIEW has to convert the numeric value from U16 to DBL to pass it to a DBL output. The different operating system is probably making LabVIEW move the point at which it converts the value from U16 to DBL.

A simple way to fix this would be to change the representation of the U16 input to DBL (assuming that it doesn't need to be only 16 bits). This can be done by following the instructions at this link:

http://zone.ni.com/reference/en-XX/help/371361H-01/lvhowto/changing_numeric_represent/

If the input needs to stay as U16 then you might find that changing the representation of the constant of 10 in the X1*10 part of the calculation to a DBL will cause LabVIEW to adjust the numeric value to a DBL representation at the point that it does this part of the calculation. You would also need to make sure that the rest of the numbers in your calculation are set to DBL representation and this will make sure that LabVIEW changes the representation at the right point.

Let me know how you get on with this, if you have any problems then I can assist you further.

Regards

Controls Systems Engineer

STFC

09-26-2011 05:58 AM

Thank you very much, David and James.

I could solve it by changing the input variable from U16 to DBL; now it works fine on both OSes.

James, I could change it to DBL but the link is not working...

What do you mean by "changing the representation of the constant of 10 in the X1*10 part of the calculation to a DBL"? Is it to change from 10 to 10.0, for example? If so, that alone didn't work.

09-26-2011 07:22 AM

Hi Renatogsousa,

Glad you've got it solved. Apologies for the broken link; hopefully this one works:

Changing numeric representations

What I meant by "changing the representation of the constant of 10 in the X1*10 part of the calculation to a DBL" was that if the constant of 10 is in a blue box (i.e. is not a DBL), then you could right-click on it and change its representation, in the same way that you've already done for the input. This would have made LabVIEW change the data type from U16 to DBL at the point where it is multiplied by the constant of 10.

I've included some screenshots in the hope that it will make what I've said a bit clearer.

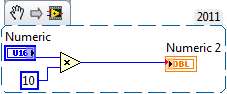

When the constant 10 is set as a U16 the VI looks a bit like this

Notice the red dot on Numeric 2. This means that the data type is converted to a DBL at that point.

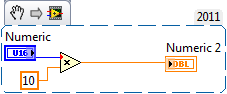

When the constant 10 is set as a DBL the VI looks like this

Notice the red dot is at the input to the multiply. This means that the data is converted to a DBL at this point instead.
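The difference between the two diagrams can be sketched in Python as a toy model. It assumes a multiply adopts the wider of its two input types, and that an overflowing U16 product is clamped to 65535 as reported earlier in the thread (a real U16 multiply may wrap around instead). The function `multiply` and the use of Python `int`/`float` to stand in for U16/DBL are illustrative only.

```python
from typing import Union

U16_MAX = 65535
Number = Union[int, float]


def multiply(x1: int, constant: Number) -> Number:
    """Toy model: int stands in for U16, float stands in for DBL."""
    if isinstance(constant, float):
        # DBL constant: X1 is widened at the multiply input,
        # so the product is computed in double precision.
        return float(x1) * constant
    # U16 constant: the product stays U16 and is only converted
    # to DBL later, at the indicator (clamping is an assumption).
    return min(x1 * constant, U16_MAX)


print(multiply(65535, 10))    # 65535     (conversion happens too late)
print(multiply(65535, 10.0))  # 655350.0  (conversion happens at the multiply)
```

The position of the red dot in each screenshot corresponds to the branch taken here: converting after the multiply cannot recover a value that was already limited to 16 bits.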

Since you've solved the problem by changing the input to a DBL this is no longer relevant, but it might help you in the future if you have a situation where the input HAS to stay as U16 but you still want the result as a DBL.

Glad it's worked for you.

Kind regards

Controls Systems Engineer

STFC