From 04:00 PM CDT – 08:00 PM CDT (09:00 PM UTC – 01:00 AM UTC) Tuesday, April 16, ni.com will undergo system upgrades that may result in temporary service interruption.

We appreciate your patience as we improve our online experience.

08-27-2009 03:18 PM - edited 08-27-2009 03:19 PM

Before you say yes...

I am using a cRIO device with the Scan Mode interface. The values that Scan Mode returns are double-precision floating point. Apparently I am supposed to be able to choose between double-precision floating point ("calibrated") and fixed-point ("uncalibrated") data, but this option appears to be exclusive to the FPGA interface and is not available with the Scan Engine. Both data types are 64 bits per value, so when it comes to size on disk, either way is basically the same anyway.

The system continuously records 13 channels of double-precision floating point at 200 Hz. Using the binary file write method, I have measured this at about 92 MB/hr to disk (over 120 MB/hr with TDMS, and a lot more with Write to Spreadsheet). Simply put, 92 MB/hr is too much data to disk on this system.
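As a rough cross-check of the figures above, the raw sample payload for 13 channels at 200 Hz can be worked out directly. This is only a sketch: it counts sample bytes and ignores timestamps, headers, and any file-format overhead, so it is not expected to match the measured on-disk rate. The function name is made up for illustration.

```python
def payload_mb_per_hour(channels, rate_hz, bytes_per_sample):
    """Raw sample payload in MB (10**6 bytes) per hour, excluding file overhead."""
    return channels * rate_hz * bytes_per_sample * 3600 / 1e6

dbl = payload_mb_per_hour(13, 200, 8)  # double precision, 8 bytes per value
sgl = payload_mb_per_hour(13, 200, 4)  # single precision, 4 bytes per value
print(f"DBL: {dbl:.2f} MB/hr, SGL: {sgl:.2f} MB/hr")
```

Whatever the overhead turns out to be, halving the bytes per sample halves the payload part of the rate.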

The modules I am recording from (the 9236, 9237, and 9215 C Series modules) have ADCs of 24 bits or fewer. Does this mean I don't need 64 bits to represent the number and maintain the same accuracy?

Can I coerce/cast the double-precision floating point values I am receiving from the scan engine i/o variables to another, smaller data type like single-precision floating point and still maintain the same accuracy?


08-27-2009 05:40 PM

If you configure them properly, any data you may lose will almost certainly be related to the bit noise of your ADC. SGL has a 24-bit significand (mantissa), so it can (in principle) hold the exact reading of your ADC. The conversion to DBL (performed by your interface) and then to SGL (done by you) is certainly lossy, but that loss is probably in the last bit or two. I do not know the specifics of the models you listed, but if those ADCs have the dynamic range to relegate bit noise to the 24th bit, I'd be surprised, and interested in getting a few myself. Most likely, the last few bits are just noise and the conversion should not cause you any noticeable problems. I don't see any reason not to try; the savings in disk space seem worth it.

08-27-2009 05:57 PM

08-28-2009 06:11 AM

Hi there

The ADC delivers a U32 raw value, so you will lose data when converting U32 to SGL (SGL is also 32 bits but covers a much wider value range than U32, so it cannot represent every U32 value exactly). Can you read out the raw U32 data from the ADC and save that? I don't know about C Series modules, but DAQmx allows you to read the raw ADC value.
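To illustrate the point about integer raw values, here is a small sketch that emulates the conversion to SGL with Python's `struct` module. The helper name `to_sgl` is hypothetical. It suggests that integer codes that fit in 24 bits survive the conversion, while values that use the full 32 bits generally do not.

```python
import struct

def to_sgl(x):
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

code_24bit = 2**24 - 1   # largest unsigned 24-bit code
code_32bit = 2**32 - 1   # largest U32 value

# A 24-bit code fits in the SGL significand and comes back unchanged.
print(to_sgl(float(code_24bit)) == code_24bit)
# A full 32-bit value needs more significand bits than SGL has, so it is rounded.
print(to_sgl(float(code_32bit)) == code_32bit)
```

So whether data is lost depends on how many of the 32 raw bits actually carry ADC information.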

08-28-2009 07:03 AM

Nickerbocker wrote:

Between noise and hardware accuracy, I doubt it makes much of a difference.

You can test it by looking at the difference between a DBL and the same DBL converted to SGL. But I support the hint from Nickerbocker; I do not think it will make a difference.
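The comparison suggested above can also be sketched offline. The example below assumes a hypothetical signed 24-bit converter spanning ±10 V; the actual ranges and resolutions of the 9236, 9237, and 9215 differ, so the numbers are illustrative only. `to_sgl`, `FULL_SCALE`, and `LSB` are names invented for this sketch.

```python
import struct

def to_sgl(x):
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

FULL_SCALE = 10.0            # assumed input range in volts (hypothetical)
LSB = FULL_SCALE / 2**23     # voltage step of a signed 24-bit code

def worst_sgl_error(codes):
    """Largest |DBL - SGL| over the given ADC codes, in volts."""
    return max(abs(to_sgl(c * LSB) - c * LSB) for c in codes)

# Sample the code range with a stride to keep the run short.
codes = range(-2**23, 2**23, 997)
err = worst_sgl_error(codes)
print(f"LSB = {LSB:.3e} V, worst SGL error = {err:.3e} V")
print("error below half an LSB:", err < LSB / 2)
```

Under these assumptions the rounding error stays under half a step, so each sampled code could still be recovered from the SGL value.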

08-28-2009 10:07 AM

For some reason the scan interface isn't letting me output the raw U32 values. I think it might have something to do with the fact that I am using both the Scan Engine and FPGA interfaces in the project, but I am not sure. Any ideas why this is happening?

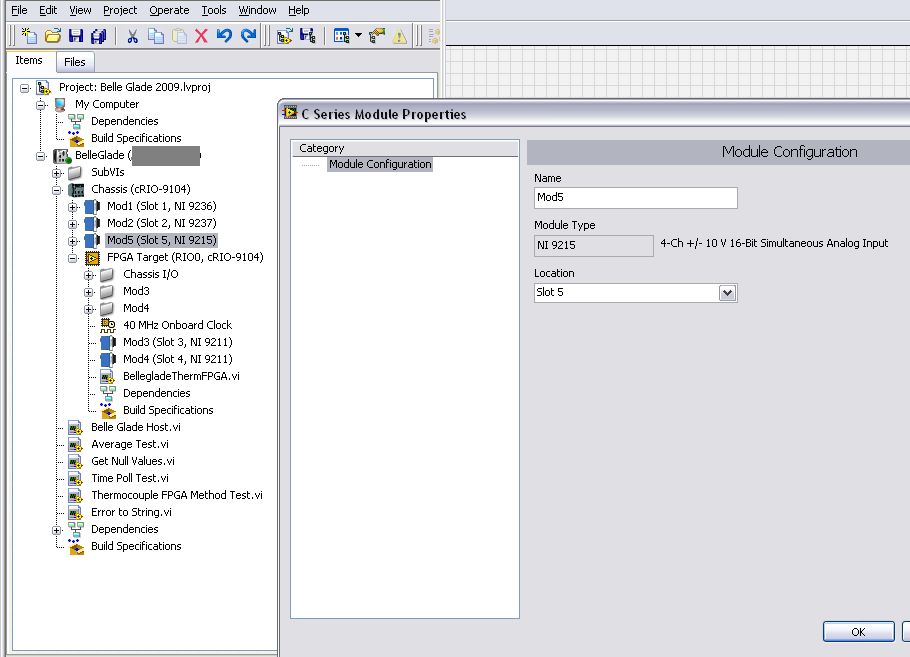

This is a picture of me right-clicking one of the modules to try to get it to output raw U32 values, as this knowledgebase article says I can.

I put in a service request (Reference #7256901) because, to be honest, I have to know for sure what's going on. I am not one of the engineers/scientists who designed the experiment, so I cannot say whether it is OK to chop some of the end of the number off. I would rather be able to record all the data in a smaller amount of disk space than go tell them we have to compromise somewhere, whether on sample rate or precision.

08-28-2009 01:07 PM

I guess I wasn't reading the article carefully enough.

"As of LabVIEW 8.6, there is an option to use the cRIO Scan Engine to interface modules directly to LabVIEW Real-Time by using I/O variables. In this case, the option to select raw or calibrated is not available in the properties dialog. When using Scan Mode, all data is automatically returned scaled and calibrated."

Is there any way around this?

08-31-2009 03:10 PM

09-01-2009 12:58 AM

Hi there

I was curious, so I did some testing myself. I see that the voltage resolution and the difference between the SGL and DBL representations are both ~10^-6 V, giving an error of ~1 bit, so you will lose about 1 bit of information. But it has already been stated that the number of effective bits (NEB) is < 24 (though I haven't read the data sheet), so using SGL should be OK.

09-01-2009 03:06 AM

Technically you'll lose 2 bits of information, as a Single's mantissa is 22 bits (according to the help), though it's probably of zero relevance.

Just for the sake of it, you can multiply your double by 2^32, store it in a U32 integer, and use it as a fixed-point value ... It would only need to be done when reading and writing, as calculations can be done as doubles.

That is if you're after that last bit and still want less data to write on disk. 🙂

(Not sure if it's helpful, I just like the theory.)
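A minimal sketch of the fixed-point idea above, written in Python for illustration. Multiplying by 2^32 only fits in a U32 for values in [0, 1), so this version first normalises the reading against an assumed measurement range. The range `LO`/`HI` and the function names are hypothetical and not part of the original suggestion.

```python
import struct

LO, HI = -10.0, 10.0     # assumed measurement range (hypothetical)
SCALE = 2**32

def to_u32(value):
    """Map a value in [LO, HI) to an unsigned 32-bit fixed-point code."""
    frac = (value - LO) / (HI - LO)        # normalise to [0, 1)
    code = int(frac * SCALE)               # truncate to an integer code
    return min(max(code, 0), SCALE - 1)    # clamp to the U32 range

def from_u32(code):
    """Recover the approximate value from a U32 code."""
    return LO + (code / SCALE) * (HI - LO)

reading = 1.234567890123
packed = struct.pack("<I", to_u32(reading))          # 4 bytes on disk
restored = from_u32(struct.unpack("<I", packed)[0])
print(len(packed), abs(restored - reading))
```

With this mapping the step size is (HI - LO) / 2^32, so the resolution is uniform across the range rather than relative to the magnitude as it is with SGL.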

/Y