What is the timing mechanism used by LV Qs under the hood?

09-08-2017 08:07 AM

This isn't a problem or anything, just something I'm curious about.

I was doing a project using a Raspberry Pi, and because the Pi doesn't have a real-time clock (RTC), its system time isn't maintained between power cycles. Anyway, I was debugging a running VI which used the Q timeout input as its loop timer to execute every ~50 ms if no message was received. While that VI was executing to collect some debug data, I separately updated the Linux OS time, as it was a few hours behind due to the power cycling. When I did this, the Q timeout hung, I'm assuming because the new OS time was further in the future than some reference time the Q must have taken during the last timeout.

So this would point to the Q VIs, at least on Linux, relying on the OS system time to perform their timeout operation. Does anyone know with certainty if this is correct? Like I said, just curious.
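
For readers unfamiliar with the pattern, here is a minimal sketch in Python (not LabVIEW, and not a statement about how LabVIEW queues are implemented) of using a dequeue timeout as the loop timer. The function name `run_loop` and the `"stop"` message are hypothetical, chosen only for illustration.

```python
import queue


def run_loop(messages, timeout_s=0.05, max_iterations=5):
    """Dequeue with a timeout; a timeout is treated as the periodic 'tick'."""
    log = []
    for _ in range(max_iterations):
        try:
            # Blocks until a message arrives or timeout_s elapses.
            msg = messages.get(timeout=timeout_s)
        except queue.Empty:
            # No message within the timeout: do the periodic work here.
            log.append(("timeout", None))
            continue
        log.append(("message", msg))
        if msg == "stop":
            break
    return log


if __name__ == "__main__":
    q = queue.Queue()
    q.put("hello")
    print(run_loop(q, timeout_s=0.05, max_iterations=3))
```

If the timeout never fires, the loop body never runs again, which is the kind of hang described above.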

I saw my father do some work on a car once as a kid and I asked him "How did you know how to do that?" He responded "I didn't, I had to figure it out."

09-08-2017 08:23 AM

We do not have insight into the internals of the Queues and how they are implemented in various OS's.

I can speculate based on what I have seen of OS internals and scheduling systems.

What I would expect is for the waiting thread to be placed in a "Resource Wait State" and added to a waiting queue that contains all of the threads that cannot proceed until a resource is made available. In the case of waiting for a queue, that resource would be a handle pointing to where the received queue entry is located in memory OR a timeout. The timeouts are the part you are questioning.

I would have to speculate what is the nature of time-outs in the OS you are working with. So it is not a LV question but more an OS related Q.

The only aspect of LV that may give you a hint would be NI-PAL, which is used to provide a uniform interface between LV and the various OS's on which it can run. But the chance of an NI-PAL developer coming down from the Ivory Tower is slim. I think you would be better served researching how timeouts are handled in the OS that is being used.

Just my 2 cents,

Ben

09-08-2017 08:43 AM - edited 09-08-2017 08:44 AM

Ben wrote: I would have to speculate what is the nature of time-outs in the OS you are working with. So it is not a LV question but more an OS related Q.

If this is the same on Windows, I think it is a serious LabVIEW problem, not an OS problem. Even if it's just on Linux, it sounds like a bug (but I would care less).

09-10-2017 03:10 PM - edited 09-10-2017 03:17 PM

Whatever method is being used on Windows (I can't comment with any certainty on Linux, but I'd be surprised if it's conceptually different), it has to go through the Win32 API: either accessing a system timer (driven by a CPU timer interrupt) or, as you suspect, using the RTC to measure relative time passed.

So I created a very simple test VI (boolean queue, attempting to dequeue in a loop with 50ms timeout). Then ran this while monitoring all imported Win32 APIs for LabVIEW.exe with WinApiOverride64 (http://jacquelin.potier.free.fr/winapioverride32/). The important part of this is that the while loop contained the single Dequeue node with the timeout of 50ms as well as a Stop control (I couldn't help myself).

Interestingly, in this simple VI there are a lot of continual calls to GetSystemTimeAsFileTime() as well as other constructs (like critical sections and WaitForSingleObject) that look to me like part of the LabVIEW Execution System scheduling logic for the code clumps generated during compilation. The tool I am using only monitors imported or exposed API calls, so we can't see any internals.

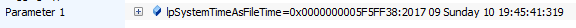

I looked a bit deeper and found a recurring pattern which I think tells the story:

The pattern I can see is an eventual last call to GetSystemTimeAsFileTime (I'm going to shorten this to GST), then a call to Sleep (0x37 ms is provided as input), then another call to GST. Notice the difference in timestamps is 50 ms. This same time delta exists in a half-dozen instances of this pattern; not a coincidence. Weirdly, the Sleep argument is 0x37, which is 55 decimal (the parameter is a DWORD, a 32-bit unsigned integer); it is always the same value, which is counter-intuitive (https://msdn.microsoft.com/en-us/library/windows/desktop/ms686298(v=vs.85).aspx), but I haven't dug any further into this yet.

If I get time I will try a similar experiment with different timeout values and see if the pattern holds. But I would say that, at least on Windows, the timeout is indeed using the system time in some manner to control the timeout functionality.

09-10-2017 04:01 PM - edited 09-10-2017 04:02 PM

Looking into this a little further, I can see that I was too hasty in my first conclusion (that's what you get when you make assumptions). For one, the Sleep is being performed by a different thread (perhaps that's the LabVIEW scheduler thread), so I think it is entirely unrelated to this. I also think the timeout is not specifically linked to relative time from the GST function; here is my reasoning:

Looking at the thread that is calling the GST function, it waits on a single object (WaitForSingleObject) before continuing. This is where the 50 ms delay comes from (the dwMilliseconds parameter). When I adjust the Dequeue timeout to 20 ms, I see that the value of this parameter changes and the time between the GST calls before and after drops to 20 ms. The return value from WaitForSingleObject is 0x102, which indicates a timeout (https://msdn.microsoft.com/en-us/library/windows/desktop/ms687032(v=vs.85).aspx). So I'm guessing that the Queue primitives use a mutex or similar internally to signal when data is available, but that is simply speculation.

It also suggests that the timeout is unrelated to the system time per se and is managed purely via the Win32 API call. Why the GST functions are being called is anyone's guess; perhaps this is part of LabVIEW's internal processing or debugging features.

As to how the WaitForSingleObject() API function determines its timeout, and whether this is related to the system clock, I can't say for sure without a lot more digging. I guess the only ones who can confirm for sure are the people in the ivory tower at NI.
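
The observed behaviour (a timed wait that returns a timeout code when nothing is signaled) can be mimicked with a rough Python analogue. This is only a sketch: `threading.Event` stands in for whatever synchronization object the Queue primitives really use, which is unknown, and `wait_for_data` is a hypothetical helper. The two constants are the documented Win32 return values.

```python
import threading
import time

WAIT_OBJECT_0 = 0x000  # Win32 code: the object was signaled
WAIT_TIMEOUT = 0x102   # Win32 code: the timeout interval elapsed


def wait_for_data(data_ready, timeout_ms):
    """Wait on an event with a timeout; return (Win32-style code, elapsed ms)."""
    start = time.monotonic()
    # Event.wait returns True if the event was set, False if the wait timed out.
    signaled = data_ready.wait(timeout_ms / 1000.0)
    elapsed_ms = (time.monotonic() - start) * 1000.0
    return (WAIT_OBJECT_0 if signaled else WAIT_TIMEOUT), elapsed_ms


if __name__ == "__main__":
    event = threading.Event()
    code, elapsed_ms = wait_for_data(event, 50)  # nothing signals: times out
    print(hex(code), round(elapsed_ms))
    event.set()                                  # simulate "data available"
    code, elapsed_ms = wait_for_data(event, 50)  # returns without waiting
    print(hex(code), round(elapsed_ms))
```

Changing the timeout argument changes the measured delay in the same way the trace showed when the Dequeue timeout went from 50 ms to 20 ms.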

09-11-2017 04:34 AM - edited 09-11-2017 04:42 AM

I'm pretty sure that any timeouts in LabVIEW are related to the GetTickCount() API on Windows. It's the only API that has traditionally allowed some kind of ms resolution since the beginnings of LabVIEW (more accurately, in Windows 3.1 it really had a resolution of 55 ms, which is the traditional IBM PC timer tick interval; Windows NT 4 improved that to 10 ms).

The important part in the MSDN documentation in relation to the original topic in this thread is this:

The resolution of the GetTickCount function is limited to the resolution of the system timer, which is typically in the range of 10 milliseconds to 16 milliseconds. The resolution of the GetTickCount function is not affected by adjustments made by the GetSystemTimeAdjustment function.

Also it seems unlikely that the adjustment of the system time would cause any irregularities in the monotonicity of the GetTickCount() value. The interaction with this is rather in the opposite direction, where the system time is driven by the GetTickCount() value to provide ms resolution and regularly synchronized with the realtime clock value which is traditionally a pretty low speed IO device that you don't want to hit every few ms to read its value.

Linux may be implemented somewhat differently, although I would think that the Linux syscall API also provides functionality similar to the Windows tick count that is not really driven by the realtime clock. On further investigation, it seems that the LabVIEW MilliSecs() API, at least in older LabVIEW versions, does call gettimeofday() to retrieve the seconds and microseconds value under Linux, which is suboptimal. Better would be to call clock_gettime(CLOCK_MONOTONIC, &value), which it likely still doesn't do according to your findings. Most likely clock_gettime() wasn't available on Linux systems back in the mid-90s when this code was written, and the routine has not been revisited since because it usually just works anyway.
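
To illustrate why a gettimeofday()-style wall clock is a poor basis for timeouts, here is a small Python simulation. It is a sketch under stated assumptions: it uses two simulated millisecond counters rather than the real OS clocks, it assumes a simple "elapsed = now - start" timeout check, and the names `SimulatedClocks` and `polls_until_timeout` are hypothetical. It does not claim to reproduce LabVIEW's code or the exact hang reported at the start of this thread.

```python
HOUR_MS = 3_600_000


class SimulatedClocks:
    """Two simulated millisecond clocks: wall time can be stepped, monotonic cannot."""

    def __init__(self, wall_start_ms=1_000_000_000):
        self.mono_ms = 0
        self.wall_ms = wall_start_ms

    def advance(self, dt_ms):
        # Normal passage of time moves both clocks equally.
        self.mono_ms += dt_ms
        self.wall_ms += dt_ms

    def step_wall(self, delta_ms):
        # Models someone setting the system time; only the wall clock moves.
        self.wall_ms += delta_ms


def polls_until_timeout(use_monotonic, wall_step_ms, timeout_ms=50,
                        poll_ms=10, step_at_poll=2, max_polls=1000):
    """Return how many polls pass before the timeout fires, or None if it never does."""
    clocks = SimulatedClocks()
    read = (lambda: clocks.mono_ms) if use_monotonic else (lambda: clocks.wall_ms)
    start = read()
    for poll in range(1, max_polls + 1):
        clocks.advance(poll_ms)
        if poll == step_at_poll:
            clocks.step_wall(wall_step_ms)  # system time is changed mid-wait
        if read() - start >= timeout_ms:
            return poll
    return None


if __name__ == "__main__":
    print(polls_until_timeout(False, -HOUR_MS))  # wall clock, time set back
    print(polls_until_timeout(False, +HOUR_MS))  # wall clock, time set forward
    print(polls_until_timeout(True, -HOUR_MS))   # monotonic, time set back
    print(polls_until_timeout(True, +HOUR_MS))   # monotonic, time set forward
```

In this simulation the wall-clock version never times out within the polling budget when the time is set back, and fires early (at poll 2) when it is set forward, while the monotonic version fires at poll 5 (50 ms) in both cases.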