Use Mean.vi or just math primitives? (performance, long term stability)

03-29-2023 10:16 AM

Hello all. I have 5 DBLs whose mean I calculate using Mean.vi. This may happen tens of thousands of times per day in a 24/7 program, which got me wondering: since there are only 5 numbers, is the DLL used in Mean.vi "better", or is writing out the very simple math using primitives "better"? Or a math node? It may come down to preference or philosophy, but what would you do, and why?

03-29-2023 10:23 AM

If it is always 5 DBL scalars, I would go with the primitives of a compound addition and a division.
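For readers who think in text rather than block diagrams, the suggestion above amounts to roughly the following. This is a Python sketch of the arithmetic only, not LabVIEW code; the function name `mean_of_five` is made up for illustration.

```python
def mean_of_five(a: float, b: float, c: float, d: float, e: float) -> float:
    """Mean of exactly five doubles: one compound addition, then one division."""
    # Hypothetical helper: mirrors a single multi-input add followed by a divide.
    return (a + b + c + d + e) / 5.0


print(mean_of_five(1.0, 2.0, 3.0, 4.0, 6.0))  # prints 3.2
```

With a fixed count of five there is no array to build and no size to query, which is the appeal of the primitive-only approach.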

Soliton Technologies

03-29-2023 10:55 AM

Mean adds lvanlys.dll as a dependency.

Not a terrible thing per se, but this particular DLL had issues with AMD CPUs (those are supposed to be solved in LV23).

Mean PtByPt VI doesn't have this dependency, but it's in a lib, so not disconnecting the lib when building an exe might still add lvanlys or other dependencies.

03-29-2023 11:36 AM

wiebe@CARYA wrote:

Mean adds lvanlys.dll as a dependency.

Not a terrible thing per se, but ...

Thanks. In this case the math is so elementary and the inputs so few that ANY reason is good enough to do the math "longhand" rather than using Mean.vi.

03-29-2023 08:46 PM

I always use the array summation node and divide primitives. I have found they are faster and use less memory than the Mean VI.

But the Mean VI does some error checking, i.e., looking for NaNs in the stream; primitives don't do that.

03-29-2023 09:15 PM

My preference (for whatever it is worth) is to use the built-in statistics functions. The key factors driving it are modularity, VI documentation, and scope creep / engineering change control.

Suppose your Test Engineering team decides that Mean is just not enough and would really like to see Std Dev or Variance. Or perhaps Mode is a better statistic for the application? There is one VI to change, and the VI documentation change is done automagically by relinking to the new help tag in the LabVIEW Help file. The Engineering Change Order is simple to write, and the code review is bulletproof! Oh yeah, no matter how many times you construct array sum ÷ array size from the primitives, you will always run the risk of crossing a wire or dropping the wrong primitive. Or worse, having some make-space operations drift the primitives so far from each other that the Meatball gets lost in the Spaghetti. Food for thought 🤔

"Should be" isn't "Is" -Jay

03-30-2023 03:41 AM

@mcduff wrote:

I always use the array summation node and divide primitives. I have found they are faster and use less memory than the Mean VI.

But the Mean VI does some error checking, i.e., looking for NaNs in the stream; primitives don't do that.

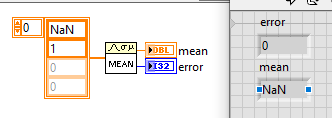

The PtByPt might do that, but the normal Mean.vi doesn't.
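To make the NaN point concrete in text form: a plain sum-and-divide mean does no input checking, so one NaN sample turns the whole result into NaN. The Python sketch below is only an analogue of that arithmetic and says nothing about what Mean.vi or the PtByPt VI do internally; `mean_sum_divide` and `mean_checked` are hypothetical names.

```python
import math


def mean_sum_divide(values):
    """Unchecked mean: a NaN anywhere in the input propagates to the result."""
    return sum(values) / len(values)


def mean_checked(values):
    """Same arithmetic, with an explicit NaN check added by the caller."""
    if any(math.isnan(v) for v in values):
        raise ValueError("input contains NaN")
    return sum(values) / len(values)


samples = [1.0, 2.0, float("nan"), 4.0, 5.0]
print(math.isnan(mean_sum_divide(samples)))  # prints True
```

If NaN detection matters for the application, it has to be added explicitly, whichever way the mean itself is computed.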

03-30-2023 10:47 AM - edited 03-30-2023 10:48 AM

wiebe@CARYA wrote:

@mcduff wrote:

I always use the array summation node and divide primitives. I have found they are faster and use less memory than the Mean VI.

But the Mean VI does some error checking, i.e., looking for NaNs in the stream; primitives don't do that.

The PtByPt might do that, but the normal Mean.vi doesn't.

Thanks for the correction. Maybe in the past it did? Or maybe it was this thread that led me to believe that. I should have reread it before responding.

EDIT: Show what error codes are returned?

03-30-2023 03:08 PM

Errors may be representation overflow, Inf in the input array, or a broken link in the DLL. If you see the last one, you really worked hard to overload the mean call inside a DLL that you should not have replaced with your own junk.
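As a rough illustration of the first two conditions, here is how overflow and Inf show up in plain double-precision arithmetic. This is a Python sketch under my own assumptions: the status strings and the name `mean_with_status` are invented for the example and are not the error codes Mean.vi returns.

```python
import math


def mean_with_status(values):
    """Return (mean, status); status flags Inf in the input or overflow in the sum."""
    if any(math.isinf(v) for v in values):
        return math.nan, "inf in input"
    total = 0.0
    for v in values:
        total += v  # IEEE 754 double addition overflows silently to +/-inf
    if math.isinf(total):
        return math.nan, "overflow in sum"
    # NaN inputs are not checked here; they would pass through as a NaN mean.
    return total / len(values), "ok"


print(mean_with_status([1.0, 2.0, 3.0]))           # prints (2.0, 'ok')
print(mean_with_status([1.7e308, 1.7e308])[1])     # prints overflow in sum
print(mean_with_status([1.0, float("inf")])[1])    # prints inf in input
```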

04-03-2023 09:49 AM

wiebe@CARYA wrote:

this particular dll had issues with AMD CPUs (but those are supposed to be solved in LV23)

Really? What was this issue?