U32 + "digits of precision" (??)

Solved! 05-31-2016 12:52 PM

Hello,

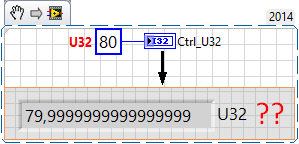

What is the meaning of this behavior?

Is this expected behavior? And if so, why?

I previously asked this question on the French forum (French is my mother tongue), here, but I didn't get any answers.

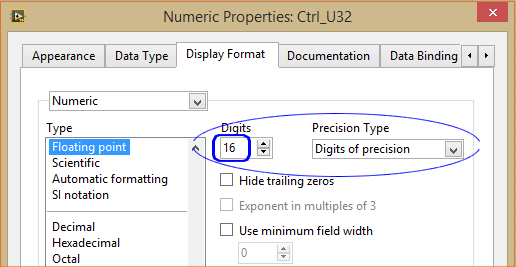

For a U32 (and likewise for an I32, U16, I16, ...), is it normal to be able to set the "digits of precision"? (To me, that makes no sense.)

Moreover, this can cause display errors (79,99999...).

What is your view on this?

Thank you all (and sorry for my poor English).

05-31-2016 01:04 PM

The numeric controls don't have an integer display format, just a floating-point format. This is probably also related to the other formats that legitimately apply to integers, such as scientific notation, which uses digits after the decimal point even though the value is still an integer: 1 million = 1e6, and 999,999 would be 9.99999e5.

As a result, you are taking an integer value and passing it through code designed to render floating-point values, as well as scientific and engineering notation.

There is no specific reason why you'd want to display an integer with digits after the decimal point, so 0 digits of precision would be normal. But there is also no reason to prevent someone from displaying decimal digits. And when you get down to displaying 16 digits of precision, it doesn't surprise me that you are reaching the limits of floating-point numbers, since floating-point code is what has to be used to display decimal digits for integers.
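A minimal Python sketch of what RavensFan describes: the integer is first converted to a double and then rendered by a general floating-point formatter, either in fixed or in scientific notation. Python's built-in `format` stands in for LabVIEW's display code here (an assumption for illustration only; LabVIEW's actual formatting routine is not shown in this thread), and `float_display` is a hypothetical helper name.

```python
# Sketch: render an integer the way a floating-point display would,
# by converting it to a double first (Python's float is an IEEE 754 double).

def float_display(value: int, digits: int, style: str = "f") -> str:
    """Hypothetical helper: format an integer via floating point.

    style "f" = fixed notation, "e" = scientific notation.
    """
    return format(float(value), f".{digits}{style}")

print(float_display(80, 0))              # 80
print(float_display(80, 3))              # 80.000
print(float_display(1_000_000, 0, "e"))  # 1e+06
print(float_display(999_999, 5, "e"))    # 9.99999e+05
```

Engineering notation (exponents restricted to multiples of 3) has no built-in format code in Python, so it is left out of this sketch.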

In the end, I think this could be considered expected behavior, and not anything that should cause anyone problems in the practical world.

05-31-2016 01:12 PM

>> The numeric controls don't have an integer display format,

Hmmm, I have a Decimal display format, the same as Hex, Octal, Binary.

I would say that 80 as an integer is exactly 80: 2^6 + 2^4.

When you use a floating-point display, RavensFan is correct: it first converts 80 to a double, then tries to show it. And 80 cannot be represented exactly in floating-point format.
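Alexander's arithmetic can be checked with a quick Python sketch (Python's `float` is an IEEE 754 double on mainstream platforms, which is assumed here). It also shows a property of doubles worth knowing: every integer with magnitude up to 2^53, including 80, converts to a double without rounding, so the stored double is exactly 80; integer-to-double conversion only starts losing precision above 2^53.

```python
# Check how the integer 80 is stored, both as an integer and as an IEEE 754 double.
n = 80
print(bin(n))                   # 0b1010000, i.e. 2**6 + 2**4
print(n == 2**6 + 2**4)         # True

d = float(n)                    # convert to a double
print(d.hex())                  # 0x1.4000000000000p+6, i.e. 1.25 * 2**6, no rounding
print(d == n, d.is_integer())   # True True

# Integers are exact in a double only up to 2**53 (53-bit significand).
big = 2**53 + 1
print(float(big) == big)        # False: 2**53 + 1 rounds to 2**53
```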

05-31-2016 01:23 PM

The "problem" is that you are displaying an Integer using a "Floating Point" display! If you used any of the display formats from the Integer category (Decimal, Hex, Octal, Binary), you wouldn't see this. I was going to say that the same applies if you try to display a Float using an Integer Display Format, but see that LabVIEW doesn't allow you to make this particular "mistake" ...

It is curious, however. It turns out that for precisions up to and including 15, you get trailing zeros. With 16, you get trailing 9's. With higher than 16, you start to add trailing zeros after the trailing 9's. It's the same for positive and negative I32s.
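For comparison, a correctly rounded float-to-string conversion (here Python's `format`, used purely as a reference point; it is not LabVIEW's code) prints only trailing zeros for the double 80.0 at every precision Bob tried, including 16. That suggests the trailing 9's come from the particular conversion routine rather than from the stored value, which would also fit his I32 vs. I64 observation, although the thread does not establish the exact cause.

```python
# Sweep the number of digits after the decimal point for the double 80.0,
# using Python's correctly rounded float-to-string conversion.
value = float(80)
for digits in (15, 16, 17, 20, 40):
    text = format(value, f".{digits}f")
    print(digits, text)
    # 80.0 is stored exactly, so every digit after "80." is zero.
    assert text == "80." + "0" * digits
```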

For more fun, I tried an I64. 40 digits of precision still had all trailing zeros.

This is beginning to look more like a "bug" in LabVIEW's formatting rules. I should stop trying to "shoot the messenger", so please accept my apology.

Bob Schor

05-31-2016 01:24 PM

@Alexander_Sobolev wrote:

>> The numeric controls don't have an integer display format,

Hmmm, I have a Decimal display format, the same as Hex, Octal, Binary.

True. There is Decimal in the group with Hex, Octal, and Binary. When you pick it, LabVIEW treats the indicator as an integer and disables the precision and significant-digits settings, which prevents you from showing digits after the decimal point. In this case "decimal" means base-10 display, just as hexadecimal means base 16, and octal and binary mean base 8 and base 2 respectively. All four of those display formats treat the value as an integer rather than as floating point.

The terminology can be confusing: in the non-programming world, "decimal" usually refers to the digits after the decimal point (fractions of integers), while in the programming world, "decimal" means integers displayed in base 10.
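A small Python sketch of the distinction RavensFan draws: Decimal, Hex, Octal, and Binary are all base conversions of the same integer, with no digits after a decimal point. Python's format codes `d`, `x`, `o`, and `b` are used here as stand-ins for the LabVIEW display formats (an illustrative analogy, not LabVIEW's implementation).

```python
# The four "integer" display formats render the same integer in different bases;
# none of them involves a fractional part.
n = 80
print(format(n, "d"))   # 80       (decimal, base 10)
print(format(n, "x"))   # 50       (hexadecimal, base 16)
print(format(n, "o"))   # 120      (octal, base 8)
print(format(n, "b"))   # 1010000  (binary, base 2)

# Going the other way, int() parses each representation back to the same value.
assert int("50", 16) == int("120", 8) == int("1010000", 2) == n
```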

At the end of the day, it is just semantics, and the issue the OP shows is not a real world problem because there is no logical reason for someone to want to display an integer value with 16 "decimal" digits after the decimal point.

05-31-2016 02:09 PM

@RavensFan wrote:

At the end of the day, it is just semantics, and the issue the OP shows is not a real world problem because there is no logical reason for someone to want to display an integer value with 16 "decimal" digits after the decimal point.

While I basically agree "you shouldn't do that", it is curious (and, to me, suggests a potential bug somewhere in Conversion code) that 16 digits is "special" for I32 but not I64. It also isn't bad (in my opinion) to allow people to make "stupid mistakes" without scaring their socks off. Now that this "feature" is revealed, it might actually point to a heretofore unnoticed bug...

BS

05-31-2016 03:23 PM

RavensFan: "The numeric controls don't have an integer display format, just a floating point format..."

Thank you for your explanations.

Bob_Schor: (display an integer value with 16 "decimal" digits)

"While I basically agree "you shouldn't do that"...": of course we shouldn't do that, I fully agree with you! (Obviously.)

"and, to me, suggests a potential bug somewhere in conversion code"

I agree with that: a (small) bug in the conversion code seems obvious to me.

It shouldn't be possible to display "79,999" with a control whose format is I32 (or U32, I16, ...).

05-31-2016 03:35 PM - edited 05-31-2016 03:39 PM

Do you think it should be allowed to have a control display 80.000 for an I32, U32, I16?

And if so, why?

(Not trying to argue the point; I just want to see your use case, especially since the issue doesn't show up until 79.9999999999999999 (16 digits of precision).)

I also just noticed that if you display decimal digits (more than 0 digits of precision) and then change the data type to another I or U integer type, LabVIEW automatically resets the display format to 0 digits of precision.

05-31-2016 05:27 PM

@RavensFan:

"Do you think it should be allowed to have a control display 80.000 for an I32, U32, I16?" No!

"...just want to see your use case."

My use case? I have no use case; it would make no sense to do that.

It was just an "academic question" about LabVIEW's behavior. I understood your point of view as well as all your explanations.

I would very much like to discuss this topic further with you; unfortunately, my English isn't good enough to do that.

However, upon reflection, I agree with you and with your conclusions.

Many thanks RavensFan.

06-01-2016 01:56 AM - edited 06-01-2016 02:16 AM

@Bob_Schor wrote:

@RavensFan wrote:

At the end of the day, it is just semantics, and the issue the OP shows is not a real world problem because there is no logical reason for someone to want to display an integer value with 16 "decimal" digits after the decimal point.

While I basically agree "you shouldn't do that", it is curious (and, to me, suggests a potential bug somewhere in Conversion code) that 16 digits is "special" for I32 but not I64. It also isn't bad (in my opinion) to allow people to make "stupid mistakes" without scaring their socks off. Now that this "feature" is revealed, it might actually point to a heretofore unnoticed bug...

BS

No, it's not a bug. It's an inherent attribute of floating-point precision. A double has about 16 digits of precision. 80 cannot be represented exactly in the base-2 system that is inherent to the binary notation used in computers. Therefore the integer value 80 is converted to the nearest possible floating-point value, which happens to be about 79.999999999999999<some arbitrary digits that no longer fit into the 64-bit binary notation for double-precision floats>. Now you tell LabVIEW to display that number. The string conversion routine will actually look one digit further than you want to display and use that digit for rounding. As long as you tell it to display fewer than about 14 to 15 digits after the decimal point, the rounding turns all the 9's into 80.0000.....
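The "about 16 digits" figure can be made concrete with Python's `sys.float_info`, which describes the platform's double (assumed here to be IEEE 754 binary64, as on all mainstream platforms): a 53-bit significand corresponds to roughly 15.95 decimal digits. As a general property of doubles rather than anything stated in this thread, small integers are stored exactly, so the representation error described above is easiest to see with a value such as 0.1, which has no finite binary expansion.

```python
import sys

# A double has a 53-bit significand, i.e. roughly 15.95 decimal digits.
print(sys.float_info.mant_dig)   # 53
print(sys.float_info.dig)        # 15: decimal digits guaranteed to survive a round trip

# Asking for 17 significant digits exposes the representation error of 0.1.
print(format(0.1, ".15g"))       # 0.1
print(format(0.1, ".17g"))       # 0.10000000000000001
```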

Once you start to display more digits than can possibly be represented in the double-precision float, LabVIEW has to guess those digits. Since they do not exist, it assumes them to be 0, and you start to get this effect. Not only is it not a bug, it is actually defined that way by the IEEE standard for floating-point values. Yes, it is not perfect, but many mathematicians thought about this before they drafted that standard, and many more reviewed the draft and eventually gave their approval. For the ideal solution, computers would need infinite-precision floating-point values, but that also requires infinite amounts of memory and processing power, so it is not an option. The next best thing is high-precision floating-point libraries that support arbitrary precision. However, they are very slow and in 99.999% of cases total overkill. And they require the user to make specific decisions about the desired precision when using them.
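Python's standard `decimal` module is one example of the user-chosen-precision approach described above (picked here for illustration; the post does not name a specific library). It can also print the exact value a double holds, which makes the difference between a value like 0.1 and an integer like 80 visible.

```python
from decimal import Decimal, getcontext

# Decimal(float) shows the exact binary value stored in a double.
print(Decimal(0.1))    # 0.1000000000000000055511151231257827021181583404541015625
print(Decimal(80.0))   # 80

# With decimal arithmetic the user sets the precision explicitly,
# trading speed for as many digits as requested.
getcontext().prec = 40
print(Decimal(1) / Decimal(3))   # 0. followed by 40 threes
```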

And then there are the limited-precision floating-point numbers implemented in modern computers, which exhibit these traits. They can be implemented in hardware and are therefore reasonably fast, but they have caused many threads like this one on many computer fora, and also here on the LabVIEW board, about the "buggy" implementation of floating-point numbers in software.

@ouadji wrote:

"and, to me, suggests a potential bug somewhere in conversion code"

I agree with that: a (small) bug in the conversion code seems obvious to me.

It shouldn't be possible to display "79,999" with a control whose format is I32 (or U32, I16, ...).

If you meant "should" here more in the sense of "could", I would agree with you. But I don't see a strong case for making this impossible, other than helping to prevent threads like this on the discussion fora about the poorly understood limits of floating-point numbers.

The default for an integer control is the Decimal format. If someone went to the trouble of changing that to one of the floating-point formats, he hopefully has a reason for that and probably understands the implications. If he didn't, and then complains about being able to shoot himself in the foot..., well, maybe most people should indeed not be allowed to own a gun!