Triggering DMD device with Delay

Solved! 07-12-2021 10:10 AM

Hello!

I was wondering if anyone had some knowledge or suggestions on how to solve a problem I've been experiencing. I'm using the DAQmx software to start a digitally triggered acquisition off a signal from a DMD, a micromirror device. The signal is acquired through a lock-in amplifier, and because of the electronics of the system I need to acquire it 90 microseconds after the trigger is sent. So the problem I've been encountering is how to delay the data acquisition by that amount.

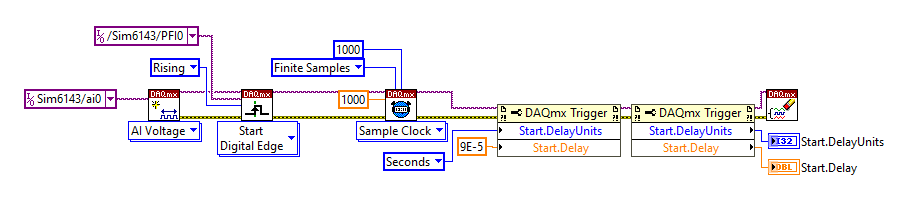

I've been using the DAQmx trigger property node in order to try and write out the delay, as displayed in this forum post:

I've also attached my own code down below. Without the property node the code works, simply taking the data immediately after the trigger, so I know that something is wrong with how I'm trying to use the property node, but I'm not sure exactly what it could be. For my research, I need the signal 90 microseconds after the trigger. Unfortunately I can't use a larger time frame: the trigger for changing an image comes every 105 microseconds, so any longer and it would take the data of an entirely different pattern. I've attempted this using both the 'ticks' and 'seconds' units, but neither has produced the same results the full program does.

The DAQ card I'm using is a PCI-6601, which should (I'm not quite sure) have a timebase of 20 MHz. The lock-in detector is an MFLI 500 kHz/5 MHz and the DMD is a DLPC6500, for all the technical specifications. This is done in LabVIEW 2017.
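For readers following the numbers, here is a minimal sketch of the timing budget described above. It assumes the 20 MHz timebase that the post itself is unsure about; the helper name `seconds_to_ticks` is made up for illustration and is not part of DAQmx.

```python
# Timing budget for the delayed acquisition, using the values quoted in the post.
TIMEBASE_HZ = 20_000_000    # assumption: 20 MHz timebase, as guessed in the post
DELAY_S = 90e-6             # wanted delay after each trigger edge
PATTERN_PERIOD_S = 105e-6   # a new DMD pattern appears every 105 microseconds


def seconds_to_ticks(seconds, timebase_hz):
    """Convert a time in seconds to a whole number of timebase ticks."""
    return round(seconds * timebase_hz)


delay_ticks = seconds_to_ticks(DELAY_S, TIMEBASE_HZ)
period_ticks = seconds_to_ticks(PATTERN_PERIOD_S, TIMEBASE_HZ)
margin_ticks = period_ticks - delay_ticks  # time left before the next pattern

print(delay_ticks, period_ticks, margin_ticks)  # prints: 1800 2100 300
```

Under that assumption the 90 microsecond delay is 1800 ticks, and the sample has to land within the remaining 300 ticks (15 microseconds) before the pattern changes.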

Any help would be very appreciated!

07-12-2021 01:09 PM

07-12-2021 01:18 PM - edited 07-12-2021 02:06 PM

Oh, apologies about that mistake, I was confusing two devices we have on the same computer system. The device we're using as a DAQ card for the purposes of clock ticks and triggering is a PCI-6143.

*Edited in, since my account can't yet post another reply to the inquiry; I hope this is visible.

I attempted the shift, and unfortunately the same problem occurred. The data-averaging loop, which is set to 1000 averages, finishes in about one second with no data actually taken. The code itself gives no error and everything runs, but when the property node is set to apply the adjusted trigger, no data is taken and the run finishes in about 1 second, as opposed to the ~40 seconds it usually takes to collect all of the data. Essentially, when I run the program without the adjusted trigger property node, the acquisition is triggered by the DMD, which steps through a set of 128 image patterns, one every 105 microseconds. Data is collected a couple of microseconds after a new pattern appears, which is the trigger edge. Ideally the property node would set the data acquisition to 90 microseconds after the trigger edge, but instead it seems to disable data acquisition entirely and collect nothing.

07-12-2021 01:49 PM - edited 07-12-2021 01:51 PM

Using a simulated device, I get an error if I try to set the property node before configuring the timing. Can you try putting the timing configuration (the VI with a clock picture) before the Trigger property node? If that doesn't help, can you go into more detail about what error or problem you are encountering?

07-12-2021 02:33 PM - edited 07-12-2021 02:33 PM

A couple of notes:

You will not see any errors because you have a "clear errors" function on the right side of your for loop. If you delete that, I think you will start seeing errors.

You can delete the "clock source" input to your timing VI and it will use the default clock.

07-12-2021 03:44 PM

Well, you're *clearing* any errors every loop iteration so you'll never see them.

With a similar X-series device, I get error -200436, which states that the start trigger delay property is not available when using an external sample clock source. The VI you posted uses the external terminal PFI3 as the sample clock source, hence the error.
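To illustrate why the loop finished in about a second with no data, here is a small Python analogy (not DAQmx code). `read_samples` and `DaqError` are hypothetical stand-ins: the fake read fails immediately with the error code quoted above, and the loop either discards the error, like the "clear errors" function, or lets it surface.

```python
class DaqError(Exception):
    """Hypothetical stand-in for a DAQmx error carrying a numeric code."""

    def __init__(self, code, message):
        super().__init__(f"{code}: {message}")
        self.code = code


def read_samples(use_trigger_delay, external_clock):
    """Fake read: fails at once when a start trigger delay meets an external clock."""
    if use_trigger_delay and external_clock:
        raise DaqError(-200436, "start trigger delay unavailable with external sample clock")
    return [0.0] * 128


def run_loop(n_iterations, clear_errors):
    """Collect one read per iteration, optionally discarding every error."""
    collected = []
    for _ in range(n_iterations):
        try:
            collected.append(read_samples(use_trigger_delay=True, external_clock=True))
        except DaqError:
            if not clear_errors:
                raise  # let the error surface so it can be diagnosed
            # error discarded: the loop carries on with nothing collected
    return collected


print(len(run_loop(1000, clear_errors=True)))  # prints: 0
```

With the errors cleared, all 1000 iterations fail instantly and silently, which would match a run that ends quickly with no data and no error shown.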

I'll bet there's a way to get the timing you need, probably via an intermediary counter, but I'd need to understand some details better.

1. What are the signals at PFI0 (your trigger source) and PFI3 (your sample clock source)?

2. I'm pretty confused about your data acq timing needs. You seem to want to sample AI 90 microsec after a trigger. You also imply that sampling more than 105 microsec after that same trigger wouldn't be good because the image pattern will have changed. But then you set up a 1-second AI task that takes 1000 samples at 1 kHz, and further run it repeatedly in a loop to do averaging. I don't understand how to make sense of that.

3. It sounds to me like your window of opportunity for AI sampling is the time from the signal you call "trigger" until 105 microsec later when the image pattern changes. So I suspect that what you should *really* be doing is treating the signal you call "trigger" as an external "sample clock". This would give you 1 AI sample for each pulse, which would correspond to one of the image patterns. With 128 image patterns, you'd create a finite AI task with 128 samples using an external sample clock and no trigger at all. Each sample will correspond to a particular image pattern.

Is that the kind of thing you want?

4. The simplest way I know to delay a sample clock would involve an intermediary counter. But first let me know if these speculations are on the right track before I get into the specifics.
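The idea in point 3 could look roughly like the following, written with the `nidaqmx` Python package rather than LabVIEW. This is an untested sketch under stated assumptions: the device name `Dev1`, the channel `ai0`, the terminal `PFI1`, and the 10 kHz rate hint are placeholders, and `average_sweeps` is a hypothetical helper showing how repeated 128-sample sweeps might be averaged pattern by pattern.

```python
N_PATTERNS = 128  # one AI sample per DMD pattern


def acquire_one_sweep(device="Dev1", clock_terminal="PFI1"):
    """Read one sample per external clock edge (needs nidaqmx and real hardware)."""
    import nidaqmx
    from nidaqmx.constants import AcquisitionType, Edge

    with nidaqmx.Task() as task:
        task.ai_channels.add_ai_voltage_chan(f"{device}/ai0")
        task.timing.cfg_samp_clk_timing(
            rate=10_000.0,  # upper bound on the expected clock rate (about 9.5 kHz)
            source=f"/{device}/{clock_terminal}",  # external sample clock, no trigger
            active_edge=Edge.RISING,
            sample_mode=AcquisitionType.FINITE,
            samps_per_chan=N_PATTERNS,
        )
        return task.read(number_of_samples_per_channel=N_PATTERNS)


def average_sweeps(sweeps):
    """Average repeated sweeps so that index k is the mean for pattern k."""
    n = len(sweeps)
    return [sum(column) / n for column in zip(*sweeps)]


# Usage with made-up data (two sweeps of two patterns each):
print(average_sweeps([[1.0, 2.0], [3.0, 4.0]]))  # prints: [2.0, 3.0]
```

Each call to `acquire_one_sweep` would return 128 values, one per pattern, so averaging happens across sweeps rather than within a single long acquisition.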

-Kevin P

07-14-2021 12:45 PM

Apologies about the late response; since my account was new I couldn't respond swiftly. The key to the problem was an intermediary step, as you suggested in the third question you had, and I was able to put a DDG (digital delay generator) in line with the connections to produce the delay.

For clarity, in case the problem confuses anyone else: my trigger source at PFI0 was from the DMD I was using, a trigger created at the start of a sequence of 128 patterns. PFI1 (the terminal in the posted VI might differ; I'm currently using PFI1) is derived from the DMD as well, but instead marks the beginning of each pattern shift, which occurs every 105 microseconds. I was a little confused initially and my advisor helped clarify: the delay we were looking for was for the clock source itself.

So for this program, the idea is that every 13-ish milliseconds the set of patterns will be run through entirely, and the point was to acquire a data point for each image in the 128-pattern set, but instead of doing so right after the shift, it would be 90 us after, to allow for the mirrors to properly shift. I'm not sure how to accurately describe the process of the AI task you were asking about; I'm fairly new to LabVIEW formalism.

Thanks for the aid in getting through the process!

07-14-2021 05:01 PM

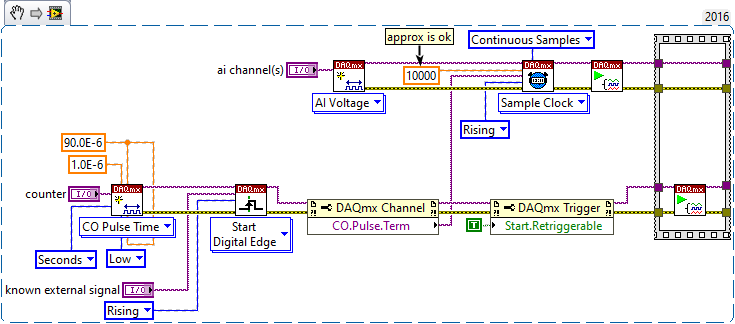

Sounds like you used a piece of external equipment to delay the signal you're using as an external sample clock for your AI task. So the following is more for any future readers since you've already got a solution.

Here's a partial snippet of how one could use an intermediary counter task to accomplish a similar delay. Here I set both low time and initial delay to be 90 microsec with high time set for 1 microsec. (Be careful not to go "line-to-line" on timing by making the high time 15 microsec when you expect the trigger to repeat every 105, since 90 + 15 leaves no margin before the next trigger!)
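For readers without LabVIEW, a text version of the same idea might look like the sketch below, using the `nidaqmx` Python package. It is not a transcription of the snippet in the image and has not been run on hardware. `Dev1`, `ctr0`, and `PFI1` are placeholder names, and `check_pulse_timing` is a hypothetical helper for the "line-to-line" warning; times are kept as whole microseconds so the comparison is exact.

```python
def check_pulse_timing(delay_us, high_us, period_us):
    """True if the delayed pulse ends strictly before the next trigger arrives."""
    return delay_us + high_us < period_us


def start_delayed_clock(device="Dev1", trigger_terminal="PFI1", delay_us=90, high_us=1):
    """Start a retriggerable delayed pulse (needs nidaqmx and real hardware)."""
    import nidaqmx
    from nidaqmx.constants import Edge, Level, TimeUnits

    task = nidaqmx.Task()
    task.co_channels.add_co_pulse_chan_time(
        f"{device}/ctr0",
        units=TimeUnits.SECONDS,
        idle_state=Level.LOW,
        initial_delay=delay_us * 1e-6,  # delay before the first pulse
        low_time=delay_us * 1e-6,       # set equal to the delay, as in the post
        high_time=high_us * 1e-6,
    )
    # Emit one delayed pulse for every rising edge on the trigger terminal.
    task.triggers.start_trigger.cfg_dig_edge_start_trig(
        f"/{device}/{trigger_terminal}", trigger_edge=Edge.RISING
    )
    task.triggers.start_trigger.retriggerable = True
    task.start()
    return task  # caller is responsible for task.stop() and task.close()


print(check_pulse_timing(90, 1, 105))   # prints: True
print(check_pulse_timing(90, 15, 105))  # prints: False
```

The AI task would then presumably take the counter's output (for example `/Dev1/Ctr0InternalOutput`) as its sample clock source instead of the raw DMD signal.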

-Kevin P