TDMS write performance

07-22-2015 06:09 AM

Dear community members,

I've been working on a CompactRIO project for about 2 months now. I've started with zero experience with LabVIEW and thanks to the large amount of tutorials and these forums I've been able to get everything I needed to work so far, so thank you for that! Unfortunately though, I'm running into issues with CPU usage on the cRIO system at the moment.

My current system reads out 9 values from various sources (mainly temperatures) at a rate of 100 S/s. These values are moved into an RT FIFO Shared Variable and logged using the Producer-Consumer design pattern. Without logging, the system acquires this data just fine using roughly 36% CPU. When I turn logging on, the usage goes up and stays at 99%. I figured I was writing too often, so I increased the buffer size and started logging at 50 Hz instead. CPU was still at 99%. Then I moved to logging at 10 Hz, and CPU was still at 99%. However, when I look into the TDMS files, I find that I am not missing any samples.

When I search the internet for related issues, I find people logging arrays 100 times the size of mine at rates in the kHz range before they run into trouble. I feel like I am missing something obvious here, but I can't find it. I've attached the code and added some notes in it about my troubleshooting attempts. My apologies for it being a bit of a jungle; the problem should be inside the first case structure inside the big main while loop.

TL;DR: I'm only logging 9 channels at 100 S/s to TDMS, and the CPU is at 99% whatever I do. What am I doing wrong?

P.S.: The Shared Variable 'disc data' is an Array of Double, RT FIFO Multi-Element, with Number of Arrays: 200 and Number of Elements: 9.

07-22-2015 06:58 AM

Ruben92 wrote:

My current system reads out 9 values from various sources (mainly temperatures) at a rate of 100 S/s. These values are moved into an RT FIFO Shared Variable and logged using the Producer-Consumer design pattern. Without logging, the system acquires this data just fine using roughly 36% CPU. When I turn logging on, the usage goes up and stays at 99%. I figured I was writing too often, so I increased the buffer size and started logging at 50 Hz instead. CPU was still at 99%. Then I moved to logging at 10 Hz, and CPU was still at 99%. However, when I look into the TDMS files, I find that I am not missing any samples.

How did you change the logging rate? Besides, the point of turning the FIFO on is that you will not miss any data, so you are logging as fast as you can, trying to get all of the data. I do not think you actually want this. From what I can tell, you just want to log the current values. Therefore, I would turn the FIFO OFF on the shared variable and add some sort of wait in the logging loop.
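Here is a small Python analogy of the FIFO-off suggestion. It is not LabVIEW code. A hypothetical `LatestValueTag` stands in for a shared variable with the FIFO disabled: each write overwrites the previous value. A `queue.Queue` stands in for the FIFO. The sketch assumes the logger reads once every 5 producer samples, and the names are illustrative only.

```python
import queue


class LatestValueTag:
    """Hypothetical stand-in for a shared variable with its FIFO disabled:
    each write overwrites the previous value; a read returns only the latest."""

    def __init__(self):
        self._value = None

    def write(self, value):
        self._value = value

    def read(self):
        return self._value


def simulate(samples_per_log):
    """Producer writes one sample per tick; the logger reads once every
    `samples_per_log` ticks (standing in for a wait in the logging loop)."""
    tag = LatestValueTag()
    fifo = queue.Queue()
    logged_from_tag = []
    for tick in range(100):
        tag.write(tick)
        fifo.put(tick)
        if (tick + 1) % samples_per_log == 0:
            logged_from_tag.append(tag.read())
    # The FIFO still holds every sample, so a consumer could log all of them.
    logged_from_fifo = []
    while not fifo.empty():
        logged_from_fifo.append(fifo.get_nowait())
    return logged_from_tag, logged_from_fifo


tag_log, fifo_log = simulate(samples_per_log=5)
print(len(tag_log), len(fifo_log))  # 20 100
```

With the tag, the logger keeps only the value that is current at each read (20 of 100 samples here). With the FIFO, every sample is available, but the consumer then has to keep up with all of them.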

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

07-22-2015 11:50 AM

The logging rate should be controlled by the timeout value in the Shared Variable read block. If I disable the FIFO, I will most likely miss a few values, since I can't oversample some of my thermocouples. Either way, does that mean this is simply the best the cRIO can do?

07-22-2015 01:35 PM

@Ruben92 wrote:

The logging rate should be controlled by the timeout value in the Shared Variable read block.

Not true. The timeout is just how long to wait for data to enter the FIFO if it is empty. So if there is data in the FIFO when that node is called, it will immediately return the next item in the FIFO. You are not throttling the loop at all.
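The following Python sketch illustrates the timeout behavior. It uses `queue.Queue.get(timeout=...)` only as an analogy for the shared variable read node. When elements are already waiting, `get` returns immediately, so the loop runs as fast as the backlog allows. The timeout only comes into play once the queue is empty. The 200-sample backlog and the 0.1 s timeout are assumed values.

```python
import queue
import time

fifo = queue.Queue()
for sample in range(200):   # backlog: the FIFO already holds 200 samples
    fifo.put(sample)

TIMEOUT_S = 0.1  # analogous to the read timeout; only applies when the FIFO is empty

start = time.monotonic()
reads = 0
while True:
    try:
        fifo.get(timeout=TIMEOUT_S)  # returns immediately while data is available
    except queue.Empty:
        break                        # the timeout only fires once the FIFO is empty
    reads += 1
elapsed = time.monotonic() - start

# 200 reads finish in roughly one timeout period, not 200 * TIMEOUT_S seconds.
print(reads, f"{elapsed:.3f}s")
```

To actually pace the loop, you need an explicit wait on every iteration (in Python, something like `time.sleep`). That is the role of the wait function suggested in this thread.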

@Ruben92 wrote:

If I disable the FIFO, I will most likely miss a few values, since I can't oversample some of my thermocouples.

That makes no sense at all if you set the logging rate. If you want to log the current data every 50 ms, then you will not log all of the data. If you absolutely care about every single value, then just use a normal queue. It is easier to understand and works more efficiently. There are also tricks to read the queue until it is empty and then log the bulk of the data at once. This will help your performance somewhat.
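Here is a minimal Python sketch of the "read the queue until it is empty, then log in bulk" trick. It assumes a `queue.Queue` of 9-channel samples. `drain` and `log_batch` are hypothetical helpers, and `log_batch` only appends to a list where real code would perform a single file write.

```python
import queue


def drain(q):
    """Return every element currently in the queue without blocking."""
    batch = []
    while True:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            return batch


def log_batch(log, batch):
    """Hypothetical stand-in for one bulk file write."""
    log.append(list(batch))


data_queue = queue.Queue()
log = []
for i in range(250):
    data_queue.put([float(i)] * 9)  # one 9-channel sample

batch = drain(data_queue)
if batch:
    log_batch(log, batch)           # 250 samples, one write
print(len(log), len(log[0]))  # 1 250
```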

07-23-2015 02:52 AM - edited 07-23-2015 02:54 AM

@crossrulz wrote:

@Ruben92 wrote:

If I disable the FIFO, I will most likely miss a few values, since I can't oversample some of my thermocouples.

That makes no sense at all if you set the logging rate. If you want to log the current data every 50 ms, then you will not log all of the data. If you absolutely care about every single value, then just use a normal queue. It is easier to understand and works more efficiently. There are also tricks to read the queue until it is empty and then log the bulk of the data at once. This will help your performance somewhat.

Unless he means sending the data to the consumer loop at full rate and accumulating it until writing every X ms. This decouples the disk activity from the primary data source a little, perhaps resulting in better performance if the disk is truly the limiting factor.
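Accumulating at full rate and writing every X ms might look like the Python sketch below. It assumes X = 100 ms and uses each sample's timestamp as a simulated clock so the result is deterministic. `consumer` and the constants are hypothetical names, and each inner list in `writes` stands for one disk write.

```python
WRITE_PERIOD_MS = 100   # hypothetical "X ms" between disk writes
SAMPLE_PERIOD_MS = 10   # 100 S/s, as in the original post


def consumer(samples, write_period_ms=WRITE_PERIOD_MS):
    """Accumulate samples and flush them as one write every write_period_ms.
    Uses each sample's timestamp as a simulated clock."""
    pending = []
    writes = []
    last_write_ms = 0
    for t_ms, sample in samples:
        pending.append(sample)
        if t_ms - last_write_ms >= write_period_ms:
            writes.append(pending)      # one simulated disk write
            pending = []
            last_write_ms = t_ms
    if pending:
        writes.append(pending)          # flush the remainder on shutdown
    return writes


samples = [(i * SAMPLE_PERIOD_MS, [float(i)] * 9) for i in range(100)]
writes = consumer(samples)
print(len(writes), sum(len(w) for w in writes))  # 10 100
```

At 100 S/s, this turns 100 individual writes into 10 while still keeping every sample.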

But as an aside: Are you opening and closing the file each write? That will slow things down considerably. You should be able to stream faster than that. What file format are you writing to?

Disclaimer: I can't open the code; I only have LV 2012, so my comments may be way off track.

07-23-2015 04:55 AM

@Crossrulz

I see, I misunderstood the function of the timeout feature. I'll throttle the loop with a wait function instead and see how it goes. Also like you said, I'll try using a queue instead because I indeed prefer not to miss any values.

@Intaris

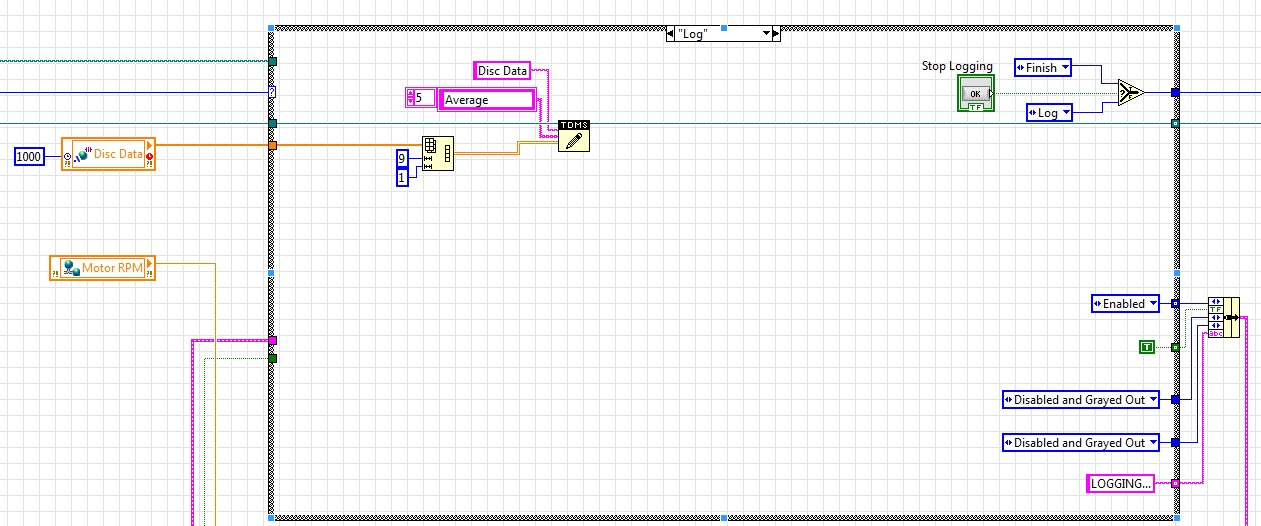

No, I'm not opening and closing the file. A snapshot of the code in question is below:

Either way I'll try to throttle the loops properly now and get back with the results.

07-23-2015 05:41 AM

Maybe you could try setting the buffer size for the TDMS write (allowing the driver to cache information for you, reducing the number of individual write operations).

http://zone.ni.com/reference/en-XX/help/371361H-01/lvhowto/setting_tdms_buffersize/
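The idea behind the buffer-size setting in the help page above is that the driver caches values and writes to disk less often. The Python sketch below models only that idea. `BufferedChannelWriter` and its `min_buffer_size` parameter are hypothetical and do not mirror the actual TDMS API.

```python
class BufferedChannelWriter:
    """Hypothetical writer that caches values and performs one simulated
    disk write only once `min_buffer_size` values are pending."""

    def __init__(self, min_buffer_size):
        self.min_buffer_size = min_buffer_size
        self._cache = []
        self.disk_writes = 0
        self.written = []

    def write(self, values):
        self._cache.extend(values)
        if len(self._cache) >= self.min_buffer_size:
            self._flush()

    def close(self):
        if self._cache:
            self._flush()  # write whatever is still cached

    def _flush(self):
        self.written.extend(self._cache)  # one simulated disk write
        self._cache.clear()
        self.disk_writes += 1


def count_disk_writes(min_buffer_size, n_values=1000):
    """Write n_values one at a time and report (disk writes, values written)."""
    w = BufferedChannelWriter(min_buffer_size)
    for i in range(n_values):
        w.write([float(i)])  # one value per call
    w.close()
    return w.disk_writes, len(w.written)


print(count_disk_writes(1))    # (1000, 1000)
print(count_disk_writes(100))  # (10, 1000)
```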

Shane

07-23-2015 07:47 AM

Thank you for your help guys. I've marked it as solved since ultimately the issue was my misunderstanding of the timeout function.

07-23-2015 08:07 AM

Cool.

07-23-2015 08:12 AM - edited 07-23-2015 08:12 AM

It seems like I'm still not where I want to be. When I call the shared variable read node, it returns the first value in the buffer as it should. Instead, I want to read out the entire buffer in one go. How can I do this most efficiently?

I understand that a queue might be better for this, since I can flush the queue whenever I want, but I have problems with the queue data type. If I flush a queue whose data type is a 1D array of double, it returns a 1D array of 1-element clusters, each containing a 1D array of double. I can't for the life of me figure out how to turn that back into the 1D array of double I am after. My code also gets stuck when I try to use a queue, but that is for me to figure out later.
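LabVIEW does not allow an array to contain arrays directly, which is presumably why the flushed elements come back wrapped in clusters. The conversion can be sketched in Python, with a hypothetical one-field `Element` tuple standing in for the 1-element cluster. First unwrap each element to get one row per queued sample (a 2D structure). Then concatenate the rows if a single 1D array is really what you want. In LabVIEW terms, this roughly corresponds to unbundling each cluster inside an auto-indexing For Loop, though that mapping is an assumption here.

```python
from typing import List, NamedTuple


class Element(NamedTuple):
    """Hypothetical stand-in for the 1-element cluster wrapping each 1D array."""
    values: List[float]


def unwrap(flushed: List[Element]) -> List[List[float]]:
    # Unbundle each cluster: one row per queued sample, one column per channel.
    return [element.values for element in flushed]


def flatten(rows: List[List[float]]) -> List[float]:
    # Concatenate the rows if a single 1D array is wanted instead.
    return [v for row in rows for v in row]


flushed = [Element([1.0, 2.0, 3.0]), Element([4.0, 5.0, 6.0])]
rows = unwrap(flushed)
print(rows)           # [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
print(flatten(rows))  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
```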

Is there a way to avoid using a queue here? How do I read, say, 100 values from a shared variable FIFO before dumping them to disk?
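One way to read "up to 100 values, then dump them" is sketched below in Python, with `queue.Queue` standing in for the FIFO. The reader blocks (with a timeout) for the first element, then takes whatever else is already waiting without blocking, capped at `max_items`. `read_batch` and its defaults are hypothetical.

```python
import queue


def read_batch(q, max_items=100, timeout_s=0.5):
    """Read up to max_items elements: block (up to timeout_s) for the first,
    then take whatever else is already queued without waiting."""
    batch = []
    try:
        batch.append(q.get(timeout=timeout_s))
    except queue.Empty:
        return batch  # nothing arrived within the timeout
    while len(batch) < max_items:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            break
    return batch


q = queue.Queue()
for i in range(250):
    q.put([float(i)] * 9)  # 250 queued 9-channel samples

sizes = []
while True:
    batch = read_batch(q, timeout_s=0.01)
    if not batch:
        break
    sizes.append(len(batch))  # each batch would be one disk write
print(sizes)  # [100, 100, 50]
```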