Strange benchmark result needs some explanation

07-29-2009 03:42 AM - edited 07-29-2009 03:51 AM

I was testing the efficiency of the Transpose Array function in LabVIEW, so I built a simple test setup, starting with a constant as the source. At some point I changed the constant to a control, and the processing time dropped from about 4200 ms to somewhere between 1 and 2 ms. I could reproduce the result on every run. Why is this?

I have attached my test VI, saved in LabVIEW 8.0 format.

Edit: I used LabVIEW 8.6 for this test and could not see any major differences in buffer allocations.

Besides which, my opinion is that Express VIs

(Sorry, no LabVIEW "brag list" so far)

07-29-2009 03:56 AM

It's strange. According to dataflow, whether the source is a control or a constant, the data is passed to the For Loop and processed. I don't understand why there is such a difference between controls and constants. Does it happen only for this function, or for all functions?

Thanks, Coq Rouge, for raising the issue.

Ever tried. Ever failed. No matter. Try again. Fail again. Fail better

Don't forget Kudos for Good Answers, and Mark a solution if your problem is solved.

07-29-2009 03:57 AM

Hi CoqRouge,

you found one of LabVIEW's memory-handling optimization mysteries!

I changed the attachment to disable parallelism as much as possible and to use shift registers instead of tunnels. Now both cases run at (more or less) the same speed.

My guess: LV is optimized for handling data from controls. You have wired a constant, and LabVIEW has to copy its data over and over again, hence the speed penalty. Just a wild guess...

07-29-2009 04:11 AM

GerdW wrote:

My guess: LV is optimized for handling data from controls. You have wired a constant, and LabVIEW has to copy its data over and over again, hence the speed penalty. Just a wild guess...

But maybe a correct guess.

07-29-2009 04:28 AM

GerdW wrote:

Hi CoqRouge,

you found one of LabVIEW's memory-handling optimization mysteries

My guess: LV is optimized for handling data from controls. You have wired a constant, and LabVIEW has to copy its data over and over again, hence the speed penalty. Just a wild guess...

In my first test I did not have any parallelism; I simply changed the source from a control to a constant by right-clicking on it, and I still get the same timing results. Anyway, perhaps this demonstrates that in some cases a control with default values is better than a constant, or that a shift register is better than a loop output tunnel with "Disable Indexing" enabled.

07-29-2009 05:32 AM

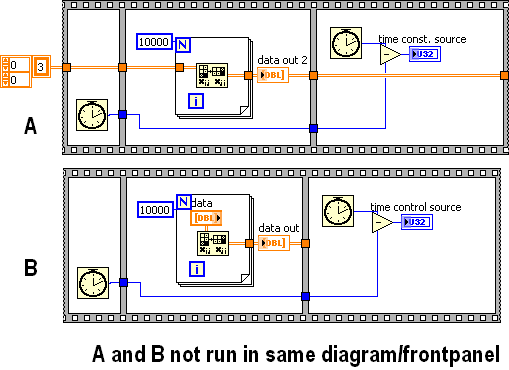

Then I tested as shown in the figure: the times were about equal, at around 4200 ms.

Then I tested like this in the same diagram: the run times were again about equal, but they jumped to approximately 7800 ms.

07-29-2009 05:45 AM

I guess this explains my first question. When using a constant, the data is read in every iteration, even if the constant is placed outside the loop. Perhaps this is a flaw, and a correction is needed?

07-29-2009 05:59 AM - edited 07-29-2009 05:59 AM

There is something else going on here. Transpose Array does not actually always physically copy data around. Instead, it creates something called a subarray. A subarray is a special internal datatype in LabVIEW that contains not the real data but just a pointer to the original data. In addition, it contains extra information such as the order of the dimensions, the size of each dimension, and even the direction of indexing. How it does this exactly is beyond my knowledge, but basically, when you transpose an array, LabVIEW creates a subarray that records that the dimensions are swapped relative to what is normally expected.

Many array functions, including for instance the one that displays the data on a front panel, know how to treat subarrays properly, and also how to handle one, several, or all of the special cases of such subarrays. If a particular function operating on a subarray does not know how to do its work on that particular type, a conversion routine creates the real array out of that subarray anyway so that the function can process it.
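
One rough way to picture such a subarray is a strided view over a shared buffer. The Python below is only a model under that assumption: LabVIEW's real subarray layout is internal, and the names `SubArray`, `from_rows` and `materialize` are hypothetical. Transposing just swaps the shape and stride fields in constant time, and `materialize` plays the role of the conversion routine that builds a real array for a consumer that cannot read subarrays.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SubArray:
    """Hypothetical model of a 2D subarray: a view over a flat buffer.

    Only shape and stride metadata live here; the elements stay in `data`.
    """
    data: List[float]   # shared flat buffer (never copied by transpose)
    rows: int
    cols: int
    row_stride: int     # step in `data` to move one row down
    col_stride: int     # step in `data` to move one column right
    offset: int = 0

    def get(self, r: int, c: int) -> float:
        return self.data[self.offset + r * self.row_stride + c * self.col_stride]

    def transpose(self) -> "SubArray":
        # O(1): swap dimensions and strides, keep pointing at the same buffer.
        return SubArray(self.data, self.cols, self.rows,
                        self.col_stride, self.row_stride, self.offset)


def from_rows(rows: List[List[float]]) -> SubArray:
    """Build a row-major view from nested lists (one real copy, up front)."""
    flat = [x for row in rows for x in row]
    n_cols = len(rows[0]) if rows else 0
    return SubArray(flat, len(rows), n_cols, n_cols, 1)


def materialize(view: SubArray) -> List[List[float]]:
    """Fallback for a consumer that cannot read subarrays:
    copy the elements into a plain nested list in logical order."""
    return [[view.get(r, c) for c in range(view.cols)] for r in range(view.rows)]


if __name__ == "__main__":
    a = from_rows([[1, 2, 3], [4, 5, 6]])
    t = a.transpose()
    print(t.data is a.data)   # True: no element was moved by transpose
    print(materialize(t))     # [[1, 4], [2, 5], [3, 6]]
```

The design point is that the cost of transposing moves from the transpose itself to whichever consumer first needs a real array; if nothing downstream needs one, the copy never happens.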

Your original difference was most probably due to the fact that LabVIEW stores diagram constants differently from runtime data. A constant is saved with the VI to disk and loaded together with the VI into memory, but it is marked read-only, since it is a constant. Apparently the Transpose Array optimization decided, for some reason, that the subarray approach will not work for constant data, so a new, really transposed memory area gets allocated. This causes the time delay you see. When the data comes from a control, the buffer is not constant, so Transpose Array simply produces a subarray, and the only real runtime overhead is displaying the data on the front panel.
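
The constant-versus-control difference can be modeled the same way, assuming (as suggested above) that the only thing that changes is whether the source buffer is read-only. In this hypothetical sketch, `transpose_2d` hands back the shared buffer when it may be reused and allocates a really transposed copy when it is marked read-only; `run_loop` counts element moves over many loop iterations, which is one plausible source of a gap like 4200 ms versus 1 to 2 ms. It is not LabVIEW's actual code.

```python
from typing import List, Tuple


def transpose_2d(buf: List[float], rows: int, cols: int,
                 read_only: bool) -> Tuple[str, List[float], int]:
    """Hypothetical model of the behavior described above.

    Returns (kind, buffer, elements_moved). A writable source (control data)
    is returned as-is, standing in for a subarray that only swaps its
    dimension metadata. A read-only source (diagram constant) gets a freshly
    allocated, really transposed rows*cols buffer.
    """
    if not read_only:
        return "view", buf, 0
    out = [0.0] * (rows * cols)
    for r in range(rows):
        for c in range(cols):
            # Source (r, c) in row-major rows x cols -> (c, r) in cols x rows.
            out[c * rows + r] = buf[r * cols + c]
    return "copy", out, rows * cols


def run_loop(iterations: int, rows: int, cols: int, read_only: bool) -> int:
    """Transpose the same source once per iteration; return total element moves."""
    src = [float(i) for i in range(rows * cols)]
    moved = 0
    for _ in range(iterations):
        _, _, n = transpose_2d(src, rows, cols, read_only)
        moved += n
    return moved


if __name__ == "__main__":
    print(run_loop(100, 50, 50, read_only=True))   # 250000 element moves
    print(run_loop(100, 50, 50, read_only=False))  # 0 element moves
```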

Rolf Kalbermatter

07-29-2009 06:08 AM

Coq Rouge wrote:

I guess this explains my first question. When using a constant, the data is read in every iteration, even if the constant is placed outside the loop. Perhaps this is a flaw, and a correction is needed?

No, it is not a flaw. It is a trade-off between always producing the right result and being optimized but sometimes producing a wrong result. Memory optimization is probably one of the hairiest areas in LabVIEW, and there have been issues in the past where a developer went in to make a great optimization, only to find out after the release that it actually produced wrong results in some complicated situations.
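
One classic way an over-eager memory optimization produces wrong results is aliasing. The hypothetical Python sketch below is not a reconstruction of any specific LabVIEW bug: a transpose that returns a view into a buffer which a later node modifies in place silently changes a value that dataflow semantics say should be fixed, while the conservative copy stays correct. `TransposeView` and `transpose_then_modify` are illustrative names only.

```python
from typing import List


class TransposeView:
    """Hypothetical transposed view of a row-major rows x cols buffer.

    No data is copied; every read goes straight to the shared `buf`.
    """

    def __init__(self, buf: List[int], rows: int, cols: int) -> None:
        self.buf, self.rows, self.cols = buf, rows, cols

    def to_list(self) -> List[List[int]]:
        # Element (i, j) of the transpose is element (j, i) of the source.
        return [[self.buf[j * self.cols + i] for j in range(self.rows)]
                for i in range(self.cols)]


def transpose_then_modify(optimized: bool) -> List[List[int]]:
    src = [1, 2, 3, 4]                  # 2 x 2 array [[1, 2], [3, 4]]
    # Optimized: share src. Conservative: give the view its own copy.
    buf = src if optimized else list(src)
    result = TransposeView(buf, 2, 2)
    src[0] = 99                         # a later node reuses src in place
    return result.to_list()


if __name__ == "__main__":
    print(transpose_then_modify(optimized=False))  # [[1, 3], [2, 4]]: correct
    print(transpose_then_modify(optimized=True))   # [[99, 3], [2, 4]]: wrong
```

Proving that no later in-place write can reach a shared buffer is exactly the hard part, which is why dropping the optimization for a case like read-only constants is the safe choice.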

I'm pretty sure some of the LabVIEW devs could give a very detailed explanation of why it is the way it is in this case, but with NI Week coming up, I doubt they even have time to read these boards.

In general, you can trust that such apparent shortcomings have a very good reason. More importantly, I would rather see a possible optimization dropped from LabVIEW than have even a remote chance of running into calculation errors caused by overzealous memory optimization. Been there, had that, and still not happy about it!

Rolf Kalbermatter

07-29-2009 06:27 AM