Speed up write to text file

03-13-2017 10:06 AM

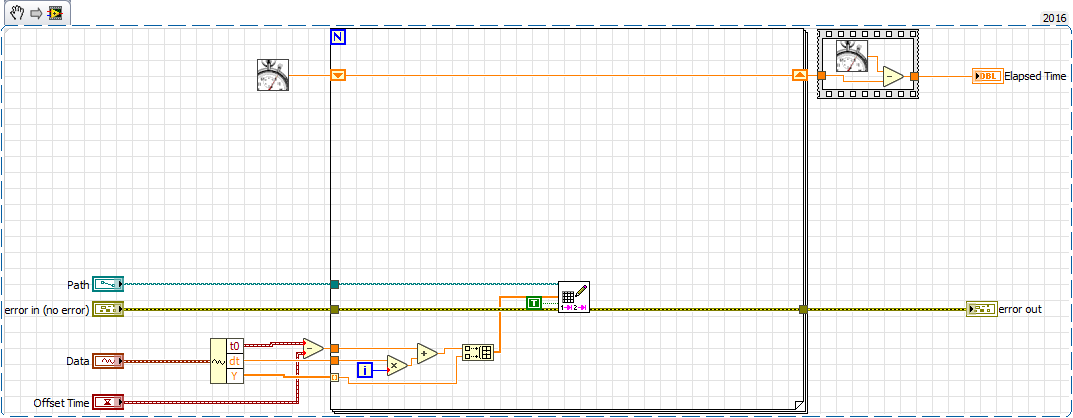

I've been trying to make this VI run faster. It simply writes a waveform (WFM) out to a text file in a

Time <tab> Value

format. Writing to the actual text file seems fairly quick, but converting the waveform values to strings isn't. I experimented with the "Format Into File" VI with no luck: its performance tends to degrade nonlinearly as the waveform gets larger. So I tried using my own shift register as a buffer (in the attached VI), which at least stabilizes the write performance. That's not really the driving issue, though. It's the conversion to strings that's killing me.

So, what's the most efficient way to generate formatted strings if not using the Format Into String VI?

Thanks

XL600

03-13-2017 10:16 AM

@xl600 wrote:

[...] It's the conversion to strings that's killing me.[...]

How can you tell, given that your benchmark times disk access (write) and string handling together?

Have you checked on disk throughput in task manager?

Your VI looks quite clean. How does the benchmark change when writing each entry directly into the file without 'custom' buffering?

Please note that your benchmark is inaccurate: the time stamps are taken in parallel with preparation and cleanup (e.g. flushing "remnants"), so you cannot tell how much those steps contribute.
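Since a block diagram can't be pasted as text, here is a rough Python analogy of timing the two stages separately. It is only a sketch under assumptions: the function names, the `%.6f` formatting, and the temporary `.dat` file are all made up for illustration and are not taken from the attached VI.

```python
import os
import tempfile
import time


def format_rows(t0, dt, values):
    """Build 'Time<tab>Value' lines in memory; no disk access happens here."""
    return "".join(f"{t0 + i * dt:.6f}\t{v:.6f}\n" for i, v in enumerate(values))


def benchmark(values, t0=0.0, dt=0.001):
    # Stage 1: string conversion only.
    start = time.perf_counter()
    text = format_rows(t0, dt, values)
    format_seconds = time.perf_counter() - start

    # Stage 2: disk write only. The timer stops after the file is flushed,
    # synced, and closed, so cleanup is counted instead of running alongside.
    fd, path = tempfile.mkstemp(suffix=".dat")
    os.close(fd)
    try:
        start = time.perf_counter()
        with open(path, "w", newline="") as f:
            f.write(text)
            f.flush()
            os.fsync(f.fileno())
        write_seconds = time.perf_counter() - start
    finally:
        os.remove(path)
    return format_seconds, write_seconds, text
```

Comparing the two returned times for the same data gives a first idea of whether conversion or disk access dominates, although OS caching can still blur the write figure.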

03-13-2017 10:20 AM

You didn't include a data file so I haven't tested it for speed, but I would do this.

03-13-2017 10:29 AM

That's just one of many versions of the VI, instrumented in different ways. The Format Into File VI generally took a few seconds longer on my test data (a waveform of a few tens of MB), which is why I tried buffering manually. I ignore the flush operation because it takes very little time at the buffer size I'm using (I'm not trying for perfect timing, just a general picture to spot big improvements or delays).

03-13-2017 10:39 AM - edited 03-13-2017 10:39 AM

1) You are not writing your full buffer to the file (1000 bytes). 2) You are losing a line of data when you do write.
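For anyone following along without the VI, both problems are typical of manual buffering in general. A minimal Python sketch of a buffer that avoids them follows; the name `write_buffered` and the way the limit is checked are assumptions for illustration, not a reconstruction of the actual block diagram.

```python
def write_buffered(lines, sink, buffer_limit=1000):
    """Accumulate lines and write them in chunks of roughly buffer_limit characters."""
    pending = []
    pending_size = 0
    writes = 0
    for line in lines:
        # Append first, then check the limit, so the line that triggers
        # the write is part of the chunk rather than dropped.
        pending.append(line)
        pending_size += len(line)
        if pending_size >= buffer_limit:
            sink.write("".join(pending))
            writes += 1
            pending.clear()
            pending_size = 0
    # Final flush: whatever is left after the loop must still be written.
    if pending:
        sink.write("".join(pending))
        writes += 1
    return writes
```

A quick sanity check for any buffering scheme is to compare the line count of the output file with the number of samples in the waveform.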

I managed to get a little extra performance by going a modified Producer/Consumer route. It really seems to be my disk access that is limiting my performance.
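The producer/consumer idea can be sketched in Python roughly like this: one side formats chunks of text, the other writes them, and a bounded queue sits in between. Everything here (function names, chunk size, queue depth, the `%.6f` format) is an assumption for illustration and not the modified VI itself.

```python
import queue
import threading


def producer(values, t0, dt, q, chunk_size=1000):
    """Format values into text chunks and hand them to the writer."""
    for start in range(0, len(values), chunk_size):
        chunk = values[start:start + chunk_size]
        text = "".join(
            f"{t0 + (start + i) * dt:.6f}\t{v:.6f}\n" for i, v in enumerate(chunk)
        )
        q.put(text)
    q.put(None)  # sentinel: no more data


def consumer(q, sink):
    """Write chunks in arrival order until the sentinel shows up."""
    while True:
        text = q.get()
        if text is None:
            break
        sink.write(text)


def export(values, sink, t0=0.0, dt=0.001):
    q = queue.Queue(maxsize=8)  # bounded so the producer cannot run far ahead
    writer = threading.Thread(target=consumer, args=(q, sink))
    writer.start()
    producer(values, t0, dt, q)
    writer.join()
```

The benefit is that formatting the next chunk can overlap with waiting on the disk for the previous one. If the disk is the bottleneck, the gain stays small, which matches the modest improvement reported above.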

03-13-2017 11:54 AM

RTSLVU:

I just tried the write spreadsheet approach and the results were just a little slower than the buffered approach. I opted for the buffered approach due to the large data sizes of the waveforms I'm going to be working with. I've attached a comparison using both approaches (Your example would attempt to open/close the file for every element of the array btw...)

Crossrulz:

I just compared write times to determine whether I'm IO bound. On my system (using a 3,640,000-element array), writing the already-formatted text back out to a new file takes about 1.5 s. Writing the text the first time (conversion plus write) takes about 6 s. From that, the string conversion seems to take about 3 times as long as the text file write itself. It's hard to know what caches might be doing here. I may just have to live with it.

BTW: Thanks for pointing out the missing line of data! That would have driven me nuts if this had gone into use...

03-13-2017 12:04 PM

@xl600 wrote:

I just tried to see the difference in write time to determine if I'm IO bound. On my system (Using a 3,640,000 long array), ...

Formatted text files are plain expensive in terms of storage, writing speed, and reading speed. Formatting a binary value as decimal digits and trimmings (decimal point, E, minus signs, etc.) is a complicated operation. The same goes for the inverse.

It is safe to say that no human will ever read a file with 3+ million lines, so the only reasonable approach is a flat binary file. What is the purpose of this file? What other app will be reading it?
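To make the text-versus-binary difference concrete, here is a small Python sketch. The flat layout (a header with t0, dt, and a sample count, followed by raw float64 samples) is purely hypothetical; it is not TDMS and not any LabVIEW file format.

```python
import struct

HEADER = "<ddQ"  # assumed header: t0 (float64), dt (float64), sample count (uint64)


def to_text(t0, dt, values):
    """Format every sample as a 'Time<tab>Value' line; costs CPU per value."""
    rows = (f"{t0 + i * dt:.6f}\t{v:.6f}\n" for i, v in enumerate(values))
    return "".join(rows).encode("ascii")


def to_binary(t0, dt, values):
    """Pack the samples as raw little-endian float64; no decimal formatting."""
    header = struct.pack(HEADER, t0, dt, len(values))
    return header + struct.pack(f"<{len(values)}d", *values)


def from_binary(blob):
    """Inverse of to_binary; the round trip is exact for float64 values."""
    t0, dt, n = struct.unpack_from(HEADER, blob, 0)
    values = list(struct.unpack_from(f"<{n}d", blob, struct.calcsize(HEADER)))
    return t0, dt, values
```

In this layout each sample costs exactly 8 bytes and the time column is implied by t0 and dt, whereas the text form spends roughly twice that per line and needs a formatting pass for every number.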

03-13-2017 12:19 PM

We have some tools (Unix FORTRAN based) that no one wants to rewrite (or, to put it another way, no manager wants to cut budget to rewrite, revalidate, and reverify) which expect this DAT format. My task at the moment is to write the exporters that support our other toolchains, though I tend to stay within TDMS for everything in LabVIEW.

03-13-2017 12:25 PM

If there is so much data that the queues fall too far behind with the producer/consumer method, you could always write to a binary file instead. Then, when the VI is done, reread the binary file and write it back out as a text file for the FORTRAN tools to use later.
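A rough Python sketch of that second pass is shown below: it streams raw samples from a binary file in chunks and writes the Time<tab>Value text. It assumes the binary file holds nothing but native-endian float64 samples (so its size is a multiple of 8 bytes), and the function name, chunk size, and `%.6f` format are invented for illustration.

```python
import array


def binary_to_text(bin_path, txt_path, t0, dt, chunk_samples=65536):
    """Stream float64 samples from bin_path and write Time<tab>Value lines."""
    index = 0  # running sample index, so time stays continuous across chunks
    with open(bin_path, "rb") as src, open(txt_path, "w", newline="") as dst:
        while True:
            raw = src.read(chunk_samples * 8)  # 8 bytes per float64 sample
            if not raw:
                break
            samples = array.array("d")
            samples.frombytes(raw)
            dst.write("".join(
                f"{t0 + (index + i) * dt:.6f}\t{v:.6f}\n"
                for i, v in enumerate(samples)
            ))
            index += len(samples)
    return index  # number of samples converted
```

Because only one chunk is in memory at a time, the conversion can run after acquisition has finished without holding the whole waveform.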

03-13-2017 12:40 PM - edited 03-13-2017 12:45 PM

That's essentially what I'm doing. I don't output the text files unless the user actually wants to export the data. Otherwise, I use TDMS (Binary) for everything. I'm not using a producer/consumer approach at this level BTW. Above this VI, I chunk out the data in easier to handle blobs as I'm reading from my TDMS files. It's a work in progress and I still need to get text file appending in place (Instead of the replace or create mode I'm currently using).
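For the appending part, the general pattern in a text language looks something like the Python sketch below: replace or create the file for the first chunk and append for every later one. The function name and the `first_chunk` flag are hypothetical and only stand in for whatever the calling VI would track.

```python
def append_chunk(path, t_start, dt, values, first_chunk):
    """Write one chunk of samples; replace/create on the first chunk, append after."""
    mode = "w" if first_chunk else "a"
    with open(path, mode, newline="") as f:
        f.write("".join(
            f"{t_start + i * dt:.6f}\t{v:.6f}\n" for i, v in enumerate(values)
        ))
    # Return the start time for the next chunk so the time column stays continuous.
    return t_start + len(values) * dt
```

Carrying the next start time from call to call keeps the time column consistent across chunk boundaries; for very long exports, computing time from a running sample index instead would avoid accumulated floating-point drift.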