Saving data in 30 or 40 seconds intervals with millisecond accuracy

06-24-2016 11:47 AM

Are you really trying to update a chart at 1 kHz? Humans can't "see" that fast. I typically update a display at no more than 50 Hz, which means that if the data actually arrive at 1 kHz, I either show every 20th point (decimating the data) or show the average of each block of 20 points (smoothing the data).
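LabVIEW code is graphical, so here is a minimal Python sketch of the two display reductions just described. The function names `decimate` and `block_average` are hypothetical; with a factor of 20, one second of 1 kHz data becomes 50 display points.

```python
from statistics import fmean

def decimate(samples, factor):
    """Keep every `factor`-th sample, starting with the first (decimation)."""
    return samples[::factor]

def block_average(samples, factor):
    """Replace each complete block of `factor` samples by its mean (smoothing).
    A trailing partial block is dropped."""
    n_blocks = len(samples) // factor
    return [fmean(samples[i * factor:(i + 1) * factor]) for i in range(n_blocks)]

# One second of hypothetical 1 kHz data reduced to a 50 Hz display rate.
raw = [float(i) for i in range(1000)]
print(len(decimate(raw, 20)))        # 50
print(block_average(raw, 20)[:2])    # [9.5, 29.5]
```

Decimation is cheaper and preserves individual values; block averaging also suppresses noise, at the cost of hiding short spikes.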

What is a "scan backlog"? Note that the DAQ loop should have only two functions in it -- a DAQmx Read and an Enqueue. In particular, there should be no "massaging" of the data coming from the Read -- it should just slam into the Enqueue Element function.
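The same producer/consumer split, sketched in Python with `queue.Queue` and two threads. `fake_daqmx_read` is a hypothetical stand-in for a DAQmx Read (no real driver is called), and `None` plays the role of the sentinel. The producer does nothing but read and enqueue; every bit of processing lives in the consumer.

```python
import queue
import random
import threading

SENTINEL = None  # tells the consumer that the producer has finished

def fake_daqmx_read(n_samples):
    """Hypothetical stand-in for a DAQmx Read: returns n_samples random values."""
    return [random.random() for _ in range(n_samples)]

def producer(q, n_reads, samples_per_read):
    # Only two operations per iteration: read, then enqueue. No "massaging".
    for _ in range(n_reads):
        q.put(fake_daqmx_read(samples_per_read))
    q.put(SENTINEL)

def consumer(q, results):
    # All processing (scaling, averaging, saving) happens here instead.
    while True:
        chunk = q.get()
        if chunk is SENTINEL:
            break
        results.append(sum(chunk) / len(chunk))

q = queue.Queue()
results = []
t_prod = threading.Thread(target=producer, args=(q, 100, 15))
t_cons = threading.Thread(target=consumer, args=(q, results))
t_prod.start()
t_cons.start()
t_prod.join()
t_cons.join()
print(len(results))  # 100
```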

Bob Schor

06-24-2016 12:03 PM

Hi,

This is what I have right now. I am going to throw away most of the data for the display, since it's purely for display; thanks for the tip. For saving, though, I am going to try to aggregate 15k data points so that I save once per second. Were you suggesting making this "saving process" a state? I guess we would enter that state using the ticks from the producer loop? Should I use a notifier, a queue, or a local variable to send the ticks to the consumer loop?
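One way to avoid passing ticks between loops at all (an alternative, not something proposed in this thread) is to let the consumer count samples and save whenever 15,000 have accumulated; at 15 kHz that works out to once per second. `SaveAggregator` and its `save` callback are hypothetical names; in a real program `save` would write the block to a file.

```python
class SaveAggregator:
    """Collects incoming chunks and passes exactly `block_size` samples
    to `save` each time enough have accumulated."""

    def __init__(self, block_size, save):
        self.block_size = block_size
        self.save = save
        self._buffer = []

    def add(self, chunk):
        self._buffer.extend(chunk)
        # A large chunk may complete more than one block.
        while len(self._buffer) >= self.block_size:
            block = self._buffer[:self.block_size]
            del self._buffer[:self.block_size]
            self.save(block)

    def flush(self):
        """Save whatever is left over, e.g. when the acquisition stops."""
        if self._buffer:
            self.save(self._buffer)
            self._buffer = []

saved = []  # stands in for a file: each entry is one saved block
agg = SaveAggregator(15_000, saved.append)
for _ in range(2500):          # 2500 chunks of 15 samples = 37,500 samples
    agg.add([0.0] * 15)
agg.flush()
print([len(block) for block in saved])  # [15000, 15000, 7500]
```

Because the save cadence is driven by the data themselves, it stays locked to the acquisition clock, and no notifier or local variable is needed for timing.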

Scan Backlog was the name of an output of the old DAQ AI Read drivers. It's now called Available Samples Per Channel, which, I believe, tells you the number of samples left in the buffer.
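To make "samples left in the buffer" concrete, here is a toy single-channel model of a driver-side circular buffer. It is not the DAQmx API: `CircularSampleBuffer`, `hardware_write`, and `available_samples` are hypothetical names, and here an overwrite of unread data is simply raised as a Python exception.

```python
class CircularSampleBuffer:
    """Toy model of an acquisition buffer (hypothetical, not DAQmx)."""

    def __init__(self, capacity):
        self.data = [0.0] * capacity
        self.capacity = capacity
        self.total_written = 0  # samples produced by the simulated hardware
        self.total_read = 0     # samples consumed by the application

    def available_samples(self):
        """Counterpart of 'Available Samples Per Channel' (the old scan backlog)."""
        return self.total_written - self.total_read

    def hardware_write(self, samples):
        for s in samples:
            if self.available_samples() == self.capacity:
                raise OverflowError("unread samples would be overwritten")
            self.data[self.total_written % self.capacity] = s
            self.total_written += 1

    def read(self, n):
        if n > self.available_samples():
            raise ValueError("only %d samples available" % self.available_samples())
        out = [self.data[(self.total_read + i) % self.capacity] for i in range(n)]
        self.total_read += n
        return out

buf = CircularSampleBuffer(capacity=8)
buf.hardware_write([1.0, 2.0, 3.0, 4.0, 5.0])
print(buf.read(3))              # [1.0, 2.0, 3.0]
print(buf.available_samples())  # 2
```

A backlog that keeps growing from read to read is the warning sign: sooner or later it reaches the buffer capacity.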

The operations in the producer loop other than reading and enqueuing are just for monitoring the process, and I am assuming they don't drastically slow it down. Is that right?

In the attached VI, I have yet to fix the loop-ending behaviour, so please ignore any odd behaviour when the loops stop.

Thanks.

06-24-2016 12:41 PM - edited 06-24-2016 12:47 PM

On further consideration, it seems quite plausible that invoking the "Samples to Read per Channel" Property Node inside the loop takes a long time. I have taken it out for now and changed F to 15 (1 ms ticks). Of course, this raises the question again: how do I ensure that the reading loop finishes within 1 ms every single time?
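Assuming a hardware-timed, buffered (continuous) acquisition, the loop does not have to finish within 1 ms every single time; it only has to keep up on average, because the driver keeps buffering samples while the loop is busy. The hypothetical simulation below models that: the "hardware" adds 15 samples per millisecond, and each iteration does a blocking read of 15 samples and then spends a given time on other work.

```python
def simulate_buffered_reads(processing_ms, rate_per_ms=15, samples_per_read=15):
    """Toy timing model of a hardware-timed, buffered acquisition.

    Returns the number of samples still waiting in the buffer right after
    each read. All names and numbers here are illustrative assumptions.
    """
    t = 0.0          # simulated time in ms
    total_read = 0   # samples consumed so far
    backlog = []
    for p in processing_ms:
        # A blocking read returns once enough samples exist: it waits if the
        # loop is early and returns immediately if the loop is late.
        t = max(t, (total_read + samples_per_read) / rate_per_ms)
        total_read += samples_per_read
        backlog.append(rate_per_ms * t - total_read)
        t += p  # time spent on the rest of the iteration
    return backlog

# Iterations normally need 0.5 ms, but the sixth one stalls for 5 ms.
times = [0.5] * 5 + [5.0] + [0.5] * 20
history = simulate_buffered_reads(times)
print(max(history), history[-1])  # 60.0 0.0
```

The single stall leaves 60 samples waiting, and the backlog then drains because the late reads return immediately. If every iteration took longer than 1 ms, the backlog would instead grow until the buffer overflowed.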

06-24-2016 12:57 PM

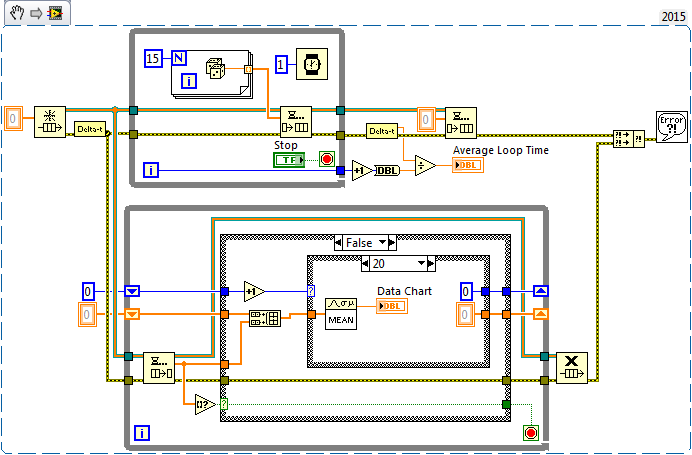

Well, I don't have a DAQ device handy, so I'm (ab)using the PC's clock. Here's a test routine.

The top loop is a simulated 15 kHz data sampler. The While Loop has a 1 ms Wait inside it, so it runs at a nominal 1 kHz. Inside, it generates 15 random numbers and puts them on the Producer/Consumer Queue (and that's all). When you push the Stop button, the loop exits, puts the Sentinel on the Queue, and waits for the Consumer to end. It uses a high-precision timer to compute the elapsed time (Delta-t) spent in the loop, divides it by the number of loop iterations, and reports the Average Loop Time (which we expect to be 0.00100000 seconds).
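A rough Python analogue of this test routine, for readers without LabVIEW. Two deliberate differences, both assumptions: a fixed iteration count (`n_loops`) replaces the Stop button, and the producer sleeps until an absolute deadline rather than for a fixed 1 ms, because `time.sleep` can overshoot noticeably on some platforms and this lets late iterations catch up. Don't expect microsecond-level agreement with the LabVIEW result.

```python
import queue
import random
import threading
import time

SENTINEL = None
PERIOD_S = 0.001        # nominal 1 kHz loop
SAMPLES_PER_LOOP = 15   # 15 samples per loop -> simulated 15 kHz

def simulated_sampler(q, n_loops):
    """Producer: each period, generate 15 random numbers and enqueue them.
    Returns the average loop time in seconds."""
    start = time.perf_counter()
    next_deadline = start
    for _ in range(n_loops):
        q.put([random.random() for _ in range(SAMPLES_PER_LOOP)])
        next_deadline += PERIOD_S
        delay = next_deadline - time.perf_counter()
        if delay > 0:
            time.sleep(delay)
    elapsed = time.perf_counter() - start
    q.put(SENTINEL)
    return elapsed / n_loops

def consumer(q, received):
    while True:
        chunk = q.get()
        if chunk is SENTINEL:
            return
        received.extend(chunk)

def run_test(n_loops=1000):
    q = queue.Queue()
    received = []
    cons = threading.Thread(target=consumer, args=(q, received))
    cons.start()
    avg_loop_time = simulated_sampler(q, n_loops)
    cons.join()  # wait for the consumer to drain the queue and exit
    return avg_loop_time, received

avg, data = run_test(1000)
print(f"average loop time: {avg:.8f} s, samples received: {len(data)}")
```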

The bottom loop is the Consumer Loop. It dequeues 15 samples at a time, concatenating them into a single Array. Every 20 data chunks, it averages the Array, plots the average, and reinitializes the Count and the Array for the next 20 chunks. Note that although the random numbers are uniformly distributed between 0 and 1, the average of 20*15 = 300 of them has a much smaller spread: its standard deviation is about 1/√300, or roughly 6%, of that of the raw samples. You can see the difference by plotting the 0th element of the Array instead of the Mean.
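A quick Python check of the spread claim, using the fact that the standard deviation of the mean of n independent samples is σ/√n. `average_every` is a hypothetical helper that mimics the consumer's count-and-reset logic; the seed and the 1,000 plotted points are arbitrary choices.

```python
import math
import random
from statistics import fmean, pstdev

def average_every(chunks, chunks_per_point=20):
    """Mimic the consumer loop: append each chunk to an array and, every
    `chunks_per_point` chunks, emit the array's mean and start over."""
    points, acc, count = [], [], 0
    for chunk in chunks:
        acc.extend(chunk)
        count += 1
        if count == chunks_per_point:
            points.append(fmean(acc))
            acc, count = [], 0
    return points

rng = random.Random(0)  # fixed seed so the run is repeatable
chunks = [[rng.random() for _ in range(15)] for _ in range(20 * 1000)]

raw_sd = pstdev([x for chunk in chunks for x in chunk])  # about 1/sqrt(12) for U(0, 1)
mean_sd = pstdev(average_every(chunks))
ratio = mean_sd / raw_sd
print(f"ratio = {ratio:.3f}, theory = {1 / math.sqrt(300):.3f}")
```

The measured ratio should land close to the theoretical 1/√300, confirming that averaging 300 samples shrinks the standard deviation (not the variance) to roughly 6%.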

When I ran this, the measured average loop time differed from 1 millisecond by 1-3 microseconds. It really does run that fast. Try it yourself.

Bob Schor
