Question about converting time stamp to double

08-04-2017 02:17 PM - edited 08-04-2017 02:46 PM

Hello, All,

I'm a little confused about how the timestamp-to-double conversion works. Could someone explain it to me? I was running a test that converts a timestamp to Unix time. A snapshot is attached.

I set the time zone of my PC to UTC and ran the VI with LabVIEW 2017. The Windows clock shows 7:33 PM, but the unixsec value I get is 1501857207, which converts to Friday, August 4, 2017 2:33:27 PM GMT.

I wonder why there is a 5-hour difference. 2:33 PM is the actual local time here (GMT-6).
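For anyone who wants to check the arithmetic outside LabVIEW, here is a minimal Python sketch. It decodes the reported value as seconds since the Unix epoch and compares it with the clock reading. The seconds field of the clock reading (`:27`) is an assumption, since the post only gives 7:33 PM.

```python
from datetime import datetime, timezone

unix_seconds = 1501857207  # value reported in the post

# Interpret the value as seconds since 1970-01-01 00:00:00 UTC.
decoded = datetime.fromtimestamp(unix_seconds, tz=timezone.utc)

# Assumption: the Windows clock (set to UTC) read 19:33:27.
# The post only states 7:33 PM, so the seconds are illustrative.
expected = datetime(2017, 8, 4, 19, 33, 27, tzinfo=timezone.utc)

offset_hours = (expected - decoded).total_seconds() / 3600

print(decoded.isoformat())  # 2017-08-04T14:33:27+00:00
print(offset_hours)         # 5.0
```

So the value really is 5 hours behind the UTC wall clock, which is why the result looks like local time rather than UTC.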

Thanks

08-04-2017 02:32 PM

08-04-2017 02:36 PM

Sorry, I was editing the post because I noticed that it behaves the same on Windows and on the RT OS.

08-04-2017 03:00 PM - edited 08-04-2017 03:03 PM

Are you adjusting for your time zone properly? Try this:

=== Engineer Ambiguously ===

========================

08-04-2017 03:18 PM

Thanks. I wonder where LabVIEW gets its time zone info. I changed the time zone to UTC in the Windows time settings, but the VI still shows Central Daylight Time when I run it on my PC. I also ran a test on a cRIO: I set the cRIO to UTC through NI MAX and ran the VI, and it shows UTC.

08-04-2017 03:33 PM

One thing I found is that LabVIEW only looks at the timezone when it first loads. If you change the timezone in the midst of having the development environment open, it won't immediately realize it. You will need to close and reopen LabVIEW before it sees the change.

08-06-2017 08:51 PM - edited 08-06-2017 08:57 PM

I think you avoid this whole issue if you subtract before you convert?

Oops, I mean the issue of worrying about the conversion to double.
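One possible reading of this suggestion, sketched in Python (3.9+ for `math.ulp`) rather than LabVIEW: a double has a 53-bit mantissa, so the larger the number of seconds, the coarser the time resolution it can hold. Subtracting two timestamps first and converting only the small difference keeps more resolution. The specific values below are illustrative assumptions, not taken from the VI.

```python
import math

# Illustrative magnitudes (assumptions, not values from the original VI):
seconds_since_1904 = 3584720007.0  # an absolute time in 2017, LabVIEW epoch
seconds_since_1970 = 1501875207.0  # the same instant, Unix epoch
short_interval = 10.0              # a difference taken before converting

# math.ulp gives the spacing between adjacent doubles at that magnitude,
# i.e. the finest time step the double can represent there.
print(math.ulp(seconds_since_1904))  # 2**-21 s, about 0.48 microseconds
print(math.ulp(seconds_since_1970))  # 2**-22 s, about 0.24 microseconds
print(math.ulp(short_interval))      # 2**-49 s
```

For whole-second Unix time none of this matters, but it does if fractional seconds need to survive the conversion.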

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.

08-07-2017 09:30 AM

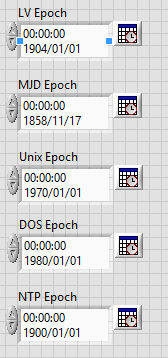

Thanks, I figured it out. When a timestamp is converted to double, the result is the total number of seconds from the LabVIEW epoch to the local time. In my code, I made a mistake in the constant that converts from the LabVIEW epoch to the Unix epoch.
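For reference, the epoch constant can be derived rather than typed in by hand. The following is a minimal Python sketch, not the VI from this thread. It relies on the LabVIEW epoch being 1904-01-01 00:00:00 UTC and the Unix epoch being 1970-01-01 00:00:00 UTC; `labview_to_unix` and the example input are hypothetical names and values chosen for illustration.

```python
from datetime import datetime, timezone

LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Seconds between the two epochs (66 years, 17 of them leap years).
EPOCH_OFFSET_S = int((UNIX_EPOCH - LABVIEW_EPOCH).total_seconds())


def labview_to_unix(labview_seconds: float) -> float:
    """Convert seconds since the LabVIEW epoch to seconds since the Unix epoch."""
    return labview_seconds - EPOCH_OFFSET_S


# Hypothetical input: 2017-08-04 19:33:27 UTC expressed in LabVIEW seconds.
example_labview_seconds = 3584720007.0

print(EPOCH_OFFSET_S)                            # 2082844800
print(labview_to_unix(example_labview_seconds))  # 1501875207.0
```

Deriving the offset from the two dates makes a mistyped constant easy to spot, since it can be compared against 2082844800.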

08-07-2017 09:53 AM - edited 08-07-2017 09:58 AM

I use globals like this so it is a little more self-documenting:

(Be aware that, due to the forum software formatting the picture for display, this is no longer a valid snippet. Download the file provided, instead.)
