- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Performance issues when operating on arrays in clusters when using in-place structures and inlining?

10-27-2014 10:56 AM - edited 10-27-2014 10:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

We have implemented a fast marching algorithm in LabVIEW 2014 (part of an eikonal solver), but struggle to see how we can get any closer performance-wise to our C implementation of the same algorithm...The latter is about 8 times faster. (We would like to keep the code in pure G to make it simpler to use on different targets...).

One of the core components of the algorithm is a binary heap, which we have defined as a cluster where one of the elements itself is an array of a cluster. A few years ago we would never have dreamed of using such a data construct or SubVIs in loops that run so many iterations - it would obviously have had a huge performance hit, but now - with VI-inlining and IPE-structures it seems to work quite well (i.e. much faster than expected initially).

Replacing the clusters with arrays of primitive data types would make the code seriously messy (instead of 1 wire and shift registers we would have 7-8, and all array replace operations etc. would have have to execute on each separate wire...)...).

So the question is - is it realistic to get much closer to a C implementation? And if so - could the use of cluster arrays within a cluster be the main source of the performance hit even when we are operating on it in-place, and inlining all subVIs operating on it (there are a *lot* of repeated calls going on in the fast marching algorithm...)? The preliminary tests we have done so far suggest no.

10-27-2014 12:22 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Hi Mads,

when I read "binary heap" I think of Red-Black trees in Variant attributes. Could you employ them?

10-28-2014 01:56 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Hello Mads,

Is there any example code you are able to share (in any way)?

There are alot of people on the fora (as you probably have seen) that see these types of performance optimizations as a nice challenge.

Sharing an extract of what you have done might make it easier to provide feedback.

Also note that IPE-structures doesn't always improve performance.

It can also "block/forbid" the compiler to do certain optimizations.

Thierry C - CLA, CTA - Senior R&D Engineer (Former Support Engineer) - National Instruments

If someone helped you, let them know. Mark as solved and/or give a kudo. 😉

10-28-2014 02:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

GerdW - the heap is used to find minimum values, for that particular use I think it will be faster than the red-black tree. The variant attribute trick is superb for key-value lookups though :-).

10-28-2014 04:46 AM - edited 10-28-2014 04:50 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

ThiCop - I can share the implementation of the fast marching algorithm (it is a well known and documented algorithm after all).

The attached code (back-converted to LV2013) with the current default inputs (set for the convenience of the test) will execute in about 1,65 seconds on our machines (just open the "Fast Marching Test.vi" and run it).

This function is called a *lot* in the overall system, so those seconds add up to a very long processing time.

10-28-2014 07:20 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Any chance of a LV 2012 version?

This kind of challenge always interests me.

10-28-2014 07:53 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

MTO,

first off: It is a pleasure to look at your code. It is not very often to see a clean code like this!

The LV compiler got better and better in improving performance over the years (as Thierry already stated). Therefore, i just checked on my machine what would be the impact of removing the IPE from your code.

The results are not very clear and obvious, but it seems that performance was increased by a little (1.9s => 1.88s) with the expected jitter of Windows. So stated numbers are an average of multiple executions.

I haven't dug into the details of the algorithm (i am not familiar with that fast march), but what struck me is your parallizable for loop. It appears that you try to get as much parallel as possible. Still (ok, about 2s is too less to get fundamental data) it seems to me that the application only uses one core for the majority of computation.

Granted, i expect the C code to be one-threaded. So it doesn't explain the performance difference. But as we dont see the C code, we cannot see differences in memory management.

Honestly, i havent seen any performance increasing optimization without questioning the algorithm itself.

Norbert

----------------------------------------------------------------------------------------------------

CEO: What exactly is stopping us from doing this?

Expert: Geometry

Marketing Manager: Just ignore it.

10-28-2014 08:25 AM - edited 10-28-2014 08:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

You really only need to apply the Pathagorean Theorm once- its lasted 2500 years without change. the optomizer probably migrates that code anyway though.

Configuring loop parallisim on the outer most loop of fast marching Test.vi sped up execution by about 4dB on my machine. and do watch for IPE's that can be removed.

that unbundle / bundle with the same cluster elements is "The Magic Pattern" and the compiler knows how to recognize it and operates inplace (go ahead and show buffer alocations and prove it to yourself) Since AT least 2011 but not in 2009 I never ran my old benchmark to try 2010 but that was a major overhaul of the optomizer and I would expect it to have been an early optomization.

On a side note: I was discussing the exact issue with another developer when Brian Powell asked me if I meant "The Magic Pattern" I said I wasn't sure--- Brian was sure that was what the team called it in all those meetings.

"Should be" isn't "Is" -Jay

10-28-2014 09:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

Norbert - The parallizing is indeed a nice improvement when we have more cores available, thanks for the tip. ![]()

I do not know how we overlooked the parallizable outer loop...(we ran VI Analyzer on it early on without getting that suggestion, but perhaps we've made changes later that made it parallizable).

The only thing I have managed to optimize a little bit so far since I posted the code earlier today was the Get Neighbours code (got rid of the Equals 0 comparisons and case structure)...

10-28-2014 11:13 AM - edited 10-28-2014 11:14 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report to a Moderator

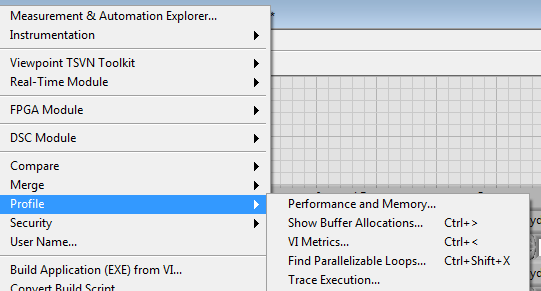

Tools>>Profile>>Find Paralizable loops is so convienient I have a custom hot key assigned to it (Ctrl+Shift+X)

I keep a couple others in that area too!

Refresh often while editing

"Should be" isn't "Is" -Jay