One data point every 10 seconds. How can I smooth the data in "real time"?

Solved! 01-19-2017 05:39 AM

Hello,

I have a program that returns one data point every 10 seconds (particle size data). As the data points scatter quite a bit, I would like to smooth the data. I used a pre-recorded data set to determine which smoothing algorithm works best (I used OriginPro 9 for that). I get the best results with a Savitzky-Golay filter.

Is it possible to implement such a filter so that it takes the last couple of values into account and returns the smoothed value, and to do that repeatedly until the process is finished?

I am afraid that this is not possible. Yet, I am not very experienced with LabVIEW and hope I am wrong 🙂

It would be great if one of you could help me.

01-19-2017 06:02 AM

Use a shift register holding a preallocated array, and use Replace Array Subset to write each new value into the array.

Since you most likely do not need a ring buffer (assumption: index position is important for you!), you can rotate the array and replace the most recent value at index [N-1].
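For readers who think in text code rather than block diagrams, the rotate-and-replace idea can be sketched in Python. This is only an illustration, not LabVIEW code: the list stands in for the preallocated array in the shift register, and `push_sample` is a hypothetical helper name.

```python
def push_sample(buffer, value):
    """Rotate a fixed-size buffer left by one and overwrite the last slot.

    The buffer keeps its length, so index N-1 always holds the newest
    sample and index 0 the oldest one.
    """
    buffer[:-1] = buffer[1:]   # shift every element one position to the left
    buffer[-1] = value         # replace the most recent value at index N-1
    return buffer


# Preallocate once, then reuse the same buffer on every iteration.
history = [0.0] * 4
for sample in [1.0, 2.0, 3.0, 4.0, 5.0]:
    push_sample(history, sample)

print(history)
```

After five samples the four-element buffer holds the last four values in arrival order, which is the layout a window-based smoothing step expects.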

----------------------------------------------------------------------------------------------------

CEO: What exactly is stopping us from doing this?

Expert: Geometry

Marketing Manager: Just ignore it.

01-19-2017 06:45 AM

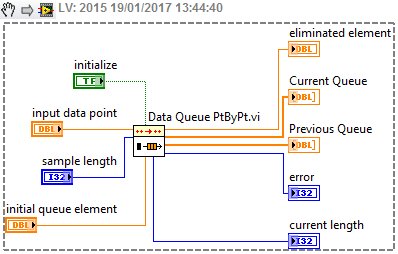

You even do not need to program this feature yourself. It is already implemented in LV (Signal Processing, Point By point, Other Functions):
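To show what "point by point" means in practice, here is a minimal Python sketch of a filter object that accepts one sample per call and returns one smoothed value. It is not a description of how the LabVIEW VI works internally. It assumes a causal Savitzky-Golay variant: a least-squares polynomial is fitted to the last `window` samples and evaluated at the newest one. The names `SmootherPtByPt` and `endpoint_weights` are made up for this example.

```python
from collections import deque


def _solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def endpoint_weights(window, order):
    """Weights w so that sum(w[i] * y[i]) equals the least-squares polynomial
    of the given order, fitted to the window, evaluated at the newest sample."""
    # Newest sample sits at t = 0, older samples at t = -1, -2, ...
    t = [i - (window - 1) for i in range(window)]
    # Normal-equation matrix V^T V for the polynomial basis 1, t, t^2, ...
    vtv = [[sum(ti ** (j + k) for ti in t) for k in range(order + 1)]
           for j in range(order + 1)]
    # The fitted value at t = 0 is the constant coefficient c[0], so
    # w = V (V^T V)^-1 e0 with e0 = (1, 0, ..., 0).
    z = _solve(vtv, [1.0] + [0.0] * order)
    return [sum(z[j] * ti ** j for j in range(order + 1)) for ti in t]


class SmootherPtByPt:
    """Keeps its own history; call update() once per new data point."""

    def __init__(self, window=5, order=2):
        self.weights = endpoint_weights(window, order)
        self.buffer = deque(maxlen=window)

    def update(self, y):
        self.buffer.append(y)
        if len(self.buffer) < self.buffer.maxlen:
            return y  # not enough history yet: pass the raw value through
        return sum(w * v for w, v in zip(self.weights, self.buffer))


smoother = SmootherPtByPt(window=5, order=2)
for raw in [10.2, 9.7, 10.4, 10.1, 9.9, 10.6, 10.0]:
    print(raw, smoother.update(raw))
```

Evaluating the fit at the newest sample avoids the delay of a centered window, at the cost of less noise suppression. Which trade-off the built-in VI makes is not stated in this thread.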

01-19-2017 06:47 AM

Nice one, Blokk.... never looked into that subpalette 🙂

01-19-2017 07:24 AM

Thank you, Blokk and Norbert,

Blokk, that VI helps me a lot! Thank you.

01-19-2017 08:49 AM

You might also consider oversampling followed by filtering/averaging/fitting, and then discarding all but one result every 10 seconds.

For example: sample at 1 kHz and set up a loop to read 1000 samples at a time. Every 10 iterations of the loop, retain the latter portion of that 1000-sample set, do your processing on it, and turn it into a single representative data point. This is *much* more real-timeish than running your signal processing off data from 10, 20, 30+ seconds ago.
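A rough Python sketch of that loop, under stated assumptions: `read_chunk` is a stand-in that simulates the DAQ read with Gaussian noise, the "latter portion" is arbitrarily taken as the last 20 % of the read, and the processing step is a plain mean. None of these choices come from the thread.

```python
import random
from statistics import mean

SAMPLES_PER_READ = 1000   # one second of data at 1 kHz
READS_PER_POINT = 10      # one output point every 10 seconds
KEEP_FRACTION = 0.2       # assumption: keep the last 20 % of the final read


def read_chunk(rng, true_value, n=SAMPLES_PER_READ, noise=0.5):
    """Stand-in for the DAQ read: returns n simulated noisy samples."""
    return [true_value + rng.gauss(0.0, noise) for _ in range(n)]


def reduce_chunk(chunk, keep_fraction=KEEP_FRACTION):
    """Average the latter portion of a chunk into one representative value."""
    keep = max(1, int(len(chunk) * keep_fraction))
    return mean(chunk[-keep:])


def acquire_points(n_points, true_value=10.0, seed=0):
    """Read continuously, but emit only one reduced point per 10 reads."""
    rng = random.Random(seed)
    points = []
    for i in range(n_points * READS_PER_POINT):
        chunk = read_chunk(rng, true_value)
        if (i + 1) % READS_PER_POINT == 0:
            points.append(reduce_chunk(chunk))
    return points


print(acquire_points(3))
```

The nine intermediate reads are discarded here. They still have to be read so that the final chunk contains the samples taken right before the 10-second mark.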

-Kevin P

01-19-2017 09:06 AM

It is a nice idea.

OP: do not forget that it is important to decouple your DAQ from your data processing. A producer/consumer design gives you this. Even better, this way the DAQ loop sends an array of doubles through the queue to the consumer, so you do not need an additional queue, shift register, etc.
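As a text-language analogy for the producer/consumer pattern, here is a small Python sketch using `queue.Queue` and two threads. The chunk contents, the `None` stop sentinel, and the averaging step are assumptions made for the example. In LabVIEW the same roles would be played by two parallel loops and a queue.

```python
import queue
import threading


def producer(q, n_chunks):
    """Acquisition loop: pushes one array of doubles per iteration."""
    for i in range(n_chunks):
        chunk = [float(i)] * 4   # stand-in for one array of doubles from the DAQ
        q.put(chunk)
    q.put(None)                  # sentinel: tells the consumer to stop


def consumer(q, results):
    """Processing loop: blocks until data arrives, then reduces each chunk."""
    while True:
        chunk = q.get()
        if chunk is None:
            break
        results.append(sum(chunk) / len(chunk))


def run(n_chunks=5):
    q = queue.Queue()
    results = []
    t_cons = threading.Thread(target=consumer, args=(q, results))
    t_prod = threading.Thread(target=producer, args=(q, n_chunks))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return results


print(run())
```

Because the queue buffers whole chunks, slow processing in the consumer does not stall the acquisition loop. It only lets the queue grow.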

01-19-2017 09:34 AM

What exactly do you mean?

I get one data point every 10 seconds and nothing in between. I could probably read the same value for 10 seconds at a high sampling rate but I don't see the benefit of it.

01-19-2017 09:37 AM

I am using a rather large QMH design. I should be covered there.

01-19-2017 01:30 PM

Qbach wrote:

...I could probably read the same value for 10 seconds at a high sampling rate but I don't see the benefit of it.

Your question was about smoothing your data. Since you aren't satisfied simply living with the raw reading you take 1 time every 10 seconds, I'm suggesting that the estimate you can make by oversampling and locally smoothing right near those 10 second marks will be an improvement. Otherwise, the only data available to run a smoothing algorithm is 10, 20, 30+ seconds old. I find it hard to believe that'd be *more* relevant.

The whole point is that you won't be reading the same value for 10 seconds at a high sampling rate. Variations or noise in your process, your sensor, or your signal path will see to that. If none of those things were changing, you wouldn't have asked about smoothing in the first place.

-Kevin P