Non-zero timeout causes chart refresh instability

03-17-2021 07:02 AM - edited 03-17-2021 07:20 AM

Greetings everyone,

I came across an interesting case that drove me crazy for the past day. In the attached code (LV2017), "Sound Input Read.vi" accepts a timeout value. This defaults to 10 s, which I assumed I would never hit, so I left it alone. However, my chart always suffered from unstable updates, with lots of jumping, flickering, and minor pixel intensity variations in the spectrogram. Also, while the loop time was generally on the order of single-digit milliseconds, it would occasionally spike to 200 ms. After much digging, and on a whim, I finally set this timeout to zero and wrapped the code in a case structure that does nothing on timeout. It works flawlessly now.

However, I am still scratching my head as to why this happens. Even if turning off the timeout checker in the .dll saves some time, I don't see how it could have such a drastic effect on the operation of the program. Can anyone chime in on what is going on here?
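Because a LabVIEW block diagram cannot be shown as text, here is a Python sketch of the workaround described above: read with a zero timeout and do nothing in the timeout case. `FakeSoundInput`, `read()` and `loop_iteration()` are hypothetical names standing in for the driver buffer and the main loop; this is not the NI sound API, only a model of "return immediately and report a timeout when not enough data is buffered".

```python
import collections


class FakeSoundInput:
    """Hypothetical stand-in for the driver buffer behind Sound Input Read.vi.

    Not the NI API: it only models a read that returns immediately with a
    timeout indication (None) when fewer than n samples are buffered.
    """

    def __init__(self):
        self._buf = collections.deque()

    def push(self, samples):
        # Simulates the driver appending freshly captured samples.
        self._buf.extend(samples)

    def read(self, n, timeout_s):
        # Enough data buffered: hand back exactly n samples, oldest first.
        if len(self._buf) >= n:
            return [self._buf.popleft() for _ in range(n)]
        # Zero timeout: give up immediately and signal "timed out" with None.
        if timeout_s == 0:
            return None
        raise NotImplementedError("blocking reads are not modelled in this sketch")


def loop_iteration(dev, n, on_data):
    """One main-loop iteration of the workaround: timeout 0, skip on timeout."""
    data = dev.read(n, timeout_s=0)
    if data is None:
        return False  # the "do nothing on timeout" case
    on_data(data)  # e.g. FFT + chart update
    return True
```

A loop built this way returns immediately when no data is ready, so without any wait it can spin a CPU core; in LabVIEW one would typically place a small Wait in the timeout case.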

03-17-2021 11:09 AM

(I don't have an explanation and have not looked at it in detail. I simply confirm that I see the same in LabVIEW 2020.)

03-17-2021 01:22 PM

Each iteration, this VI performs an FFT (Fast Fourier Transform), which is obviously processor intensive.

Take a look at Tools/Profile/Show Buffer Allocations. Arrays and Clusters are selected by default, and it will show a black dot (LV2019) wherever an array copy will be created (memory allocation is slow compared to computation). In older versions of LabVIEW, the buffer allocation marker used to be red.

If you can't see the black dots, toggle the Arrays checkbox in the Buffer Allocations window and you will see the dots appear and disappear. I counted 4 array buffers and 2 cluster buffers allocated each iteration.

The main loop has no timing (wait) to free up processor time for other tasks.

03-17-2021 04:41 PM

@richjoh wrote:

Each iteration, this VI performs an FFT (Fast Fourier Transform), which is obviously processor intensive.

This does not explain why there is a difference depending on the timeout setting. With and without a timeout, the loop rate is nearly identical, but it occasionally spikes dramatically, and only if the timeout is nonzero.
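Since the average loop rate looks nearly identical in both configurations, rare spikes only show up in per-iteration timing. Here is a Python sketch (not LabVIEW) of recording each iteration's duration and summarizing it. `time_loop` and `summarize_loop_times` are hypothetical helpers, and the 10x-median spike threshold is an arbitrary, illustrative choice.

```python
import statistics
import time


def time_loop(body, iterations):
    """Run body() repeatedly and return each iteration's duration in ms."""
    durations_ms = []
    for _ in range(iterations):
        t0 = time.perf_counter()
        body()
        durations_ms.append((time.perf_counter() - t0) * 1000.0)
    return durations_ms


def summarize_loop_times(durations_ms, spike_factor=10.0):
    """Median and max loop time, plus the number of iterations that exceed
    spike_factor x median (an arbitrary, illustrative spike threshold)."""
    med = statistics.median(durations_ms)
    spikes = sum(1 for d in durations_ms if d > spike_factor * med)
    return {"median_ms": med, "max_ms": max(durations_ms), "spikes": spikes}
```

For example, `summarize_loop_times([2.0, 3.0, 2.0, 200.0, 3.0, 2.0])` gives a median of 2.5 ms but a max of 200 ms and one spike: the median alone hides exactly the kind of outlier described here.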

03-18-2021 09:31 AM

@ConnerP wrote:

In the attached code (LV2017), "Sound Input Read.vi" accepts a timeout value. [...] After much digging and on a whim, I finally set this timeout to zero and wrapped the code in a case structure to do nothing on timeout. It works flawlessly now.

Not sure what you mean here by "accepts a timeout". I don't see a control for this timeout value. Your Signal Plot and Spectrogram Plot chart history buffers are set to 102400 points, and both charts are set to 10 seconds on the front panel. Adding this up, 2 × 102400 points plus your HUGE buffered arrays and clusters plus the FFT calculation via a DLL will overrun your threads and cause the GUI to freeze.

Whatever setting it to zero fixes, you have too much memory being buffered each iteration.

03-18-2021 11:38 AM

@richjoh wrote:

Not sure what you mean here by "accepts a timeout".

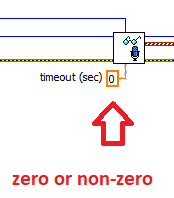

Look again (now I show the label of the constant for clarity. Default is 10):

@richjoh wrote:

Whatever setting it to zero fixes, you have too much memory being buffered each iteration.

The memory use is independent of the timeout setting. With timeout = 0, all problems disappear and LabVIEW handles the SAME memory thrashing gracefully. The problem is elsewhere.

(This code is actually adapted from a LabVIEW shipping example)

03-18-2021 12:29 PM

OK, thanks for pointing out the timeout control.

In "Sound Input Read.vi", the timeout control is passed as a parameter to a DLL. There are many simple ways of improving this VI. If the sample size ("number of samples/ch") is known, pass in a 2D array of that same size. Next, the sequence structure used just to get the current time in the VI kills any possibility of multithreading in that section of LV code. Then this VI has all kinds of buffer allocations going on, e.g. in "vi.lib\sound2\lvsound2.llb\_2DArrToArrWfms.vi".

I don't expect fast operation from this code. Then we come to the FFT section, where there is a trade-off between processing speed and sample length.

In his code, I see that the samples/ch constant of 10240 sizes a buffer that is then read using a constant of 128 (bytes) wired into the main loop. The first 128 bytes are read; the remaining bytes are never read.

03-18-2021 03:27 PM

First, thanks for the confirmation of this issue Altenbach.

richjoh, thanks for taking an interest here, but I think you are really hung up on this memory thing. The chart history is so long because I was tweaking every single setting trying to figure out what the heck was going on. I didn't reset everything to the defaults (from the built-in example) before posting the code here.

I agree the waveform packaging is less than optimal for this case. If I actually have to implement this code in something that isn't a quick and dirty demo, I will clean it up.

As far as the buffer size vs samples read, there is no data being overlooked. The main loop is reading data from the buffer faster than the driver is filling it up.
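The argument here is that a small read size does not by itself lose data; what matters is whether the loop drains the buffer at least as fast as the driver fills it. A Python sketch of that producer/consumer balance follows. `RingBuffer` and `simulate` are hypothetical; the overwrite-oldest-when-full policy is an assumption made only so lost samples can be counted, not known driver behavior, and the production rates are illustrative.

```python
import collections


class RingBuffer:
    """Fixed-capacity buffer that overwrites the oldest samples when full.

    Overwrite-on-full is an assumption about the driver, chosen only to make
    lost data countable.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = collections.deque()
        self.overwritten = 0

    def write(self, samples):
        for s in samples:
            if len(self.data) == self.capacity:
                self.data.popleft()  # oldest sample is lost
                self.overwritten += 1
            self.data.append(s)

    def read_up_to(self, n):
        # Consumer takes whatever is available, at most n samples.
        k = min(n, len(self.data))
        return [self.data.popleft() for _ in range(k)]


def simulate(capacity, produced_per_tick, read_per_tick, ticks):
    """Return (samples read, samples lost) for a producer/consumer run."""
    rb = RingBuffer(capacity)
    total_read = 0
    for _ in range(ticks):
        rb.write(range(produced_per_tick))
        total_read += len(rb.read_up_to(read_per_tick))
    return total_read, rb.overwritten
```

With a 10240-sample buffer, 100 samples produced and up to 128 read per tick, `simulate(10240, 100, 128, 50)` returns `(5000, 0)`: nothing is lost. Only when production outpaces the reads, as in `simulate(256, 200, 128, 10)`, does the buffer start overwriting data.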

I admit the code is messy because I turned so many knobs trying to figure out what was going on. I am at home right now and can't double-check, but I am 99% certain that if one were to open the stock example from NI and wire a 0 to the timeout, the chart would update more smoothly.

03-18-2021 04:30 PM

What happens when you set the timeout to -1?

03-19-2021 02:27 AM

There is something wrong in the dll call SIRead in Sound Input Read.vi. It has nothing to do with buffers or other stuff. If you strip away everything but the dll call, the behavior is still there.

It's as if the DLL reports that it has finished collecting data much later than when it actually collected the data, and by setting the timeout to 0 you bypass a DLL-internal timeout loop.
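One concrete way to read the "DLL-internal timeout loop" idea: if a nonzero-timeout read sleeps between availability checks, it returns at the next check after the data is ready, not when the data actually arrives. The Python sketch below models only that hypothesis; nothing in this thread confirms how SIRead waits internally, both function names are hypothetical, and the 200 ms poll interval in the usage example is an illustrative value, not a measured one.

```python
def polling_read_returns_at(ready_at_ms, poll_interval_ms, timeout_ms):
    """When a hypothetical sleep-and-poll read returns (integer ms).

    The read checks for data at t = 0, poll, 2*poll, ... and returns at the
    first check on or after the moment the data is ready. Returns None if
    that check would fall after timeout_ms (simplified timeout handling).
    """
    # Integer ceiling division: number of poll periods until a check sees data.
    t = -(-ready_at_ms // poll_interval_ms) * poll_interval_ms
    return None if t > timeout_ms else t


def zero_timeout_returns_at(ready_at_ms, loop_period_ms):
    """With timeout 0 the caller re-checks once per (fast) loop iteration."""
    return -(-ready_at_ms // loop_period_ms) * loop_period_ms
```

With data ready 12 ms after the call, `polling_read_returns_at(12, 200, 10000)` returns 200, whereas a 2 ms zero-timeout loop sees the data at 12 ms. If the data is already there when the read is called (`ready_at_ms=0`), both return at once, so in this model the penalty is paid only when the loop catches up with the driver, which would make the delay occasional rather than constant.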

I made a test VI, which is a stripped-down version, but I added some info plots such as samples/s and loop time. If you like, you can also disable everything but the DLL call in Sound Input Read.vi, but that will not change anything.

Setting timeout to -1 does not change anything.

If the number of samples/ch passed to Read is set above 1/4 of the value used at Init (2560 in this case), the reads halt when the timeout is 0, but they work with a nonzero timeout. The 1/4 threshold seems to apply regardless of the number of channels, sample rate, or bits.
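If one relies on the zero-timeout workaround, the 1/4 observation suggests validating the read size up front. Below is a Python sketch of such a guard; `check_read_size` is a hypothetical helper, and the rule it enforces is only the empirical threshold reported above (2560 = 10240 / 4), not documented NI behavior.

```python
def check_read_size(samples_at_init, timeout_s, samples_per_read):
    """Guard based on the empirical 1/4 observation in this thread.

    Not documented NI behavior: with timeout 0, reads larger than
    samples_at_init // 4 were reported to halt, so reject them up front.
    Returns the observed limit.
    """
    limit = samples_at_init // 4
    if timeout_s == 0 and samples_per_read > limit:
        raise ValueError(
            f"{samples_per_read} samples/ch exceeds {limit} "
            f"(1/4 of {samples_at_init}) with a zero timeout"
        )
    return limit
```

With 10240 samples/ch at Init, a zero-timeout read of 2560 passes while 2561 raises; with a nonzero timeout the size is not restricted, matching the behavior described.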

The original LabVIEW example wires the same number of samples/ch to Sound Input Configure.vi as to Sound Input Read.vi. Maybe these VIs aren't meant to be used with a different number of samples than at Init.