Newbie question about storing data

Solved! 06-25-2018 08:45 AM

I would like to create an AI data holding area, say, Buffer3000, that stores 3000 data points. Every second I will read 1000 AI samples, store them in Buffer3000, take the average of the whole buffer, and display that average. This process will continue throughout my test: each new set of 1000 samples is added, and the oldest 1000 are pushed out.

How do I actually do this? Thank you.

06-25-2018 08:58 AM

Arrays?

------------------

Heads up! NI has moved LabVIEW to a mandatory SaaS subscription policy, along with a big price increase. Make your voice heard.

06-25-2018 09:16 AM

I get the idea now. Prepare 3 arrays, each 1000 elements. Each time, write to a different array. Thank you.

06-25-2018 09:29 AM

That wasn't my idea but I guess if that is the way that makes sense for your application, it could work. The easier method IMHO would be to initialize a 3000 element array, concatenate 1000 elements to the end, delete 1000 elements from the beginning of the array and then average all elements.
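Since a LabVIEW diagram cannot be shown as text, here is a rough Python sketch of the append-and-trim idea described above. The names `push_block`, `mean`, `buffer`, and `block` are made up for illustration, and the block of ones stands in for one second of real AI readings.

```python
def push_block(buffer, block):
    """Return a buffer with `block` appended and the same number of
    oldest samples dropped from the front, so the length stays constant."""
    return buffer[len(block):] + list(block)


def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


# Initialize a 3000-element buffer (zeros are only placeholders).
buffer = [0.0] * 3000

# Stand-in for 1000 freshly acquired samples (hypothetical data).
block = [1.0] * 1000

# Append the new 1000 samples, drop the oldest 1000, then average everything.
buffer = push_block(buffer, block)
average = mean(buffer)
```

Note that until three real blocks have arrived, the placeholder zeros are still part of the average.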

06-25-2018 10:18 AM

Thank you. It would have taken me half a day to come up with the code.

06-25-2018 10:43 AM

@knowlittle wrote:

I get the idea now. Prepare 3 arrays, each 1000 elements. Each time, write to a different array. Thank you.

Or use a 3x1000 2D array and overwrite the oldest row whenever a new set of 1000 pts comes in.

wrote:

The easier method IMHO would be to initialize a 3000 element array, concatenate 1000 elements to the end, delete 1000 elements from the beginning of the array and then average all elements.

That does not look very good. You typically don't want to constantly grow and shrink arrays in a fast loop. Look for a solution that works "in-place" as above. You could even use a 3000 element 1D array and replace the oldest 1000 point section without changing the size.

Also note that the mean will be incorrect for the first two calls, again something that could be addressed easily.
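As a text-based illustration of the in-place idea, here is a minimal Python sketch of a fixed-size 1D buffer in which the oldest 1000-point section is overwritten, and in which the mean only covers sections that already hold real data. The class name `BlockRingBuffer` and its attributes are hypothetical, not anything from LabVIEW.

```python
class BlockRingBuffer:
    """Fixed-size buffer that overwrites its oldest block in place."""

    def __init__(self, block_size=1000, n_blocks=3):
        self.block_size = block_size
        self.n_blocks = n_blocks
        # Allocated once; the length never changes afterwards.
        self.data = [0.0] * (block_size * n_blocks)
        self.next_block = 0     # slot that will be overwritten next
        self.blocks_filled = 0  # number of slots holding real data

    def push(self, block):
        if len(block) != self.block_size:
            raise ValueError("block has the wrong length")
        start = self.next_block * self.block_size
        # Same-length slice assignment replaces values without resizing.
        self.data[start:start + self.block_size] = block
        self.next_block = (self.next_block + 1) % self.n_blocks
        self.blocks_filled = min(self.blocks_filled + 1, self.n_blocks)

    def mean(self):
        # Slots fill in order 0, 1, 2, so the valid data is a prefix
        # until the buffer is full; placeholders are never averaged.
        valid = self.blocks_filled * self.block_size
        if valid == 0:
            raise ValueError("no data yet")
        return sum(self.data[:valid]) / valid
```

With this bookkeeping the first two calls average 1000 and 2000 points respectively instead of mixing in uninitialized values.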

If performance matters, note that calculating the mean of all 3000 elements does a lot of duplicate calculations, because 66% of the elements are unchanged.
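One way to avoid re-summing the unchanged elements is to keep a running total and only add the new block's sum and subtract the evicted block's sum. The following Python sketch shows that idea; `RunningBlockMean` is a hypothetical name, and blocks are assumed to be non-empty.

```python
from collections import deque


class RunningBlockMean:
    """Mean over the most recent `n_blocks` blocks using a running total."""

    def __init__(self, n_blocks=3):
        self.n_blocks = n_blocks
        self.sums = deque()  # (sum, count) for each block still in the window
        self.total = 0.0     # sum of all samples currently in the window
        self.count = 0       # number of samples currently in the window

    def push(self, block):
        block_sum, block_len = sum(block), len(block)
        self.sums.append((block_sum, block_len))
        self.total += block_sum
        self.count += block_len
        # Once the window is over-full, remove the oldest block's contribution.
        if len(self.sums) > self.n_blocks:
            old_sum, old_len = self.sums.popleft()
            self.total -= old_sum
            self.count -= old_len
        return self.total / self.count
```

Each update then touches only the 1000 new samples. Over a very long test, floating-point rounding in the running total may drift slightly compared with a full re-sum.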

You don't explain if you need to carry around the full dataset, but if all you need is the mean, an array of three elements would be sufficient. Whenever 1000 points come in, take the mean and replace the oldest of the three elements, then take the mean of the three valid elements. You could even use "mean ptbypt" with a sample length of 3.

06-25-2018 12:37 PM

@altenbach wrote:

You could even use "mean ptbypt" with a sample length of 3.

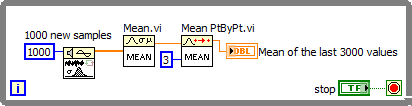

Here's what this could look like....

Modify as needed. Now you can even reinitialize the data by wiring the top of mean ptbypt to a latch action boolean.
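For readers without LabVIEW at hand, the idea can be approximated in Python: average each incoming block, keep only the last three block means, and average those. This is a sketch and not the Mean PtByPt VI itself. The names `make_block_averager`, `update`, and the `initialize` flag are hypothetical, and averaging only the values received so far during the first calls is an assumption of this sketch.

```python
from collections import deque


def make_block_averager(sample_length=3):
    """Return a function that averages the most recent block means."""
    recent = deque(maxlen=sample_length)  # oldest mean is dropped automatically

    def update(block, initialize=False):
        # Optional reset, loosely analogous to reinitializing the history.
        if initialize:
            recent.clear()
        recent.append(sum(block) / len(block))
        # Average only the block means collected so far.
        return sum(recent) / len(recent)

    return update


average_blocks = make_block_averager(sample_length=3)
first = average_blocks([1.0, 1.0])   # only one block so far
second = average_blocks([3.0, 3.0])  # mean of two block means
```

The mean of block means equals the mean of all samples only because every block has the same length (1000 points in this thread).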

06-25-2018 01:39 PM - edited 06-25-2018 02:01 PM

I only care about the average of 3000 numbers. I will take your advice: calculate the average immediately after acquiring 1000 data points, and use MeanPtByPt with a sample length of 3. This is really smart.

By the way, I didn't know MeanPtByPt could be used just like in your example. Apparently, I don't have to save previous averages; the VI takes care of it automatically. Really cool.