Memory management when converting a set of PNG images into a large 3D array

Solved! 09-14-2017 12:13 PM

@paulygon wrote:

http://digital.ni.com/public.nsf/allkb/07227F8B6D29283B86256CB1008329BC

So I guess you either move to the 64-bit version of LV, or you write your code so that it creates files below 4 GB...

Interesting. I think I'll try installing the 64-bit version tomorrow; it might just work with the version of the code I am running right now...

~thanks

Hmm, I think you could just get lots of RAM (like 16-32 GB?) for your PC, and handle all data in memory using LV x64:

http://digital.ni.com/public.nsf/allkb/AC9AD7E5FD3769C086256B41007685FA

But be aware that fewer toolkits are supported in 64-bit LV than in 32-bit LV!

http://digital.ni.com/public.nsf/allkb/71E9408E6DEAD76C8625760B006B6F98

09-14-2017 01:00 PM

@paulygon wrote:

The calculations I need to perform are quite simple: basically, I'm calculating a value for each voxel/node, and the result is a 3D array of SGL floating-point numbers, which is the largest array I am currently using. I then take these values and sum them in the vertical direction from slice 0 to slice N (if that makes sense).

What does the value for each voxel depend on? (1) Only on itself (e.g. scaling)? (2) On neighboring voxels in the same plane? (3) On neighboring voxels in nearby planes? (4) On all voxels?

If the calculation for each voxel does not depend on vertically far pixels, all you need is a single 2D SGL array in memory where you store the running sum, i.e. you increment each value after processing each image. You should be able to do that using only a small amount of memory. Do you really ever need the full 3D array?
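The running-sum idea can be sketched in text form as follows. This is a minimal Python illustration rather than LabVIEW code, and it assumes case (1), where each voxel value depends only on its own pixel. The names `voxel_value`, `running_sum` and `demo_slices` are hypothetical, and the hard-coded slices stand in for reading the PNG files one at a time.

```python
from array import array


def voxel_value(pixel):
    # Hypothetical per-voxel calculation that depends only on the pixel itself.
    return 0.5 * pixel


def running_sum(slices, width, height):
    """Accumulate voxel values slice by slice into a single 2D buffer.

    `slices` yields one slice at a time as a flat, row-major sequence of
    0/1 pixels, so only one slice plus the output buffer is held in memory.
    """
    # Typecode "f" is single precision, comparable to an SGL array.
    total = array("f", [0.0]) * (width * height)
    for pixels in slices:
        if len(pixels) != width * height:
            raise ValueError("slice size does not match width * height")
        for i, pixel in enumerate(pixels):
            total[i] += voxel_value(pixel)
    return total


def demo_slices():
    # Stand-in for loading PNG slices from disk, one per iteration.
    yield [1, 0, 1, 0, 1, 1]
    yield [1, 1, 0, 0, 1, 0]
    yield [0, 0, 1, 0, 1, 1]


result = running_sum(demo_slices(), width=3, height=2)
print(list(result))  # [1.0, 0.5, 1.0, 0.0, 1.5, 1.0]
```

The memory needed is one slice plus one output buffer, regardless of how many slices are processed.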

09-15-2017 05:56 AM

@altenbach wrote:

If the calculation for each voxel does not depend on vertically far pixels, all you need is a single 2D SGL array in memory where you store the running sum, i.e. you increment each value after processing each image. You should be able to do that using only a small amount of memory. Do you really ever need the full 3D array?

It's taken me a while, but I've pretty much come to this conclusion: there really is no need for a 3D array (other than that it makes the code a bit simpler). However, the calculation does depend on vertically far pixels. My solution at the moment is to try to re-slice the array in the horizontal direction.

@altenbach wrote:

What does the value for each voxel depend on? (1) Only on itself (e.g. scaling)? (2) On neighboring voxels in the same plane? (3) On neighboring voxels in nearby planes? (4) On all voxels?

To answer your question: (2) On neighboring voxels in the same plane, but looking from either the X or Y horizontal direction (if we take it that all the slices from the 3D printer are taken looking from the Z/vertical direction). I could even split the array into 1280x800 1D sections, each containing a single pixel from every Z slice, as the calculation for each pixel depends only on the values of the pixels in the layers underneath it... if that makes sense?

@Blokk I am currently installing LV 64 bit as my PC has lots of RAM (16GB) - fingers crossed I don't need one of those toolkits... I don't think I do...

~thanks
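If each pixel really depends only on the pixels underneath it in the same column, one way to avoid both the 3D array and the re-slicing might be to walk the slices from the bottom up and carry a small per-pixel state. The Python sketch below illustrates that under a strong assumption: that everything the calculation needs from the lower layers can be summarized in a fixed-size state per pixel. The rule used here (a solid voxel's value is the number of solid voxels below it) is purely hypothetical and is not the calculation from this thread.

```python
from array import array


def column_sums(slices_bottom_up, n_pixels):
    """Sum per-voxel values along Z while keeping only 2D buffers in memory."""
    below = array("l", [0]) * n_pixels    # state: solid voxels seen so far per column
    total = array("f", [0.0]) * n_pixels  # running sum of voxel values per column
    for pixels in slices_bottom_up:
        for i, pixel in enumerate(pixels):
            if pixel:
                # Hypothetical rule: the value of a solid voxel is the number
                # of solid voxels underneath it in the same column.
                total[i] += below[i]
                below[i] += 1
    return total


# Four tiny slices of two pixels each, ordered from the bottom layer upwards.
layers = [[1, 0], [1, 0], [0, 1], [1, 0]]
sums = column_sums(layers, n_pixels=2)
print(list(sums))  # [3.0, 0.0]
```

If the real calculation needs the full history of a column rather than a summary of it, this approach does not apply and the column-wise split described above would still be needed.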

09-18-2017 08:18 AM

Hi all,

Thanks for all of your help with this. I installed LV 64-bit on Friday, and for now it has allowed me to get around the main memory issues I have been having. I still managed to push the system to its limit by trying larger and larger arrays (the upper limit seems to be <9000 layers), but this will get me by for the time being!

I am still working on improving the performance/efficiency but for now I will mark this as solved - thanks @Blokk for the solution.

Thanks to everyone for the help and advice with this!

~Paul

09-18-2017 08:27 AM

Since you're using boolean information, you can compress the data by a factor of 8 by bit-shifting 8 booleans (each of which takes 1 byte under the hood) into 1 byte.

/Y
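As a text-form illustration of the packing idea, here is a minimal Python sketch that stores 8 booleans per byte and unpacks them again. The function names are hypothetical, and the bit order (least significant bit first) is an assumption made for this example, not something specified in the thread.

```python
def pack_bits(flags):
    """Pack a sequence of booleans into bytes, 8 flags per byte (LSB first)."""
    packed = bytearray((len(flags) + 7) // 8)
    for i, flag in enumerate(flags):
        if flag:
            packed[i // 8] |= 1 << (i % 8)
    return packed


def unpack_bits(packed, count):
    """Recover the first `count` booleans from a packed byte sequence."""
    return [bool((packed[i // 8] >> (i % 8)) & 1) for i in range(count)]


flags = [True, False, True, True, False, False, False, False, True, True]
packed = pack_bits(flags)
print(list(packed))                               # [13, 3]
print(unpack_bits(packed, len(flags)) == flags)   # True
```

The original length has to be kept alongside the packed bytes, because the last byte is padded with zeros when the number of flags is not a multiple of 8.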

09-18-2017 08:54 AM

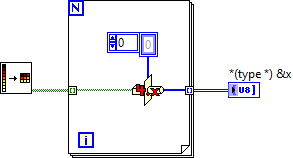

Continuing on my data compression idea, something like this should work.

/Y

09-18-2017 09:17 AM

@Yamaeda wrote:

Continuing on my data compression idea

You mean 2) in the first answer?

>2) Since the array of bytes only contains bits, can you pack them? So there are 8 bits in a U8, instead of 1? This would reduce the data to 12.5%. Of course at the expense of code complexity.

I'd do it like this (it's a bit less verbose):

09-18-2017 09:32 AM - edited 09-18-2017 09:33 AM

wiebe@CARYA wrote:

@Yamaeda wrote:

Continuing on my data compression idea

You mean 2) in the first answer?

>2) Since the array of bytes only contains bits, can you pack them? So there are 8 bits in a U8, instead of 1? This would reduce the data to 12.5%. Of course at the expense of code complexity.

I'd do it like this (it's a bit less verbose):

That was a beautiful solution, I never thought about the 4.x-setting!

/Y

09-18-2017 09:50 AM

That was a beautiful solution, I never thought about the 4.x-setting!

/Y

Thanks! I still remember the shock when they changed it in LV5!

09-18-2017 10:13 AM

Well, we already have the 2D boolean array in memory, and converting it to a compressed boolean array does not really help much unless we can deallocate the initial array. Also, the calculations on each pixel are done in floating point (SGL), and that's where the memory really starts to fill up. The storage of the boolean array is peanuts in comparison. Instead of squashing mosquitoes, we need to focus on the elephant. 😄

As I said, a smart ordering of operations and only operating on a single 2D output array by doing the summing in place is probably the solution.
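To put rough numbers on the mosquito-versus-elephant comparison, the short Python calculation below estimates the footprints. It assumes 1280x800 slices (the size mentioned earlier in the thread), 1 byte per boolean, 4 bytes per SGL value, and a hypothetical count of 9000 layers picked near the limit reported above. The function name `footprint` is made up for this example.

```python
def footprint(width, height, layers, bytes_per_bool=1, bytes_per_sgl=4):
    """Return estimated array sizes in bytes for one slice and for the full volume."""
    pixels = width * height
    return {
        "bool_slice": pixels * bytes_per_bool,
        "packed_slice": (pixels + 7) // 8,  # 8 booleans per byte
        "sgl_slice": pixels * bytes_per_sgl,
        "bool_volume": pixels * bytes_per_bool * layers,
        "sgl_volume": pixels * bytes_per_sgl * layers,
    }


# Assumed dimensions: 1280x800 slices, 9000 layers (hypothetical count).
sizes = footprint(1280, 800, 9000)
for name, size in sizes.items():
    print(f"{name:>12}: {size / 1e6:,.1f} MB")
```

Under these assumptions a single 2D SGL array is about 4.1 MB, while a full 3D SGL array would be about 36.9 GB and a full 3D boolean array about 9.2 GB, which is why keeping only one 2D output array is attractive.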