Memory is full (~ 10 GB datalog file)

Solved! 03-18-2014 06:05 AM

Hi,

I developed an application that generates temporary datalog files (*.csv), logging every 10 ms. After a ~3-day test I need to merge all the temporary files into a single file.

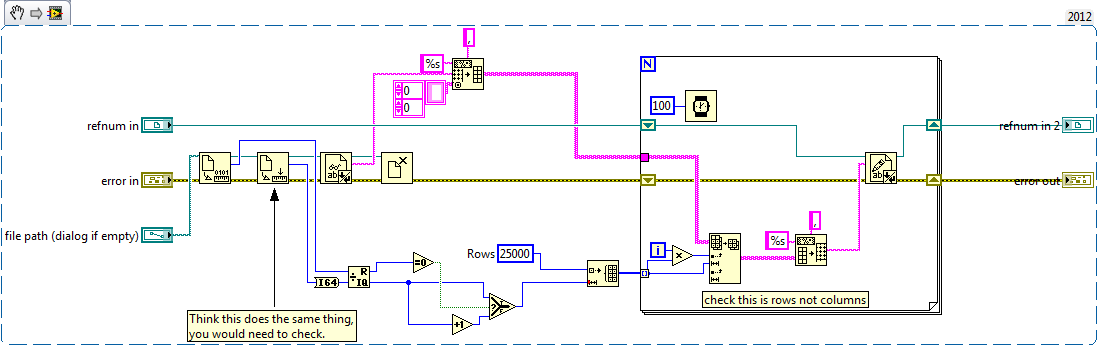

Implementation: to merge into a single file, I calculate the total number of lines in each temp file, then read 25k rows per iteration and write them to the main datalog file. When the file size reaches ~400 MB, I get the LabVIEW "Memory is full" error. I used "Request Deallocation" from the palette inside the loop, but it didn't work.

I am attaching a screenshot of the code. Please suggest a solution.

03-18-2014 06:48 AM

1) Request Deallocation does not work the way you think it does. You will need to bring the read of the temp file, the conversion, and the write of the data to the main log file into one VI to release the memory allocated for each temp file read. (It is doing nothing at the moment.)

2) You can replace the while loop with a FOR loop by wiring in an initialised array of Q elements (default value 25000) and, if R != 0, adding the value out of R to the bottom of the array. This will allow LabVIEW to preallocate memory for the operation.

3) Take your Read From Spreadsheet File outside the loop. Every time you run it you open and close the temp file, which eats memory. You could just read the file once at the start and then index the array of elements, converting them into strings (see the method described above).

4) Can you replace the while loop with a FOR loop based on the number of files?

5) Try using Set File Size and Set File Position to pre-allocate the size of your destination file before you start your initial read.

James

03-18-2014 07:21 AM

Try this to see if it helps

03-18-2014 07:41 AM

Your biggest issue is the Read From Spreadsheet File. Why are you reading it out as an array of strings just to turn it into a string? Just read the file as a string and then write it to your main file. Nice and simple.

With your file getting as large as it is, I would highly recommend changing over to TDMS. A text file that large will drag down almost any program trying to process it. TDMS will make life a lot easier.
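
Since LabVIEW block diagrams cannot be shown as text, here is a rough Python analogue of the "read as a string, write to the main file" idea. It is only a sketch under assumptions: the function name `merge_temp_files`, the file paths, and the 1 MB buffer size are hypothetical and do not come from the original VI. The point it illustrates is that the temp files are appended as raw bytes and never parsed into rows.

```python
import shutil


def merge_temp_files(temp_paths, dest_path, buffer_size=1024 * 1024):
    """Append each temp file to dest_path as raw bytes, without parsing rows.

    temp_paths, dest_path and buffer_size are illustrative assumptions.
    """
    with open(dest_path, "wb") as dest:
        for path in temp_paths:
            with open(path, "rb") as src:
                # copyfileobj streams the data in buffer_size pieces, so
                # memory use stays near buffer_size for any file size.
                shutil.copyfileobj(src, dest, buffer_size)
```

Because nothing is converted to an array and back, the only data held in memory at any moment is one buffer's worth of bytes.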

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

03-18-2014 07:56 AM - edited 03-18-2014 07:57 AM

D'oh!! - I should have seen that - I kind of assumed the OP wanted to ensure line consistency, but even that is no excuse for the code I just posted - Go on, Rube it!!!

(I only really noticed the 3 different open instances of the same file for no reason)

James

03-18-2014 09:55 AM

Hi, thanks to all for the quick responses. Your suggestions helped me a lot in solving the problem.

Here is a screenshot of the new code (attached).

Here I am reading 100 MB in each iteration and writing it to a new file.

03-18-2014 10:08 AM - edited 03-18-2014 10:13 AM

You don't need to keep getting and setting the file position. It is all taken care of for you when you read from the file. Just tell it the number of bytes to read.

I would also change your FOR loop to a WHILE loop. Keep reading your 100 MB each iteration, but look for error code 4 (End of File) out of your file read. Then you know all of your data has been transferred, and you don't have to do any math at the start.

I would also eliminate that wait. You want that loop to run as fast as it can.
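
For readers who want a text version of that loop, here is a minimal Python sketch of the same pattern: read a fixed number of bytes per iteration, let the file handle track its own position, and stop at end of file. The function name `copy_until_eof` and the paths are hypothetical. Python does not raise an equivalent of LabVIEW's error code 4; `read()` simply returns an empty result at end of file, so that is what the loop checks.

```python
CHUNK_BYTES = 100 * 1024 * 1024  # 100 MB = 104857600 bytes


def copy_until_eof(src_path, dest_path, chunk_bytes=CHUNK_BYTES):
    """Append src_path to dest_path in fixed-size chunks; return bytes copied.

    The names and the append mode are assumptions made for illustration.
    """
    total = 0
    with open(src_path, "rb") as src, open(dest_path, "ab") as dest:
        while True:
            # No seek is needed: each read continues where the last one ended.
            chunk = src.read(chunk_bytes)
            if not chunk:  # empty read means end of file
                break
            dest.write(chunk)
            total += len(chunk)
    return total
```

There is no wait in the loop and no up-front calculation of the iteration count, which matches the two simplifications suggested above.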

03-18-2014 10:39 AM

Modified as per your comment. Thank you.

03-18-2014 10:50 AM

03-18-2014 11:53 PM

It was a mistake: 100 MB = 104857600 bytes.

Thanks, GerdW.

Hi,

I have another issue regarding plotting. I need to plot these files in a graph for a report.

For this I used "Read From Spreadsheet File.vi", which produces a memory full error.

I can't use "Read from Text File" here because I don't know the exact number of bytes in each row.

I have attached the screenshot.

Please suggest a solution.
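
One possible approach, sketched in Python because a block diagram cannot be shown as text: rows of unknown length can still be read in fixed-size byte chunks if the incomplete row at the end of each chunk is carried over to the next one. The sketch also keeps only every Nth row, on the assumption that a report graph does not need all of the roughly 26 million rows that 3 days of 10 ms logging produces. The function name `decimated_rows`, the `keep_every` factor, and the chunk size are all hypothetical.

```python
def decimated_rows(path, keep_every=100, chunk_bytes=1024 * 1024):
    """Yield every keep_every-th text row, reading the file in byte chunks.

    keep_every and chunk_bytes are illustrative assumptions, not values
    taken from the thread.
    """
    leftover = b""
    row_index = 0
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_bytes)
            if not chunk:
                break
            lines = (leftover + chunk).split(b"\n")
            # The last piece may be an incomplete row; keep it for next time.
            leftover = lines.pop()
            for line in lines:
                if row_index % keep_every == 0:
                    yield line.rstrip(b"\r").decode("ascii", errors="replace")
                row_index += 1
    # A final row without a trailing newline is still a row.
    if leftover and row_index % keep_every == 0:
        yield leftover.rstrip(b"\r").decode("ascii", errors="replace")
```

Memory use is bounded by the chunk size plus the rows that are kept, so the full file never has to be loaded at once. Plain decimation can hide short spikes; whether that is acceptable depends on what the report needs to show.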