04-20-2018 01:44 PM

Hello,

I'm running LabVIEW 2014 and I'm seeing a strange problem: after my code has been running for a long time, data backs up while I'm saving it to a file. For example, after collecting data for 20 minutes, I stop my setup, and even though it is off, the program continues to save/display data for 75 seconds or so. In shorter tests there is only a small amount of lag, but in longer tests it builds up. Is there a way to prevent this? I've built a basic state machine and know there are probably several areas I can improve, so any help would be much appreciated. I need to eliminate the lag entirely to avoid errors in the data. I've attached an example of my LabVIEW project. Thanks.

04-20-2018 02:20 PM

This would make more sense as a Producer/Consumer than a State Machine. The idea is to separate your tasks into parallel loops with one loop sending data to the next with a Queue. You can have a main DAQ loop, an Analysis loop, and a Logging loop.

04-20-2018 02:37 PM

Would that allow for increased consumption rates that could match the necessary production rates? Because I am also having visual lag issues with my indicators. What would be the best way to match all these rates to prevent any lag? Especially between the Boolean and analog signals.

04-20-2018 05:53 PM

Here are some immediate suggestions that will help you improve your LabVIEW coding skills:

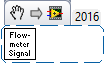

You probably don't know, but it is Flowmeter Signal SubVI. Here it is, again, from your Block Diagram, after I used the Icon Editor to create an Icon consisting of a Filled Rectangle (OK, square) and three lines of text -- much easier to understand and notice if you used the wrong VI ...

Bob Schor

04-20-2018 07:52 PM

I'm not sampling continuously at 20 Hz. I have my sample rate and number of samples defined on the front panel. Additionally, wouldn't I have the same issue with that buildup of data if I tried to sample at a higher rate, for example? How can I have the data saved/displayed/etc. faster? I understand that changing to a parallel setup would result in no delay for the signals to be read, but how would this improve the time from being read to being saved/displayed?

04-21-2018 11:48 AM

@Poxford wrote:

I'm not sampling continuously at 20 Hz. I have my sample rate and number of samples defined on the front panel. Additionally, wouldn't I have the same issue with that buildup of data if I tried to sample at a higher rate, for example? How can I have the data saved/displayed/etc. faster? I understand that changing to a parallel setup would result in no delay for the signals to be read, but how would this improve the time from being read to being saved/displayed?

In a Serial (State Machine) design, all of the processing starts after the DAQmx Read finishes, and the next DAQmx Read has to wait until all of the processing finishes, i.e. the times sum.

In a Parallel (P/C) design, the first DAQmx Read finishes, the data are put on a Queue and sent to the Consumer which starts processing the data. Meanwhile, the Producer immediately calls the next DAQmx Read, which "blocks" until the read is done, meaning all of the available CPU time is devoted to the Consumer. So the Consumer gets the data as fast as the Producer provides it, and since the Producer is mostly "waiting for the next bunch of data to arrive", the Consumer can use all that "idle" time for processing.

Suppose, however, that some Consumer loops take a little longer than a Producer Loop. No problem -- the Producer asks for its "share" of the CPU, gets it, and simply puts more data on the Queue. As soon as the Consumer finishes the previous data chunk, it starts on the new one. As long as, on average, the Consumer is faster than the Producer, the P/C pair will run at the speed of the Producer, with the Consumer processing taking basically no time (or whatever time it would take if you just processed one chunk of data).
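Since LabVIEW diagrams cannot be pasted as text, here is a minimal Python sketch of the same idea, using threads and `queue.Queue` in place of LabVIEW loops and Queues. The timings (`READ_TIME`, `PROCESS_TIME`) and the helpers `read_chunk` and `process_chunk` are made-up stand-ins, not anything from the attached project. It only illustrates that in the serial version the two times add up, while in the parallel version the processing overlaps the next blocking read.

```python
import queue
import threading
import time

READ_TIME = 0.05      # hypothetical stand-in for a blocking DAQmx Read
PROCESS_TIME = 0.035  # hypothetical stand-in for analysis, display, and logging
N_CHUNKS = 5


def read_chunk(i):
    """Simulate a blocking read: wait, then return a chunk of samples."""
    time.sleep(READ_TIME)
    return [float(i)] * 10


def process_chunk(chunk):
    """Simulate processing that takes a fixed amount of time."""
    time.sleep(PROCESS_TIME)
    return sum(chunk)


def run_serial():
    """State-machine style: each read waits for the previous processing."""
    start = time.perf_counter()
    results = [process_chunk(read_chunk(i)) for i in range(N_CHUNKS)]
    return results, time.perf_counter() - start


def run_parallel():
    """Producer/Consumer style: two loops connected by an unbounded queue."""
    q = queue.Queue()
    results = []

    def producer():
        for i in range(N_CHUNKS):
            q.put(read_chunk(i))  # enqueue and go straight back to reading

    def consumer():
        # For simplicity the consumer knows how many chunks to expect.
        for _ in range(N_CHUNKS):
            results.append(process_chunk(q.get()))

    start = time.perf_counter()
    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, time.perf_counter() - start
```

Calling `run_serial()` and `run_parallel()` returns the same results, but the parallel version should finish in roughly the total read time plus one processing step, rather than the sum of all reads and all processing steps.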

Bob Schor

For the sake of argument, let's assume your DAQmx setup is for Continuous sampling of 1000 points at 1 kHz. This takes 1 second. Let's assume your "processing" of the data takes 0.7 seconds. Now let's examine a Serial (State Machine) vs. a Parallel (P/C) design, and see what happens.

Serial: At T=0, you read Buffer 1. At T=1, you run the rest of the State Machine to process Buffer 1. At T=1.7, you read Buffer 2. At T=2, you run the rest of the State Machine to process Buffer 2. At T=2.7, you read Buffer 3.
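The serial timeline above can be reproduced with a few lines of arithmetic. This is only a sketch in Python (times in milliseconds to avoid rounding noise); the function names are hypothetical, and it assumes that with continuous sampling the hardware finishes buffer k at k seconds no matter what the software is doing. The 1.3-second case in the usage note is an invented value, added to show how lag accumulates once processing takes longer than acquisition.

```python
def serial_timeline(n_buffers, acquire_ms=1000, process_ms=700):
    """Return (buffer, read_start, data_ready, process_end) for a serial loop.

    Assumption: continuous sampling, so buffer k is complete at
    k * acquire_ms regardless of when the software asks for it.
    """
    rows = []
    t = 0
    for k in range(1, n_buffers + 1):
        read_start = t
        # The read returns when the buffer is full, or at once if it already is.
        data_ready = max(read_start, k * acquire_ms)
        process_end = data_ready + process_ms
        rows.append((k, read_start, data_ready, process_end))
        t = process_end  # the next read cannot start before processing ends
    return rows


def lag_per_buffer(rows, acquire_ms=1000):
    """How long each buffer sat in hardware before the software received it."""
    return [data_ready - k * acquire_ms for k, _, data_ready, _ in rows]
```

With the default 700 ms of processing, `serial_timeline(3)` gives read starts at 0, 1700, and 2700 ms with data arriving at 1000, 2000, and 3000 ms, matching the T values above, and `lag_per_buffer` stays at zero. With a hypothetical `process_ms=1300`, the lag grows by 300 ms per buffer, which is the kind of buildup described in the original question.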

04-23-2018 11:15 AM

Hi Bob,

Thanks for your help. Would you recommend that I try to limit the number of states/case structures I use to consume the data? This would require putting more into a single structure, but would reduce the number of different cases for each structure.

Thanks

04-23-2018 11:46 AM

I recommend you use a Producer/Consumer Design to process the data. You want a Producer Loop that does nothing but acquire the data. Note that it can (and should) have code preceding it that "sets up" the DAQ (initialize, process the "Start" button), but once you start Acquiring, you should keep acquiring until you are done (decide for yourself how you want to signal that).

When the "Read Data" command (whether DAQmx Read, VISA Read, or whatever) finishes, put the Data on a Queue and export it to a Consumer Loop that dequeues the data and does whatever processing (including plotting, averaging, writing to disk, etc.) needs to be done. As long as you can, on average, "consume" at a comparable rate with "produce", you'll be fine. Note that the Producer loop should be spending >95% of its time "waiting for data", thus allowing the Consumer to use all of the CPU cycles. Pretty efficient.

When you create the Queue, don't put a size on it at first, but allow it to grow. You can put a Queue "Status" request in the Consumer and record the maximum size of the Queue to make sure that it doesn't just keep growing (a sign that the Consumer is not keeping up with the Producer, so do a little less in this loop).
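As a rough text-based analogue of that Queue "Status" check, here is a Python sketch in which the consumer records the largest backlog it ever sees. The function name `consume_with_depth` and the `handle` callback are hypothetical. Note that Python documents `Queue.qsize()` as only approximate when other threads are active, which is good enough for spotting a queue that keeps growing.

```python
import queue


def consume_with_depth(q, n_items, handle):
    """Dequeue n_items, pass each to handle, and return the largest backlog seen."""
    max_depth = 0
    for _ in range(n_items):
        # Number of elements waiting just before this dequeue.
        max_depth = max(max_depth, q.qsize())
        handle(q.get())
    return max_depth
```

If the returned value stays small over a long run, the consumer is keeping up. If it climbs steadily with run length, the consumer is doing too much work per chunk.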

One issue with multiple loops is how to stop them. One (poor, IMHO) way to stop a Producer/Consumer loop is to have the Producer, which should know when it's time to stop, release the Queue, potentially (!!) causing an Error in the Consumer that can serve as an Exit signal. A better way (again, IMHO) is to use a "Sentinel" as a "Time to Quit" signal. Say your Queue is an Array of Dbl, or a String. When the Producer exits, it leaves the Queue intact, but sends an "impossible Data" set, e.g. an Empty Array, or an Empty String. The Consumer, when it dequeues the Data, checks for this "sentinel" value and wires the result to a Case Statement. If the Sentinel is present, send "True" to stop the Loop, and when the Consumer exits, release the Queue (the Producer has already exited, so this is perfectly safe). Otherwise, the Consumer processes the (valid) data.
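The Sentinel idea translates directly to text-based languages. Below is a minimal Python sketch, assuming the queue carries lists of floats and that an empty list can never be real data. The names `producer`, `consumer`, and `run` are illustrative only, and the per-chunk averaging is a placeholder for whatever processing the Consumer actually does. Python queues need no explicit release step, so that part of the LabVIEW pattern has no counterpart here.

```python
import queue
import threading

SENTINEL = []  # "impossible" data: a real read never returns an empty array


def producer(q, chunks):
    """Enqueue every chunk, then send the sentinel as the time-to-quit signal."""
    for chunk in chunks:
        q.put(chunk)
    q.put(SENTINEL)


def consumer(q, results):
    """Process chunks until the sentinel arrives, then exit the loop."""
    while True:
        chunk = q.get()
        if len(chunk) == 0:  # sentinel detected: stop cleanly
            break
        results.append(sum(chunk) / len(chunk))  # placeholder processing


def run(chunks):
    """Start both loops, wait for them to finish, and return the results."""
    q = queue.Queue()
    results = []
    c = threading.Thread(target=consumer, args=(q, results))
    p = threading.Thread(target=producer, args=(q, chunks))
    c.start()
    p.start()
    p.join()
    c.join()
    return results
```

Because the sentinel travels through the same queue as the data, the consumer is guaranteed to have processed every valid chunk before it sees the stop signal.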

Note that the new Stream Channel Wire has a built-in Sentinel terminal called "last element? (F)" -- if you are using a Stream Channel instead of a Queue, the Producer, when sending the last Data element, would set this terminal to True, and the Consumer would use the "last element?" output to decide whether or not to exit the Consumer. As the Channel Reader and Writer manage the Channels, there is no Obtain or Release to worry about. [If none of this makes sense to you, don't worry -- it's a fairly new LabVIEW Feature that made its "public" debut in LabVIEW 2016 that only some of us use, so far].

Bob Schor

04-23-2018 12:28 PM

Do you think using bundle by name in that producer loop to convert all the double data to a string to put in the queue would take too long? If not could I use a queue with dynamic data? I'm having trouble getting that data type to work.

04-23-2018 06:59 PM

Your Acquire loop uses the Dreaded DAQ Assistant to produce data using the Evil Dynamic Data Wire, which you turn into a 1D array of Dbls. That's what you want for a Queue, a Queue of Array of Dbls. You wire the Array into Enqueue Element (which happens almost instantaneously) and just as fast, the entire Array is presented to the Consumer Loop. The data are "teleported" from Producer to Consumer -- don't do any processing in the Producer, do it all in the Consumer.

Bob Schor