Inconsistent Timing Units

10-19-2020 11:54 PM

Hello,

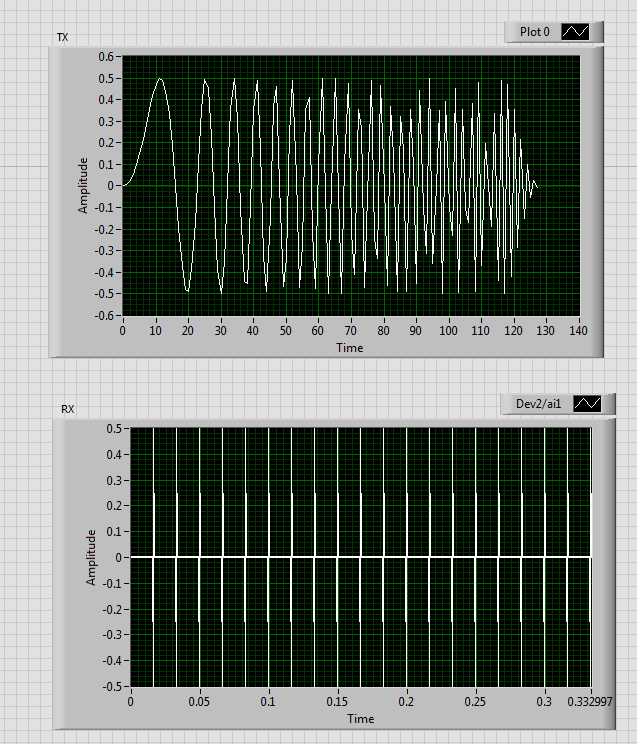

I've created a VI that transmits a Chirp on one channel and receives on another.

I'm a little confused about what unit of time the TX graph uses.

As seen below, it ranges from 0 to 140 units of time. However, when I measure the actual signal on an oscilloscope, the signal is 412 µs long.

How can I display actual time on my graph?

Additionally, the RX graph seems to be in a different unit of time to the TX.

My end goal is to be able to calculate TOF from the TX and RX signal. However, I am unsure how I can do this with these units of time not being consistent.

Thank you!

10-20-2020 12:01 AM

Your chirp pattern creates a 1D array of Y values with no timing information at all. When you convert it to dynamic data (why??), the conversion cannot know what the timing is.

For the graph, you can set the dt of the x-axis to the time increment between points (assuming the spacing is regular).
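To illustrate what dt does, here is a rough Python sketch (not LabVIEW code) of how a sample index maps to time once dt is known. The numbers are assumptions for illustration only: it supposes the 140 points on the TX graph span the 412 µs measured on the oscilloscope. In practice dt should come from the actual update rate of the output channel, and `time_axis` is just a hypothetical helper name.

```python
def time_axis(num_samples, dt, t0=0.0):
    """Return the time of each sample: t0 + i * dt (regular spacing assumed)."""
    return [t0 + i * dt for i in range(num_samples)]

# Illustrative values only (assumption): 140 points covering a 412 us pulse.
num_samples = 140
duration_s = 412e-6
dt = duration_s / num_samples  # time increment between points, in seconds

t = time_axis(num_samples, dt)
print(f"dt = {dt * 1e6:.3f} us, last sample at {t[-1] * 1e6:.3f} us")
```

With dt set this way, the x-axis reads in seconds instead of sample index.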

10-20-2020 12:33 AM

Thanks for the suggestion!

How do I set the dt of the x-axis? (Sorry, I'm quite new to LabVIEW!)

10-20-2020 12:56 AM

Hi mcollins,

@mcollins27 wrote:

How do I set the dt of the x-axis? (Sorry, I'm quite new to Labview!)

Right-click the graph, open the properties dialog. See the properties for the X axis. Set the multiplier…

Most of the properties in the dialog can also be set using property nodes of the graph.

I recommend building a waveform (a special LabVIEW datatype!) instead of converting a 1D array of samples into a DDT (dynamic data type) wire. A waveform includes timing information and is much more user-friendly than DDT wires…
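As a rough illustration of why carrying timing with the data helps with the TOF goal from the original question, here is a Python sketch (not LabVIEW code). The `Waveform` class only mimics the t0/dt/Y idea of the LabVIEW waveform, and `estimate_delay` is a hypothetical helper that uses a simple sliding correlation, which is one common approach and not necessarily what the final VI should do. It assumes TX and RX share the same dt and that the RX record is at least as long as the TX record.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Waveform:
    """Minimal stand-in for a waveform: start time, sample spacing, samples."""
    t0: float       # start time in seconds
    dt: float       # time between samples in seconds
    y: List[float]  # sample values


def estimate_delay(tx: Waveform, rx: Waveform) -> float:
    """Estimate the delay of tx inside rx by sliding correlation.

    Assumes both waveforms use the same dt and rx is at least as long as tx.
    """
    if abs(tx.dt - rx.dt) > 1e-15:
        raise ValueError("TX and RX must share the same dt")
    max_lag = len(rx.y) - len(tx.y)
    if max_lag < 0:
        raise ValueError("RX record must be at least as long as TX")

    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlation score of tx against the rx window starting at this lag.
        window = rx.y[lag:lag + len(tx.y)]
        score = sum(a * b for a, b in zip(tx.y, window))
        if score > best_score:
            best_lag, best_score = lag, score

    # Convert the best lag from samples to seconds, including any t0 offset.
    return (rx.t0 - tx.t0) + best_lag * tx.dt


# Made-up test data (assumption): a short pattern delayed by 7 samples.
tx = Waveform(t0=0.0, dt=1e-6, y=[1.0, -1.0, 1.0, 1.0, -1.0])
rx = Waveform(t0=0.0, dt=1e-6, y=[0.0] * 7 + tx.y + [0.0] * 3)
delay = estimate_delay(tx, rx)
print(f"estimated delay: {delay * 1e6:.2f} us")
```

Because both signals carry the same dt, the lag in samples converts directly to seconds, which is the consistency the original question was missing.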