How to read big files

10-10-2011 11:08 AM

Hi all,

I have a big text file (about 140MB) containing data I need to read and save (after analysis) to a new file.

The text file contains 4 columns of data (so each row has 4 values to read).

When I try to read the whole file at once I get a "Memory full" error message.

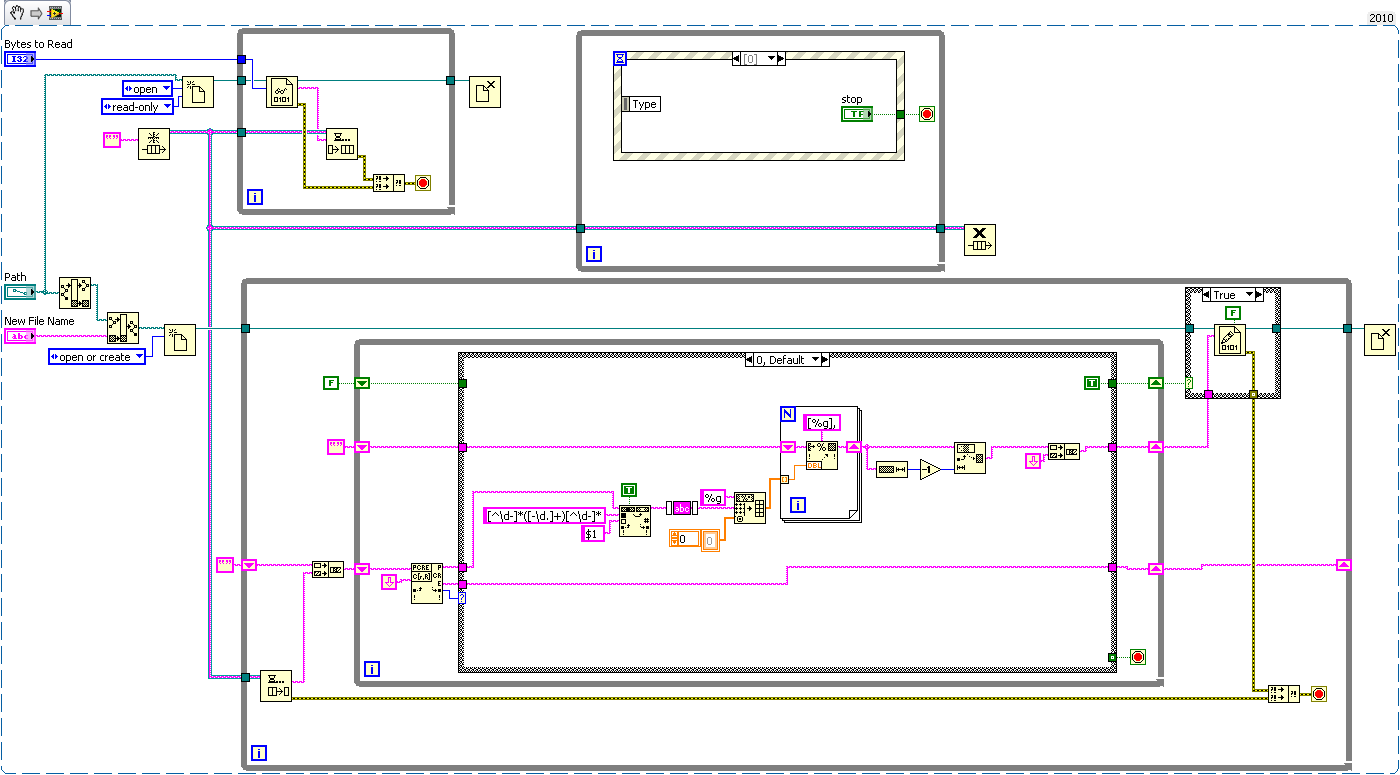

I tried to read only a certain number of lines each time and then write it to the new file. This is done using the loop in the attached picture (this is just a portion of the code). The loop is repeated as many times as needed.

The problem is that for such big files this method is very slow, and if I try to increase the number of lines read each time, I still see the PC's free memory slowly decreasing in the performance window....

Does anybody have a better idea how to implement this kind of task?

Thanks,

Mentos.

10-10-2011 11:28 AM

I would recommend reading the file in chunks (a good size is about 10 KB) and processing each chunk. It is possible that you will have leftover data at the end of a chunk (a partial line), so you will need a shift register to hold that last bit of data, which is then prepended to the next chunk you read. If you need to process the data line by line, do that after reading the large chunk by operating on the string data itself, rather than reading the file line by line.
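Since LabVIEW code is graphical, here is a rough Python analogue of the chunk-plus-leftover idea, offered as a sketch rather than a translation of any VI in this thread. The function name `iter_lines_chunked` and the 10 K chunk size are illustrative assumptions; the `leftover` variable plays the role of the shift register.

```python
import io
from typing import Iterator, TextIO

CHUNK_SIZE = 10 * 1024  # characters per read (roughly 10 KB for ASCII data); an assumption


def iter_lines_chunked(f: TextIO, chunk_size: int = CHUNK_SIZE) -> Iterator[str]:
    """Yield complete lines from f while holding only one chunk in memory.

    `leftover` acts like the shift register: it keeps the partial line at the
    end of one chunk and is prepended to the next chunk.
    """
    leftover = ""
    while True:
        chunk = f.read(chunk_size)
        if not chunk:  # end of file
            break
        pieces = (leftover + chunk).split("\n")
        leftover = pieces.pop()  # last piece may be an incomplete line
        for line in pieces:
            yield line  # process each complete line from the in-memory string
    if leftover:
        yield leftover  # final line without a trailing newline


# Tiny chunks on purpose, so lines are split across chunk boundaries.
lines = list(iter_lines_chunked(io.StringIO("1 2 3 4\n5 6 7 8\n9 10 11 12"), chunk_size=5))
```

With a real file you would pass `open(path)` instead of the `io.StringIO` object; memory use then depends on the chunk size, not on the file size.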

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

10-11-2011 03:43 AM

Hi Mark,

Sorry for the late reply.

I tried your recommendations and it really works better.

I still see the free memory decreasing slowly, but I hope it won't be critical.

I'll have to do some more checking.

Thanks!

10-11-2011 09:49 AM

Can you post your code? You shouldn't have any issues with memory if this is done correctly. However, without seeing the code I can't tell what is wrong.

Mark Yedinak

10-11-2011 12:20 PM

Your inner loop can be replaced with the Spreadsheet String to Array function.
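The point is to convert a whole block of text into a 2D array in one step instead of an element-by-element inner loop. A loose Python analogue is sketched below; the name `spreadsheet_string_to_array` is hypothetical (it only mirrors the LabVIEW function's purpose), and whitespace-separated columns are an assumption about the file format.

```python
from typing import List, Optional


def spreadsheet_string_to_array(chunk: str, delimiter: Optional[str] = None) -> List[List[float]]:
    """Parse a whole block of text into rows of numbers in one pass.

    delimiter=None splits on any run of whitespace (an assumed format);
    pass a tab character for strictly tab-separated data.
    """
    return [
        [float(value) for value in line.split(delimiter)]
        for line in chunk.splitlines()
        if line.strip()  # skip blank lines, e.g. after the final newline
    ]


rows = spreadsheet_string_to_array("1 2 3 4\n5 6 7 8\n")
columns = list(zip(*rows))  # transpose: columns[0] holds the first column
```

Transposing at the end gives per-column access, which is handy if the columns need to be written out separately.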

/Y

10-12-2011 07:07 AM

Hi Mark & Yamaeda,

I ran some tests and came up with 2 different approaches (see the VIs and example data file attached).

The Read lines aproach.vi reads a chunk with a specified number of lines, parses it and then saves the chunk to a new file.

This worked more or less OK, depending on the delay. However, in reality I'll need to write the first 2 columns to the file and only after that the 3rd and 4th columns. So I think I'll need to read the file twice: the first time to take the first 2 columns and save them to the file, and then a second loop to take the other 2 columns and save them...
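A two-pass approach does not have to cost extra memory, because each pass can stream through the file. The Python sketch below assumes whitespace-separated input and tab-separated output; the function names are hypothetical.

```python
import io
from typing import Sequence, TextIO


def write_columns(src: TextIO, dst: TextIO, cols: Sequence[int]) -> None:
    """One streaming pass over src, writing only the selected columns to dst."""
    for line in src:  # Python buffers file reads, so only a small part is in memory
        fields = line.split()
        if fields:  # ignore blank lines
            dst.write("\t".join(fields[c] for c in cols) + "\n")


def columns_in_two_passes(src: TextIO, dst: TextIO) -> None:
    """Write columns 1-2 for every row, then columns 3-4 for every row."""
    for cols in ((0, 1), (2, 3)):
        src.seek(0)  # rewind the source file for each pass
        write_columns(src, dst, cols)


out = io.StringIO()
columns_in_two_passes(io.StringIO("1 2 3 4\n5 6 7 8\n"), out)
# out.getvalue() is "1\t2\n5\t6\n3\t4\n7\t8\n"
```

Reading the file twice doubles the I/O time but keeps memory flat; the alternative of holding columns 3 and 4 in memory until the end would bring back the original memory problem.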

Regarding the free memory: I see it drop a bit during the run, and it goes up again when I run the VI again.

The Read bytes approach reads a specified number of bytes in each chunk until it has read the whole file. Only then does it save the chunks to the new file. No parsing is done here (this is just for the example); it only reads and writes, to see whether the free memory stays the same.

I used 2 methods for saving: the String Subset function and the Replace Substring function.

When using Replace Substring (the disabled part), the free memory was 100% stable, but it was very slow.

When using the String Subset function, the data was saved VERY fast, but some free memory was consumed.

The reading part also consumed some free memory, at a rate that depended on the delay I used.
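The stable-but-slow versus fast-but-growing trade-off is roughly the difference between reusing one preallocated buffer and creating a new string copy for every piece. As a loose Python analogue (not a translation of either VI), the sketch below reads into a single preallocated `bytearray` with `readinto` and writes each chunk out immediately, so memory stays flat without per-chunk copies. The function name and the 10 K buffer size are assumptions.

```python
import io
from typing import BinaryIO


def copy_with_reused_buffer(src: BinaryIO, dst: BinaryIO, chunk_size: int = 10 * 1024) -> int:
    """Copy src to dst through one preallocated buffer; return the byte count."""
    buf = bytearray(chunk_size)  # allocated once and reused on every iteration
    view = memoryview(buf)       # slicing a memoryview does not copy the bytes
    total = 0
    while True:
        n = src.readinto(buf)    # fills buf in place, returns how many bytes were read
        if not n:                # 0 at end of file
            break
        dst.write(view[:n])      # write only the valid part, without copying it
        total += n
    return total


src = io.BytesIO(b"x" * 25000)
dst = io.BytesIO()
copied = copy_with_reused_buffer(src, dst)
```

Writing each chunk as soon as it is read (instead of collecting all chunks first) is what keeps only one chunk's worth of data in memory.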

Which method looks better?

What do you recommend changing?

10-13-2011 04:40 PM

Hi Mentos,

Both methods look fine. It really depends on what works best for you. I would use the Read bytes aproach.vi, but choosing between Replace Substring and the String Subset function is really up to you. If time is not a big issue, I would go with Replace Substring. If time is an issue, go with String Subset and just keep an eye on memory.

Tim O

National Instruments

10-13-2011 07:27 PM - edited 10-13-2011 07:30 PM

Here is more of what I was describing. For posting here I didn't create any subVIs, but I probably would if this were going into an application. The processing I do on the data is for example purposes only, to illustrate how you can parse the chunks of data. Naturally you would need to implement your specific processing, but this illustrates processing the data one line at a time. The loop with the event structure is simply there to allow you to exit the program. The queue data could be a cluster containing a command and the data. This way you could post an element to the queue indicating that all the data has been read from the file and that the processing loop should exit.
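A Python sketch of the same producer/consumer layout: a read loop enqueues chunks, a processing loop splits them into lines, and a message with a command field tells the consumer that the file is finished. The `Message` class stands in for the command-plus-data cluster; its field names, the "data"/"done" commands, and all function names are hypothetical, and collecting the lines into a list is only placeholder processing.

```python
import io
import queue
import threading
from dataclasses import dataclass
from typing import List, TextIO


@dataclass
class Message:
    """Plays the role of the cluster: a command plus its data (names are hypothetical)."""
    command: str  # "data" or "done"
    data: str = ""


def read_loop(src: TextIO, q: queue.Queue, chunk_size: int) -> None:
    """Producer: read fixed-size chunks and enqueue them, then signal completion."""
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        q.put(Message("data", chunk))
    q.put(Message("done"))  # all data has been read from the file


def processing_loop(q: queue.Queue, lines_out: List[str]) -> None:
    """Consumer: split chunks into lines, carrying a partial line to the next chunk."""
    leftover = ""
    while True:
        msg = q.get()
        if msg.command == "done":
            if leftover:
                lines_out.append(leftover)  # last line had no trailing newline
            break
        pieces = (leftover + msg.data).split("\n")
        leftover = pieces.pop()
        lines_out.extend(pieces)  # placeholder for real per-line processing


def process_file(src: TextIO, chunk_size: int = 10 * 1024) -> List[str]:
    q: queue.Queue = queue.Queue()
    lines: List[str] = []
    consumer = threading.Thread(target=processing_loop, args=(q, lines))
    consumer.start()
    read_loop(src, q, chunk_size)  # the producer runs in the calling thread
    consumer.join()                # returns once the "done" message is handled
    return lines


result = process_file(io.StringIO("1 2 3 4\n5 6 7 8\n"), chunk_size=4)
```

Using an explicit "done" command, rather than destroying the queue from the producer side, lets the consumer flush its last partial line before it exits.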

Mark Yedinak

10-13-2011 07:43 PM

There was an issue with writing to the file. Here is the fixed version.

Mark Yedinak

10-14-2011 09:22 AM

I should have noted that your Read bytes VI would have problems with very large files. Even though you are reading in chunks you are still reading the entire contents of the file into memory before doing anything with it. You need to only keep a portion of the file in memory at any one time.

The example I posted above doesn't require the parallel loops and queues. You could process each chunk of data within the read loop as well. I showed the queued method because it is a good approach when reading from a device: your reads occur very quickly, and you avoid buffer overruns that may happen if your processing takes a fair amount of time.

The queued implementation can also result in excessive memory usage if you don't process the data in a timely fashion. If you read the entire file and queue the chunks before ever dequeuing anything you again will have the entire contents of the file in memory at one time.
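One common way to guard against this (not something shown in this thread) is to give the queue a maximum size, so the reader blocks whenever the processing loop falls behind. LabVIEW's Obtain Queue has a max queue size input for this purpose; the Python sketch below shows the same idea with `queue.Queue(maxsize=...)`. The function name, the character count used as "processing", and the peak-depth bookkeeping are illustrative assumptions.

```python
import io
import queue
import threading
from typing import Optional, TextIO, Tuple


def bounded_pipeline(src: TextIO, chunk_size: int, max_chunks: int) -> Tuple[int, int]:
    """Read src in chunks through a queue that holds at most max_chunks items.

    Returns (characters processed, largest queue depth seen by the producer).
    """
    q: queue.Queue = queue.Queue(maxsize=max_chunks)
    processed = 0

    def consume() -> None:
        nonlocal processed
        while True:
            item: Optional[str] = q.get()
            if item is None:  # sentinel: the producer has finished
                break
            processed += len(item)  # stand-in for the real processing

    consumer = threading.Thread(target=consume)
    consumer.start()
    peak = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        q.put(chunk)  # blocks while the queue is full, so reading waits for processing
        peak = max(peak, q.qsize())
    q.put(None)
    consumer.join()
    return processed, peak


text = "1 2 3 4\n" * 1000
total, peak = bounded_pipeline(io.StringIO(text), chunk_size=64, max_chunks=4)
```

With this bound, at most about `max_chunks * chunk_size` characters wait in the queue at any time, no matter how large the file is.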

Mark Yedinak
