
How to check if data is ready on visa/serial

Solved! 09-24-2021 03:45 PM


@Dobrinov wrote:

@johntrich1971 wrote:

@Dobrinov wrote:

@johntrich1971 wrote:

@Dobrinov wrote:

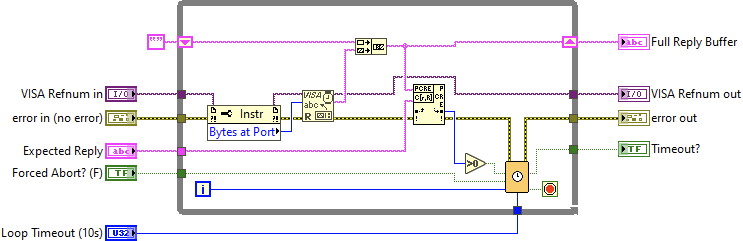

Try using Bytes at Port. Have a look at the example. If there are no bytes to read, the VI will simply go through and do nothing, without blocking the execution or wasting time.

EDIT: Never mind, got beaten to it.

Note that this is NOT the way to use Bytes at Port. If you use Bytes at Port, do it the way crossrulz said: check whether there are bytes at the port, but don't use that value as the number of bytes to read.

There are perfectly valid reasons to do it EXACTLY this way, and the requested solution seemed to be one of them.

No, the requested solution did NOT need the race condition that your approach introduces. With your code it is entirely possible to read a partial message: Bytes at Port is read before the entire message has arrived, so only part of the message is read. While this approach may work most of the time, it is certainly NOT the right thing to do. The only reason I know of to use Bytes at Port to tell the read function how many bytes to read is when you don't know how many bytes to read and there is no termination character (perhaps crossrulz, who is a serial communications expert, could think of some other situation where it might be appropriate). For this application, use Bytes at Port to determine that there are bytes at the port, and then wire a large number of bytes to read so the read continues all the way to the termination character, eliminating the possibility of the race condition.
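A minimal Python sketch of the difference (a LabVIEW diagram can't be shown inline here). `SimulatedSerialPort` is a hypothetical stand-in for a VISA serial session, not a real API: `deliver_next_chunk()` moves the next chunk from the "wire" into the receive buffer, and `read_until()` models a read with the termination character enabled and a large byte count. The message arriving in two pieces is an assumed scenario.

```python
from collections import deque


class SimulatedSerialPort:
    """Hypothetical stand-in for a VISA serial session (not a real API).

    Bytes travel in chunks: deliver_next_chunk() moves the next chunk from
    the "wire" into the receive buffer, mimicking data still arriving.
    """

    def __init__(self, chunks):
        self._wire = deque(chunks)
        self._rx = bytearray()

    def deliver_next_chunk(self):
        if not self._wire:
            return False
        self._rx += self._wire.popleft()
        return True

    def bytes_at_port(self):
        return len(self._rx)

    def read(self, count):
        """Return up to `count` bytes that are already buffered, without waiting."""
        data = bytes(self._rx[:count])
        del self._rx[:count]
        return data

    def read_until(self, term=b"\n", max_count=4096):
        """Model a read with the termination character enabled: keep waiting
        (here: pull further chunks) until `term` or `max_count` bytes arrive."""
        while True:
            idx = self._rx.find(term)
            if idx != -1:
                return self.read(min(idx + len(term), max_count))
            if len(self._rx) >= max_count:
                return self.read(max_count)
            if not self.deliver_next_chunk():
                raise TimeoutError("no termination character before the timeout")


def naive_poll(port):
    # Anti-pattern: the byte count is sampled while the message is still arriving.
    n = port.bytes_at_port()
    return port.read(n) if n else b""


def recommended_poll(port):
    # Bytes at Port only decides *whether* to read; the termination
    # character decides *how much*.
    if port.bytes_at_port() == 0:
        return b""                      # nothing there: return at once, no blocking
    return port.read_until(b"\n", max_count=4096)


for poll in (naive_poll, recommended_poll):
    port = SimulatedSerialPort([b"TEMP=2", b"3.5\n"])
    port.deliver_next_chunk()           # only the first chunk has landed when we poll
    print(poll.__name__, poll(port))
# naive_poll b'TEMP=2'
# recommended_poll b'TEMP=23.5\n'
```

Polling this way still returns immediately when the port is empty, but any read that does happen ends on a message boundary.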

I'm not sure you understand what "race condition" means.

The example demonstrates that a thing called "Bytes at Port" exists, nothing more. As a rule of thumb, one would need a shift register to gather everything that arrived at the port and scan it for whatever the stop criterion is, or simply not read at all until a certain number of bytes is available. Not any number of bytes, but a specific number, and then read exactly that many out, without any termination character. Either way, one can iterate through the loop without blocking execution, as the thread requested, and without ever getting a VISA Read timeout. I can implement my own timeouts or scan-loop stop conditions based on whatever criteria suit the application instead, since I don't have to wait out a predetermined timeout no matter what (which in my case is sometimes minutes).

And in my applications I can get hundreds of termination characters before I need to stop scanning; they are basically useless to me when working with UARTs. I also usually need to display everything that comes from a UART console for 0.5-4 min before whatever I'm scanning for arrives, as user feedback, and this approach lets me do exactly that while waiting. The "right" way to do VISA Read wouldn't.
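The accumulate-and-scan approach described above could look roughly like this in Python. `PolledPort` is a hypothetical fake port where each poll lets one scheduled chunk arrive; `wait_for_pattern` and its parameters are illustrative names, not the poster's actual VI. The buffer plays the role of the shift register, and the loop stops on a regex match, an overall deadline, or (an added assumption) a stretch with no incoming data at all.

```python
import re
import time


class PolledPort:
    """Hypothetical fake port: every bytes_at_port() call lets the next
    scheduled chunk (possibly b"") land in the receive buffer."""

    def __init__(self, arrivals):
        self._arrivals = list(arrivals)
        self._rx = bytearray()

    def bytes_at_port(self):
        if self._arrivals:
            self._rx += self._arrivals.pop(0)
        return len(self._rx)

    def read(self, count):
        data = bytes(self._rx[:count])
        del self._rx[:count]
        return data


def wait_for_pattern(port, pattern, timeout_s, idle_limit_s, poll_s=0.02):
    """Accumulate everything received until `pattern` matches.

    Returns (matched, text). Gives up after `timeout_s` overall, or sooner
    if nothing at all arrives for `idle_limit_s` (e.g. a silent DUT).
    """
    regex = re.compile(pattern)
    buf = bytearray()                        # plays the role of the shift register
    start = last_rx = time.monotonic()
    while True:
        n = port.bytes_at_port()
        if n:
            buf += port.read(n)              # drain the port so it cannot overflow
            last_rx = time.monotonic()
            text = buf.decode("utf-8", errors="replace")
            if regex.search(text):           # rescans the whole buffer each time
                return True, text
        now = time.monotonic()
        if now - start >= timeout_s or now - last_rx >= idle_limit_s:
            return False, buf.decode("utf-8", errors="replace")
        time.sleep(poll_s)                   # never blocks longer than one poll


port = PolledPort([b"bootloader v1\r\n", b"", b"Starting kernel ...\r\n", b"dut login: "])
found, log = wait_for_pattern(port, r"login:\s*$", timeout_s=5.0, idle_limit_s=1.0, poll_s=0.001)
print(found)   # True
```

Because the whole buffer is decoded and rescanned on every new chunk, the cost grows with the amount received; the returned text is what a caller would display or log.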

It really depends on the use case. For what you described, Bytes at Port would work fine, though I would probably have a separate task dedicated to the communications and simply pass data to the application as it arrives. In most cases where there is a defined protocol, or more commonly where a termination character is used, Bytes at Port should only be used to indicate that data is there, not as the input to VISA Read. In other cases, where the protocol is not well defined and the response may or may not have a termination character, I would use Bytes at Port to see if data is available and then read large chunks of data with a very short timeout. Getting a timeout then indicates the end of the data. This is not a perfect solution, but in my experience it has worked reasonably well once you find the sweet spot for the short timeout.
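The short-timeout strategy can be modelled without hardware. `simulated_read` is a hypothetical helper that mimics one read with the termination character disabled and a byte count far larger than any response; the arrival times are made-up numbers, and the timeout is treated as the total time allowed for the read. It shows why the timeout needs a "sweet spot": too short truncates the response, while any adequate value means every response costs the full timeout.

```python
def simulated_read(arrivals, max_count, timeout_s):
    """Mimic one read with the termination character disabled.

    `arrivals` lists (t_s, data): `data` lands t_s seconds after the read
    starts. The read ends when `max_count` bytes are collected or when
    `timeout_s` elapses, whichever comes first. Returns (data, timed_out).
    In a real session, bytes beyond `max_count` would stay buffered; this
    model simply drops them.
    """
    out = bytearray()
    for t_s, data in sorted(arrivals, key=lambda a: a[0]):
        if t_s > timeout_s:
            break                                   # lands too late for this read
        out += data
        if len(out) >= max_count:
            return bytes(out[:max_count]), False    # byte count satisfied first
    return bytes(out), True                         # timeout ended the read


# A response dribbling in over ~9 ms (made-up timings).
response = [(0.000, b"OK,"), (0.004, b"3,"), (0.009, b"ready")]

print(simulated_read(response, 4096, timeout_s=0.005))  # (b'OK,3,', True): too short, truncated
print(simulated_read(response, 4096, timeout_s=0.050))  # (b'OK,3,ready', True): timeout marks the end
```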

Ultimately it depends on the use case, but more often than not people misuse Bytes at Port and wind up reading partial data due to the previously mentioned race condition. That race condition occurs when the code reads Bytes at Port while the data is still arriving and uses that value as the input to VISA Read. Unless you are explicitly doing post-processing like you describe, this will lead to errors in the data.

Mark Yedinak

Certified LabVIEW Architect

LabVIEW Champion

"Does anyone know where the love of God goes when the waves turn the minutes to hours?"

Wreck of the Edmund Fitzgerald - Gordon Lightfoot

09-24-2021 04:03 PM


@johntrich1971 wrote:


I've not implemented code with UARTs, so perhaps for your application using Bytes at Port is appropriate, but you are also implementing code to alleviate the race condition. In most cases (and the original request is one of them, entirely the opposite of the problem that you described) there's no need to take multiple loops to do what can be done in a single loop. The original post indicated that the problem was that he had to wait for data to arrive, as data is only sent out sporadically. Adding the complexity of making sure that the whole message was read is overkill for this application.

There is no race condition. You can gather whatever has arrived in the input buffer, display it if needed, and in the process empty that buffer. That buffer isn't very big either; with UARTs it can actually fill up, at which point you get buffer overflow errors and lose data that no longer fits. And it doesn't block execution if you don't want it to.

If you consider adding a shift register complexity overkill, then so be it. The particular module I cut the example from looks like this (basically a blocking SubVI without a display function, waiting for a non-termination-character response, with custom error generation and an arbitrary timeout independent of the VISA settings):

09-24-2021 05:39 PM


While it works for your purposes, there are 2 "red flags" that are typically things I would work to avoid:

1. A shift register carrying growing content in a While loop. The memory space for your growing string is potentially unbounded.

With your approach of immediately reading exactly the # of bytes seen at the port, you're kinda stuck doing this accumulation because any single read might only retrieve part of the regex you're searching for.

2. The regex search keeps starting over from the beginning of the cumulative string. Fast iterations with a growing string means you're (probably) wasting a lot of CPU effort repeatedly failing to find a match in the early part of the string. One would normally want to use knowledge from a failed search to offset the starting point for the next one.

Depending on the regex you search for, you may be stuck with this as well. Some regexes don't let you rule out the need to start the search from the beginning of the cumulative string every iteration. Dunno what kind of regexes you might be using.
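The second point (resuming the search where a failed one left off) can be sketched for the simple case of a literal search string. After a failed search, a match can only begin in the last `len(needle) - 1` bytes, so the next search starts there; if the history is not needed, everything before that point can also be discarded to bound memory. `IncrementalFinder` is a hypothetical name, and this shortcut does not carry over to general regexes, as noted above.

```python
class IncrementalFinder:
    """Find a literal `needle` in a growing byte stream without rescanning.

    After a failed search, a match can only start within the last
    len(needle) - 1 bytes, so the next search resumes there. With
    keep_history=False, bytes that can no longer be part of a match are
    dropped, which bounds memory (returned offsets are then relative to
    the retained buffer).
    """

    def __init__(self, needle, keep_history=True):
        self.needle = needle
        self.keep_history = keep_history
        self.buf = bytearray()
        self.start = 0          # earliest offset where a match could still begin

    def feed(self, data):
        """Append newly read bytes; return the match offset, or -1 if none yet."""
        self.buf += data
        idx = self.buf.find(self.needle, self.start)
        if idx != -1:
            return idx
        self.start = max(self.start, len(self.buf) - len(self.needle) + 1)
        if not self.keep_history:
            del self.buf[: self.start]
            self.start = 0
        return -1


finder = IncrementalFinder(b"login:")
print(finder.feed(b"boot...lo"))   # -1 (next search resumes at offset 4)
print(finder.feed(b"gin: "))       # 7
```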

Your 'timeout' will apparently limit the time you spend iterating, which does represent *some* sort of bound on the size of the growing string. But you still don't know if the string you return to the caller is cut off at a place that makes any logical sense. So you propagate the need for the upstream caller to *also* do some kind of shift register accumulation, I would think.

These are just some of the kinds of inefficiencies and hassles that arise from *not* making use of the line termination char. Of course, if you can't depend on getting one, you *are* stuck doing things the hard way.

-Kevin P

09-24-2021 06:31 PM


Indeed, some good points. No solution is perfect; there are always advantages and disadvantages.

1. Memory. Logically, the more is buffered in the shift register, the more memory it takes, but the alternative is to consume that memory in the VISA input buffer. Same thing, except that the VISA input buffer is pretty small (at least for my purposes) and you really don't want to let it fill up, while the general memory on the computer (PXI controller) is ginormous in comparison. One of those is a MUCH lesser evil. The moment this SubVI is done (the expected string is found, it times out, or an error occurs or is generated) and it is restarted, the shift register is "emptied", so no permanent memory leak can occur. I can live with all of that. Of course, continuously concatenating strings also creates copies in memory, so it can be optimized further.

2. It is not perfectly efficient, but since there is no way to know with certainty where to cut so as to scan only a portion of the buffer in the following iterations, it is safer to scan the whole thing every time. I ended up adding a 20-50 ms wait to that loop for that reason. Again, hardly optimal, and again I can live with that. Given that this runs on a quad-core Xeon PXI controller it is hardly a consideration, but other circumstances might require further optimization. As for what the regex is: it is a general-purpose "Wait for Reply" SubVI. The "Reply" can be anything, and regex allows searching for almost anything, which is why it was chosen. It was encapsulated for that very reason and is used multiple times, waiting for very different system responses throughout the test sequence.

3. Termination chars are not an option in that application (or rather there are tens or hundreds of them, thus not applicable).

4. There is always a timeout; even if none is defined, there is a default one. Often, but not always, there are additional criteria to stop the loop before the timeout as well.

5. The string returned to the caller is either for display/logging purposes or for debugging. No additional processing is done on it beyond those purposes. Plus it makes for a really nice spot to use probes on. There is no shift register accumulation upstream.

6. I'd much rather live with the downsides of the design and still be able to wait on "anything" as a stop criterion, not just termination chars; be able to quit waiting (as opposed to having a fixed timeout that cannot be interrupted with the "other" way of doing it); never have to deal with VISA timeout errors; have the option not to block execution; and be able to display everything received up to that point, if required. I find it a valid tradeoff.

The whole point was that there are cases where reading exactly the number of bytes reported by Bytes at Port is exactly the right way to use it. When working with UARTs, reading incomplete "replies" is the least of the problems. Combating buffer overflow or framing errors is significantly more unpleasant.

09-25-2021 07:32 AM - edited 09-25-2021 07:50 AM


@Dobrinov wrote:

As a rule of thumb, one would need a shift register to gather everything that arrived at the port and scan it for whatever the stop criterion is, or simply not read at all until a certain number of bytes is available. Not any number of bytes, but a specific number, and then read exactly that many out, without any termination character. Either way, one can iterate through the loop without blocking execution, as the thread requested, and without ever getting a VISA Read timeout. I can implement my own timeouts or scan-loop stop conditions based on whatever criteria suit the application instead, since I don't have to wait out a predetermined timeout no matter what (which in my case is sometimes minutes).

But all this work is exactly what VISA Read already does for you internally, and far more efficiently and reliably than anything you can probably whip up without lots and lots of testing and refining.

There are two modes that VISA Read handles perfectly for you, and the situation you describe does not seem to fall outside of them:

1) If your protocol requires a certain number of bytes, or, as a variant, a fixed-size header that contains the exact number of variable-sized data bytes to follow, just disable the termination character mode when initializing the VISA session and read as many bytes as you want. If fewer bytes arrive within the timeout period, VISA Read returns with a timeout error and leaves the bytes in its internal buffer. No need for a shift register to maintain your own data fragments.
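A Python sketch of mode 1), assuming a hypothetical framing of a 1-byte message type followed by a 2-byte big-endian payload length. `ExactReadPort` models a session with the termination character disabled: `read(n)` returns exactly `n` bytes or raises `TimeoutError` and leaves the partial bytes buffered, as described above. These names are illustrative, not VISA functions.

```python
import struct

# Assumed framing for illustration: 1-byte message type, 2-byte big-endian length.
HEADER = struct.Struct(">BH")


class ExactReadPort:
    """Hypothetical session with the termination character disabled.

    read(n) returns exactly n bytes, or raises TimeoutError and leaves the
    partial bytes buffered for the next call (no real waiting happens here).
    """

    def __init__(self, incoming):
        self._rx = bytearray(incoming)

    def read(self, n):
        if len(self._rx) < n:
            raise TimeoutError(f"only {len(self._rx)} of {n} bytes before timeout")
        data = bytes(self._rx[:n])
        del self._rx[:n]
        return data


def read_message(port):
    # First read the fixed-size header, then exactly the advertised payload.
    msg_type, length = HEADER.unpack(port.read(HEADER.size))
    return msg_type, port.read(length)


stream = HEADER.pack(1, 5) + b"hello" + HEADER.pack(2, 3) + b"abc"
port = ExactReadPort(stream)
print(read_message(port))   # (1, b'hello')
print(read_message(port))   # (2, b'abc')
```

If the payload read times out, the header has already been consumed in this sketch, so real code would need to remember the pending length or resynchronize; that part is left out.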

2) If the amount of returned data is not known beforehand, your device had better support a designated termination character. If it doesn't, get the device programmer and flog him until he promises never to implement such a brain-damaged protocol again. Enable the corresponding termination character when initializing the session, and then pass a "number of bytes to read" to VISA Read that is guaranteed to be larger than anything you ever expect. VISA Read will return when

- there is any error in the driver

- the timeout period expires without a termination character, in which case it returns a timeout error and leaves all the bytes received so far in an internal receive buffer that is private to the VISA session

- the termination character has been received, in which case it returns all the data up to and including the termination character but nothing more, leaving any already-received bytes of the next message in the internal buffer

- the requested number of bytes has been received, in which case it returns only that many even if there is more in the buffer
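The four return conditions listed above can be modelled in a few lines. `TermCharReader` is a hypothetical Python model, not VISA itself; it assumes `\n` as the termination character and treats "not enough data yet" as an immediate timeout instead of actually waiting.

```python
class TermCharReader:
    """Hypothetical model of a read in termination-character mode (not VISA).

    `rx` is the session's private receive buffer; it survives between reads.
    """

    def __init__(self, term=b"\n"):
        self.term = term
        self.rx = bytearray()

    def receive(self, data):
        self.rx += data                       # bytes landing from the wire

    def read(self, count):
        idx = self.rx.find(self.term)
        if idx != -1 and idx + len(self.term) <= count:
            n = idx + len(self.term)          # stop right after the termination char
        elif len(self.rx) >= count:
            n = count                         # requested count reached first
        else:
            # Timeout: keep everything received so far for the next read.
            raise TimeoutError("no termination character within the timeout")
        out = bytes(self.rx[:n])
        del self.rx[:n]
        return out


r = TermCharReader()
r.receive(b"V=1.2\nV=1.3\nV=1.")   # two messages plus the start of a third
print(r.read(1024))                # b'V=1.2\n'
print(r.read(1024))                # b'V=1.3\n'
try:
    r.read(1024)
except TimeoutError:
    print(bytes(r.rx))             # b'V=1.'  (kept for the next read)
r.receive(b"4\n")
print(r.read(1024))                # b'V=1.4\n'
```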

If a device sends data only sporadically, it can be useful to employ Bytes at Serial Port, but NOT to determine how many bytes to read, only whether you should try to read at all. The actual read should happen according to the two modes mentioned above, NEVER using the number you got from Bytes at Serial Port. If you use that number anyway, you do need your own buffering scheme to detect when a full message has been read, and that is simply unnecessary extra work on your side, since VISA Read already does that work for you if you let it.

There are only really two reasons to use the actual number returned from Bytes at Serial Port:

1) You just want to implement a terminal application that writes out all the data as it is received. Here the human viewer does the protocol parsing himself.

2) You have to communicate with a brain-damaged device that uses neither fixed-size messages or headers nor any clear termination character.

Using Bytes at Serial Port for anything but case 1) will always make you implement extra code with shift registers that is difficult to debug and maintain, and it will generally make you revisit that code over and over again over the years to fix corner-case problems when the device's timing suddenly changes a little. And I know this firsthand. 25 years ago I also tried to use Bytes at Serial Port in my serial port instrument drivers, and those have always been the ones that required lots and lots of tinkering to get fully reliable device communication working. A few years later, having seen the light, I always yanked that code out when I had to revisit those projects, replaced it with a proper VISA Read, and never looked back at that part of the software after that.

09-25-2021 10:19 AM


@rolfk wrote:

Using Bytes at Serial Port for anything but case 1) will always make you implement extra code with shift registers that is difficult to debug and maintain, and it will generally make you revisit that code over and over again over the years to fix corner-case problems when the device's timing suddenly changes a little. And I know this firsthand. 25 years ago I also tried to use Bytes at Serial Port in my serial port instrument drivers, and those have always been the ones that required lots and lots of tinkering to get fully reliable device communication working. A few years later, having seen the light, I always yanked that code out when I had to revisit those projects, replaced it with a proper VISA Read, and never looked back at that part of the software after that.

Not to mention HORRIBLY inefficient. The first application I did with serial ports (~15 years ago) fell so far behind in communications that I was forced to throw out swaths of messages. Yes, I used the methods of reading the number of bytes at the port, storing them in a shift register, and doing weird search algorithms. I have since learned to let VISA do most of the hard work for me and use the messaging protocol to figure out how many bytes I should read, or just try to read a large amount of data and let the termination character end the read.

For a lot more details: VIWeek 2020/Proper way to communicate over serial

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

09-25-2021 01:29 PM


Well, the "situation" I describe falls outside the convenient case that you, and the likes of you, insist on. And I did outline it; one just has to bother reading what I wrote so far. There is no fixed number of bytes, and termination characters are useless for determining when to stop scanning a serial port. Sometimes waiting that long can mean the VISA input buffer fills up. I guess none of you have an idea what a UART is and thus assume it must be something like a protocol to communicate with, say, a programmable power supply. Which is not the case.

In my case I work with embedded systems. I am responsible for implementing the end-of-line testers for those, and more or less always they use a UART interface as a means of communication with the OS running on them. As a rule of thumb, as part of the testing process I have to boot from a certain source with a specially prepared bootloader, boot some version of Linux configured for that specific DUT, log in, run a number of prepared scripts, log their console feedback, analyze it, and determine whether everything ran properly or not, i.e. whether a test step passed or failed, and, where applicable, log the results in a TestStand protocol via string parsing. Termination characters are useless in that case, as every line of console feed is terminated. Counting the number of returned bytes is useless as well: if any error occurs, that number will change. Some scripts can run for multiple minutes, so fixed timeouts that long are unacceptable, since with a loop I can determine that a DUT is not communicating at all and interrupt the loop long before the timeout would occur. In production, wasting minutes for no good reason is a no-go; time is money. The only way is to wait until a certain string, found by regex, appears on that console, and then proceed with whatever the test specification demands.

Nobody in that field would consider a standard embedded system running Linux as "brain damaged". Me insisting on anybody changing how things are done just so I can use VISA Read with termination characters would get me laughed out of the building.

My code, even the basic version copy-pasted here, is elementary to debug and maintain - hardly anything happens there. Maybe for complete novices it might be hard, but if that sort of code scares you, don't know what to tell you. That sort of module is peanuts.

I'm not saying anybody should always use Bytes at Port; I'm just saying there are very valid reasons to use it the way I outline. Blanket statements of "never do this" are frankly pretty stupid: every tool at our disposal has its advantages, and if the pros outweigh the cons, then it is acceptable. You can't always count on a known number of returned bytes or on termination characters.

09-25-2021 02:27 PM


I have built real UARTs with discrete digital logic, probably long before you knew what a computer is, unless you were also born in the 1960s. 😉

But you talk about programming UARTs, which is of course a valid thing in the embedded world, but what does that have to do with LabVIEW and VISA? Neither works on such embedded hardware. The embedded hardware that can run LabVIEW and VISA all runs fully featured OSes, where you do not program UARTs directly unless you write a device driver for that OS, in which case you won't use VISA or LabVIEW either. They sit way above an OS device driver.

And yes, if someone programs an embedded device with a communication protocol that employs neither fixed-size messages nor a termination character, he is simply trying to make your life hard. Using either of those techniques costs nothing in terms of performance, but leaving them out makes life more complicated for everyone who has to deal with those devices. Of course, it could be on purpose, as a form of security through obscurity that makes it harder for someone to use the communication port.

09-25-2021 05:22 PM


@Dobrinov wrote:

In my case I work with embedded systems. I am responsible for implementing the end-of-line testers for those, and more or less always they use a UART interface as a means of communication with the OS running on them. As a rule of thumb, as part of the testing process I have to boot from a certain source with a specially prepared bootloader, boot some version of Linux configured for that specific DUT, log in, run a number of prepared scripts, log their console feedback, analyze it, and determine whether everything ran properly or not, i.e. whether a test step passed or failed, and, where applicable, log the results in a TestStand protocol via string parsing. Termination characters are useless in that case, as every line of console feed is terminated. Counting the number of returned bytes is useless as well: if any error occurs, that number will change. Some scripts can run for multiple minutes, so fixed timeouts that long are unacceptable, since with a loop I can determine that a DUT is not communicating at all and interrupt the loop long before the timeout would occur. In production, wasting minutes for no good reason is a no-go; time is money. The only way is to wait until a certain string, found by regex, appears on that console, and then proceed with whatever the test specification demands.

Nobody in that field would consider a standard embedded system running Linux as "brain damaged". Me insisting on anybody changing how things are done just so I can use VISA Read with termination characters would get me laughed out of the building.

Ah, a Command Line Interface, aka a Terminal. I do plenty of those. Use the termination character to read a line at a time. You can easily read multiple lines, or do some search to decide when to stop reading lines. What I like to do is have my terminal interface running in a loop all on its own. I use a queue to send commands to that loop, including "write", "stop", and "return lines that match a specific pattern". That return data is sent through another queue defined by the writer.
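A rough Python sketch of such a dedicated terminal loop, using a thread and `queue.Queue`. `FakeConsole` stands in for a port with the termination character enabled, so each `readline()` returns one line or `None` on timeout; the command tuples (`"write"`, `"match"`, `"stop"`) and `terminal_loop` are hypothetical names chosen to mirror the description, and the requester supplies the queue that receives matching lines.

```python
import queue
import re
import threading


class FakeConsole:
    """Hypothetical line-oriented port (termination character enabled):
    readline() returns one complete line, or None if none arrives in time."""

    def __init__(self, lines):
        self._lines = queue.Queue()
        for line in lines:
            self._lines.put(line)
        self.sent = []

    def readline(self, timeout_s):
        try:
            return self._lines.get(timeout=timeout_s)
        except queue.Empty:
            return None

    def write(self, text):
        self.sent.append(text)


def terminal_loop(port, commands):
    """Own the console in a dedicated loop. Commands arrive as tuples:
    ("write", text), ("match", pattern, reply_queue) or ("stop",)."""
    watchers = []                              # (compiled regex, reply queue)
    while True:
        try:
            cmd = commands.get_nowait()        # at most one command per pass
        except queue.Empty:
            cmd = None
        if cmd is not None:
            if cmd[0] == "stop":
                return
            if cmd[0] == "write":
                port.write(cmd[1])
            elif cmd[0] == "match":
                watchers.append((re.compile(cmd[1]), cmd[2]))
        line = port.readline(timeout_s=0.05)   # one terminated line at a time
        if line is None:
            continue
        for regex, reply in watchers:
            if regex.search(line):
                reply.put(line)


port = FakeConsole(["Starting kernel ...\n", "dut login: root\n", "# \n"])
commands, replies = queue.Queue(), queue.Queue()
commands.put(("match", r"login:", replies))    # register before any line is read
worker = threading.Thread(target=terminal_loop, args=(port, commands), daemon=True)
worker.start()
print(replies.get(timeout=2), end="")          # dut login: root
commands.put(("stop",))
worker.join(timeout=2)
```

Because the loop only ever hands whole lines to the pattern check, a match can never be split across two reads, and each caller waits on its own reply queue with whatever timeout suits it.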


09-25-2021 07:40 PM


In my experience, terminals always have a prompt. I read to the prompt; the prompt is my termination character. If the response has human formatting of several lines separated by prompts, I do as many VISA reads as needed. If I'm not initiating a query, then I use Bytes at Port to see if there is anything to read. If it's not zero, then I do a VISA Read.
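Reading to the prompt can be sketched the same way. `read_to_prompt` is a hypothetical Python helper that takes successive reads as byte strings and stops once the collected output ends with the prompt; the prompt `root@dut:~# ` is an assumed example. (A VISA termination character is a single byte, so a multi-character prompt like this one would need a check of this kind on top of the read.)

```python
def read_to_prompt(chunks, prompt):
    """Collect console output until it ends with `prompt`.

    `chunks` is any iterable of byte strings in arrival order (a stand-in
    for successive reads). Returns (output_without_prompt, prompt_seen).
    """
    buf = bytearray()
    for chunk in chunks:
        buf += chunk
        # The prompt only counts when it is the last thing received, i.e.
        # the shell is idle and waiting for the next command.
        if buf.endswith(prompt):
            return bytes(buf[: -len(prompt)]), True
    return bytes(buf), False


reply = [b"uname -r\r\n5.10.0\r\n", b"root@dut:~", b"# "]
print(read_to_prompt(reply, b"root@dut:~# "))
# (b'uname -r\r\n5.10.0\r\n', True)
```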

Is that what you meant by "termination character"?

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.