How can I synchronise these loops?

03-29-2018 04:53 AM

Hi all,

Right, need a bit of help and advice.

All the applications I have previously designed have had all their file I/O operations, test timing, display update rates etc based on hardware timed samples making it relatively easy to synchronise all the various operations as well as ensuring the correct sample is logged even if the host slows up.

The issue I have for this project is that the device in question is a serial-based weigh balance, which means the data cannot be hardware timed and is non-deterministic in its sampling time.

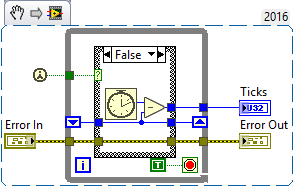

The attached picture shows part of the code. The acquisition loop simply reads the data from the balance as quickly as it possibly can (the loop iteration period is around 200-300ms) then pings that into a File I/O queue and an FGV used for the display loop.

The File I/O then logs this data if the logging interval time has elapsed (based on a software timed elapsed timer I’ve designed). Also included in the File I/O is another timer that I use for the total test elapsed time.
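A software-timed interval check of this kind tends to drift if each new interval is measured from the moment the previous one was noticed, because every late iteration pushes all later log times back. A minimal sketch of one alternative, in Python rather than LabVIEW: deadlines are computed as start + n × interval from a single monotonic clock, and the same clock supplies the total elapsed time. The class name `IntervalGate` and its methods are hypothetical and are not taken from the code in the picture.

```python
import time


class IntervalGate:
    """Decides when a fixed logging interval has elapsed, without accumulating drift."""

    def __init__(self, interval_s, clock=time.monotonic):
        self._interval = interval_s
        self._clock = clock
        self._start = clock()
        self._next_index = 1  # index of the next deadline, counted from the start

    def elapsed(self):
        """Total test time in seconds, from the same clock as the deadlines."""
        return self._clock() - self._start

    def due(self):
        """Return True at most once per interval; deadlines are start + n * interval."""
        now = self.elapsed()
        if now < self._next_index * self._interval:
            return False
        # Skip any deadlines missed while the host was busy, so a late
        # iteration never shifts the deadlines that follow it.
        self._next_index = int(now // self._interval) + 1
        return True
```

In a logging loop this would be polled on every dequeued sample, with the sample written only when `due()` returns True. Because the log deadlines and the elapsed time share one start value, they cannot move apart from each other.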

The display loop updates a numeric and Boolean every 250ms, however I’ve had to make the chart update every second as the end user wants the chart X axis in minutes. This is because the chart when used with relative time is based in seconds, therefore the rate of data into the chart must be a function of the time you want to see on the chart axis.

This is where part of the problem lies as something is causing the timing to fluctuate which means the data coming into the chart is out of synchronisation with the X axis time. So say I set the X axis to 10 minutes and I watch the chart, 10 minutes can elapse but the chart has not filled because the timing is out. The next log time is also out of sync with the total elapsed time, not massively, but again I feel there is a better way of doing this.
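The size of this effect can be estimated with a little arithmetic. The sketch below assumes the chart advances its X axis by a fixed step per point (1 s here), while points actually arrive at a slightly longer period. The 1.05 s figure is purely illustrative and is not a measurement from this system, and the function name is hypothetical.

```python
def chart_seconds_shown(wall_clock_s, actual_period_s, assumed_period_s=1.0):
    """Time span the chart displays after wall_clock_s of real time.

    The chart advances assumed_period_s per point, but a point only
    arrives every actual_period_s.
    """
    points = int(wall_clock_s // actual_period_s)
    return points * assumed_period_s


# Ten real minutes with points arriving every 1.05 s instead of every 1 s.
shown = chart_seconds_shown(600, 1.05)
shortfall = 600 - shown
```

With these illustrative numbers the chart shows 571 s after 600 s of real time, so a 10 minute axis is still about 29 s short of full.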

I need a way to somehow synchronise these loops, or at least to control the loop execution. I think the Timed Loop is the way forward, but I don’t know how to implement it correctly for this particular issue, or in general, as I’ve never used one.

Any thoughts or suggestions?

Cheers

Mitch

03-29-2018 05:29 AM

Unfortunately, with Windows you cannot count on timing. The Timed Loop won't even help. If you need deterministic timing, you need to go to an RT system.

03-29-2018 06:12 AM

As I see it, you simply have to live with the varying timing and handle it. The first thing that comes to mind is to use an XY graph, and to read the time and add it to each record when you write the log file. The timing will be just as bad, but the information will be correct.

/Y

03-29-2018 06:54 AM

I faced the same problem -- I was taking data from 24 balances that nominally made 10 weights/second. I used a two-prong approach -- when the VISA Read gave me the weight, I saved it, along with the number of milliseconds since the start of recording, to a data file. I also passed the value to a plotting routine that used the nominal 0.1 sec time step to provide me with a graph of "what was happening" in real-time. For monitoring purposes, splitting tenths of a second seemed irrelevant, but the more accurate timing information was being saved for post hoc analysis. "Having your Cake and Eating a Cookie, Too".

Bob Schor

03-29-2018 07:04 AM

Surely, though, if the Timed Loop can give iteration timing information, I can use that to adjust the next loop period to keep all the loops synchronised (very closely at least)? If the Timed Loop won’t help in this situation, why does NI support such a function for Windows?

The File I/O operations I can probably live with, but I know the end user will want to know that when it says 10 minutes, it really is 10 minutes worth of data as they are looking for a rate of change, i.e. Weight vs Time and I guarantee they will use the graph axis to approximate what’s going on.

03-29-2018 07:14 AM

The Timed Loop is really designed for LabVIEW Real-Time, where "Time" is more "sacred" and the OS is designed to stay out of the way. In my previous response, I gave you the tactic I used, namely to record the time as accurately as possible and for "real-time monitoring", i.e. as the data are coming in, to accept (and essentially ignore) the (small?) uncertainties in the actual times, assuming that these graphs are for monitoring purposes, and precision/accuracy is not a strict requirement.

If it is, you can spend extra processing time "adjusting" the plots (you'd probably need to use a Graph) to handle variable Y (data) and variable X (time) values.

Bob Schor

03-29-2018 07:35 AM

Hi Bob,

Apologies, your reply came as I was responding to the other two so I didn't see your response before I posted.

I completely agree that Timed Loops lend themselves more to RT, however there must be some benefit to using them even on Windows, otherwise why would they have bothered to include them?

So your solution, do you happen to have an example I could see?

In terms of the requirements the timing accuracy is of high importance given that the entire test is Time vs Weight so the timing needs to be the best it can within the given restrictions of software timed data acquisition. This includes the chart/graph for monitoring purposes as I need to assure the end user that the rate of change as depicted is accurate (to within a few seconds at least)

If I did need to adjust the timing/plot on the fly, what monitored value would I use to determine the offset?

03-29-2018 02:49 PM

@Mitch_Peplow wrote:

I completely agree that Timed Loops lend themselves more to RT, however there must be some benefit to using them even on Windows, otherwise why would they have bothered to include them?

It's a Structure, which LabVIEW puts in the Structure Palette. Functions can (usually) be used in both LabVIEW and LabVIEW-RT, even if one might not be appropriate. My guess is that years ago, NI put it in here, not "worrying" that someone would use it in an inappropriate spot.

So your solution, do you happen to have an example I could see?

Well, here's a VIG called "Clock". When first called, it sets the current Millisecond count in the Shift Register, and subsequent calls get "Milliseconds since first called". Hence a clock. The values come, I believe, from the CPU clock, so it should be precise, if not completely accurate (I think I have those terms right ...). That is, while any particular Clock reading might be off (a millisecond or two), there should be no "drift".
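The attached VI is not reproduced here. As a rough text-language analogue of the behaviour described (the first call stores a millisecond count, later calls return milliseconds since that first call), a Python sketch might look like the following. The name `make_clock` and the use of `time.monotonic_ns` as the counter are assumptions for illustration, not a description of what the VI itself reads.

```python
import time


def make_clock(now_ms=lambda: time.monotonic_ns() // 1_000_000):
    """Return a function giving milliseconds since its own first call."""
    first = None  # plays the role of the uninitialised shift register

    def clock():
        nonlocal first
        reading = now_ms()
        if first is None:
            first = reading  # first call stores the reference count
        return reading - first

    return clock


clock = make_clock()
t0 = clock()  # 0 on the first call
t1 = clock()  # milliseconds elapsed since the first call
```

Since every reading is a difference against one stored reference, an individual value may be off by a millisecond or so, but the error does not build up over the length of a test.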

In terms of the requirements the timing accuracy is of high importance given that the entire test is Time vs Weight so the timing needs to be the best it can within the given restrictions of software timed data acquisition. This includes the chart/graph for monitoring purposes as I need to assure the end user that the rate of change as depicted is accurate (to within a few seconds at least)

It should certainly be accurate to within a few seconds -- probably within a (very) few milliseconds.

If I did need to adjust the timing/plot on the fly, what monitored value would I use to determine the offset?

If you are talking about "saved data" (i.e. data written to a file), the pairing of "Value Read" and "Clock" should suffice and be good to within a few milliseconds of Time. If you are talking about "what a human can see during an experiment", now you are talking seconds, or several orders of magnitude lower than the precision of your data. Just remember that you don't really know the rate at which your data are arriving (unless you compute it from Clock). Should not be a problem.
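Computing the arrival rate from the recorded clock values is a short calculation. A minimal Python sketch, assuming the timestamps are milliseconds since the start of recording and are stored in arrival order (the function name is hypothetical):

```python
def mean_sample_period_ms(timestamps_ms):
    """Average time between consecutive samples, in milliseconds."""
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two timestamps")
    span = timestamps_ms[-1] - timestamps_ms[0]
    return span / (len(timestamps_ms) - 1)


# Four samples whose spacing varies between 210 ms and 270 ms.
period = mean_sample_period_ms([0, 210, 480, 750])
```

For the four example timestamps the mean period is 250 ms, even though no two consecutive samples are exactly 250 ms apart.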

Bob Schor

03-29-2018 03:23 PM

Include a timestamp generated at the same place you get your data. Send the timestamp/data pair off to your logger and graph and make the graph an x-y graph. The data points will not be evenly spaced but at least the times will be more accurate.
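A sketch of that arrangement in Python, assuming one queue per consumer and a blocking read function supplied by the caller. The names `acquire`, `drain_xy`, and `read_weight` are hypothetical, and the serial read itself is left out.

```python
import queue
import time


def acquire(read_weight, n_samples, sinks, clock=time.monotonic):
    """Read samples and send (elapsed_s, weight) pairs to every sink queue."""
    start = clock()
    for _ in range(n_samples):
        weight = read_weight()   # blocking serial read in a real system
        stamp = clock() - start  # taken immediately after the read returns
        for sink in sinks:
            sink.put((stamp, weight))


def drain_xy(q):
    """Collect queued pairs into separate x (time) and y (weight) lists."""
    xs, ys = [], []
    while True:
        try:
            t, w = q.get_nowait()
        except queue.Empty:
            return xs, ys
        xs.append(t)
        ys.append(w)
```

The logger and the display each drain their own queue, so both see exactly the same time/weight pairs, and the X values plotted are the measured times rather than an assumed fixed step.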

04-03-2018 03:24 AM

Morning chaps,

Many thanks for your advice and help, much appreciated.

I think you are correct in what you are saying, and your suggestions are as good as I'll get, so I'll try them out and see how I get on.