How accurate is the wait function when using really long waits?

01-30-2015 06:21 AM

@crossrulz wrote:

You really can't trust the waits being exact in a Windows environment. What you should really be doing is use a While loop that iterates every 100ms or so and use the system time to see how much time has passed. When the difference reaches your 30 minutes, stop the loop.

What crossrulz said.

The benefit of doing this is that you can put it in a parallel process and still carry on doing all the other things you might want to do...including interrupting your wait to stop the VI running.
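The polling approach described above is language-independent. LabVIEW is graphical, so here is a minimal Python sketch of the same idea, assuming a hypothetical helper named `wait_with_polling` and a `threading.Event` standing in for the VI's Stop button. It checks a monotonic clock roughly every 100 ms and returns early if a stop is requested; it is an illustration of the pattern, not the code anyone in this thread actually used.

```python
import threading
import time


def wait_with_polling(duration_s, stop_event, poll_interval_s=0.1):
    """Block until duration_s has elapsed or stop_event is set.

    Returns True if the full duration elapsed, False if interrupted.
    (Hypothetical helper for illustration only.)
    """
    start = time.monotonic()  # monotonic clock: not affected by wall-clock changes
    while time.monotonic() - start < duration_s:
        remaining = duration_s - (time.monotonic() - start)
        # Event.wait returns True as soon as the event is set, so a stop
        # request is noticed within roughly one poll interval.
        if stop_event.wait(min(poll_interval_s, max(remaining, 0.0))):
            return False
    return True


if __name__ == "__main__":
    stop = threading.Event()
    # Simulate a Stop button pressed after 0.3 s from another thread.
    threading.Timer(0.3, stop.set).start()
    completed = wait_with_polling(30 * 60, stop)
    print("completed" if completed else "interrupted")
```

Because each pass compares against the start time rather than adding up individual sleeps, a late wake-up on one iteration does not carry over into the total.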

CLA

01-30-2015 11:56 AM

Thanks for the tips guys. I'll try using a time stamp comparison (if I understood your tip correctly) and see if that fixes the bug. The reason I didn't do that to begin with is that I figured there would be more overhead than with a simple wait function, which would ADD time to the loop...but in this case, if I'm over by a couple percent, it's not a big deal. Much better than being under by 50% every so often!

Can anyone explain why the wait(ms) function is unreliable in Windows?

01-30-2015 12:39 PM

R.Gibson wrote:

Can anyone explain why the wait(ms) function is unreliable in Windows?

Simply put, Windows is not a deterministic OS. It cannot guarantee your timing to any real accuracy.

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

01-30-2015 01:23 PM

You can also use the Tick Count function. I do this just in case something silly like a daylight saving time change happens during a wait. You do need to watch for the tick count rolling over, though, if you use it.

01-30-2015 03:22 PM

Just use the Elapsed Time Express VI.

It's one of the good low-overhead Express VIs. If you dig into it, it's just doing what you are doing, only you don't have to worry about testing it: NI did that.

"Should be" isn't "Is" -Jay

01-31-2015 05:46 AM - edited 01-31-2015 05:57 AM

@BowenM wrote:

You can also use the Tick Count function. I do this just in case something silly like a daylight saving time change happens during a wait. You do need to watch for the tick count rolling over, though, if you use it.

The rollover is no problem at all. The output is an unsigned integer, and unsigned integer arithmetic is defined to do the right thing even if you subtract a bigger number from a smaller one (which is what happens after a rollover). The result of the subtraction is still correct. The only thing you have to watch out for is that you cannot measure intervals longer than the full range of the unsigned number you are using. In the case of the timer tick output (uInt32) this is 2^32 ms, or about 1193 hours, or 49.7 days.
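To make the rollover arithmetic concrete, here is a small Python sketch. Python integers do not wrap, so the modulo by 2^32 emulates what unsigned 32-bit subtraction does natively; the function name `elapsed_ticks_ms` and the sample tick values are made up for illustration.

```python
TICK_MODULUS = 2**32  # a uInt32 millisecond counter wraps after 2**32 ms


def elapsed_ticks_ms(start_tick, now_tick):
    """Elapsed milliseconds between two uInt32 tick readings.

    The modulo emulates unsigned 32-bit subtraction. The result is only
    valid if fewer than 2**32 ms really elapsed between the readings.
    """
    return (now_tick - start_tick) % TICK_MODULUS


# Start 1000 ms before the rollover, read again 500 ms after it.
start = TICK_MODULUS - 1000
now = 500
print(elapsed_ticks_ms(start, now))  # 1500

# Full counter range expressed in hours and in days.
print(TICK_MODULUS / 1000 / 3600)       # roughly 1193 hours
print(TICK_MODULUS / 1000 / 3600 / 24)  # roughly 49.7 days
```

In a language with real fixed-width unsigned types the plain subtraction already gives this result, which is why no special rollover handling is needed.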

The only problem with the timer tick is that it is derived from one of the crystal oscillators on the mainboard and can therefore drift out of sync with the real-time clock, which nowadays is normally synchronized to an internet time service with atomic-clock accuracy. So over longer periods there is a small deviation between the timer tick and the real-time clock in the computer. This is, however, typically a few hundred ppm at most.
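As a rough sense of scale for that drift, here is a short Python calculation. The 100 ppm and 300 ppm figures are illustrative assumptions picked from the "few hundred ppm at most" range, not measurements of any particular machine, and `worst_case_drift_s` is a hypothetical helper name.

```python
def worst_case_drift_s(interval_s, drift_ppm):
    """Worst-case timing error in seconds for a clock that is off by drift_ppm."""
    return interval_s * drift_ppm / 1_000_000


# A 30-minute wait with an assumed 100 ppm oscillator error.
print(worst_case_drift_s(30 * 60, 100))  # 0.18

# The same wait with an assumed 300 ppm error.
print(worst_case_drift_s(30 * 60, 300))  # 0.54
```

For a 30-minute dwell this is well under a second, far smaller than the "under by 50%" problem that started the thread.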

And yes, all the wait functions use the timer tick to calculate their wait time, not the real-time clock value, which has a somewhat bigger overhead to read. That overhead is not usually important, but in tight loops trying to time on milliseconds it can add up.

01-31-2015 08:20 AM

Windows keeps civil time measured in seconds elapsed since an epoch, ignoring leap seconds. The Veep's house offers a service to provide your PC with the correct civil time: NIST-Time32.exe. The USNO is an interesting thing to google.

"Should be" isn't "Is" -Jay

01-31-2015 08:58 AM

@JÞB wrote:

Just use the Elapsed Time Express VI.

It's one of the good low-overhead Express VIs. If you dig into it, it's just doing what you are doing, only you don't have to worry about testing it: NI did that.

I don't know why anyone would use a Wait (ms) primitive for delays of more than a minute or two. Timer VIs are a lot more convenient and less prone to goofy stuff happening when the time zone changes or there's a rollover.

LabVIEW versions 5.0 - 2020

“All programmers are optimists”

― Frederick P. Brooks Jr.

02-04-2015 11:38 AM

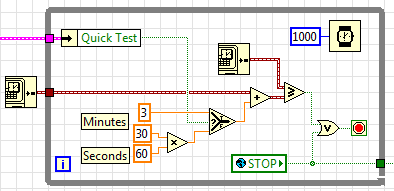

So I implemented a timestamp approach rather than a wait approach, which I agree is a more robust and accurate coding methodology for long periods. Using this approach possibly introduces a little more overhead but it is not accumulating error on each iteration of the wait(ms) function like my previous code. Here is what I ultimately ended up using:

However, I realized that this wasn't the main bug I was experiencing. There was a tiny little Boolean in an error-handling subVI that was set incorrectly, breaking out of the configure-equipment state machine rather than continuing to monitor the device until it had stabilized and soaked. This in turn caused the dwell timer to start prematurely, hence the randomly short dwell times every time there was a communication error with the device.

...now I just need to figure out why there are so many communication errors... 😉

Thanks for your help guys! 🙂
