Dynamically populate clusters from 1D array of doubles

06-25-2019 09:53 AM

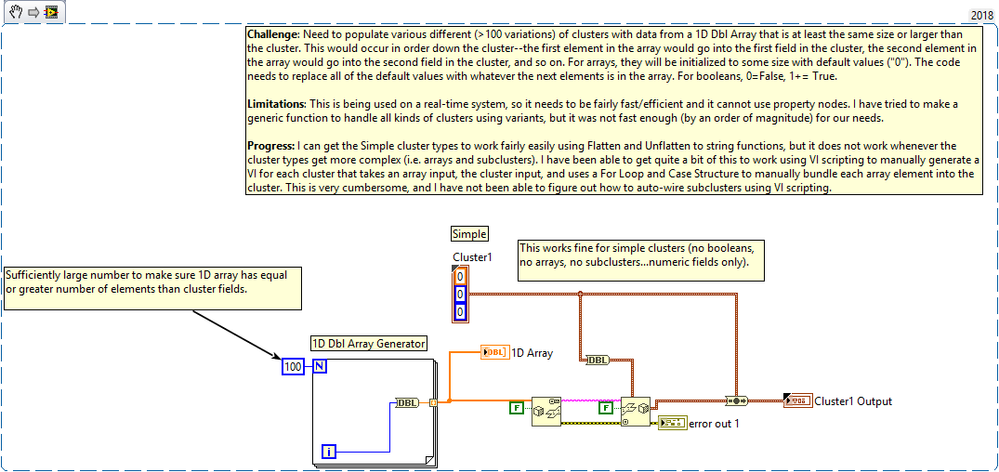

Looking for some additional assistance on this to see if I've exhausted all of my options here. I need to populate various clusters (some simple, some complex) from a sufficiently large 1D array of doubles. I have no issues getting this to work for simple clusters (rust/orange color, numeric controls only) using Flatten and Unflatten to String. I cannot figure out how to do this easily for a complex cluster (pink color, contains arrays, sub-clusters, booleans, etc.).

One solution I am close to getting working, though it is very cumbersome, uses VI scripting to automatically generate a subVI for each cluster. Each generated subVI uses a For Loop and Case Structure to bundle by name on each field. However, I am stuck trying to automatically wire sub-cluster elements together.

06-25-2019 12:06 PM

Here's my first thought, hopefully you'll get some better ones. It's a bit hand-wavy as I'm not 100% sure about feasibility. If it's no good, I've no doubt someone will let me know.

Right now you're expecting to convert into any of several distinct typedef'ed clusters. To me, this smells like a good opportunity to use classes in place of those clusters. A parent-level class would be defined with a method that accepts a double array (and perhaps start index & length) as input. That method would be configured as "children must override". Each present-day cluster becomes a class, and the respective override functions could each do the conversion internally with straightforward methods (indexing, bundling by name, etc.). This should be quite run-time efficient at the expense of a bigger compile-time codebase.
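LabVIEW is graphical, so here is a rough text-language sketch of the same idea in Python. The names `ClusterConverter`, `MotorStatus`, and `MotorStatusConverter` are invented for illustration and stand in for a parent class and one typedef'ed cluster; the abstract method plays the role of "children must override".

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Sequence


class ClusterConverter(ABC):
    """Parent class: every child must override from_doubles."""

    @abstractmethod
    def from_doubles(self, data: Sequence[float], start: int = 0):
        """Build a record from data[start:] and return (record, next_index)."""


@dataclass
class MotorStatus:
    """Hypothetical stand-in for one typedef'ed cluster."""
    speed_rpm: float
    enabled: bool
    fault_code: int


class MotorStatusConverter(ClusterConverter):
    def from_doubles(self, data, start=0):
        # Hand-written "index and bundle by name" for this one cluster.
        record = MotorStatus(
            speed_rpm=data[start],
            enabled=data[start + 1] != 0.0,
            fault_code=int(data[start + 2]),
        )
        return record, start + 3


record, next_index = MotorStatusConverter().from_doubles([1500.0, 1.0, 7.0, 99.0])
```

Each child still contains one hand-written mapping per field, which is the part that gets expensive when there are many clusters.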

I'm not an LVOOP expert or heavy user, but I *think* that you can define the child data in a way that would allow you to use "Coerce to Type" on the output object to convert it efficiently into the desired typedef'ed cluster.

-Kevin P

06-25-2019 12:17 PM - edited 06-25-2019 12:19 PM

I feel like you kind of glossed over the whole “conversion internally” part. That is the most difficult part.

I have 100s of typedefs and they are currently in a state of flux during development of the project. I want to be able to run just one or a few pieces of code that automatically update all my 1D-array-to-cluster populating functions based on a directory of all of the latest typedefs. Manually doing bundle-by-name for every field in every typedef is not an option. It is too time-consuming and too prone to user error.

(I say this as somebody who hasn’t done a whole lot with classes, so if there is some sort of simple way to implement that part in classes, I’m sorry and would love to learn it.)

06-25-2019 01:27 PM

You cannot convert a boolean to a DBL.

For inner hierarchical structures, the size is always prepended, so your "F" makes no difference. It also means that your random string contains size information that cannot correspond to the data unless you are really, really lucky!
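To illustrate the point about embedded sizes, here is a small Python sketch. It assumes a big-endian layout with a 32-bit element count in front of the inner array, which is how I understand LabVIEW's flattened format; the cluster `{DBL gain; 1D DBL array samples}` and both function names are made up for the example.

```python
import struct


def flatten_cluster(gain: float, samples: list) -> bytes:
    """Flatten a hypothetical {DBL gain; DBL[] samples} cluster, big-endian.

    The inner array carries its own 32-bit element count.
    """
    return struct.pack(f">di{len(samples)}d", gain, len(samples), *samples)


def unflatten_cluster(blob: bytes):
    """Inverse of flatten_cluster; rejects a count that cannot fit the data."""
    gain, count = struct.unpack_from(">di", blob, 0)
    needed = 12 + 8 * count  # 8-byte DBL + 4-byte count + count * 8-byte DBL
    if count < 0 or needed > len(blob):
        raise ValueError(f"length prefix {count} does not fit {len(blob)} bytes")
    samples = list(struct.unpack_from(f">{count}d", blob, 12))
    return gain, samples


# A properly flattened cluster round-trips.
good = flatten_cluster(2.5, [1.0, 2.0])
round_trip = unflatten_cluster(good)

# Raw doubles reinterpreted as a flattened cluster: the bytes that land in
# the "count" slot are just the top half of the second double.
raw = struct.pack(">3d", 1.0, 2.0, 3.0)
try:
    unflatten_cluster(raw)
    raw_error = None
except ValueError as exc:
    raw_error = str(exc)
```

In the second case the "count" is read from the upper four bytes of `2.0`, which is a very large number, so the unflatten cannot succeed.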

06-25-2019 03:04 PM

@altenbach wrote:

You cannot convert a boolean to a DBL.

For inner hierarchical structures, the size is always prepended, so your "F" makes no difference. It also means that your random string contains size information that cannot correspond to the data unless you are really, really lucky!

I know! The code I provided is just one of the many ways I have attempted this. I've also tried to do some experiments with coerce to type or typecasting with no success.

Ultimately, my objective is to populate many different clusters from 1D arrays of doubles. How do I do that easily (and programmatically), given the limitations I have provided?

06-25-2019 03:54 PM

I still don't know why it needs to be an array of DBL? Why not a binary string as input?

DBL is very dangerous because:

- flattened size is always a multiple of 8

- data could get lost if values get normalized (e.g. there is a large number of bit patterns that translate to NaN).
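A quick way to see the NaN issue outside LabVIEW is the Python sketch below. The `normalize` function is a hypothetical stand-in for any stage in the data path that replaces a NaN with a canonical one; whether a real system does this is an assumption to verify.

```python
import math
import struct


def bits_to_double(bits: int) -> float:
    """Reinterpret a 64-bit pattern as an IEEE 754 double."""
    return struct.unpack(">d", struct.pack(">Q", bits))[0]


def double_to_bits(value: float) -> int:
    """Reinterpret a double as its 64-bit pattern."""
    return struct.unpack(">Q", struct.pack(">d", value))[0]


def normalize(value: float) -> float:
    """Hypothetical stage that canonicalizes every NaN."""
    return float("nan") if math.isnan(value) else value


# Two different 64-bit payloads that both decode to NaN.
a = bits_to_double(0x7FF8000000000001)
b = bits_to_double(0x7FF80000DEADBEEF)

# After normalization the two payloads are indistinguishable.
same_after = double_to_bits(normalize(a)) == double_to_bits(normalize(b))

# Exponent all ones and a nonzero 52-bit mantissa, for either sign bit.
NAN_PATTERNS = 2 * (2**52 - 1)

# Any payload stored in doubles occupies a multiple of 8 bytes.
size_is_multiple_of_8 = len(struct.pack(">3d", 1.0, 2.0, 3.0)) % 8 == 0
```

So any binary payload smuggled through doubles has to avoid those patterns, or it risks being altered silently.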

How many different cluster types do you actually have?

How is the DBL array initially generated?

Can't you just e.g. have a first byte that specifies one of 256 cluster types, and then unflatten the remaining string to it?
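As a rough sketch of the tagged-string idea in Python: the `LAYOUTS` registry, its field names, and `unflatten_tagged` are all hypothetical, and the `struct` formats stand in for the typedef'ed clusters.

```python
import struct

# Hypothetical registry: type byte -> (field names, big-endian struct format).
LAYOUTS = {
    0: (("speed", "torque"), ">dd"),
    1: (("enabled", "channel", "setpoint"), ">?Hd"),
}


def unflatten_tagged(blob: bytes) -> dict:
    """Read the leading type byte, then unflatten the rest to that layout."""
    if not blob:
        raise ValueError("empty message")
    type_id = blob[0]
    try:
        names, fmt = LAYOUTS[type_id]
    except KeyError:
        raise ValueError(f"unknown cluster type {type_id}") from None
    body = blob[1:]
    if len(body) != struct.calcsize(fmt):
        raise ValueError(f"type {type_id} expects {struct.calcsize(fmt)} bytes")
    return dict(zip(names, struct.unpack(fmt, body)))


message = bytes([1]) + struct.pack(">?Hd", True, 3, 2.5)
decoded = unflatten_tagged(message)
```

The type byte removes the guesswork about which layout applies, and the size check catches a message that does not match its declared type.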

06-25-2019 04:01 PM - edited 06-25-2019 04:03 PM

Ultimately, my objective is to populate many different clusters from 1D arrays of doubles. How do I do that easily

I'm pretty sure you'll find there is no "easily". My earlier suggestion doesn't scale nicely at all to 100's of different typedefs that are in flux during development. I agree with you there. (I was picturing a more manageable # that were not in flux).

Any chance at automating this will likely lead you to the "Data Type Parsing" palette.

1. The first hurdle will be to navigate and identify all the possible types contained in the cluster. In order. Including expansion for possible cluster-inside-cluster-inside-cluster.

2. The second hurdle (probably the easiest one) is to code up all the cases where you convert an array element or subset into the corresponding type.

3. The third hurdle (likely the hardest one to fully debug and validate) is to handle all the degenerate cases. What if you run out of array elements before you run out of cluster elements? What if the found cluster type is ambiguous? For instance, the typedef cluster containing an array of doubles doesn't tell you how many elements might be contained by that array. How will you decide how many elements need to be copied over? And so on...
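The three hurdles above can be sketched in Python, with a nested schema standing in for what the "Data Type Parsing" palette would report. Everything here is an assumption for illustration: the schema notation (a `dict` is a cluster, `("array", element, count)` is an array, a string is a scalar type), the `SCALARS` table, and the rule that array lengths must be supplied by the schema because the typedef alone does not provide them.

```python
from typing import Sequence

# Hurdle 2: one conversion per scalar type.
SCALARS = {
    "dbl": float,
    "i32": lambda x: int(round(x)),
    "bool": lambda x: x != 0.0,
}


def populate(schema, data: Sequence[float], index: int = 0):
    """Return (value, next_index), consuming data in schema order."""
    if isinstance(schema, dict):          # hurdle 1: cluster, recurse per field
        out = {}
        for name, sub in schema.items():
            out[name], index = populate(sub, data, index)
        return out, index
    if isinstance(schema, tuple):         # ("array", element_schema, count)
        _, element, count = schema
        items = []
        for _ in range(count):
            item, index = populate(element, data, index)
            items.append(item)
        return items, index
    if index >= len(data):                # hurdle 3: ran out of array elements
        raise ValueError(f"ran out of data at element {index}")
    return SCALARS[schema](data[index]), index + 1


schema = {
    "setpoint": "dbl",
    "enabled": "bool",
    "limits": {"low": "dbl", "high": "dbl"},
    "gains": ("array", "dbl", 3),         # length fixed by the schema
}
value, used = populate(schema, [1.5, 1.0, -10.0, 10.0, 0.1, 0.2, 0.3])
```

Comparing `used` against the length of the input is a cheap check for the opposite degenerate case, where array elements are left over.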

Is there any option to transform this problem? Apparently right now you have some data source that only gives you an array of doubles, but you somehow need to reinterpret the values as though they represent distinct fields of some arbitrarily defined typedef'ed clusters. This becomes a hard problem to solve on your end. Can any of it be solved on the other end? Can any additional useful information be made available beyond this array of doubles?

(I'm guessing the answer's no or you wouldn't be tackling it this way in the first place, but figured it's worth asking.)

-Kevin P

06-25-2019 04:38 PM - edited 06-25-2019 04:42 PM

It has to be an array of doubles because that is what NI VeriStand outputs for its channel data. VeriStand (AFAIK) only works with doubles, and converts all of its data to doubles.

This is code needed for an NI VeriStand Custom Device.

I currently have 100+ Cluster typedefs. That number is changing, and the structure/shape of typedefs are currently changing.

06-25-2019 04:46 PM - edited 06-25-2019 04:48 PM

After the first post of the OP my reaction was, WTF, but after the next explanation it was like, sorry, do you want to spend hundreds of development hours on a type-string parsing and mix-and-match routine for some arbitrary data, or would you rather develop your application? The entire idea is pretty much doomed in my experience!

There is no way you can meaningfully map a flat list of values into an arbitrarily structured datatype. There is a virtually unlimited number of possible mappings for that.

You need some structure in the data storage too in order to be able to map that back. The way I usually do this is with the OpenG Ini File library. It stores an arbitrary structure in an ini file and allows you to restore it back into that structure. If you modify the structure, it can still restore the data elements whose name and hierarchical location did not change. But if you want a mutable data storage format, you will need to use LabVIEW classes anyhow. They maintain a mutation history and the necessary routines to restore a class from a flattened data stream even if the class has changed since the data stream was flattened. The code that does this is a major piece of work that would take man-years to replicate in LabVIEW in a meaningful way.

06-25-2019 08:18 PM - edited 06-25-2019 08:20 PM

@rolfk wrote:

After the first post of the OP my reaction was, WTF, but after the next explanation it was like, sorry, do you want to spend hundreds of development hours on a type-string parsing and mix-and-match routine for some arbitrary data, or would you rather develop your application? The entire idea is pretty much doomed in my experience!

Believe me...this is not something I want to be doing. I've already spent months on this problem. It is not a simple one as I'm not able to set some of the rules for the application. It's important to understand that this is for a Custom Device for NI Veristand. Veristand converts all channel data from models inside of it to doubles. That is the only datatype that currently exists in Veristand. When you read a bunch of channels at once (rather than a single channel), it gives you an array of doubles. I can control the order of that data, and add anything to it, but I cannot change it to any other datatype in Veristand. It has to be an array of doubles.

On the other side, we are using 3rd party VIs that write data out. The input field for the data is a cluster (typedef). Each of those write VIs is built specifically for each typedef. So if you have Typedef A (cluster), it generates its own read and write VIs that have that typedef/cluster as an input. It automatically generates the read/write VIs for each typedef needed, either from a single file or an entire folder/batch process. These generated VIs are made by a 3rd party and are password-protected. So between those two, I need to write code to convert an array of doubles and put that data into a typedef cluster. And not just one cluster, but many.

Other considerations: this is running on a real-time system, so property nodes are not an option. It also needs to run many of these 1D-array-to-cluster-to-write conversions in parallel at 500 Hz. We are already nearing our processing/performance limits on a high-end cRIO, so the code needs to be efficient.

It sounds like VI scripting is the way to go on this. I've got that working to the point where it automatically generates a VI for each typedef that uses a For Loop, Case Structure, and polymorphic VIs to bundle elements back into a cluster. This works for everything at the initial hierarchy level in a cluster, but I need a little more time with it to get the subclusters working.
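For anyone following along in a text language, the generate-one-converter-per-typedef approach looks roughly like the Python sketch below. It is not the scripted VI itself: the schema notation, `emit`, and `generate_converter` are all hypothetical. The point it shows is that recursing over sub-clusters at generation time leaves a flat converter with fixed indices at run time.

```python
def emit(schema, index):
    """Return (expression source, next index) for a hypothetical nested schema."""
    if isinstance(schema, dict):                      # cluster or sub-cluster
        parts = []
        for name, sub in schema.items():
            expr, index = emit(sub, index)
            parts.append(f"{name!r}: {expr}")
        return "{" + ", ".join(parts) + "}", index
    if isinstance(schema, tuple):                     # ("array", element, count)
        _, element, count = schema
        parts = []
        for _ in range(count):
            expr, index = emit(element, index)
            parts.append(expr)
        return "[" + ", ".join(parts) + "]", index
    templates = {"dbl": "d[{i}]", "i32": "int(d[{i}])", "bool": "(d[{i}] != 0.0)"}
    return templates[schema].format(i=index), index + 1


def generate_converter(name, schema):
    """Generate and compile one flat converter function for one schema."""
    expr, count = emit(schema, 0)
    source = (
        f"def {name}(d):\n"
        f"    if len(d) < {count}:\n"
        f"        raise ValueError('need {count} values')\n"
        f"    return {expr}\n"
    )
    namespace = {}
    exec(source, namespace)                           # compile the generated code
    return namespace[name], source


schema = {
    "setpoint": "dbl",
    "enabled": "bool",
    "limits": {"low": "dbl", "high": "dbl"},
    "gains": ("array", "dbl", 2),
}
to_cluster, source = generate_converter("to_cluster", schema)
result = to_cluster([1.5, 0.0, -10.0, 10.0, 0.1, 0.2])
```

Printing `source` shows a single return expression with constant indices, so no type inspection happens inside the 500 Hz loop.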