Data lost when reading DMA FIFO from FPGA.

Solved! 06-16-2020 11:21 PM

Hi everyone, I have a problem related to an FPGA DMA FIFO.

I use a cRIO 9012 with an NI 9232 C Series module to read analog input. When I read data continuously from the FPGA, it seems that I lose data for a few hundred milliseconds. I use a DMA FIFO to transfer data from the FPGA to the host PC. I use an example program from LabVIEW with small changes.

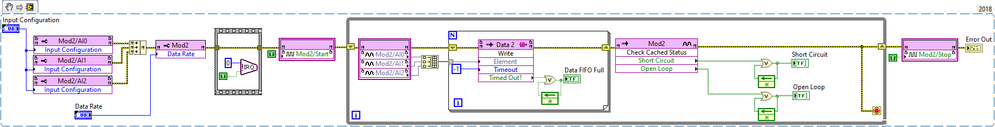

FPGA Block Diagram

Host Block Diagram

The interesting thing is that, at the moment I lose the data, my computer lags and the cRIO CPU usage also drops.

CPU Drop

Does anyone have a clue about this problem?

Thank you....

- Tags:

- DMA FIFO FPGA

06-17-2020 02:46 AM

Hi RezaAB,

A few questions (numbered for reference in a response, not importance):

1. What's the sample rate? As a follow-up, how many samples go missing in the "few hundred milliseconds"?

2. Are you sure you're losing samples (I know this is a silly question, but still...)? How did you determine this?

3. Do any of your indicators for the booleans become true? (I guess not, or you'd probably have mentioned it)

4. What's the loop update rate in the Host?

5. Did you try checking the update rate in the FPGA loop? For example, take the difference in tick counts via a feedback node and show it on an indicator, or use a separate DMA FIFO if you want to see it over time. It might be worth checking, but remove it afterwards since it will only waste a DMA channel and FPGA space.

06-18-2020 11:17 AM - edited 06-18-2020 11:21 AM

Hmmm... your FPGA FIFO write node is set to infinite time-out (-1). It shouldn't be possible for 'data fifo full' to ever go high with this configuration. While samples shouldn't be lost, you will miss out on acquiring some while the FPGA waits for the host-side to read enough items out of the buffer if it's full.

What do you have the host side loop-rate set to? You'll have to make sure the FPGA isn't sending fast enough to overflow the host side buffer. Maybe get some history on the Elements Remaining indicator (change it to a graph or something). You may also want to add one on the output of the Number of Elements To Read wire.

TJ G

06-18-2020 10:21 PM

Hi cbutcher,

Thanks for your fast response.

1. I use the maximum sample rate of the NI 9232, which is 102.4 kS/s.

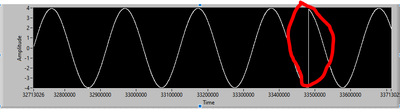

2. Yes, I am sure. I connected the 9232 to a sine-wave generator. As you can see in the red circle, the signal is not a sine wave. A lag occurred at that moment.

3. All booleans are off. However, when I used a value of 0 for the FIFO Read timeout (as in the default program), the Data FIFO Full LED indicator turned on when a lag occurred.

4. 10 ms. I have already increased and decreased this value and I still get this problem.

5. I already checked the update rate of the FPGA and it showed the correct value (T = 1/Rate).

I hope this information gives you more insight into my problem.

Reza

06-18-2020 10:48 PM

Hi T-REX$,

Thanks for your response

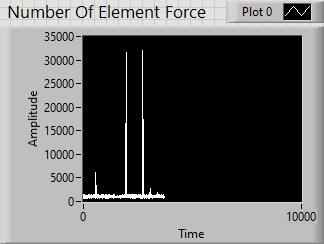

I am sure that my host reads the data from the FPGA fast enough and that overflow would not happen under normal conditions. However, just after the lag occurs, the number of Elements Remaining suddenly becomes very high.

I hope this information can help you to solve my problem.

Reza

06-18-2020 11:50 PM

@RezaAB wrote:

1. I use the maximum sample rate of the NI 9232, which is 102.4 kS/s.

2. Yes, I am sure. I connected the 9232 to a sine-wave generator. As you can see in the red circle, the signal is not a sine wave. A lag occurred at that moment.

3. All booleans are off. However, when I used a value of 0 for the FIFO Read timeout (as in the default program), the Data FIFO Full LED indicator turned on when a lag occurred.

4. 10 ms. I have already increased and decreased this value and I still get this problem.

5. I already checked the update rate of the FPGA and it showed the correct value (T = 1/Rate).

I hope this information gives you more insight into my problem.

Reza

OK, that's great. 1, 2 and 5 are all as expected, I suppose, and 4 seems like it should generally be fine (more below).

3 hints at a possible cause of your problem but doesn't immediately (at least to me) indicate a great solution.

Generally (as a result of 3+4) I'd like to suggest increasing the RT side size of the DMA FIFO using FIFO.Configure. However it looks like perhaps you're already pretty full on memory usage... 😕

Written before I saw your new response to T-REX:

The default size is either 10k, or ~16k, or twice the FPGA FIFO size (which is probably fairly small, so I'm guessing it's 10k-16k).

At ~100 kS/s/ch you fill the buffer in ~(10k / 300k) ≈ 33 ms, which would suggest a 10 ms loop rate is probably OK. However, if your RT loop is actually slower (because that's just setting the minimum time - if you have a lot of slow code in a loop it could take much longer, and I don't know how long your RT target takes to dequeue and decimate the array and so on) then you might be running into trouble. Unfortunately the problem there won't be solved by a larger buffer even if you can increase the size - you'll just have fewer interruptions with longer times between (it looks like you got ~700k samples in your reply's image, so I guess ~7 s is your current time without problems).

It seems like maybe you have ~32k elements/spaces in your buffer. So the time you have to read that out and avoid overflow (by the same logic as in the paragraph above) is around 100 ms.
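The buffer arithmetic in this reply can be written out as a small Python sketch. The three-channel count is an assumption inferred from the 300k figure above (3 × ~100 kS/s), and the function name is hypothetical; none of this is LabVIEW API.

```python
def fill_time_ms(depth_elements, rate_per_channel_hz, channels=3):
    """Time in ms for a continuously written FIFO to fill if nothing reads it.

    channels=3 is an assumption taken from the ~300k elements/s figure
    used in the thread.
    """
    total_rate_hz = rate_per_channel_hz * channels
    return 1000.0 * depth_elements / total_rate_hz


if __name__ == "__main__":
    # Candidate host-side buffer depths mentioned in the thread.
    for depth in (10_000, 16_000, 32_000):
        print(depth, "elements:", round(fill_time_ms(depth, 102_400), 1), "ms")
```

At the module's 102.4 kS/s/ch this gives roughly 33 ms for a 10k-element buffer and roughly 104 ms for 32k, which is the margin the host loop has to stay within.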

I wonder if using a Timed Loop on your RT system might give some better indication as to the problem, or at least prioritise that code and perhaps remove the issue.

I'm tempted to conclude that your 'lag' is causing the missing elements (via overflow) and not the other way around (high number of elements or some delay in acquisition causes what looks like 'lag').

Perhaps as with the FPGA loop (which you already measured) you could check the timing on the RT loop? Make sure that it is generally close to the 10ms you're expecting.

Is there anything else running on your RT system that might be causing the delay (because of some intermittent or periodic event occurring, for example?)

As another wild guess, you mentioned a sine-wave generator. Is this a discrete instrument or is it some output (perhaps using LabVIEW) programmed in software?

06-26-2020 04:08 AM - edited 06-26-2020 04:41 AM

Hi cbutcher,

Thanks for your answer. I use a PC as the host.

So your assumption is: software on the PC lags >> no data is read >> the FPGA keeps writing data >> FIFO overflow >> data missing.

I run the myDAQ from another PC, so this program is the only one I run on my PC.

FYI, I also tried running this program on another PC and ran into the same problem.

If your assumption is correct, do you have any suggestions for how to read the data from the FPGA?

I already increased the FIFO size on my PC and still got the same problem.

Reza

07-01-2020 02:57 AM

Hi cbutcher,

I have now tried it on the RT machine and the program works smoothly. I think your assumption about my problem was correct.

I still want to use a PC as the host and I don't want to lose my data when a lag occurs. Do you have any idea how to solve my problem?

Reza

07-01-2020 05:22 AM

Hi Reza,

Were you able to check the loop rate on the Host loop? Particularly on your PC system, given that on RT it apparently works well.

I think perhaps the problem you're running into might coincide with 'random' CPU usage on the PC, e.g. Windows doing something or your antivirus, or perhaps a very brief network issue/burst.

Make sure if you try profiling this that you look at all of the iteration values (using something like the way you produced the #Elements vs Time plot). It might be just a single iteration that runs 'slowly' - e.g. maybe ~300ms - that is breaking your workflow.
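As a rough illustration of that profiling advice, here is a Python sketch that scans per-iteration timestamps for the occasional slow iteration. The timestamps and the 100 ms limit are made-up example values, and `slow_iterations` is a hypothetical helper, not part of any NI tool.

```python
def slow_iterations(timestamps_ms, limit_ms):
    """Return (iteration index, duration in ms) for every loop iteration
    whose duration exceeds limit_ms.

    timestamps_ms holds one timestamp per iteration, in milliseconds.
    """
    slow = []
    for i in range(1, len(timestamps_ms)):
        duration = timestamps_ms[i] - timestamps_ms[i - 1]
        if duration > limit_ms:
            slow.append((i, duration))
    return slow


if __name__ == "__main__":
    # Hypothetical log: a 10 ms loop with one iteration that took 300 ms.
    log = [0, 10, 20, 320, 330, 340]
    print(slow_iterations(log, limit_ms=100))
```

Looking at every iteration matters because an average over the whole run would hide a single 300 ms outlier among thousands of 10 ms iterations.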

I saw you said that you already increased the PC-side DMA FIFO buffer size. How big did you make it? Can you try making it even bigger? That's all I can suggest from a 'quick fix' point of view (I suppose you could try disabling antivirus, using a less busy network if it's shared, etc, but probably these are all things you can't/don't want to do much about).
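To get a feel for how big "bigger" would need to be, here is a minimal Python simulation of a FIFO whose reader stalls for a while. It is only a sketch under assumptions: 10 ms ticks, 3 channels at 102.4 kS/s (3072 elements per tick), a reader that empties the buffer on every tick it is not stalled, and a 300 ms stall taken from the "~300 ms" guess above. Elements that do not fit are counted as lost, which also stands in for samples the FPGA never acquires while it blocks on a full FIFO.

```python
def simulate_lost(depth, elements_per_tick, n_ticks, stalled_ticks):
    """Count elements lost when a writer fills a bounded FIFO every tick and
    the reader drains it completely on every tick it is not stalled."""
    fill = 0
    lost = 0
    for tick in range(n_ticks):
        # Writer side: store what fits, count the rest as lost.
        written = min(elements_per_tick, depth - fill)
        lost += elements_per_tick - written
        fill += written
        # Reader side: drain everything unless the host is stalled.
        if tick not in stalled_ticks:
            fill = 0
    return lost


if __name__ == "__main__":
    per_tick = 3072                # 3 ch * 102.4 kS/s * 10 ms (assumed)
    stall = set(range(100, 130))   # hypothetical 300 ms host stall
    for depth in (32_768, 131_072):
        print(depth, "->", simulate_lost(depth, per_tick, 200, stall), "lost")
```

Under these assumptions the buffer has to hold the whole stall plus one more tick of data (31 × 3072 = 95,232 elements), so a ~32k buffer loses data while a 128k buffer rides through the same stall.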

I think to reliably resolve this problem, you should use the RT system to read the DMA FIFO and then have the RT system use something like a Network Stream to send the data to the PC - this will allow for more variability in the connection to the PC and the responsiveness of the PC without causing everything to fall apart.

It does require a bit more programming, but if you already tested your system with the FIFO being read on RT, I think you're most of the way there.

For reference, I use a very similar kind of strategy with a cRIO-9045 and SPI communication on FPGA that produces essentially 18-bit values at ~100kHz/ch and up to 8 channels (converted to DBL (even though SGL might be enough... hmm...) on the RT system, and then put into arrays for easier PC handling and communication).

Then I use some wrappers around a Network Stream connection to send those blocks of data (so perhaps 8 arrays of say 5000 points at 20 blocks/second) to the PC for logging, processing, whatever.

I avoid making the blocks for communication too small (causes lots of overhead for the network because I'm not using exactly a Network Stream, but actually an object flattened to string over a Stream) or too big (requires a large chunk of memory on the RT system).
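For a sense of scale, the block sizes mentioned above can be turned into bytes with a short Python sketch. It assumes 8 bytes per DBL and 4 bytes per SGL sample and ignores any overhead from flattening the object to a string; `stream_load` is a hypothetical helper name.

```python
BYTES_PER_SAMPLE = {"DBL": 8, "SGL": 4}  # double vs. single precision floats


def stream_load(channels, points_per_block, blocks_per_s, dtype="DBL"):
    """Return (payload bytes per block, payload bytes per second).

    Only raw sample data is counted; serialization overhead is ignored.
    """
    block_bytes = channels * points_per_block * BYTES_PER_SAMPLE[dtype]
    return block_bytes, block_bytes * blocks_per_s


if __name__ == "__main__":
    for dtype in ("DBL", "SGL"):
        block, per_second = stream_load(8, 5000, 20, dtype)
        print(dtype, block, "bytes/block,", per_second, "bytes/s")
```

With 8 channels of 5000 points at 20 blocks per second, that is 320,000 bytes per block and 6.4 MB/s as DBL, or half of that as SGL.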

Once I've sent them, I just drop them on the RT (so if there is no PC connected, they're immediately dropped).

This makes it easier for me to manage CPU/RAM usage, since there's no disk I/O or similar on the RT side, but it might not work for you depending on your needs. For example, if I connect a PC to the cRIO, I can't see what happened in the past, only in the time after the connection is made (because previous data was already discarded).

07-08-2020 01:09 AM

Hi cbutcher,

I really appreciate your answer. It was really helpful.

Right now, I am trying your strategy. I think it is the best way to solve my problem because I can't control the processes running on my PC.