
Data and Memory Management over Long Periods of Time

10-08-2020 07:42 AM


Hi.

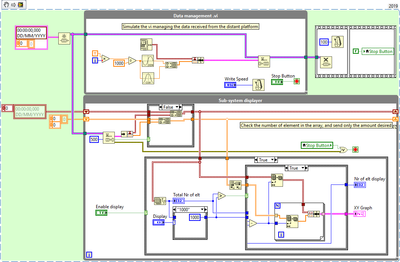

I'm developing an application that communicates with a remote platform, from which I monitor various data (its battery state, for example).

The monitoring has to be autonomous and continuous: as soon as the application runs, monitoring must start and continue until the application stops.

The battery state graph is displayed on user request, and the idea is to be able to display all data since app start, only the past hour, or only the past half-hour.

What I am doing for now is building the data into an XY graph, sending it as a lossy element in a fixed-size queue, and in my "Battery Management.VI" I dequeue and display the graph (or part of it).

In the attached VI, I give a small-scale example to illustrate what I mean. The Boolean "Enable display" represents the opening of another VI.

This works fine, but the application could run for 10-15 hours (or even longer if the user leaves it open over the weekend), so I need to think about memory management, which I have never really had to do before.

The thing is, the battery state data doesn't amount to much (compared to my example): only 5 numbers to store every 5 seconds. But over 10 hours, and with other data, I'm afraid the computer will just crash at some point.
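To put the concern in numbers, here is a rough estimate written in Python (LabVIEW code is graphical, so Python is used for illustration). It assumes each of the 5 numbers is stored as an 8-byte double and ignores array headers and any graph copies, so the real footprint will be somewhat larger:

```python
# Rough estimate of the raw storage needed for the battery history.
# Assumption: each value is an 8-byte double; overhead is ignored.
SAMPLE_PERIOD_S = 5      # one sample every 5 seconds (from the post)
VALUES_PER_SAMPLE = 5    # 5 numbers per sample (from the post)
BYTES_PER_VALUE = 8      # double precision

def history_bytes(hours: float) -> int:
    """Bytes needed to hold `hours` of samples at the rates above."""
    samples = int(hours * 3600 / SAMPLE_PERIOD_S)
    return samples * VALUES_PER_SAMPLE * BYTES_PER_VALUE

for hours in (10, 15, 64):  # 64 h is roughly a weekend
    print(f"{hours:>3} h: {history_bytes(hours) / 1024:.0f} KiB")
# prints:
#  10 h: 281 KiB
#  15 h: 422 KiB
#  64 h: 1800 KiB
```

Under these assumptions even a weekend of raw values stays in the low-megabyte range, which suggests that memory growth in the hundreds of MB would come from how the data is copied rather than from the data itself.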

I was thinking of using TDMS parallel read & write, but I doubt that it would solve my issue, since once the file is open it is loaded into memory anyway, right?

If you could give me any advice or point me toward some tutorials, that'd be nice.

Thanks in advance.

Best,

Vinny

10-08-2020 08:12 AM


There is no point in queuing all the data arrays, this way you are unnecessarily duplicating your data. Just queue the last acquired values in the acquisition loop, then build the arrays once and store them in a shift register in the display loop.
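A minimal Python sketch of that pattern, using `queue.Queue` as a stand-in for a LabVIEW queue and a plain list as a stand-in for the display loop's shift register. The readings are hypothetical fake data:

```python
import queue
import threading

def producer(q: queue.Queue, n_samples: int) -> None:
    """Enqueue only the latest reading each cycle, never the accumulated history."""
    for i in range(n_samples):
        reading = (i * 5.0, 100.0 - 0.1 * i)  # hypothetical (time_s, battery_%) pair
        q.put(reading)
    q.put(None)  # sentinel telling the consumer to stop

def consumer(q: queue.Queue, history: list) -> None:
    """Accumulate the history in one place only (the shift-register role)."""
    while True:
        reading = q.get()
        if reading is None:
            break
        history.append(reading)

q = queue.Queue()  # unbounded: no reading is ever dropped
history = []
t_cons = threading.Thread(target=consumer, args=(q, history))
t_prod = threading.Thread(target=producer, args=(q, 100))
t_cons.start()
t_prod.start()
t_prod.join()
t_cons.join()
print(len(history))  # 100
```

Each reading is copied into the queue once and appended to the history once, so there is a single growing array instead of one per enqueued element.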

-------------------

LV 7.1, 2011, 2017, 2019, 2021

10-08-2020 08:54 AM


Saying the same thing as pincpanter in different words.

Your feedback nodes are accumulating a potentially infinite history of all your data. Then you keep re-enqueuing the entirety of this ever-growing cumulative history. This is the redundancy he's talking about.
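The cost of re-enqueuing the whole history can be counted directly. This Python sketch (the function name is hypothetical) counts how many samples pass through the queue after n acquisitions: sending the full history every time copies 1 + 2 + ... + n = n(n+1)/2 samples, versus n when only the latest value is sent. It counts copies made over time, not memory held at one instant:

```python
def elements_enqueued(n_ticks: int, resend_history: bool) -> int:
    """Total number of samples pushed through the queue after n_ticks.

    resend_history=True models re-enqueuing the whole accumulated array
    every tick (1 + 2 + ... + n); False models sending only the latest value.
    """
    if resend_history:
        return n_ticks * (n_ticks + 1) // 2
    return n_ticks

ticks = 10 * 3600 // 5  # 10 hours at one sample every 5 s
print(elements_enqueued(ticks, False))  # 7200
print(elements_enqueued(ticks, True))   # 25923600
```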

The data producer should not be accumulating any history. It should just enqueue present values of data. The consumer is where you may want a mechanism to accumulate the history. That could be a feedback node, shift register, or some variety of queue.

FWIW, I wouldn't make the queue between producer and consumer inherently lossy. I would assume that as your consumer code develops it will need to include logging to file and you shouldn't take the risk of lossy data delivery from the producer.

You *can* use a fixed-size queue to accumulate history, but it should be a separate queue residing only in the consumer. Then you'd preview data from it to get a copy without emptying the queue of that history.
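One way to sketch that consumer-only history in Python: `collections.deque` with `maxlen` plays the role of the fixed-size lossy queue, and copying it to a list plays the role of previewing its contents without emptying it. `HISTORY_LEN` (one hour at one sample every 5 s) is a hypothetical choice; the transport queue from the producer would stay unbounded and non-lossy as recommended above:

```python
from collections import deque

HISTORY_LEN = 3600 // 5  # hypothetical: one hour at one sample every 5 s

history = deque(maxlen=HISTORY_LEN)  # oldest entries fall off automatically

def on_new_reading(reading) -> None:
    """Called by the consumer for each reading taken from the transport queue."""
    history.append(reading)

def preview() -> list:
    """Return a copy of the history without removing anything from it."""
    return list(history)

for i in range(1000):
    on_new_reading(i)
snapshot = preview()
print(len(snapshot), snapshot[0], snapshot[-1])  # 720 280 999
```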

It's probably not the most CPU- or memory-efficient approach, but it probably will be more compact on the block diagram. I've been known to use this method when I can afford the execution-time inefficiency. Your app could accommodate it too due to your very, very low data rate.

-Kevin P

10-08-2020 09:09 AM


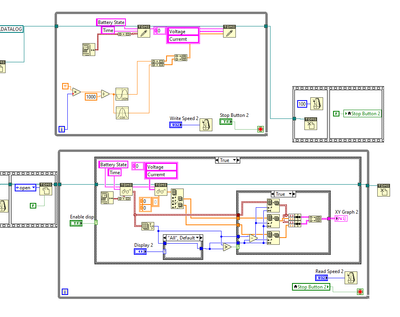

Here is what I would do, basically, although other similar schemes can be applied.

This way, you only have one copy of the arrays in the shift register (feedback nodes like yours are OK too, of course), plus one more (possibly partial) copy in the graph itself. You don't need a read-speed setting; the pace is dictated by the data-producer loop.
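Selecting the "past hour" or "past half-hour" portion for the graph only needs an index into the stored arrays. A Python sketch, assuming timestamps in seconds stored in acquisition (ascending) order; the data and function name are hypothetical:

```python
from bisect import bisect_left

def last_window(times, values, window_s):
    """Return the (times, values) samples from the last window_s seconds.

    Assumes times is sorted ascending (true when samples are appended in order).
    """
    if not times:
        return [], []
    start = bisect_left(times, times[-1] - window_s)
    return times[start:], values[start:]

times = [i * 5.0 for i in range(2000)]            # hypothetical timestamps, 5 s apart
values = [100.0 - 0.01 * i for i in range(2000)]  # hypothetical battery values
t_hour, v_hour = last_window(times, values, 3600)
t_half, v_half = last_window(times, values, 1800)
print(len(t_hour), len(t_half))  # 721 361
```

Only the slice is handed to the graph, so the full arrays exist once and the displayed part is the "possibly partial" extra copy.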


10-08-2020 09:14 AM


Hi, thanks for your replies.

To be more precise, I technically have another queue before those two: the communication one.

That way I receive data from the platform, process it (convert the raw bits into the appropriate numbers) in my data management loop, and then eventually display it to the user. I also realized that it kind of makes sense to log this data as well.

@Kevin_Price wrote:

Your feedback nodes are accumulating a potentially infinite history of all your data. Then you keep re-enqueuing the entirety of this ever-growing cumulative history. This is the redundancy he's talking about.

Fair enough. Does that mean I would need to store that data constantly in my "Battery Management" VI and simply open its front panel only on user request?

@Kevin_Price wrote:

The data producer should not be accumulating any history. It should just enqueue present values of data. The consumer is where you may want a mechanism to accumulate the history. That could be a feedback node, shift register, or some variety of queue.


OK, but how does that solve the problem of potentially infinitely growing data? I suppose I will have to do something about it somewhere myself anyway (i.e. handle it programmatically in case the user never closes the app)?

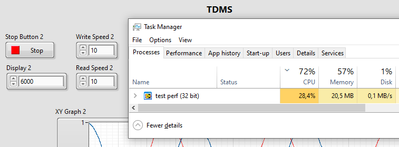

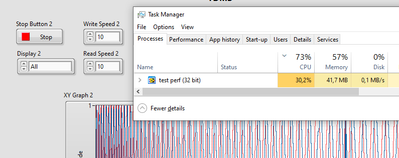

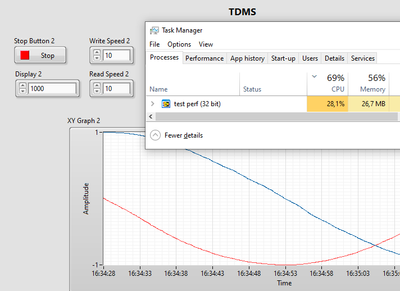

I'm currently running the same test as before but using TDMS read and write, and it seems far better in terms of memory usage, probably because I'm no longer doing the redundancy you were talking about.

With the feedback node + queuing in the producer, memory usage was at about 600 MB after 30 minutes.

With only TDMS write/read in parallel, I'm currently at only 84 MB (it has been running for only 15 minutes).

It's ugly, but it's just a quick test ^^

10-08-2020 09:29 AM


OK, but how does that solve the problem of potentially infinitely growing data? I suppose I will have to do something about it somewhere myself anyway (i.e. handle it programmatically in case the user never closes the app)?

There are various ways. Set a "maximum" number of samples. Then you can implement circular buffers of that size (memory buffers where you overwrite the oldest data once the maximum size is reached), a solution similar to the lossy queue.
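A minimal circular buffer in Python, as a sketch of the idea rather than a LabVIEW implementation: storage is allocated once, a write index wraps around, and reads return the samples oldest first. The class name and capacity are hypothetical:

```python
class CircularBuffer:
    """Fixed-capacity buffer that overwrites the oldest sample once full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._data = [0.0] * capacity  # preallocated once, never grows
        self._capacity = capacity
        self._next = 0   # index where the next sample will be written
        self._count = 0  # number of valid samples (<= capacity)

    def append(self, value: float) -> None:
        self._data[self._next] = value
        self._next = (self._next + 1) % self._capacity
        self._count = min(self._count + 1, self._capacity)

    def to_list(self) -> list:
        """Return the samples in chronological order (oldest first)."""
        if self._count < self._capacity:
            return self._data[:self._count]
        return self._data[self._next:] + self._data[:self._next]

buf = CircularBuffer(4)
for v in range(1, 7):
    buf.append(float(v))
print(buf.to_list())  # [3.0, 4.0, 5.0, 6.0]
```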

Another, looser technique is to throw away old data from time to time. For example, once you have 1.5 times the "maximum" number of samples, discard the first third of the data.
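The "trim occasionally" variant, sketched in Python (the function name is hypothetical): append freely, and when the list reaches 1.5 times the maximum, delete the oldest third so it drops back to the maximum:

```python
def append_with_trim(history: list, value, max_samples: int) -> None:
    """Append, and once the list reaches 1.5 * max_samples, drop the oldest third.

    After a trim the list is back to max_samples, so memory use stays between
    max_samples and 1.5 * max_samples instead of growing forever.
    """
    history.append(value)
    limit = (3 * max_samples) // 2
    if len(history) >= limit:
        del history[: len(history) - max_samples]

history = []
for i in range(1500):
    append_with_trim(history, i, 1000)
print(len(history), history[0])  # 1000 500
```

The deletion is comparatively expensive, but it only happens once every `max_samples / 2` appends, which is the trade-off that makes this looser than a true circular buffer.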


10-08-2020 09:33 AM - edited 10-08-2020 09:37 AM


Ah I see!

That's a much nicer way to present it than what I do indeed.

I'm also still struggling with the data manipulation needed to display data in XY graphs... Arrays of clusters of arrays of clusters are starting to get to my brain ^^

I'll see how this one performs over time compared to logging and reading data in and out of a TDMS file.

For now, the latter seems to be doing really well: if I don't show the full data, memory usage stays rather low.

10-08-2020 02:05 PM


@VinnyAstro wrote:

OK, but how does that solve the problem of potentially infinitely growing data? I suppose I will have to do something about it somewhere myself anyway (i.e. handle it programmatically in case the user never closes the app)?

Another option, if you only need to display the "data since app start", would be to periodically decimate the data. Since the display will be limited by resolution, as long as you have more data points than pixels, you don't need to retain *all* the data.

I would imagine this being held as two separate data sets - one for the past hour (and past half hour), and one for "all time".

Then, any time your "all time" data set grows larger than you like, remove every other data point (and from then on, only append every other data point to this data set).

If the "all time" data set again grows too large, do the process again (now only keeping every 4th data point). Then 8th, 16th, etc.

If the user ever wants to see the information for all time, just display the decimated array. It will show the same detail the user would otherwise visually have, but it will never exceed the amount of memory you prescribe.
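A Python sketch of this decimation scheme, assuming each stored point carries its own timestamp so the XY graph still places points correctly after decimation. The class name and the `max_points` cap are hypothetical:

```python
class DecimatingHistory:
    """'All time' history whose memory use is capped at max_points.

    Whenever the stored list would exceed max_points, every other stored point
    is dropped and the input stride doubles (keep every 2nd, 4th, 8th, ... sample).
    """

    def __init__(self, max_points: int):
        if max_points < 2:
            raise ValueError("max_points must be at least 2")
        self.max_points = max_points
        self.stride = 1     # keep one of every `stride` incoming samples
        self._skipped = 0   # incoming samples seen since the last kept one
        self.points = []

    def append(self, point) -> None:
        self._skipped += 1
        if self._skipped < self.stride:
            return                          # not this sample's turn to be kept
        self._skipped = 0
        self.points.append(point)
        if len(self.points) > self.max_points:
            self.points = self.points[::2]  # drop every other stored point
            self.stride *= 2                # and keep half as many from now on

h = DecimatingHistory(max_points=100)
for i in range(10_000):
    h.append(i)  # i stands in for a (timestamp, value) pair
print(len(h.points), h.stride)  # 79 128
```

However long the app runs, `h.points` never holds more than `max_points` entries; only the spacing between them grows.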

Also, if you are worried about a file being too large in memory but still need to save all of the detailed information, save the data off to time-stamped files for later use.

Then there would just be one file for each day (or hour, or other timespan) that contains all the detailed data for that time period.
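A sketch of the one-file-per-day idea in Python. It writes CSV rather than TDMS purely for illustration, and the `battery_YYYY-MM-DD.csv` naming, the directory argument and the sample values are all hypothetical. Each reading is appended and the file closed again, so nothing from the file is kept in memory:

```python
import csv
import os
import tempfile
from datetime import datetime

def log_reading(directory: str, when: datetime, values) -> str:
    """Append one reading to a file named after its day, e.g. battery_2020-10-08.csv.

    A new file starts automatically at midnight, so no single file grows without bound.
    Returns the path written to.
    """
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, f"battery_{when:%Y-%m-%d}.csv")
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow([when.isoformat(), *values])
    return path

with tempfile.TemporaryDirectory() as d:
    p1 = log_reading(d, datetime(2020, 10, 8, 23, 59, 55), [12.4, 87.0])
    p2 = log_reading(d, datetime(2020, 10, 9, 0, 0, 0), [12.4, 86.9])
    files = sorted(os.listdir(d))
print(files)  # ['battery_2020-10-08.csv', 'battery_2020-10-09.csv']
```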

-joeorbob

10-09-2020 07:13 AM


So it would actually be useful, for us or for our users, to be able to store some data. Not necessarily all the time, but at least during an active test.

I'm thinking of implementing a Record action, and if not enabled then nothing is stored.
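Such a Record action could be as simple as a flag that gates storage. A Python sketch with a hypothetical `Recorder` class; only storage is gated here, and the live display would be fed separately:

```python
class Recorder:
    """Stores readings only while recording is enabled (hypothetical 'Record' action)."""

    def __init__(self):
        self.recording = False
        self.records = []

    def start(self) -> None:
        self.recording = True

    def stop(self) -> None:
        self.recording = False

    def on_reading(self, reading) -> None:
        # Storage is skipped entirely when recording is off.
        if self.recording:
            self.records.append(reading)

rec = Recorder()
rec.on_reading(1)   # ignored: not recording yet
rec.start()
rec.on_reading(2)
rec.on_reading(3)
rec.stop()
rec.on_reading(4)   # ignored again
print(rec.records)  # [2, 3]
```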