
Converting a string representing hex into a string of the corresponding ascii characters

07-23-2009 01:14 PM


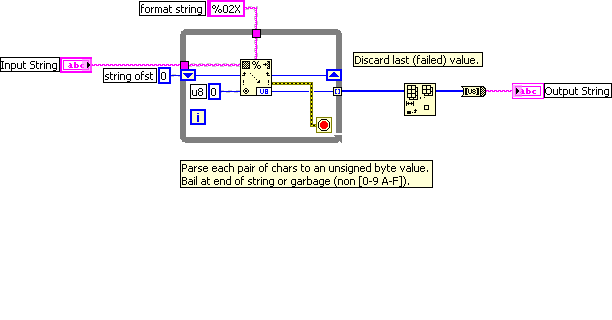

So far, none of the posted answers has (to my eyes) fulfilled the original poster's request. DanB83 simply said he had a string of hex values, and he wanted a string of the ASCII characters "that those values represent". The only assumption I made, based on the sample input and output data, is that each pair of characters in his input string is the hex representation of one character in the output.
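To make that assumption concrete outside LabVIEW, here is a minimal Python sketch (the solutions in this thread are LabVIEW block diagrams, which can't be shown as text). The function name `hex_to_ascii` and the sample string `"48656C6C6F"` are hypothetical; the OP's actual sample data is not reproduced here. The sketch rejects odd-length input and non-hex digits, then converts each pair of digits into one character.

```python
import string

# Characters accepted as hex digits: 0-9, a-f, A-F.
HEX_DIGITS = set(string.hexdigits)


def hex_to_ascii(hex_str: str) -> str:
    """Treat each consecutive pair of hex digits as one character code (hypothetical helper)."""
    if len(hex_str) % 2 != 0:
        raise ValueError("hex string must contain an even number of digits")
    if any(ch not in HEX_DIGITS for ch in hex_str):
        raise ValueError("hex string contains a non-hex character")
    chars = []
    # Step through the input two digits at a time.
    for i in range(0, len(hex_str), 2):
        code = int(hex_str[i:i + 2], 16)  # e.g. "48" -> 72
        chars.append(chr(code))           # 72 -> "H"
    return "".join(chars)


print(hex_to_ascii("48656C6C6F"))  # prints Hello
```

Python's standard library also offers `bytes.fromhex(...)` followed by `.decode("ascii")`, which performs the same pairwise conversion in one call.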

And in the other examples, where did those input arrays of strings come from? They were entered in LabVIEW's hex display mode, which is not the same thing.

So, here's my submission; feel free to critique it. I did verify that it produces the output the OP used in his example:

Sr. Test Engineer

Abbott Labs

(lapsed) Certified LabVIEW Developer

07-23-2009 01:20 PM


David,

Before things got off track, GerdW posted a very nice solution, hex2hex.vi. It looks a bit like yours. Here's a .PNG:

07-23-2009 01:58 PM


Oops, my mistake. I did download GerdW's second posting, but I missed his first one. And yes, his solution looks a bit like mine, though converting the input string into an intermediate array of strings seems less efficient.
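The efficiency point can be illustrated with a rough Python analogy. The sketch below, with hypothetical function names, contrasts two structures: one that first builds an intermediate list of two-character substrings and then converts them (assumed to resemble the array-of-strings approach; GerdW's actual VI is not reproduced here), and one that converts each pair as it is sliced. It assumes well-formed, even-length input and says nothing about actual LabVIEW performance; both return the same result.

```python
def via_intermediate_array(hex_str: str) -> str:
    # Pass 1: split into a list of two-character substrings,
    # e.g. "4869" -> ["48", "69"].
    pairs = [hex_str[i:i + 2] for i in range(0, len(hex_str), 2)]
    # Pass 2: convert each substring to its character.
    return "".join(chr(int(p, 16)) for p in pairs)


def via_direct_scan(hex_str: str) -> str:
    # Single pass: convert each pair as it is sliced, without first
    # materializing a list of two-character substrings.
    return "".join(
        chr(int(hex_str[i:i + 2], 16)) for i in range(0, len(hex_str), 2)
    )


print(via_intermediate_array("4869"), via_direct_scan("4869"))  # prints Hi Hi
```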

I always prefer Scan From String over the type-specific conversion primitives on the String/Number Conversion palette. I need those error in/out terminals, and I think the C-style format specifier documents the conversion better visually. The same goes for Format Into String. But that's just my preference, and the other primitives do have polymorphic terminals, which could be an advantage in the right circumstances.
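Format Into String is the natural tool for the reverse direction, and Python's printf-style `%` operator accepts the same kind of C-style specifier. As a minimal sketch (the function name `ascii_to_hex` is hypothetical, and ASCII input is assumed), `%02X` turns each character code into exactly two uppercase hex digits, so the result round-trips through the standard `bytes.fromhex`.

```python
def ascii_to_hex(text: str) -> str:
    """Encode ASCII text as a string of two-digit uppercase hex codes."""
    parts = []
    for ch in text:
        code = ord(ch)
        if code > 0x7F:
            # Not ASCII; codes above 0xFF would also need more than
            # two hex digits, breaking the one-pair-per-character format.
            raise ValueError(f"non-ASCII character: {ch!r}")
        # "%02X": zero-pad to width 2, uppercase hexadecimal.
        parts.append("%02X" % code)
    return "".join(parts)


encoded = ascii_to_hex("Hello")
print(encoded)                                 # prints 48656C6C6F
print(bytes.fromhex(encoded).decode("ascii"))  # prints Hello
```

The format string states the intended width and radix in one place, which is the readability argument made above for format specifiers over type-specific conversions.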

Sorry for the rambling; I really don't mean to come across as grumpy or picky.

Dave



![hex2hex[1]_BD.png](http://forums.ni.com/t5/image/serverpage/image-id/2076i63A784CAE9718487/image-size/original?v=mpbl-1&px=-1)