Concurrent access to TDMS file with interleaved data and only a single header (no file fragmentation)

Solved! 06-20-2018 08:39 AM

@Gabriel_H wrote:

@drjdpowell wrote:

wiebe@CARYA wrote:

What I don't get is why you'd keep the data in memory, and write it TDMS, and then read it from TDMS? Makes more sense to me to let all the "file creator actors" read from memory.

I think the OP is planning on NOT keeping data in memory, but instead writing to file from one component and viewing it with another. That's a good architecture.

The measurement files may have 100 to 1000 channels, but only 2 to 5 will ever be viewed during testing (while the files are open and being written) to produce basic plots to visualise how the testing is progressing. I didn't want to have a duplicate set of data sitting in memory and also on disk, when the copy in memory will only ever have a small portion used. That solution may not scale well when the data set becomes large. Keeping it only in memory would mean that application crashes or power failures could cause all data to be lost. Hence writing it to disk immediately then viewing it back appears to be the safest approach for keeping the data intact and minimising memory usage.

A past project I developed used advanced TDMS functions (synchronous write) for a 24/7 data logger, which could produce several files per day, each 1 to 10 GB (TBs of data per year). The advanced TDMS functions worked well to reimplement the "one time header" feature, allowing a scalable solution for recording huge quantities of interleaved data. That part of TDMS works very well.

I was looking for a similar solution, but with concurrent reading. That's where TDMS falls apart as a scalable solution (i.e. standard TDMS fragmentation gets out of control, causing 6x larger files full of metadata describing the scattered file structure). I'm not sure if there are any other file types that would also work well and scale well, because the data needs to be appropriately ordered on disk to avoid fragmentation when a file is written over a long time. It's easy to solve once a file is complete: I can defragment a TDMS file, or if the data is known (final length of channels) I can write it efficiently to something like HDF5.

My summary is that there isn't an easy, scalable solution for this use case. I've experimented with a lot of variations, but the only thing that looks like it might work would be advanced TDMS reads (in a number of smaller blocks) coupled with knowledge of the file structure (groups, channels, data types per channel). It looks like I'd need to wrap the TDMS functions inside a class that stores a duplicate of the file structure and uses that during reads to deinterleave and correctly type cast the TDMS advanced read output. It looks a lot like reinventing the wheel, and not worth my time considering the TDMS API is meant to do that already.

I'd really like to see NI make an example of TDMS advanced reads of interleaved data from multiple channels of mixed types. So far the only LabVIEW examples I've seen do this with the same channel types.

You probably need some mixture between memory and TDMS. Use TDMS for accessing older data, written nicely in chunks. So when writing those chunks, TDMS read won't interfere with TDMS write. During reading older chunks, you might need to stall the writing, so the current chunks don't get fragmented.

To access data in a chunk that's being written, read the data from a buffer.

Not too hard, but I don't think you will get it done in a one liner...

06-20-2018 05:37 PM

I'm going to stick with the standard TDMS API for the time being. It does what is required (per the included LabVIEW example and attached VI); it's just not very good at structuring the file (the "one time header" feature doesn't work properly, as confirmed by the older posts I linked to).

Regarding one liners: true. It's effectively a "one liner" to use the standard TDMS API, but to get past the shortcomings of the standard write is beyond a simple solution.

wiebe@CARYA wrote:

@Gabriel_H You probably need some mixture between memory and TDMS. Use TDMS for accessing older data, written nicely in chunks. So when writing those chunks, TDMS read won't interfere with TDMS write. During reading older chunks, you might need to stall the writing, so the current chunks don't get fragmented.

To access data in a chunk that's being written, read the data from a buffer.

Not too hard, but I don't think you will get it done in a one liner...

Note that I'm trying to read a single channel from file, but the entire channel from the very start of a test to the latest value.

I've thought about writing the TDMS file in chunks (set up the file for repeating blocks of groups and channels with sequential data in each block). Your approach of buffering an incomplete block then writing it once complete would work. I've tried a variation where I write a blank block then incrementally overwrite the block to keep the latest data always in the file. That approach was messy and leaves garbage in the file. Hence your proposal would be a good trade off between regularly recording data to disk (avoiding loss on power failure/crash), minimising memory usage and allowing the TDMS file to be well ordered.

The problem I found with these approaches (including just writing a plain interleaved file one sample per channel at a time) is that they require advanced TDMS writes (sync or async). Once that's done, you can't read the TDMS file unless you also use advanced read. Regardless of the underlying file format, advanced read disregards the majority of the file format and returns the raw data from the starting position, but cast/read as the type you specify. I.e. you get interleaved garbage back with mixed types forced into a single type, and would have to spend a lot of effort decoding the file format.

That's the bit I'm annoyed about: advanced TDMS reads only work well if your data is simple (1 or 2 sequential channels) and uses one type. However, most practical applications use multiple types (e.g. timestamps, double, integer) and are interleaved - even most of the included LabVIEW examples show advanced TDMS set up for interleaved writes. The standard TDMS read fixes all of this, but can't be used when you are using advanced writes to fix the underlying file format.
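To illustrate why a raw read of interleaved mixed-type data comes back as garbage unless the reader knows the layout, here is a minimal Python sketch. The record layout (`time_ns`, `pressure`, `count`) is hypothetical and is not the TDMS on-disk format; it only shows the general principle of decoding with a stored copy of the channel structure versus forcing every byte into one type.

```python
import struct

# Hypothetical layout (an assumption, NOT the TDMS format): each sample is
# one int64 timestamp, one float64 and one int32, packed back to back.
CHANNELS = [("time_ns", "q"), ("pressure", "d"), ("count", "i")]
RECORD = struct.Struct("<" + "".join(code for _, code in CHANNELS))


def interleave(samples):
    """Pack (time_ns, pressure, count) tuples into one interleaved byte string."""
    return b"".join(RECORD.pack(*sample) for sample in samples)


def deinterleave(raw):
    """Use the known layout to rebuild one correctly typed list per channel."""
    columns = {name: [] for name, _ in CHANNELS}
    for values in RECORD.iter_unpack(raw):
        for (name, _), value in zip(CHANNELS, values):
            columns[name].append(value)
    return columns


def read_as_single_type(raw):
    """Ignore the layout and read the same bytes as a flat run of float64."""
    usable = len(raw) - len(raw) % 8  # drop trailing bytes that do not fit
    return [v[0] for v in struct.iter_unpack("<d", raw[:usable])]


samples = [(1_000, 1.5, 7), (2_000, 2.5, 8), (3_000, 3.5, 9)]
raw = interleave(samples)
columns = deinterleave(raw)         # correct values, one list per channel
garbage = read_as_single_type(raw)  # mostly meaningless numbers
```

With the layout known, `columns` recovers each channel exactly. Without it, `garbage` has the wrong number of values and most of them are reinterpreted bytes from neighbouring channels.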

So I'm left with standard TDMS writes generating fragmented files (which I'll defragment after a test is complete), or I get nicely formatted files that I can't read concurrently from. I can't fix the TDMS files myself; that's something only NI can fix in their underlying API (standard writes).

I could use other formats, but they will all have to solve the same basic problem (files need to be well ordered on disk when writing interleaved over a long time, and also able to read). I could push the read into the same thread (actor core) that does the writes, and that may work for other file types. It won't help TDMS though, because of the clash between standard and advanced TDMS.

Hence your suggestion of caching the entire data set in memory is probably the best alternative. It may not scale well when the data set grows to be large, but in that case the copy in memory could be decimated to limit memory usage.

06-21-2018 09:50 AM

@Gabriel_H wrote:

It may not scale well when the data set grows to be large, but in that case the copy in memory could be decimated to limit memory usage.

In my experience, keeping the data in memory is a lot easier than displaying it. Displaying anything over 70 MB in a graph will start to render it useless. So I usually keep all the data and apply decimation just before displaying it. That way I can adapt the decimation so that the user does not notice it. There are obviously memory limits as well, but the graph limits are much, much tighter.
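One common way to decimate just before display is to keep the minimum and maximum of each bucket, so that spikes stay visible. The Python sketch below is only an illustration of that idea; the post does not say which decimation method is actually used, and the function name and `max_points` parameter are assumptions.

```python
def decimate_min_max(values, max_points):
    """Reduce values to at most max_points, keeping each bucket's min and max."""
    if max_points < 2:
        raise ValueError("max_points must be at least 2")
    if len(values) <= max_points:
        return list(values)  # nothing to do, the data already fits
    buckets = max_points // 2
    size = -(-len(values) // buckets)  # ceiling division: samples per bucket
    out = []
    for start in range(0, len(values), size):
        chunk = values[start:start + size]
        lo_i = min(range(len(chunk)), key=chunk.__getitem__)
        hi_i = max(range(len(chunk)), key=chunk.__getitem__)
        # Emit the two extremes in their original order (once if identical).
        for i in sorted({lo_i, hi_i}):
            out.append(chunk[i])
    return out


# Full-resolution data stays in memory; only the plotted copy is reduced.
full = [float(i) for i in range(1000)]
full[500] = 10_000.0  # a single spike that plain subsampling could miss
plotted = decimate_min_max(full, max_points=100)
```

Because the full array is kept, the bucket size can be recomputed whenever the user zooms, which is what makes the decimation hard to notice.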

06-21-2018 11:13 AM

I'm glad I'm using SQLite. For data rates like you're describing, it's a lot easier. No caching data in memory, no need for decimation since you can use a "GROUP BY" clause in your SELECT statements. Write an app for looking at your data files offline, and then embed a copy in your data collection program as an online display.
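As a rough sketch of what decimation through "GROUP BY" could look like, the Python example below uses the standard `sqlite3` module. The `samples` table, its columns and the bucket width are hypothetical; the post does not describe the actual schema.

```python
import sqlite3

# Hypothetical schema (an assumption): one row per channel sample.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (channel TEXT, t REAL, value REAL)")

rows = [("ch0", float(i), float(i % 10)) for i in range(1000)]
conn.executemany("INSERT INTO samples VALUES (?, ?, ?)", rows)
conn.commit()

BUCKET = 100.0  # width of each time bucket, in the same units as t

# Let the database reduce 1000 rows to one summary row per time bucket.
query = """
    SELECT CAST(t / ? AS INTEGER) AS bucket,
           MIN(t), MIN(value), MAX(value), AVG(value)
    FROM samples
    WHERE channel = ?
    GROUP BY bucket
    ORDER BY bucket
"""
decimated = conn.execute(query, (BUCKET, "ch0")).fetchall()
conn.close()
```

Changing `BUCKET` changes the display resolution without touching the stored data, so the same query can serve both an offline viewer and an online display.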

06-23-2018 06:44 PM

I don't know much about SQLite. How does it handle writing interleaved data incrementally over a long time?

I imagine this problem of file fragmentation and inefficient storage is common to all file formats that don't explicitly take steps to deal with the structure of the data stream being written. Generally speaking, anything beyond one channel of incoming data requires some method of serialising that data so it can sit in a single file on disk. That's the bit standard TDMS fails at (pretty spectacularly, with 6x file size) when it spends much more space describing where the data is than the data itself occupies.

Do you know how SQLite handles this use case: can you set up the underlying file structure to keep the data organised when it comes in interleaved one point at a time? I'm curious to see how it scales with lots of small writes with single values.

06-23-2018 07:46 PM

Thanks wiebe@CARYA,

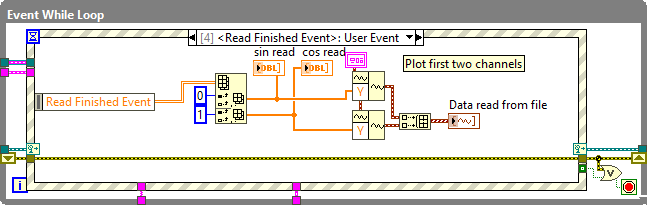

Based on your advice, and this post from 2014 on the LAVA forum about advanced reading from TDMS, I've put together another example that uses the advanced TDMS synchronous read and write to perform concurrent access using a buffered block of data. The VI is attached.

What's great about this solution is that it deinterleaves the data (one value at a time) into the buffered block, then writes the block to file once full. A larger block length reduces the number of read operations required for a given length of data, with the tradeoff that data is not written to file immediately. The block size can be tuned as appropriate for the application.

I've also included a button that selects either standard or advanced TDMS functions.

A comparison of standard vs advanced TDMS:

(20 groups, each with 1 time channel and 50 DBL channels, with 2000 values of data per channel written, or approx. 2 million total values for the file):

- Standard TDMS: Ran for 45.8 seconds to write all data, produced a 92,680kB file, with a read time of 22ms (for 1780 points, 3 channels).

- Advanced TDMS (block length of 10): 1.99 seconds to write all data, produced a 16,306kB file, with a read time of 7ms (~1500 points in, 3 channels).
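The ratios implied by these figures can be checked with a few lines of Python. The payload estimate assumes every value occupies 8 bytes, which is an assumption: the post does not say how the time channels are stored on disk, or whether "kB" means 1000 or 1024 bytes.

```python
# Figures quoted in the comparison above.
groups, dbl_per_group, values_per_channel = 20, 50, 2000
std_kb, adv_kb = 92_680, 16_306
std_write_s, adv_write_s = 45.8, 1.99

channels = groups * (1 + dbl_per_group)       # 1 time channel + 50 DBL each
total_values = channels * values_per_channel  # "approx. 2 million"

size_ratio = std_kb / adv_kb              # standard file vs advanced file
write_ratio = std_write_s / adv_write_s   # standard write time vs advanced

# Assumption: 8 bytes per value for every channel, time channels included.
payload_bytes = total_values * 8

print(f"{total_values:,} values, about {payload_bytes:,} bytes of raw payload")
print(f"standard file is {size_ratio:.2f}x larger")
print(f"standard write is {write_ratio:.1f}x slower")
```

Under that assumption the raw payload is roughly 16.3 million bytes, which is in the same range as the reported size of the advanced file, while the standard file is close to the "6x" figure mentioned earlier in the thread. Treat the comparison as approximate.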

There's quite a large performance difference, purely based on structuring the file properly. Interestingly, the read time in both cases was quite small. In my specific case I write the file over a long period of time (many hours) but read in about 5 selected channels of data as the user requests them via the GUI. So fast read time is more important than write time.

Summary of Advanced TDMS solution:

There are four parts:

- TDMS file creation and setup.

- Measurement loop (producer loop).

- TDMS read/write loop (consumer loop), that holds a 1 block buffer that is written to the file once the block is full.

- GUI event loop (produces user commands for the TDMS read/write loop).

TDMS file creation and setup:

As groups and channels are added to the TDMS file, they are also added to an array of clusters containing the same group name and channel name structure.

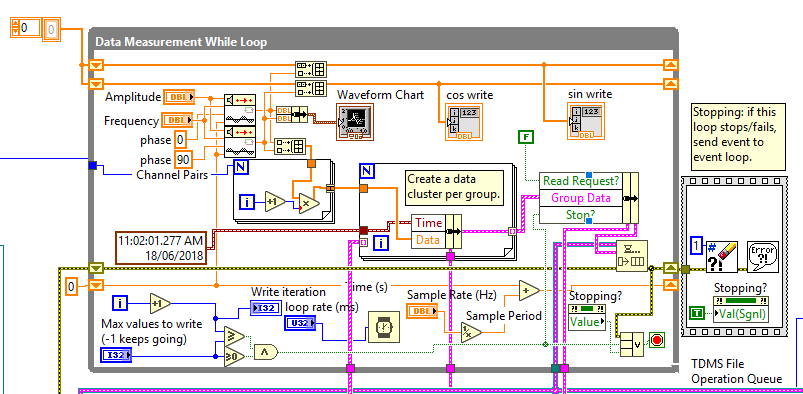

Measurement loop (producer loop):

This loop produces measurement data, which is bundled into a cluster and sent via a queue. In a real application, this would be located elsewhere and the queue would be replaced with something like actor messages (to send the data to the TDMS data logging actor).

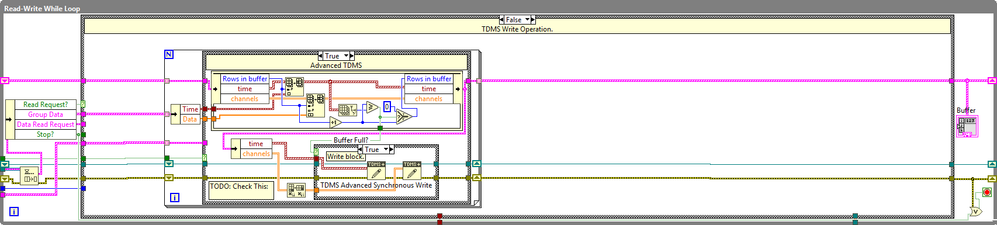

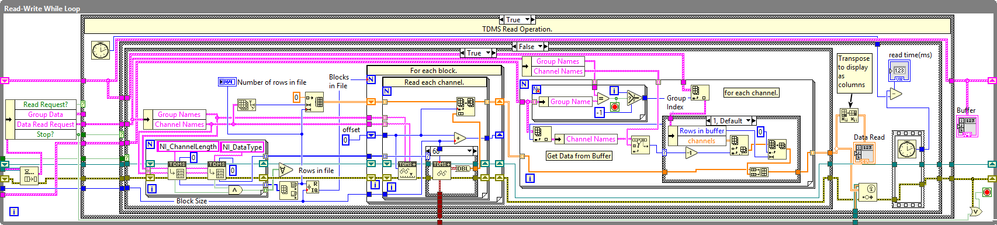

TDMS read/write loop (consumer loop):

First the buffer is dynamically created from the cluster array of TDMS group and channel names. In this example the format is fixed to have an arbitrary number of groups, with each group having one time channel followed by an arbitrary number of DBL channels.

Inside the TDMS read/write loop, writes are performed as follows:

The writing is straightforward: overwrite into the buffer (keeping track of the buffer contents), then write the buffer to file once full. The transpose 2D array appears to work here, even though writing 2D data to TDMS doesn't appear to be well documented.

The read operation is more complicated:

The number of blocks in the file needs to be calculated first, then for each block and each channel, a block length of data must be read out. Hence, the smaller the block length, the more reads required. After the read is completed, the contents of the buffer are appended to the array, then it is returned to the GUI (in this case, via a dynamically registered user event).

In this case, the only limitation is reading the timestamp channels: these are cast to DBL, although more advanced implementations are possible (using the TDMS data type property to handle the type as required).

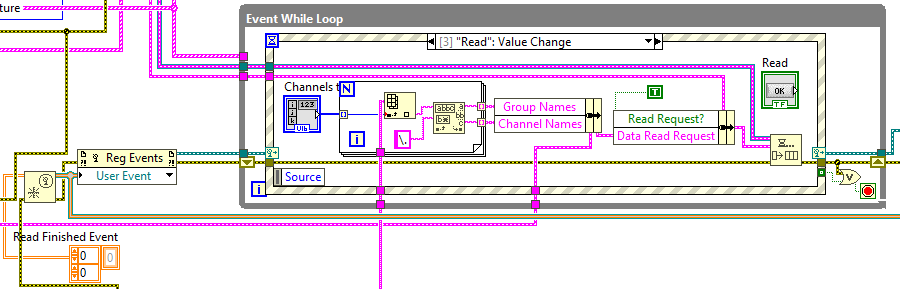
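The block-buffered write and read described above can be sketched in Python as follows. This is not the attached VI and does not use the TDMS API; it writes a plain binary file of float64 values, and the `BlockLogger` class and its method names are hypothetical. It only mirrors the idea: deinterleave into a one-block buffer, write full blocks, and on read combine the completed blocks on disk with the partially filled buffer.

```python
import os
import struct
import tempfile

DBL = 8  # bytes per float64; every channel is treated as DBL in this sketch


class BlockLogger:
    """Writes fixed-size blocks; each block stores every channel contiguously."""

    def __init__(self, path, n_channels, block_len):
        self.path = path
        self.n_channels = n_channels
        self.block_len = block_len
        self.block_bytes = n_channels * block_len * DBL
        self.buffer = [[] for _ in range(n_channels)]  # the one-block buffer
        open(path, "wb").close()  # start with an empty file

    def append_sample(self, values):
        """Deinterleave one value per channel into the buffer; flush when full."""
        if len(values) != self.n_channels:
            raise ValueError("expected one value per channel")
        for column, value in zip(self.buffer, values):
            column.append(value)
        if len(self.buffer[0]) == self.block_len:
            self._flush_block()

    def _flush_block(self):
        """Append the full block to the file, one channel after another."""
        with open(self.path, "ab") as f:
            for column in self.buffer:
                f.write(struct.pack(f"<{self.block_len}d", *column))
        self.buffer = [[] for _ in range(self.n_channels)]

    def read_channel(self, channel):
        """Return the whole channel: completed blocks plus the buffered tail."""
        n_blocks = os.path.getsize(self.path) // self.block_bytes
        chunk = self.block_len * DBL
        out = []
        with open(self.path, "rb") as f:
            for block in range(n_blocks):  # one seek and read per block
                f.seek(block * self.block_bytes + channel * chunk)
                out.extend(struct.unpack(f"<{self.block_len}d", f.read(chunk)))
        out.extend(self.buffer[channel])  # data not yet written to disk
        return out


path = os.path.join(tempfile.mkdtemp(), "blocks.bin")
logger = BlockLogger(path, n_channels=3, block_len=10)
for i in range(25):
    logger.append_sample([float(i), i * 10.0, i * 100.0])
channel_1 = logger.read_channel(1)  # 20 values from disk + 5 from the buffer
```

As in the post, reads and writes go through the same object, so a read never sees a half-written block. The sketch also shows the tradeoff directly: a smaller `block_len` means more seeks per read, while a larger one leaves more unsaved data in memory.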

GUI event loop

The GUI event loop is simple. The read request is generated by an array of menu rings (dynamically populated), that allows the selection of an arbitrary number of channels to be read from the file. The channels are displayed in a 2D array, but when sent back to the event GUI only two channels are plotted. This is only a limitation of this GUI event loop, not the TDMS advanced read operation.

Hopefully this helps anyone else in the future who needs to read and write TDMS files with an interleaved structure.

06-23-2018 09:56 PM

Forgot to attach the VI.

06-24-2018 05:28 AM

@Gabriel_H wrote:

I don't know much about SQLite. How does it handle writing interleaved data incrementally over a long time?

I imagine this problem of file fragmentation and inefficient storage is common to all file formats that don't explicitly take steps to deal with the structure of the data stream being written.

SQLite isn't a streamed, append-only format. It's a database-in-a-file, and will go back and overwrite past bits of the file**. That means it cannot achieve the highest write rates that a streamed format like TDMS can, but also that one doesn't have to worry about all this stuff that you are worrying about. It's a lot simpler to use. And your application, with its roughly 1000 doubles per second, is well within the capabilities of SQLite.

**I use SQLite in its "Write-Ahead-Log" mode, which does an append-only WAL file that is periodically folded into the main file. This is a bit like write and then defragment, but done incrementally, but the important bit is that I don't have to worry about it!
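For readers unfamiliar with it, Write-Ahead-Log mode is enabled per database with a pragma. The Python sketch below, using the standard `sqlite3` module, shows one connection logging while a second connection reads the same file; the table layout and batch size are hypothetical and not taken from the post.

```python
import os
import sqlite3
import tempfile

# WAL mode needs a real file; an in-memory database cannot use it.
path = os.path.join(tempfile.mkdtemp(), "log.db")

writer = sqlite3.connect(path)
# The pragma returns the journal mode actually in effect ("wal" on success).
mode = writer.execute("PRAGMA journal_mode=WAL").fetchone()[0]
# Hypothetical schema (an assumption): one row per sample.
writer.execute("CREATE TABLE samples (channel INTEGER, t REAL, value REAL)")
writer.commit()

# A second connection plays the role of the viewer component.
reader = sqlite3.connect(path)

for i in range(100):
    writer.execute(
        "INSERT INTO samples VALUES (?, ?, ?)", (i % 5, float(i), i * 0.5)
    )
    if i % 10 == 9:
        writer.commit()  # commit in small batches; readers see committed rows

count_ch0 = reader.execute(
    "SELECT COUNT(*) FROM samples WHERE channel = 0"
).fetchall()[0][0]

reader.close()
writer.close()
```

In WAL mode readers do not block the writer, and SQLite checkpoints the log back into the main file automatically, which is the "don't have to worry about it" part.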

06-24-2018 06:40 PM

@drjdpowell wrote:

@Gabriel_H

**I use SQLite in its "Write-Ahead-Log" mode, which does an append-only WAL file that is periodically folded into the main file. This is a bit like write and then defragment, but done incrementally, but the important bit is that I don't have to worry about it!

Thanks for the tip, that sounds like one of the features that would be nice for TDMS (real time partial defragmenting of a file). Last week I thought about how to do such a thing with TDMS, but it's not easy: you'd have to close the file, defrag it, then reopen it and hope you didn't miss any write requests. To do it more robustly, you'd have to have a temp TDMS file to capture data that was being written while the main file was getting defragged... This sounds very similar to what the Write-Ahead-Log mode does in SQLite (based on your link), except it's all automatic.

I'll take a look at SQLite and see how that goes.

06-25-2018 03:33 AM

I'm no TDMS expert, but I believe one can easily combine them (see this link). So one could think of collecting a file, then periodically start a new file, then defragment the previous one and add it to a large main file.