A (should be) simple DAQmx question - take finite samples in software timed loop

Solved! 04-27-2017 12:59 PM

Usually I dedicate a separate loop to the DAQmx task and send the data out via a Queue. In such cases I simply use continuous sampling, which is a very simple solution.

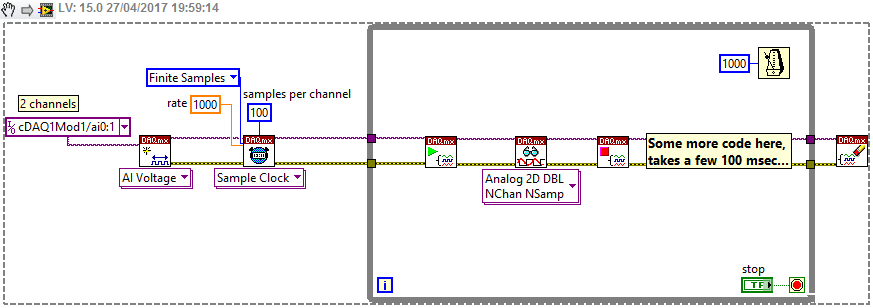

However, I wonder what the "official" procedure is when we want to take finite samples iteratively in a loop that is software timed and also contains other tasks (RS232, etc.). I simply want to sample about 100 values per channel per iteration and keep the loop iterating at a fixed period (for example 1000 msec; 1-10 msec of jitter is OK in this case). I know that the other tasks will not take more than 400-500 msec to execute, so a 1000 msec iteration period is convenient.

Please have a look at the attached (unfinished) code snippet. Is it OK to set it up this way? I always realize my DAQmx knowledge is just way too limited 🙂

Thanks!

04-27-2017 02:03 PM

You don't actually need the Start Task and Stop Task. Otherwise, I don't see anything actually wrong.

04-27-2017 03:17 PM - edited 04-27-2017 03:18 PM

I usually put the task into the Committed state with DAQmx Control Task.vi before the loop. This moves some of the task initialization steps outside the loop.
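
To illustrate why committing before the loop can help, here is a toy Python model of the DAQmx task state sequence (Unverified, Verified, Reserved, Committed, Running). It is only a sketch, not the NI driver: the class `SimulatedTask`, the helper `transitions_in_loop`, and the assumption that every state change costs one "transition" and that Stop returns to the state the task was in before Start are all simplifications made up for this example.

```python
from enum import IntEnum


class TaskState(IntEnum):
    UNVERIFIED = 0
    VERIFIED = 1
    RESERVED = 2
    COMMITTED = 3
    RUNNING = 4


class SimulatedTask:
    """Toy model of the task state sequence (hypothetical, not the real driver)."""

    def __init__(self):
        self.state = TaskState.UNVERIFIED
        self.transitions = 0      # counts every single-step state change
        self._return_to = None    # state to fall back to when stopped

    def _move_to(self, target):
        # Walk one state at a time so each intermediate step is counted.
        step = 1 if target > self.state else -1
        while self.state != target:
            self.state = TaskState(self.state + step)
            self.transitions += 1

    def commit(self):
        # Explicit commit: verify, reserve and commit once, up front.
        self._move_to(TaskState.COMMITTED)

    def start(self):
        # Remember where we came from; stop() returns there (assumption).
        self._return_to = self.state
        self._move_to(TaskState.RUNNING)

    def stop(self):
        self._move_to(self._return_to)


def transitions_in_loop(iterations, commit_first):
    """Count state changes that happen inside the loop itself."""
    task = SimulatedTask()
    if commit_first:
        task.commit()
    before = task.transitions
    for _ in range(iterations):
        task.start()
        task.stop()
    return task.transitions - before
```

In this model, each start/stop pair costs 8 state changes when the task begins Unverified and only 2 when it was committed beforehand. The real savings depend on the hardware and driver.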

04-27-2017 05:20 PM

I'd definitely get rid of the wait inside the loop. The DAQ Task waits until samples to read are acquired and throttles the loop perfectly. Adding a second clock source is just asking for trouble. The words of Ben Franklin come to mind. A man with one watch knows what time it is, a man with two is never quite sure.

You know you should just do it my way.

"Should be" isn't "Is" -Jay

04-27-2017 05:24 PM

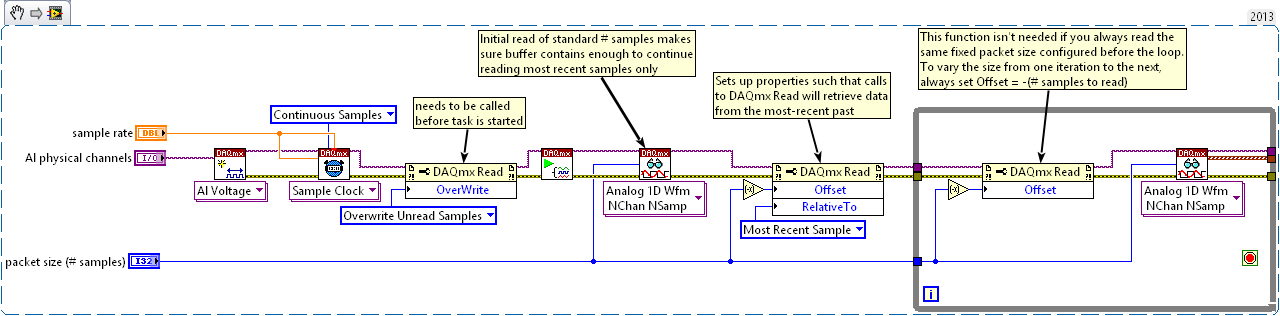

I tend to go with a different approach which is a little more flexible in a particular way. It can be really useful sometimes, but can also be unimportant or even detrimental. It just depends.

When I need snapshots of discontinuous data, I configure for continuous sampling and for allowing overwrite. I also configure to read relative to the sample being taken *right now* instead of relative to wherever my last read left off. When set up this way, I can immediately retrieve a fixed # of samples representing the most recent stuff that was happening at the sensor. And during the times I'm not peeking at the data, the task will happily overwrite the buffer in the background without throwing errors.

I've never had a problem with this approach being a noticeable detriment, but can imagine how continuous streaming could waste resources in apps where data peeks are relatively rare.

Below is a code snippet to illustrate how to do what I'm talking about:

-Kevin P
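
The snippet in the post is a LabVIEW block diagram. As a rough text illustration of the buffer behavior described above, here is a minimal pure-Python sketch of a circular buffer that allows overwriting and is always read relative to the most recent sample. The class `OverwritingBuffer` and its methods are hypothetical names invented for this sketch; it simulates the idea only and does not call the DAQmx driver.

```python
class OverwritingBuffer:
    """Circular buffer that silently overwrites old samples (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = [0.0] * capacity
        self.total_written = 0  # total samples ever acquired

    def write(self, samples):
        # Background acquisition: new samples replace the oldest ones
        # without raising an error, even if nobody has read them.
        for sample in samples:
            self._data[self.total_written % self.capacity] = sample
            self.total_written += 1

    def read_most_recent(self, count):
        # Read `count` samples ending at the newest one, regardless of
        # where any previous read left off.
        if count > self.capacity:
            raise ValueError("count exceeds buffer capacity")
        if count > self.total_written:
            # Simplification for this sketch: fail instead of waiting.
            raise ValueError("not enough samples acquired yet")
        start = self.total_written - count
        return [self._data[i % self.capacity]
                for i in range(start, self.total_written)]


# Usage: 25 samples pass through a 10-sample buffer between two peeks.
buf = OverwritingBuffer(capacity=10)
buf.write(range(25))
latest = buf.read_most_recent(4)
```

Two consecutive calls to `read_most_recent` with no `write` in between return identical data, because the read position is tied to the newest sample rather than to the previous read.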

04-27-2017 11:59 PM

@JÞB wrote:

I'd definitely get rid of the wait inside the loop. The DAQ Task waits until samples to read are acquired and throttles the loop perfectly. Adding a second clock source is just asking for trouble. The words of Ben Franklin come to mind. A man with one watch knows what time it is, a man with two is never quite sure.

You know you should just do it my way.

I agree and also disagree with you. I understand your problem with that extra timing ("Wait Until Next ms Multiple"), but it is required. Why? Because the execution time of the non-DAQmx part of the code might vary by up to ~100 msec, or even more (serial and other tasks). Most of the time its execution time is probably very consistent, but I cannot be sure. So you are right that the DAQmx part always executes at the same speed, but I cannot fix the execution time of the non-DAQmx part exactly, so the loop time might occasionally vary too much.

Therefore, I need to "round up" the total loop execution time to 1000 msec using that "Wait Until Next ms Multiple"...
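
The "round up" idea can be sketched in plain Python as follows. This is only an approximation of what a wait-until-next-multiple primitive does, not LabVIEW's implementation: the function names are hypothetical, and treating an exact multiple as "wait one full period" is a choice made for this sketch.

```python
import time


def ms_until_next_multiple(now_ms, period_ms):
    """Milliseconds left until the clock reaches the next multiple of period_ms."""
    # If now_ms is already an exact multiple, this sketch waits a full period.
    return period_ms - (now_ms % period_ms)


def run_paced_loop(work, period_ms, iterations,
                   clock=time.monotonic, sleep=time.sleep):
    """Run work() repeatedly, padding each iteration up to the period boundary.

    Returns the start time of every iteration in milliseconds.
    """
    starts_ms = []
    for _ in range(iterations):
        starts_ms.append(clock() * 1000.0)
        work()  # variable-duration part (serial I/O, DAQ read, ...)
        now_ms = clock() * 1000.0
        sleep(ms_until_next_multiple(now_ms, period_ms) / 1000.0)
    return starts_ms
```

For example, if the work finishes 400 ms into the period, the loop sleeps for the remaining 600 ms. If the work ever takes longer than `period_ms`, the wait runs to the following multiple, so that iteration lasts two periods instead of one.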

04-28-2017 12:03 AM

Kevin, I really like your solution:

- It teaches me how the DAQmx driver handles the buffer "behind the curtain"

- I can simply use continuous sampling and take only the most recent portion of data from the sensor(s), which is all I need in this particular application

- The code avoids the two most common errors thrown by DAQmx: when I do not read the buffer fast enough, or when I try to read data which is not there yet

Thanks very much, I learned some new stuff today! 🙂

04-28-2017 03:27 AM - edited 04-28-2017 03:32 AM

Hmm, I see some very strange behaviour: I set the rate to 1000 and the packet size to 100, and set up the code exactly as in your example. Surprisingly, the DAQmx Read function outputs the very same values for 14-16 iterations, and only then do I see some new values. The code should always give me the latest 100 values sampled at 1 kHz, so I have no idea what is wrong 🙂 Why does DAQmx Read return the very same values??

Edit: my hardware is a USB-6009.

04-28-2017 04:12 AM

I believe DAQmx is MAGIC!!!

04-28-2017 06:37 AM

Question: can we consider the above behavior as a DAQmx bug?