
64-bit LabVIEW - still major problems with large data sets

01-21-2011 03:14 PM


Hi Folks -

I have the LabVIEW 2009 64-bit version running on 64-bit Windows 7 with a dual quad-core Intel Xeon processor and 16 gbyte of RAM. With the release of 64-bit LabVIEW, I expected to easily handle x-ray computed tomography data sets in the 2 to 3 gbyte range in RAM, since we now have access to all of the available RAM. But I am having major problems: sluggish operation (and outright stalls) of the program, inability to perform certain operations, etc.

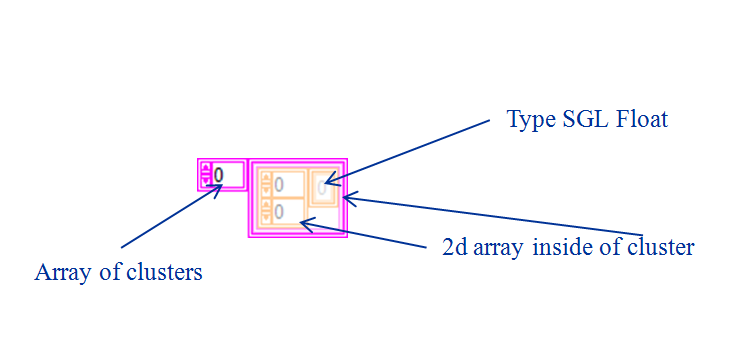

Here is how I store the 3-D data, which consists of a series of images. I store each 2-D image in a cluster, and the entire image series is an array of these clusters. I then store this entire array of clusters in a queue, which I regularly access using Preview Queue before operating on the image set, subsets of the images, or single images.

Then enqueue:

I remember talking to LabVIEW R&D years ago, and this was described as a good approach because it allows non-contiguous access to memory, versus the contiguous block that would be required if I stored the image series as a 3-D array without the clusters. (R&D: this is what I remember; please correct me if I'm wrong.)
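The recollection above is about memory layout. A rough Python analogy (not LabVIEW code) contrasts one contiguous block for the whole stack with one independent block per image. The dimensions and 16-bit pixel size are hypothetical, and the assumption that each cluster's 2-D array gets its own allocation follows the post's recollection rather than documented LabVIEW internals.

```python
# Hypothetical dimensions; 16-bit pixels (2 bytes each) are an assumption.
WIDTH, HEIGHT, N_IMAGES = 64, 64, 10
BYTES_PER_PIXEL = 2
IMAGE_BYTES = WIDTH * HEIGHT * BYTES_PER_PIXEL


def make_volume_3d():
    """3-D array analog: every image back to back in one contiguous block."""
    return bytearray(IMAGE_BYTES * N_IMAGES)


def make_image_series():
    """Array-of-clusters analog: one independent block per image.

    No single allocation is larger than one image, so the memory manager
    never has to find one free region big enough for the whole volume.
    """
    return [bytearray(IMAGE_BYTES) for _ in range(N_IMAGES)]


volume = make_volume_3d()
series = make_image_series()
```

The total byte count is identical either way (81,920 bytes here); only the largest single allocation differs (81,920 versus 8,192 bytes). Splitting the data therefore eases fragmentation, but it cannot help once the total plus any copies exceeds physical RAM.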

Because the program becomes extremely slow after these large data sets are loaded, and, I think, also starts using the disk to obtain memory beyond 16 gbytes, I am wondering whether I need a different storage strategy that allows seamless program operation while still keeping the data in RAM (I do not want to have to recall images from disk).

I have other CT imaging programs that are running very well with these large data sets.

This is a critical issue for me as I move forward with LabVIEW in this application, and I would like to work with LabVIEW R&D to solve it. I am wondering whether I should establish, say, 10 queues instead of 1 to address this. That would mean a major program rewrite.

Sincerely,

Don

01-21-2011 05:49 PM


Is the input data 2-3 GB, or is that the size of the array of clusters? Do you store the 2-D pictures as doubles? What operations are performed, and how are they performed? Are you creating a lot of data copies? The data might very well bloat to 15 GB if you're not careful.
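At this scale, the element type alone changes the footprint severalfold. The arithmetic can be sketched as follows; the 1024 x 1024 x 1000 stack is a hypothetical size (the thread does not give the real dimensions), and per-array overhead is ignored.

```python
def footprint_bytes(width, height, n_images, bytes_per_element):
    """Raw pixel storage for an image stack, ignoring per-array overhead."""
    return width * height * n_images * bytes_per_element


# Element sizes in bytes for common LabVIEW numeric types.
ELEMENT_SIZES = {"U16": 2, "SGL": 4, "DBL": 8}

# Hypothetical stack: 1024 x 1024 pixels, 1000 slices.
for name, size in ELEMENT_SIZES.items():
    total = footprint_bytes(1024, 1024, 1000, size)
    print(f"{name}: {total / 2**30:.2f} GiB")
```

For this hypothetical stack, U16 storage comes to about 2.1 GB, while the same data as DBL needs four times as much, about 8.4 GB, before a single copy is made.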

You should be able to use Profile Memory Usage to see whether memory usage is high when the program starts, after a data load, and after a few operations.

Without the code, it's hard to suggest improvements.

//Y

01-21-2011 06:33 PM


I don't understand the queue argument. Do you have more details about the logic behind it?

What are the actual 3-D image set dimensions (x, y, z)? What kind of processing do you do?

In LabVIEW 2010, you might use data value references. Might help.

01-21-2011 07:30 PM - edited 01-21-2011 07:33 PM


First, I want to add that this strategy works reasonably well for data sets in the 600 - 700 mbyte range with the 64-bit LabVIEW.

With 32-bit LabVIEW, 100 - 200 mbyte sets were about the limit before I experienced problems.

So I definitely noticed an improvement.

I use the queuing strategy to move this large amount of data around in RAM; we could have used other means, such as LV2 globals. But I believe that clustering each 2-D array (image) and storing a series of those clusters in an array (the final structure shown in my diagram), rather than using a 3-D array, is what allowed me to get this far using RAM instead of recalling the images from disk.

I am sure data copies are being made; yes, the memory is ballooning to 15 gbyte. I probably need someone to examine this code while I explain things to them live. This is a very large application, so simplifying it would take a significant amount of time, and the simplified version might not reproduce the problem. In some of my applications, I use the In Place Element structure to index data out of arrays and minimize data copies. I expect I may need to use that strategy here as well. Just a thought.
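Every hidden copy of the full image set costs its full size, so a quick check shows how few copies 16 gbyte of RAM can hold. The 16 and 2.7 gbyte figures come from the posts; `reserved_gb`, an allowance for the OS and other programs, is a hypothetical parameter.

```python
import math


def full_copies_that_fit(ram_gb, dataset_gb, reserved_gb=0.0):
    """Number of complete copies of a data set that fit in physical RAM.

    reserved_gb is a hypothetical allowance for the OS and other programs.
    """
    return math.floor((ram_gb - reserved_gb) / dataset_gb)


print(full_copies_that_fit(16, 2.7))        # no allowance for the OS
print(full_copies_that_fit(16, 2.7, 3.0))   # assuming 3 GB is used elsewhere
```

Five full copies of a 2.7 gbyte set already occupy 13.5 gbyte, so a handful of unintended copies is enough to push a 16 gbyte machine into swapping.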

What I can do is send someone (in the US) a 1.3 - 2.7 gbyte set of image data via large file transfer and see how they would advise storing and extracting the images in RAM, optimizing RAM usage, and avoiding data copies. The operations I apply to the images are irrelevant; it is the storage, movement, and extraction that cause the problems. I can also post screenshots of how I extract the images (but I have major problems even before I get to that point).

Can someone else comment on how data value references may help here, or how they have helped in their own applications? Would using them eliminate copies? I currently have to wait for the 64-bit version of the Advanced Signal Processing Toolkit for LabVIEW 2010 before I can move to LabVIEW 2010.

Don

01-22-2011 09:44 AM


The operations themselves are not important, but _how_ you perform them might very well be. If you don't perform any operations, does the memory still bloat? Does it bloat linearly with the number of operations? What happens if you perform another set of the same operations? Are you modifying the arrays of data as part of the operations, and if so, how?

This thread from just a couple of days ago shows an example of array operations that bloat memory unnecessarily (unnecessary because the copies weren't needed for the program to work).
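Whether memory grows with each repetition can be checked mechanically. The sketch below uses Python's `tracemalloc` as a stand-in for LabVIEW's memory profiler and records traced memory after each call of an operation. Both operations are hypothetical: one keeps a full copy per call, the other modifies its input in place. Steady linear growth points to retained copies.

```python
import tracemalloc

history = []  # hypothetical place where an operation accidentally keeps copies


def leaky_operation(data):
    """Works on a full copy and keeps it, so memory grows with every call."""
    work = bytearray(data)   # explicit full copy
    work[0] ^= 0xFF
    history.append(work)     # the copy is retained


def in_place_operation(data):
    """Modifies the caller's buffer directly; no new large allocation."""
    data[0] ^= 0xFF


def growth_per_call(op, data, calls=5):
    """Traced memory after each call, relative to the starting point."""
    tracemalloc.start()
    base, _ = tracemalloc.get_traced_memory()
    samples = []
    for _ in range(calls):
        op(data)
        current, _ = tracemalloc.get_traced_memory()
        samples.append(current - base)
    tracemalloc.stop()
    return samples


buf = bytearray(1_000_000)
leaky = growth_per_call(leaky_operation, buf)     # rises by ~1 MB per call
flat = growth_per_call(in_place_operation, buf)   # stays near zero
```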

/Y

01-22-2011 11:19 AM


Data Value References were introduced in LV2009, so you should have access to them now. They help to ensure that you have exactly one copy of a data value in memory. It's very similar to a single-element queue, like you have in your current architecture. However, when you do a Preview Queue function, you are making a copy of the data. There is one copy in the queue, and one copy output to be previewed.

One thing you can do that shouldn't change your current architecture much at all is to simply dequeue your large data set, operate on it, then enqueue it back into the single-element queue. This will be much more efficient than using Preview, because it's possible that no copies of the data will ever be made.

This is very similar to what a Data Value Reference does. It has two nodes on the In-Place Element Structure: one to dequeue the data, and one to enqueue it back. Data Value References simply help ensure that you don't forget to re-enqueue the data when you're done.
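The difference between previewing and borrowing the data can be sketched with Python's `queue.Queue` as a single-element queue. Python has no Preview Queue, so `preview_copy` is a hypothetical model of the copy described above; `borrowed` uses `try`/`finally` to mimic the guarantee that the data is always re-enqueued, even when the work inside fails.

```python
import queue
from contextlib import contextmanager


def preview_copy(q):
    """Hypothetical model of Preview Queue: returns a copy; the original stays queued."""
    data = q.get()
    snapshot = bytearray(data)   # the extra copy that previewing costs
    q.put(data)
    return snapshot


@contextmanager
def borrowed(q):
    """Dequeue the data for the length of a block, then always re-enqueue it."""
    data = q.get()      # the buffer leaves the queue; nothing is copied
    try:
        yield data
    finally:
        q.put(data)     # the same object goes back in, error or not


store = queue.Queue(maxsize=1)   # single-element queue
original = bytearray(8)          # stand-in for the image data set
store.put(original)

snapshot = preview_copy(store)

with borrowed(store) as data:
    data[0] = 42                 # modified in place, no copy

try:
    with borrowed(store) as data:
        raise RuntimeError("hypothetical failure mid-operation")
except RuntimeError:
    pass                         # the data was still re-enqueued
```

After both blocks, the queue still holds exactly one buffer, and it is the original object; only the preview produced a second copy.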

National Instruments

01-24-2011 07:29 AM - edited 01-24-2011 07:30 AM


This is interesting because I switched a long time ago from the 'dequeue + re-enqueue' approach to 'preview' strategy. I found that previewing allowed more rapid access to the data (which became very noticeable with large data sets).

Don

01-24-2011 08:12 AM


I would definitely switch to the data value reference. Its semantics are virtually identical to a single-element queue (except that you can't name it), it is much safer (you always re-enqueue, there is always one copy unless you explicitly split it, errors don't cause problems with re-enqueueing, etc.), and it is a bit faster. You should also be using the In Place Element structure extensively. Pulling arrays out of clusters, manipulating them, and putting them back in is fraught with peril. You can do it without the In Place Element structure, but it can be hard to eliminate all the copies.
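The In Place Element structure has no text-language equivalent, but the copy-versus-view distinction behind it can be sketched in Python: slicing a `bytearray` produces a copy, whereas slicing a `memoryview` gives a window onto the same memory. Both functions are hypothetical illustrations of the two patterns, not LabVIEW behavior.

```python
def modify_via_copy(buf, start, stop, value):
    """Unbundle/modify/rebundle analog: slicing a bytearray copies the bytes."""
    part = buf[start:stop]   # new bytearray: a full copy of the region
    part[0] = value          # the change lands in the copy only
    return part              # buf is untouched unless part is written back


def modify_in_place(buf, start, stop, value):
    """In Place Element analog: a memoryview slice shares buf's memory."""
    region = memoryview(buf)[start:stop]  # a window onto buf; no bytes copied
    region[0] = value                     # writes straight into buf
    region.release()                      # drop the view so buf can be resized later


data = bytearray(16)   # stand-in for one image's pixel buffer
copied = modify_via_copy(data, 4, 8, 1)
modify_in_place(data, 4, 8, 2)
```

The copy route needs an extra write-back pass (and a temporary the size of the region) to take effect, which is exactly the kind of hidden duplication that becomes expensive with multi-gigabyte arrays.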

From your description, it sounds like you are simply running out of memory and getting into the swap space. What does Task Manager have to say about memory usage with smaller array sizes that work and larger ones that are too slow?

01-24-2011 09:49 AM


Hi Damien -

Thanks a lot for chiming in. Yes, this is exactly what is happening: I am running out of memory with the larger data sets, and disk swapping was apparent in Windows Task Manager.

I'm going to begin upgrading this program immediately to use the data value reference + In Place Element structure strategy, and I will report back when it is completed. Following your input in years past, I successfully used the In Place Element structure to manage large waveform-based data sets in prior applications with 32-bit LabVIEW. That got me thinking about using it again here, which you are confirming is a good idea.

Perhaps it is time for you to revisit your presentations on memory and large data sets with respect to 64-bit LabVIEW - maybe provide an update at NIWeek 2011 with optimal strategies and case histories for the community at large.

Sincerely,

Don

01-24-2011 02:31 PM - edited 01-24-2011 02:39 PM


Damien - Do you see any way to improve this basic strategy for using RAM optimally for my situation?

Thanks,

Don