From Friday, April 19th (11:00 PM CDT) through Saturday, April 20th (2:00 PM CDT), 2024, ni.com will undergo system upgrades that may result in temporary service interruption.

We appreciate your patience as we improve our online experience.

Country: United Kingdom

Year Submitted: 2018

University: Loughborough University

List of Team Members (with year of graduation): Leah Edwards, 2019

Faculty Advisers: Antony Sutton

Main Contact Email Address: l.m.edwards-14@student.lboro.ac.uk

Title: Automated Noughts and Crosses System

Description:

Taking a noughts and crosses simulator to a whole new level! Pit yourself against a robot arm that sees your moves, writes with a pen and will never lose.

Products:

Software

Hardware/ Other

The Challenge:

How better to showcase your Engineering course than a robot that plays noughts and crosses?

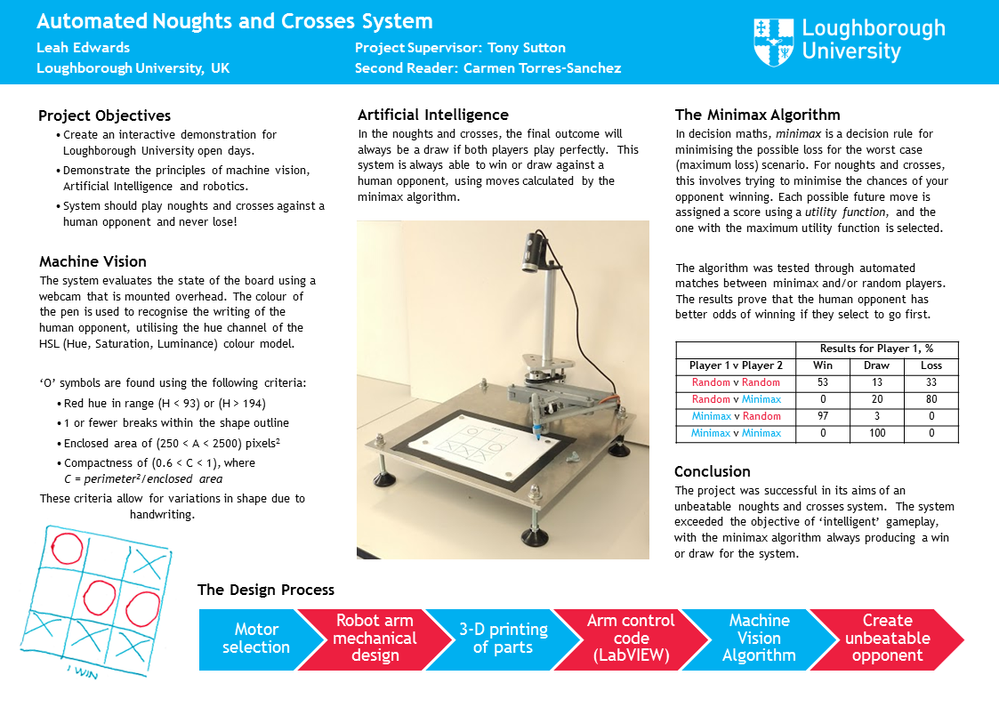

Loughborough University were looking for an engaging new demo for their open days that would bring together the concepts of machine vision, mechanical design and programming. The goal of this third-year individual student project was to create a robotic opponent that will never lose!

The features of the course that Loughborough were seeking to demonstrate were its:

This project would have to bring all of these elements together into one fun demonstration.

The Solution:

I built a fully-automated system that plays the game noughts and crosses, also called tic-tac-toe in some parts of the world.

The system consists of 3 major elements:

LabVIEW ties these elements together as the ‘brain’ of the system; controlling the movement of the arm, interpreting the board as viewed through the webcam and calculating the next move. I’ll explain each element in more detail, but first, watch a short video of the system in action!

Artificial Intelligence

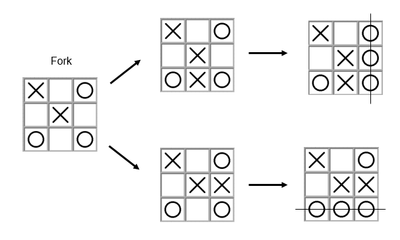

If you’ve played much noughts and crosses, you probably know some strategies to win or at least draw. But explaining these strategies can be difficult! I used the minimax algorithm to ‘look ahead’ after every move and choose the position that minimises the chance of losing. Each possible future move is assigned a score using a utility function, and the move with the highest score is selected.
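The look-ahead described above can be sketched in Python. This is only an illustration, not the LabVIEW implementation used in the project: the board encoding (a 9-character string read row by row), the +1/0/-1 utility values and the function names are all assumptions made for this example.

```python
from functools import lru_cache

# All eight winning lines on a 3x3 board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != ' ' and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board, to_move):
    """Utility of `board` from X's point of view, with `to_move` to play.

    Assumed scoring: +1 if X wins, -1 if O wins, 0 for a draw.
    """
    won = winner(board)
    if won is not None:
        return 1 if won == 'X' else -1
    if ' ' not in board:
        return 0  # board full with no winner: a draw
    other = 'O' if to_move == 'X' else 'X'
    scores = [minimax(board[:i] + to_move + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == ' ']
    # X maximises the utility, O minimises it.
    return max(scores) if to_move == 'X' else min(scores)


def best_move(board, player='X'):
    """Return the index of an empty cell with the best utility for `player`."""
    other = 'O' if player == 'X' else 'X'
    sign = 1 if player == 'X' else -1
    empty = [i for i, cell in enumerate(board) if cell == ' ']
    return max(empty, key=lambda i: sign * minimax(
        board[:i] + player + board[i + 1:], other))


print(best_move("XX OO    "))  # → 2, completing the top row for X
```

Caching results with `lru_cache` is a convenience of this sketch: the same board can be reached through different move orders, so remembering its score avoids repeating the search.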

This is a ‘brute force’ approach that can be very computationally intensive: for the first turn the system considers 19,683 (3^9) future boards. After extensive testing (of course, automated with LabVIEW!), I can confirm that my perfect player will never lose, with at worst the game ending in a tie. Tied games can only occur if the human opponent makes no mistakes. The results of my tests are shown below, with 30 tests carried out for each player combination.

Results for Player 1, %:

| Player 1 v Player 2 | Win | Draw | Loss |
| --- | --- | --- | --- |
| Random v Random | 53 | 13 | 33 |
| Random v Minimax | 0 | 20 | 80 |
| Minimax v Random | 97 | 3 | 0 |
| Minimax v Minimax | 0 | 100 | 0 |

The tests show that my ‘perfect’ opponent beats a random player 97% of the time when it takes the first move, and 80% of the time when going second. This suggests that if you want to minimise your chances of a loss, you should ask to go first! When presented with two or more moves that are equally good, the system will pick randomly between them, meaning no two games are likely to be the same. In terms of strategy, the system often uses a move called a 'fork', where it sets up two possible ways of winning on the next turn. This can be quite difficult for a person to spot, and I have been caught out by it multiple times!
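The random choice between equally good moves mentioned above could look something like this in Python. It is a hypothetical sketch: the `scores` dictionary (cell index mapped to utility) and the function name are assumptions for illustration, not taken from the LabVIEW code.

```python
import random


def pick_move(scores, rng=random):
    """Pick uniformly at random among the moves sharing the highest score.

    `scores` maps a cell index to its utility (a hypothetical format).
    `rng` is anything with a `choice` method, e.g. a seeded random.Random.
    """
    best = max(scores.values())
    candidates = [move for move, score in scores.items() if score == best]
    return rng.choice(candidates)


# Cells 0 and 4 are equally good here, so either may be returned.
move = pick_move({0: 0, 4: 0, 8: -1})
```

Passing a seeded `random.Random` instance as `rng` makes a game repeatable, which can be handy when testing.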

Machine Vision

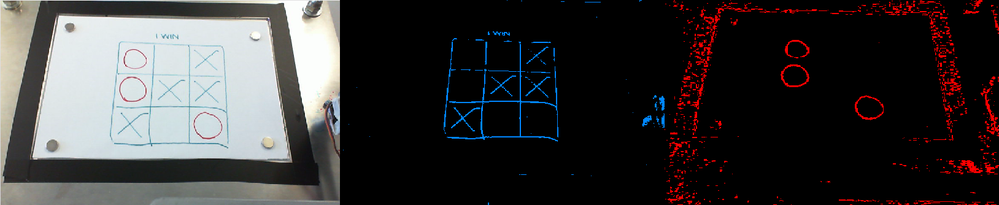

Rather than entering their move on the screen, the human opponent makes their move by drawing in the grid that the robot has created. Therefore the system must be able to see and ‘understand’ the board. Machine vision is the discipline that makes this possible.

Without the benefit of special lighting or a machine vision camera, it was important to create a robust algorithm that could cope with different angles and lighting conditions. Several different approaches were trialled using Vision Builder for Automated Inspection, and later converted to LabVIEW code for use in the final program. This made it really easy to quickly compare different ideas.

The chosen approach relies on the robot and the human using pens of different colours. The hue channel of the HSL (Hue, Saturation, Luminance) colour model allows the hue (tint) of a colour to be decoupled from shadows that show up in the luminance channel. This property is used to isolate one player’s moves (shown below), and the positions of the symbols within the grid are established.
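To illustrate why hue is useful here, the sketch below thresholds pixels by hue using Python's standard `colorsys` module. It is not the Vision Builder or LabVIEW pipeline from the project: the target hue, the tolerance, the saturation cut-off and the function names are all assumed values chosen for the example.

```python
import colorsys


def hue_distance(h1, h2):
    """Shortest distance between two hues on the unit colour circle."""
    d = abs(h1 - h2) % 1.0
    return min(d, 1.0 - d)


def is_pen_pixel(rgb, target_hue, hue_tol=0.05, min_saturation=0.3):
    """True if an 8-bit RGB pixel has roughly the target hue.

    The saturation check rejects white or grey paper, whose hue is
    meaningless. Luminance is deliberately ignored, so a shadowed
    stroke still matches.
    """
    r, g, b = (channel / 255.0 for channel in rgb)
    hue, _luminance, saturation = colorsys.rgb_to_hls(r, g, b)
    return (saturation >= min_saturation
            and hue_distance(hue, target_hue) <= hue_tol)


def pen_mask(image, target_hue, **kwargs):
    """Boolean mask over an image given as rows of RGB tuples."""
    return [[is_pen_pixel(px, target_hue, **kwargs) for px in row]
            for row in image]


BLUE_HUE = 0.64  # assumed hue of a blue pen, on a 0..1 scale

image = [[(255, 255, 255), (30, 60, 200)],   # paper, blue ink
         [(15, 30, 100), (200, 40, 40)]]     # blue ink in shadow, red ink
print(pen_mask(image, BLUE_HUE))  # → [[False, True], [True, False]]
```

The bright and the shadowed blue pixels share the same hue and differ only in luminance, so both are kept, while the paper and the red ink are rejected.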

Because the system always plays as 'X', it was only necessary to identify the 'O' symbol. The following criteria were used:

These criteria allow for variations in the 'O' shape due to handwriting.

Robotic Manipulator Control

Not content with a run-of-the-mill x-y Cartesian plotter, I decided to create a bespoke manipulator which is a cross between a SCARA arm and a pantograph. The result looks rather humanoid. Two stepper motors are used to control the position of the pen on the page, while a tiny servo lifts the pen from the paper.

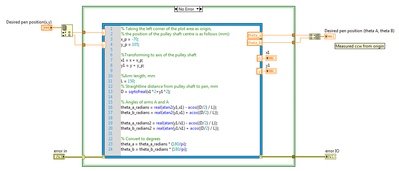

The LINX package from the LabVIEW Tools Network allowed me to control these motors using an Arduino, leading to a very low-cost solution. I also took advantage of MathScript nodes for the inverse kinematics of the arm, allowing me to re-use code from my original MATLAB design script.
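For readers unfamiliar with inverse kinematics, here is a minimal Python sketch for a plain two-link planar arm. This is a simplification and an assumption: the real manipulator is a SCARA/pantograph hybrid whose geometry is not given here, so the link lengths, angle conventions and function names below are illustrative only.

```python
import math


def inverse_kinematics(x, y, l1, l2):
    """Joint angles (radians) that place the tip of a two-link arm at (x, y).

    Returns (shoulder, elbow) for one of the two possible elbow
    configurations. Raises ValueError if the target is out of reach.
    """
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1, l2):
    """Tip position for the given joint angles, used to check the result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


# Round trip with assumed 0.1 m links: the tip should land on the target.
angles = inverse_kinematics(0.12, 0.08, 0.1, 0.1)
tip = forward_kinematics(*angles, 0.1, 0.1)
```

Feeding the computed angles back through the forward kinematics is the software equivalent of checking the calculation against a physical model.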

Below is a game played by the system - it produced the writing in blue.

I’m quite proud of my LabVIEW code for this application: it’s one of the largest applications that I have ever written! Last year I passed my CLD (Certified LabVIEW Developer) exam, and I really enjoyed the chance to put the concepts I had learned into practice through this project. Studying for this exam trained me to write scalable and modular code: I wrote lots of SubVIs that were re-used throughout the application. This can be seen through the project’s VI hierarchy, shown below.

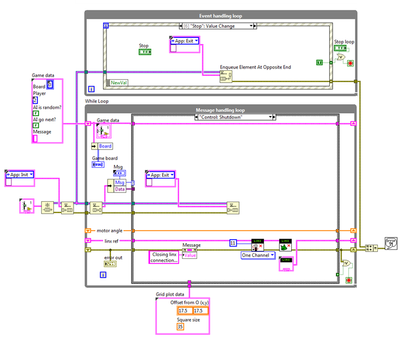

The code was initially developed as 3 separate modules (AI, Vision and Control), which were later integrated into an event-driven producer/consumer architecture. The parallel loops shown below allow the program to process the webcam images at the same time as moving the arm and calculating moves.
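As a rough text-based analogue of the producer/consumer pattern, the Python sketch below passes events from a producer to a consumer loop through a queue. The project itself uses parallel LabVIEW loops; the event names, the single consumer and the function names here are assumptions made for illustration.

```python
import queue
import threading

STOP = object()  # sentinel telling the consumer loop to exit


def producer(events, out_queue):
    """Enqueue each event, then signal that no more will follow."""
    for event in events:
        out_queue.put(event)
    out_queue.put(STOP)


def consumer(in_queue, handle, results):
    """Process events one at a time until the sentinel arrives."""
    while True:
        event = in_queue.get()
        if event is STOP:
            break
        results.append(handle(event))


def run(events, handle):
    """Run one consumer thread alongside a producer in the calling thread."""
    q = queue.Queue()
    results = []
    worker = threading.Thread(target=consumer, args=(q, handle, results))
    worker.start()
    producer(events, q)
    worker.join()
    return results


# Hypothetical event names; the handler just upper-cases them.
print(run(["board_changed", "move_ready"], str.upper))
# → ['BOARD_CHANGED', 'MOVE_READY']
```

The queue decouples the two loops: the producer never waits for an event to be handled, which is what lets image processing, move calculation and arm motion overlap in a fuller version with one consumer per task.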

For me, the real strength of LabVIEW for this application is the visual aspect of the code. As an engineer, I always accompany an explanation of a concept with a diagram: it is how our minds work! Personally, I much prefer a block diagram to a screen full of text.

The entire project spanned 7 months from inception to testing, and was part of my university course. The element that required the most time was probably the mechanical design of the arm – I made a lot of cardboard models to check that my inverse kinematic calculations were correct. One of the assembly drawings that I made is shown below. As well as designing the parts, I also had to make them - hurrah for 3D printing!

Further Work

Now that I have this robot arm, there are lots of other exciting possible uses. For example, I could teach it to play other paper-based games such as hangman. Perhaps it could also draw portraits of people!

I’m very excited for my demo to be running at Loughborough University open days in the future - if you’re thinking of doing an engineering degree, do come and check it out! I’m hoping that it will start some engaging conversations and encourage the next generation of engineering students to study in our excellent department.

If you missed the video at the top of the page, it can be seen here.