
Make numeric display format auto-set to what you input

Submitted by Dominik-E2 on 07-09-2013 01:55 PM

30 Comments (30 New)


Status: New

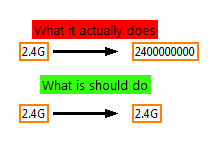

Instead of having to open the "Display Format" window every time you want to drop a control/indicator/constant with a special format value (e.g. 2400000000 shown as 2.4G), make the control/indicator/constant automatically set itself to whatever format you type in. For example:

Typing in 2.4G sets the Display Format to "SI notation".

Typing in 2.4E9 sets the Display Format to "Scientific".

Typing in 2400000000 sets the Display Format to "Automatic formatting", and so on.
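To make the requested behavior concrete, here is a rough sketch in Python (LabVIEW itself is graphical, so this is only an illustration of the detection logic, not how LabVIEW works internally). The function name `infer_display_format`, the regular expressions, and the list of accepted SI prefix letters are all assumptions made for this example; only the three format names come from the post above.

```python
import re

# Hypothetical helper: guess a display format from the text a user typed.
# The prefix letters below are an assumed set of SI prefixes (yocto..yotta,
# with "u" standing in for micro); the real editor may accept a different set.
_NUMBER = r"[+-]?(?:\d+\.?\d*|\.\d+)"
_SCIENTIFIC = re.compile(_NUMBER + r"[eE][+-]?\d+")
_SI = re.compile(_NUMBER + r"[yzafpnumkMGTPEZY]")
_PLAIN = re.compile(_NUMBER)


def infer_display_format(text):
    """Return the display format name implied by the typed text, or None."""
    s = text.strip()
    # Check scientific first so "2.4E9" is not confused with the
    # SI prefix "E" (exa), which only applies when no exponent digits follow.
    if _SCIENTIFIC.fullmatch(s):
        return "Scientific"
    if _SI.fullmatch(s):
        return "SI notation"
    if _PLAIN.fullmatch(s):
        return "Automatic formatting"
    return None  # not recognizable as a number; leave the format unchanged


for typed in ("2.4G", "2.4E9", "2400000000"):
    print(typed, "->", infer_display_format(typed))
```

One design point worth noting: an input like 2.4E is ambiguous at first glance, so the sketch treats "E" followed by digits as an exponent and a bare trailing "E" as the exa prefix.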
