My original problem was that I have an array of data in the FPGA on which I need to do some calculations, and the only appropriate way was to use a pipeline. The pipeline is a very powerful tool in the FPGA, but I think the LabVIEW tools could be changed to make pipelining easier.

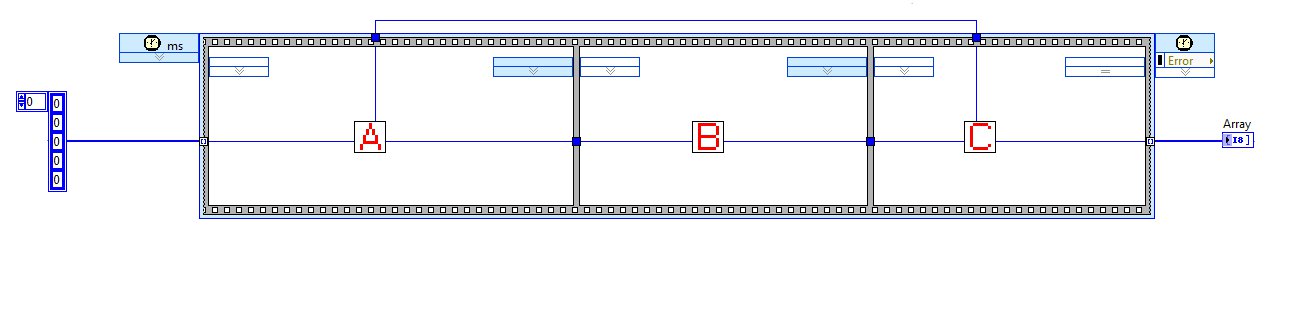

My old implementation of the pipeline:

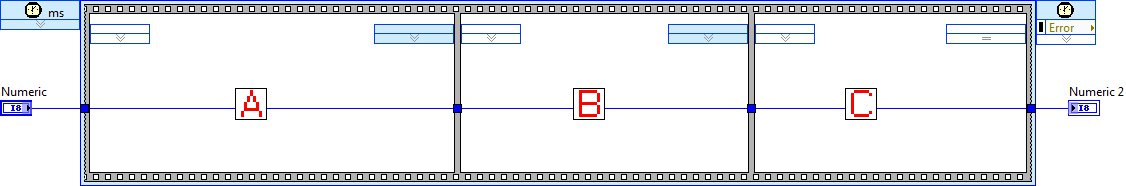

Suggested implementation of a single-cycle pipeline, with shift registers in the tunnels, so that all the code runs on each of the 5 elements in the array.
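To illustrate the behaviour I am after outside of LabVIEW, here is a minimal Python simulation of a single-cycle pipeline. It assumes three hypothetical stage functions standing in for the frames of the structure, and one register (shift register) between each pair of stages. In every cycle all stages run at once on the values latched in the previous cycle, so 5 elements pass through 3 stages in 5 + 3 - 1 = 7 cycles. The optional `n` parameter is a hypothetical iteration count, like the count terminal of a For Loop.

```python
# Hypothetical stage functions standing in for the frames of the pipeline.
def stage1(x):
    return x + 1

def stage2(x):
    return x * 2

def stage3(x):
    return x - 3

STAGES = [stage1, stage2, stage3]


def run_pipeline(data, stages=STAGES, n=None):
    """Simulate a single-cycle pipeline. Returns (results, cycles_used)."""
    n = len(data) if n is None else n  # hypothetical iteration count
    if n > len(data):
        raise ValueError("n cannot exceed the number of data elements")
    # regs[i] holds the output of stage i from the previous cycle (None = empty slot).
    regs = [None] * len(stages)
    results = []
    cycles = 0
    while len(results) < n:
        # Stage 0 reads a new element; every other stage reads the register before it.
        new_in = data[cycles] if cycles < n else None
        inputs = [new_in] + regs[:-1]
        # All stages execute in the same cycle and latch their outputs together.
        regs = [s(v) if v is not None else None for s, v in zip(stages, inputs)]
        cycles += 1
        if regs[-1] is not None:
            results.append(regs[-1])
    return results, cycles


print(run_pipeline([0, 1, 2, 3, 4]))  # ([-1, 1, 3, 5, 7], 7)
```

The results equal applying the three stages one after another to each element; the pipeline only changes how many cycles it takes.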

Maybe a number of iterations (like in the For Loop) should be added, for cases where the number of data elements is not defined by the array size.

In another project I have a continuously running pipeline. I have implemented it in different ways, but a simple one is shown here:

Before

Here the new pipeline sequence could also be used, perhaps like the following:

Here the loop tunnel should indicate that the input data is read continuously.

In both examples I have shown single-cycle Timed Loops, but maybe the pipeline structure should also be able to run with other timing (determined by the slowest frame).
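As a rough sketch of what "determined by the slowest frame" would mean, assuming all frames advance together once per iteration: the iteration period is the largest frame delay, and the usual pipeline arithmetic follows from it. The stage delays below are made-up numbers in arbitrary clock ticks.

```python
def pipeline_timing(stage_ticks, n_elements):
    """Timing of a lockstep pipeline whose period is set by its slowest frame.

    Returns (period, latency, total) in clock ticks.
    """
    period = max(stage_ticks)                # all frames wait for the slowest one
    latency = period * len(stage_ticks)      # one element through every frame
    total = period * (n_elements + len(stage_ticks) - 1)  # fill + drain
    return period, latency, total


# Hypothetical frame delays of 1, 3 and 2 ticks, processing 5 elements.
print(pipeline_timing([1, 3, 2], 5))  # (3, 9, 21)
```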

I have seen the idea about the timed frame. It will help with the last example, but there will still be a need for a pipeline structure.